
Despite impressive performance in visual grounding, prevailing approaches usually exploit the visual backbone passively: the backbone extracts features with fixed weights and receives no expression-related hints. Such passive perception can lead to mismatches (e.g., redundant or missing features), which limits further performance improvement. Ideally, the visual backbone should extract visual features actively, since the expression already provides a blueprint of the desired features. Active perception takes the expression as a prior when extracting visual features, which can effectively alleviate these mismatches.

Inspired by this, Xi Li Lab collaborated with the MindSpore team on this research and proposed an active perception visual grounding framework based on language adaptive weights, called VG-LAW.

arxiv »

cvpr open access paper pdf »


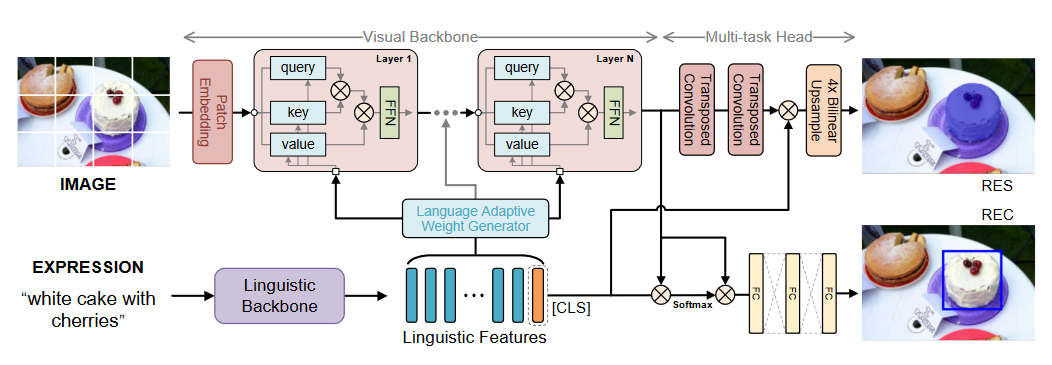

We propose an active perception visual grounding framework based on the language adaptive weights, called VG-LAW, which can actively extract expression-relevant visual features without manually modifying the visual backbone architecture.

Benefiting from the active perception of visual feature extraction, we can directly utilize our proposed neat but efficient multi-task head for REC and RES tasks jointly without carefully designed cross-modal interaction modules.

Extensive experiments demonstrate the effectiveness of our framework, which achieves state-of-the-art performance on four widely used datasets, i.e., RefCOCO, RefCOCO+, RefCOCOg, and ReferItGame.

The overall architecture of our proposed VG-LAW framework.


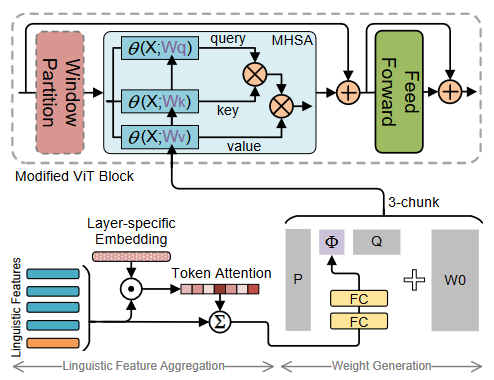

The detailed architecture for language adaptive weight generation.
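As a rough illustration of what "language adaptive weights" can mean, the NumPy sketch below conditions a projection matrix on a pooled expression feature through a low-rank update, `W = W_static + U diag(s) V^T`, where `s` is predicted from the language feature. This is an assumed, simplified formulation for intuition only; it is not taken from this repository's code, and every name in it (`language_adaptive_weight`, `gate_proj`, the dimensions) is hypothetical.

```python
import numpy as np


def language_adaptive_weight(w_static, u, v, gate_proj, lang_feat):
    """Return W = W_static + U diag(s) V^T, with s derived from lang_feat.

    Hypothetical helper for illustration; not the repository's implementation.
    """
    # Map the pooled expression feature to one scale per rank component.
    s = np.tanh(gate_proj @ lang_feat)  # shape: (rank,)
    # (u * s) scales each column of U, which equals U @ diag(s).
    return w_static + (u * s) @ v.T


rng = np.random.default_rng(0)
d_model, d_lang, rank = 8, 6, 2  # toy sizes, chosen arbitrarily

w_static = rng.normal(size=(d_model, d_model))  # expression-independent part
u = rng.normal(size=(d_model, rank))
v = rng.normal(size=(d_model, rank))
gate_proj = rng.normal(size=(rank, d_lang))

visual_tokens = rng.normal(size=(4, d_model))  # 4 toy visual tokens
expr_a = rng.normal(size=d_lang)  # pooled feature of one expression
expr_b = rng.normal(size=d_lang)  # pooled feature of another expression

w_a = language_adaptive_weight(w_static, u, v, gate_proj, expr_a)
w_b = language_adaptive_weight(w_static, u, v, gate_proj, expr_b)

# The same visual tokens are projected differently for each expression.
out_a = visual_tokens @ w_a.T
out_b = visual_tokens @ w_b.T
print(out_a.shape, np.abs(out_a - out_b).max())
```

The point of the sketch is only that the backbone's projection changes with the expression while the architecture stays the same; the low-rank form keeps the number of generated values small (`rank` instead of `d_model * d_model`).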


Download the datasets and run `python tools/preprocess.py`.

More details here

- Download the ViTDet, ViT-B Mask R-CNN model:

cd checkpoint

wget link TODO

Run the following command to start training:

mpirun -n DEVICE_NUM python src/train.py --batch_size=BATCH_SIZE --batch_sum=BATCH_SUM --dataset=DATASET_NAME --splitBy=SPLITBY --experiment_name=vglaw_DATASET --short_comment=vglaw_DATASET --law_type=svd --img_size=448 --vit_model=vitdet_b_mrcnn --pretrained_path=PRETRAINED_PATH --translate --lr_lang=1e-5 --lr_visual=1e-5 --lr_base=1e-4 --lr_scheduler=step --max_epochs=20 --drop_epochs=10 --log_freq=10 --use_mask --mode_name=PYNATIVE --save_ckpt_dir=SAVE_CKPT_DIR

Alternatively, run the provided script after configuring it correctly (DATASET can be refcoco/refcoco+/refcocog/refclef):

sh scripts/train_DATASET.sh

| dataset | REC (Prec@0.5) | RES (mIoU) | download |
|---|---|---|---|
| refcoco (val) | 86.62 | 75.62 | ![law_fpt_vit_refcoco_shareSD_mt.ckpt][link TODO] |
| refcoco+ (val) | 76.37 | 66.63 | ![law_fpt_vit_refcoco+_shareSD_mt.ckpt][link TODO] |
| refcocog (val) | 76.90 | 65.63 | ![law_fpt_vit_refcocog_shareSD_mt.ckpt][link TODO] |
| refclef (test) | 77.22 | - | ![law_fpt_vit_refclef_shareSD_mt.ckpt][link TODO] |
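The two metric columns can be illustrated with a minimal sketch. It assumes the common definitions: Prec@0.5 is the percentage of predicted boxes whose IoU with the ground-truth box exceeds 0.5, and mIoU is the mean per-sample IoU between predicted and ground-truth masks. The helper names and the `(x1, y1, x2, y2)` box format are assumptions for illustration and are not taken from this repository's evaluation code.

```python
from typing import Sequence

Box = Sequence[float]  # assumed corner format: (x1, y1, x2, y2)


def box_iou(pred: Box, gt: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(pred[0], gt[0]), max(pred[1], gt[1])
    ix2, iy2 = min(pred[2], gt[2]), min(pred[3], gt[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_pred = (pred[2] - pred[0]) * (pred[3] - pred[1])
    area_gt = (gt[2] - gt[0]) * (gt[3] - gt[1])
    union = area_pred + area_gt - inter
    return inter / union if union > 0 else 0.0


def precision_at(preds, gts, threshold=0.5):
    """Percentage of samples whose box IoU exceeds the threshold (REC)."""
    # Assumption: strict ">" comparison; some code bases use ">=".
    hits = sum(box_iou(p, g) > threshold for p, g in zip(preds, gts))
    return 100.0 * hits / len(preds)


def mask_iou(pred, gt) -> float:
    """IoU of two binary masks given as equally sized 2D lists of 0/1."""
    inter = union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            inter += bool(p) and bool(g)
            union += bool(p) or bool(g)
    return inter / union if union else 0.0


def mean_iou(pred_masks, gt_masks) -> float:
    """Mean per-sample mask IoU as a percentage (RES)."""
    ious = [mask_iou(p, g) for p, g in zip(pred_masks, gt_masks)]
    return 100.0 * sum(ious) / len(ious)


# Toy data: the first box matches exactly, the second overlaps only slightly.
pred_boxes = [(0, 0, 10, 10), (0, 0, 10, 10)]
gt_boxes = [(0, 0, 10, 10), (5, 5, 15, 15)]
print(precision_at(pred_boxes, gt_boxes))

pred_masks = [[[1, 1], [0, 0]]]
gt_masks = [[[1, 0], [0, 0]]]
print(mean_iou(pred_masks, gt_masks))
```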

Use one of the checkpoints above, or one you trained yourself, in src/eval_visualize.py to produce an image output.

Remember to configure the settings correctly first (dataset, pretrained_path, indexes, etc.).

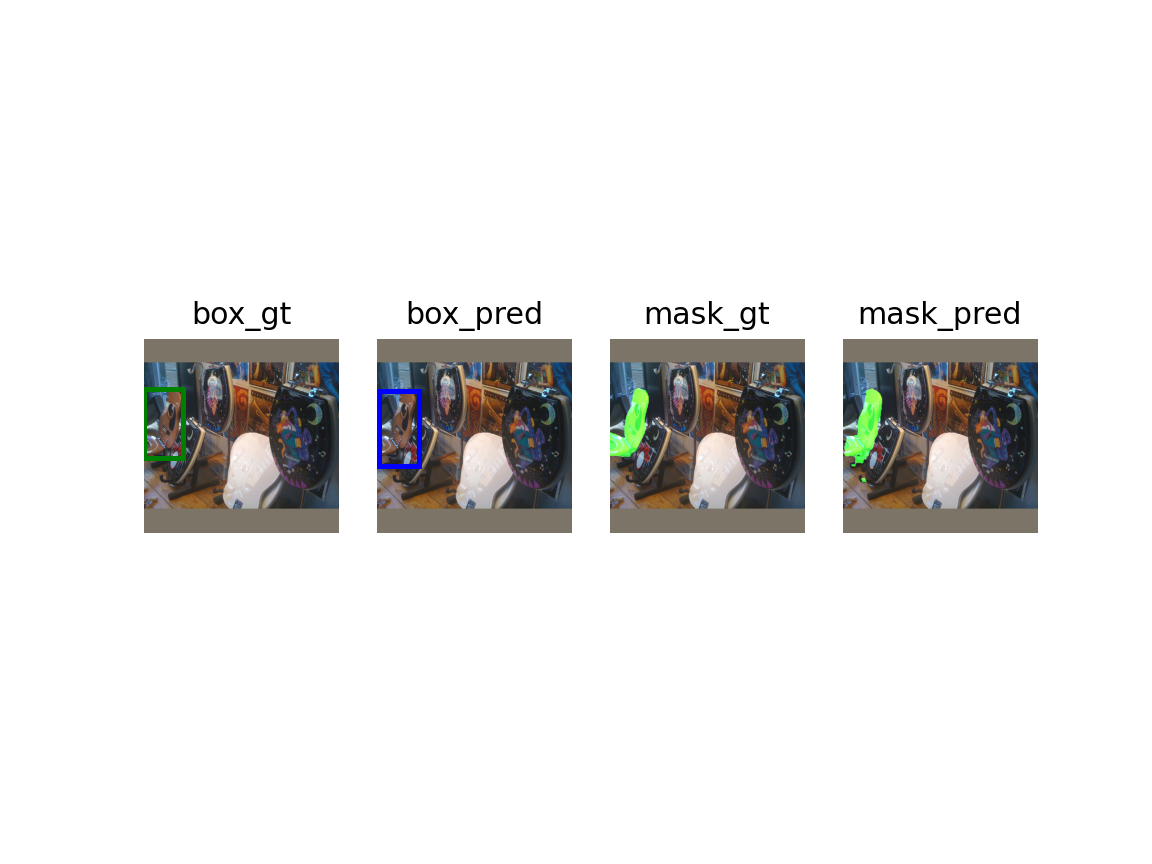

python src/eval_visualize.py

The output is written to the folder named visualize by default.