I/O benchmark for shared filesystems. Only writes are currently supported, since writes are usually slower than reads.

A shared filesystem can usually handle much more I/O than a local one, so it has to be tested from several nodes (clients) at once. Because of concurrent access and plain probability, not all clients run at the same speed: some are faster, others are slower. The situation gets even worse when the shared storage consists of several building blocks (several storage and metadata servers), as in BeeGFS or Lustre.

The common benchmarking approach is to split the desired amount of data into equal, relatively large pieces (one piece per client) and let each client write (or read) its piece independently. For instance, if we specify 10 TB for 10 clients, each client writes (reads) 1 TB of data to (from) the shared filesystem. As a result, the fastest processes write their 1 TB and go idle, and the benchmark finishes only when the slowest processes have put their data onto the storage. In many cases the I/O from one or a few client processes cannot saturate the server bandwidth, so the benchmark measures not the performance of the shared storage but the performance of the slowest processes: "a herd of buffalo can only move as fast as the slowest buffalo".

The other approach is to operate like a team: "do the work of your lazy colleagues". Here the whole amount of data is split into small, equal pieces (chunks), and a single dedicated process keeps track of how many of those pieces have already been written to the filesystem, so clients that finish early simply take on more chunks. As a result, the I/O rate stays steady throughout the benchmark.

A more detailed explanation can be found here.

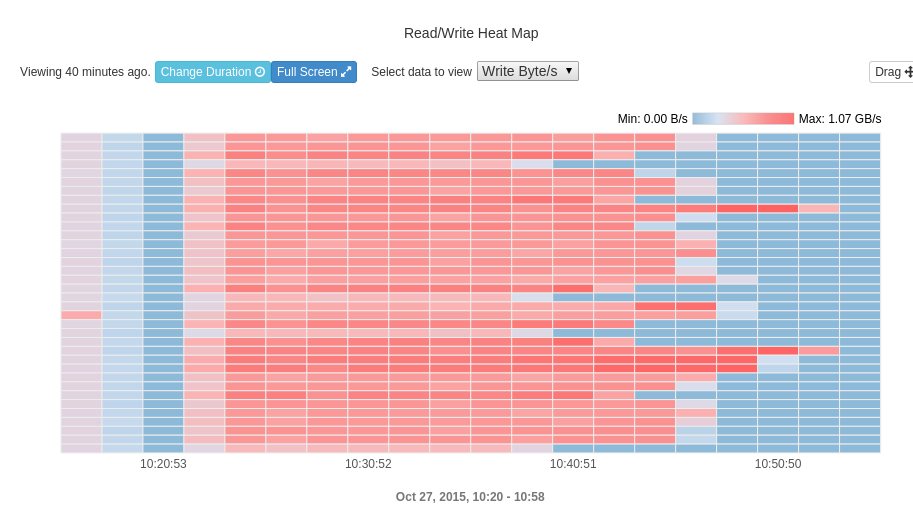

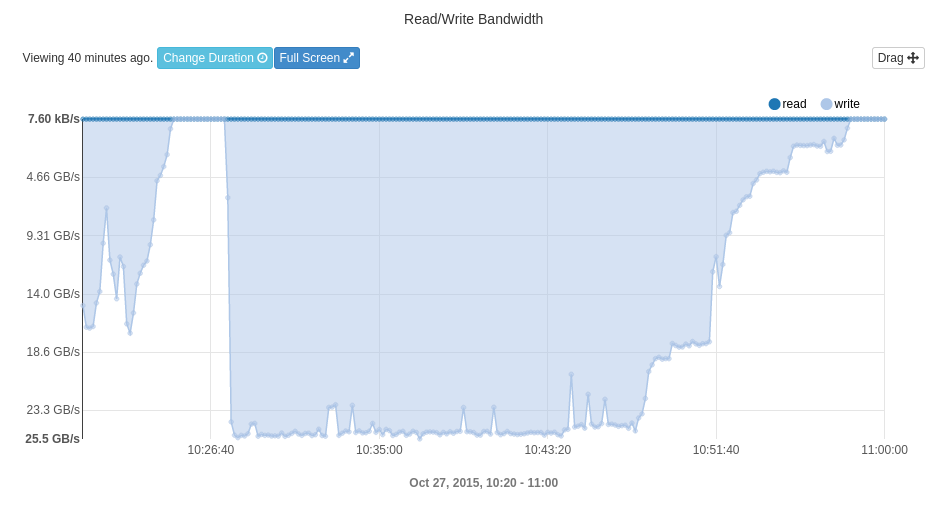

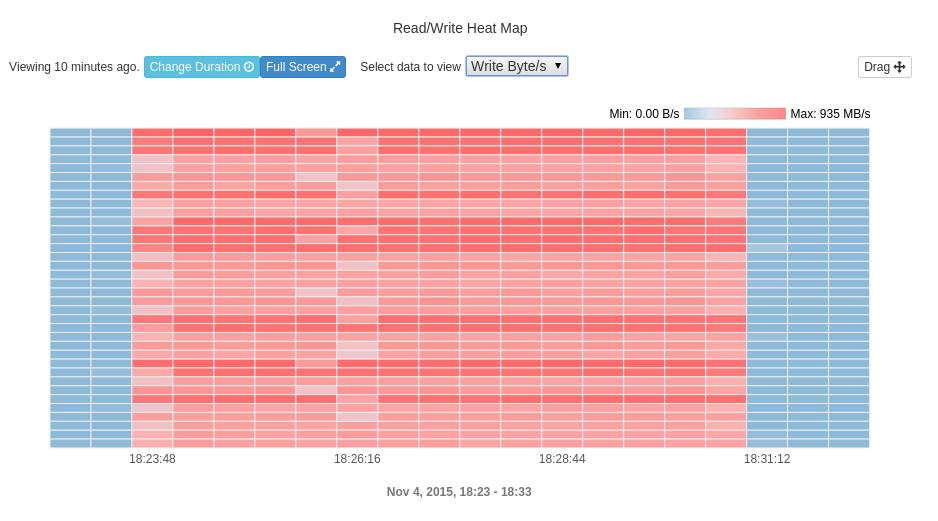

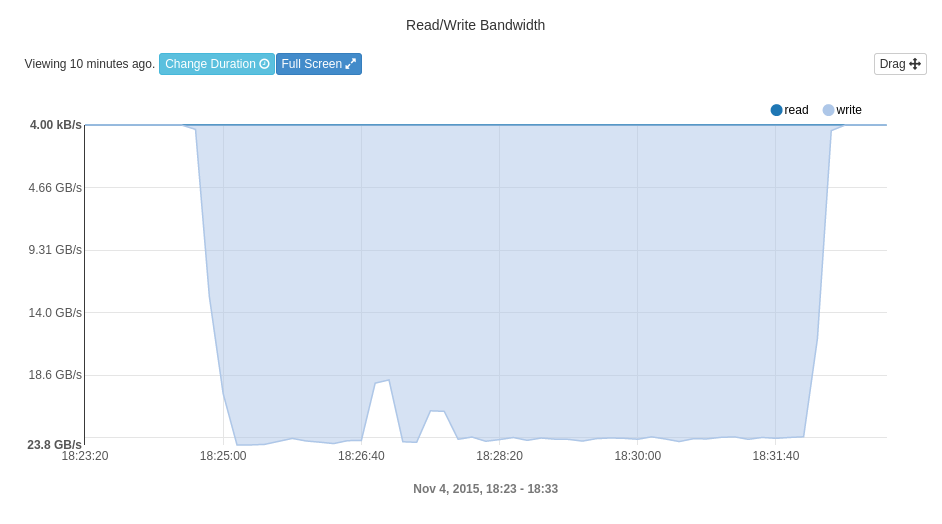

All screenshots were captured from the IEEL web interface (Intel Enterprise Edition for Lustre).

Here, two slow processes are 1.5 times slower than the fastest ones, so the test takes 1.5 times longer than it needs to.

Resulting speed was about 17 GB/sec

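The factor of 1.5 follows from a simple model. Assume (an idealization, not a measurement) that $S$ bytes are written by $N$ clients, that client $i$ writes at a fixed rate $r_i$ regardless of the others, that chunks are small enough for their granularity to be ignored, and that the storage itself is not the bottleneck:

$$
T_{\text{static}} = \max_i \frac{S/N}{r_i} = \frac{S}{N\,r_{\min}}, \qquad
T_{\text{chunk}} \approx \frac{S}{\sum_{i=1}^{N} r_i}.
$$

With $N-2$ clients at rate $r$ and two clients at $r/1.5$:

$$
T_{\text{static}} = \frac{1.5\,S}{N\,r}, \qquad
T_{\text{chunk}} \approx \frac{S}{r\,(N - 2 + 2/1.5)} = \frac{S}{r\,(N - 2/3)}, \qquad
\frac{T_{\text{static}}}{T_{\text{chunk}}} \approx \frac{1.5\,(N - 2/3)}{N} \xrightarrow{N \to \infty} 1.5 .
$$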

Here, the slowest processes no longer limit the duration of the test.

Resulting speed was 24 GB/sec


Because MPI is used to track the chunks, an MPI implementation is required. So far it has been tested with Open MPI only.

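Build with:

```
mpicc mpiio.c -lrt
```

`-lrt` links the POSIX real-time library (librt), which provides POSIX AIO. It is placed after the source file so that linkers which resolve libraries in command-line order pick it up.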

- `-b <N>` Block size
- `-r <N>` Maximum number of outstanding requests
- `-f <name>` File name
- `-c <N>` Chunk size
- `-s <N>` Number of bytes to write
- `-h` Print this help

`N` accepts the multiplier suffixes k, K, m, M, g, G, t, T, p, P, e, E. Lowercase suffixes are decimal and uppercase ones are binary, e.g. 1k = 1000 and 1K = 1024.