Official PyTorch implementation of MT4SSL: Boosting Self-Supervised Speech Representation Learning by Integrating Multiple Targets

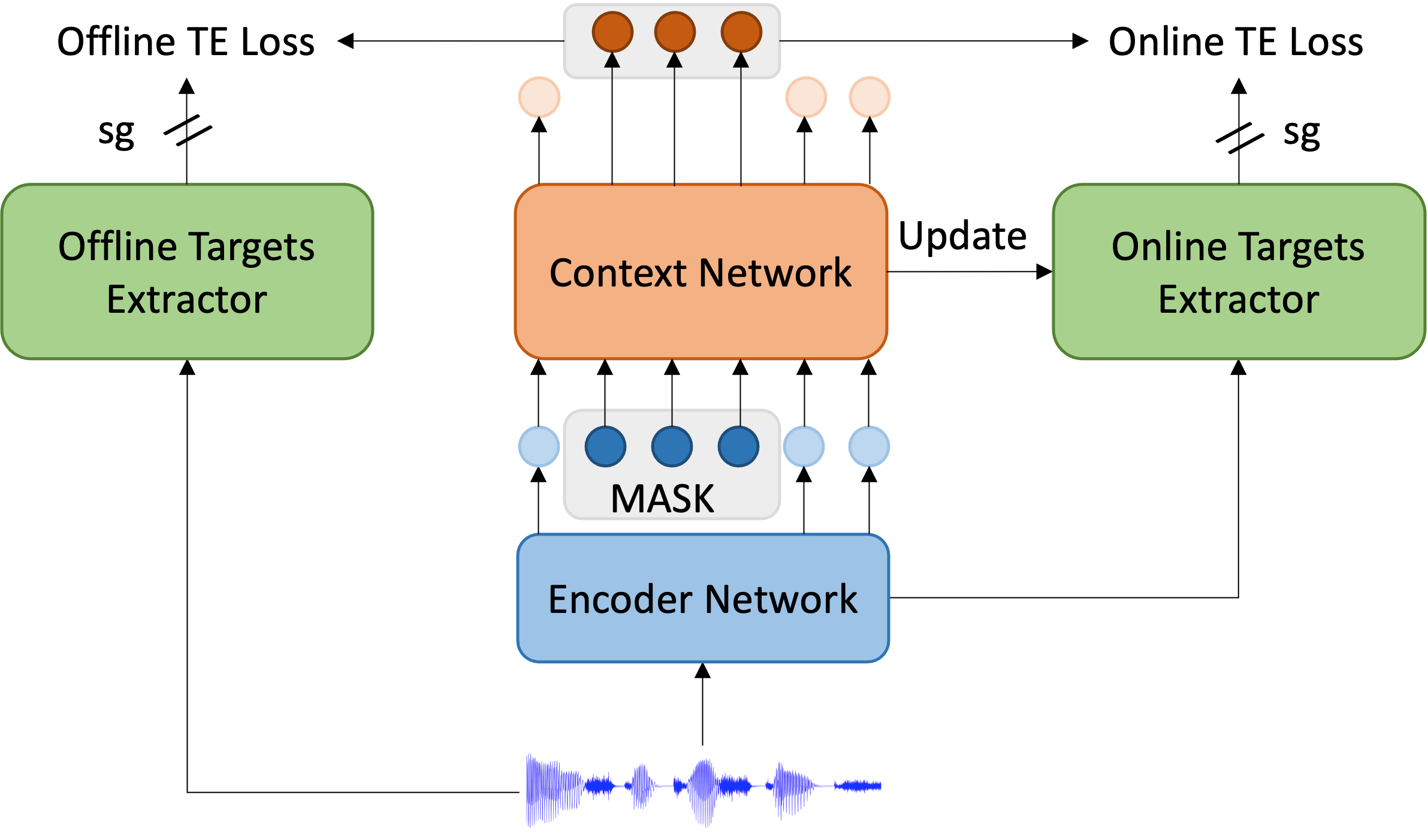

MT4SSL is a multi-task learning framework for speech-based self-supervised learning.

MT4SSL optimizes the model with offline targets and online targets simultaneously.

MT4SSL achieves good performance and fast convergence: a relatively low word error rate (WER) on the speech recognition task can be obtained with only a few pre-training steps.
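As a rough illustration of what optimizing with offline and online targets at the same time can look like, the sketch below adds a classification loss on a discrete offline target (for example a K-means cluster ID, like the `*.km` labels used for pre-training) to a regression loss on a continuous online target. The function names, the choice of mean squared error for the online term, and the equal default weights are assumptions made for illustration; they are not taken from the MT4SSL code.

```python
import math


def cross_entropy(logits, target_id):
    """Negative log-likelihood of target_id under softmax(logits)."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target_id]


def mse(pred, target):
    """Mean squared error between two equal-length vectors."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def multi_target_loss(logits, offline_id, pred_vec, online_vec,
                      offline_weight=1.0, online_weight=1.0):
    """Weighted sum of an offline (discrete) and an online (continuous) loss.

    The weights are hypothetical knobs, not MT4SSL hyperparameters.
    """
    offline_loss = cross_entropy(logits, offline_id)
    online_loss = mse(pred_vec, online_vec)
    return offline_weight * offline_loss + online_weight * online_loss


# Toy values for a single masked frame.
loss = multi_target_loss(
    logits=[2.0, 0.5, -1.0],   # scores over 3 hypothetical clusters
    offline_id=0,              # offline target: cluster ID
    pred_vec=[0.1, 0.2],       # student prediction
    online_vec=[0.0, 0.4],     # online target vector
)
print(f"combined loss: {loss:.4f}")
```

Both terms are computed from the same forward pass, so a single backward pass updates the model against both targets.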

The implementation is mainly based on the fairseq codebase.

    git clone https://github.com/pytorch/fairseq
    cd fairseq
    pip install --editable ./
    git clone https://github.com/ddlBoJack/MT4SSL

We use the LibriSpeech dataset for implementation.

Please follow the steps here to prepare *.tsv (sources), here to prepare *.km (K-means targets), and here to prepare *.ltr (supervised targets).
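For orientation, the snippet below sketches the letter-target (`*.ltr`) format as produced by fairseq's LibriSpeech label script: characters separated by spaces, with `|` marking the end of each word. The helper name `to_ltr` is hypothetical, and the sample transcript is made up; the `*.tsv` and `*.km` files still need to be generated with the preparation steps referenced above.

```python
def to_ltr(transcript: str) -> str:
    """Convert a word-level transcript into space-separated letters.

    Words are joined with '|' and a trailing '|' closes the last word.
    """
    words = transcript.strip().split()
    return " ".join("|".join(words)) + " |"


# Made-up transcript, upper-cased like LibriSpeech transcripts.
print(to_ltr("HELLO WORLD"))
```

Each line of a `*.ltr` file corresponds to the utterance on the same line of the matching `*.tsv` manifest (after the manifest's first line, which holds the audio root directory).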

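Pre-train the model:

    python fairseq_cli/hydra_train.py \
        --config-dir MT4SSL/code/config/pretraining \
        --config-name base_librispeech \
        checkpoint.save_dir=${pretrain_dir} \
        task.data=${data_dir} \
        task.label_dir=${label_dir} \
        task.label_type=km \
        common.user_dir=MT4SSL/code

You can simulate a larger number of GPUs on k GPUs by adding the command-line parameters distributed_training.distributed_world_size=k +optimization.update_freq='[x]', where x is the number of gradient-accumulation steps per update (the target GPU count divided by k).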
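The relationship between k and x can be made concrete with a small helper. In fairseq, `optimization.update_freq` accumulates gradients over that many batches before each optimizer step, so the effective batch size scales with the world size times the update frequency. The helper names and the GPU counts in the usage line are illustrative assumptions; use the GPU count that the chosen config was designed for.

```python
def update_freq_for(target_gpus: int, available_gpus: int) -> int:
    """Gradient-accumulation factor x needed to emulate target_gpus on k GPUs."""
    if target_gpus <= 0 or available_gpus <= 0:
        raise ValueError("GPU counts must be positive")
    if target_gpus % available_gpus != 0:
        raise ValueError("target_gpus must be a multiple of available_gpus")
    return target_gpus // available_gpus


def hydra_overrides(target_gpus: int, available_gpus: int) -> list:
    """Build the two command-line overrides mentioned above."""
    x = update_freq_for(target_gpus, available_gpus)
    return [
        f"distributed_training.distributed_world_size={available_gpus}",
        f"+optimization.update_freq='[{x}]'",
    ]


# Hypothetical setup: emulate 8 GPUs on a 2-GPU machine.
print(" ".join(hydra_overrides(target_gpus=8, available_gpus=2)))
```

Appending the printed overrides to the training command keeps the effective batch size the same as on the larger setup, at the cost of proportionally more wall-clock time per update.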

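Fine-tune the pre-trained model:

    python fairseq_cli/hydra_train.py \
        --config-dir MT4SSL/code/config/finetuning \
        --config-name base_10h \
        checkpoint.save_dir=${finetune_dir} \
        task.data=${data_dir} \
        model.w2v_path=${pretrain_dir} \
        common.user_dir=MT4SSL/code

As with pre-training, you can simulate a larger number of GPUs on k GPUs by adding distributed_training.distributed_world_size=k +optimization.update_freq='[x]', where x is the number of gradient-accumulation steps per update (the target GPU count divided by k).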

Decode with the Viterbi algorithm:

    python examples/speech_recognition/new/infer.py \
        --config-dir examples/speech_recognition/new/conf \
        --config-name infer \
        task=audio_finetuning \
        task.data=${data_dir} \
        task.labels=ltr \
        task.normalize=true \
        dataset.gen_subset=dev_clean,dev_other,test_clean,test_other \
        decoding.type=viterbi \
        decoding.beam=1500 \
        common_eval.path=${finetune_dir}/checkpoint_best.pt \
        common.user_dir=MT4SSL/code

Decode with the 4-gram language model using flashlight and kenlm:

    python examples/speech_recognition/new/infer.py \
        --config-dir examples/speech_recognition/new/conf \
        --config-name infer \
        task=audio_finetuning \
        task.data=${data_dir} \
        task.labels=ltr \
        task.normalize=true \
        dataset.gen_subset=dev_clean,dev_other,test_clean,test_other \
        decoding.type=kenlm \
        decoding.lmweight=2 decoding.wordscore=-1 decoding.silweight=0 \
        decoding.beam=1500 \
        decoding.lexicon=${lexicon_dir} \
        decoding.lmpath=${lm_dir} \
        common_eval.path=${finetune_dir}/checkpoint_best.pt \
        common.user_dir=MT4SSL/code

@inproceedings{ma2022mt4ssl,

  title={MT4SSL: Boosting Self-Supervised Speech Representation Learning by Integrating Multiple Targets},
  author={Ma, Ziyang and Zheng, Zhisheng and Tang, Changli and Wang, Yujin and Chen, Xie},
  booktitle={Proc. Interspeech},
  year={2023}
}

This repository is under the MIT license.