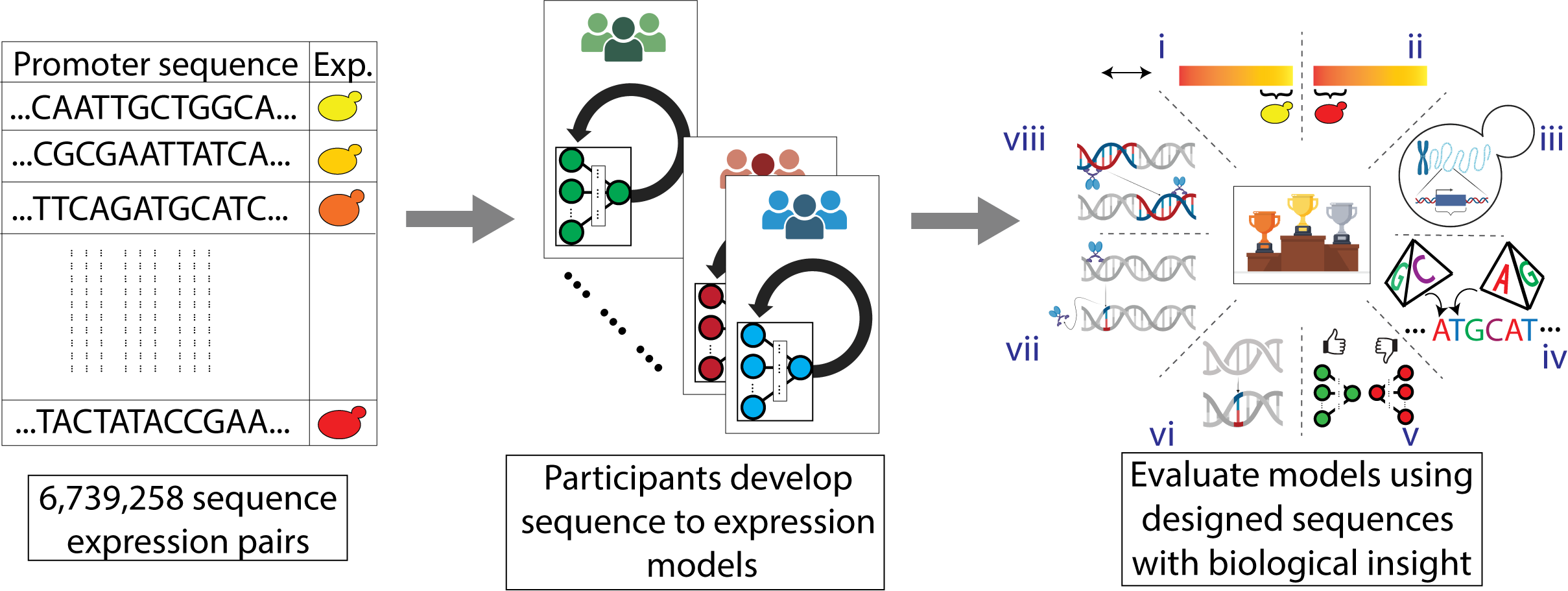

To address the lack of standardized evaluation and continual improvement of genomics models, we organized the Random Promoter DREAM Challenge 2022. We asked participants to design sequence-to-expression models and train them on expression measurements of random promoter sequences. Each model takes a regulatory DNA sequence as input and predicts the corresponding gene expression value. To test the limits of the models and provide insight into their performance, we designed a separate set of test sequences.
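As a rough illustration of what "a regulatory DNA sequence as input" can look like in practice, the sketch below one-hot encodes a sequence into one four-element vector per base. This is a common input representation for sequence-to-expression models. The helper name `one_hot` and the choice to encode ambiguous bases such as `N` as all zeros are assumptions for illustration; the DREAM-optimized models may use a different or extended encoding.

```python
from typing import List

BASES = "ACGT"


def one_hot(seq: str) -> List[List[int]]:
    """Hypothetical helper: one [A, C, G, T] indicator vector per position.

    Bases outside ACGT (e.g. N) become all-zero vectors (an assumption,
    not necessarily what the DREAM-optimized models do).
    """
    encoded = []
    for base in seq.upper():
        # 1 in the column matching this base, 0 elsewhere.
        encoded.append([1 if base == b else 0 for b in BASES])
    return encoded


print(one_hot("ACGN"))
# [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 0]]
```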

The top-performing solutions in the challenge exceeded the performance of all previous state-of-the-art models for similar data. They included features inspired by the nature of the experiment and by state-of-the-art models from computer vision and NLP, and they incorporated novel training strategies better suited to genomic sequence data. To determine how individual design choices affect performance, we created the Prix Fixe framework, which enables modular testing of individual model components; this revealed further performance gains.

Finally, we benchmarked the DREAM models on Drosophila and human datasets, including tasks that predict expression and open chromatin from DNA sequence, where they consistently surpassed existing state-of-the-art performance. Overall, we demonstrate that high-quality gold-standard genomics datasets can drive significant progress in model development.

More details about the consortium's findings are available on bioRxiv. The raw and processed data are available in GEO and Zenodo, respectively. All submissions (code and reports) made at the end of the DREAM Challenge are available here.

The environment.yml file specifies all libraries required for this project. If you manage virtual environments with Anaconda, you can install these dependencies directly with:

```bash
conda env create -n dream -f environment.yml
```

Details about the Prix Fixe framework are presented here. For a complete example of setting up and running the full pipeline of DREAM-optimized models with the Prix Fixe framework, refer to this tutorial.

To port your own model architectures into the prixfixe framework, refer to this tutorial, which contains an example ResNet implementation.

You can use the prixfixe framework to benchmark your own model architecture on the DREAM Challenge dataset. All the DREAM-optimized models share the same training strategy, as well as some network components such as the first layers block and the final layers block (see the paper for details). You can reuse these shared components and focus on designing your core architecture block. You can also try to design better first layers blocks and final layers blocks that further improve the performance of the DREAM-optimized models.
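To make the first/core/final split more concrete, here is a toy, pure-Python sketch of the idea: the first and final blocks stay fixed and only the core block is swapped. All names (`first_block`, `window_sum_core`, `identity_core`, `final_block`, `build_model`) are hypothetical and are not the prixfixe API; in the real framework the blocks are neural network layers trained end to end.

```python
from typing import Callable, List

Matrix = List[List[float]]  # rows = sequence positions, columns = channels
Block = Callable[[Matrix], Matrix]


def first_block(seq: str) -> Matrix:
    """Toy shared first block: one-hot encode A/C/G/T (other bases -> zeros)."""
    return [[1.0 if base == b else 0.0 for b in "ACGT"] for base in seq.upper()]


def window_sum_core(width: int) -> Block:
    """Toy core block: sum each channel over a sliding window (a crude convolution)."""
    def core(x: Matrix) -> Matrix:
        if len(x) < width:
            raise ValueError("sequence is shorter than the window width")
        n_channels = len(x[0])
        out = []
        for i in range(len(x) - width + 1):
            window = x[i:i + width]
            out.append([sum(row[c] for row in window) for c in range(n_channels)])
        return out
    return core


def identity_core(x: Matrix) -> Matrix:
    """Toy baseline core block that passes its input through unchanged."""
    return x


def final_block(x: Matrix) -> float:
    """Toy shared final block: average over positions and channels -> one scalar."""
    values = [v for row in x for v in row]
    return sum(values) / len(values)


def build_model(core: Block) -> Callable[[str], float]:
    # Only the core varies between candidates; first and final blocks are shared.
    return lambda seq: final_block(core(first_block(seq)))


print(build_model(identity_core)("ACGT"))       # 0.25
print(build_model(window_sum_core(2))("ACGT"))  # 0.5
```

Because `build_model` takes only the core as an argument, comparing two candidate cores holds the rest of the pipeline constant, which is the kind of controlled, component-level comparison described above.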

You can directly train the DREAM-optimized models on your own MPRA, STARR-seq, or ATAC-seq data by following these tutorials:

[single-task] [multi-task] [atac-seq]
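Before following the tutorials, your measurements need to be paired with their sequences. The sketch below assumes a hypothetical tab-separated file with one `sequence<TAB>expression` row per construct and pads or trims every sequence to a fixed length. The file layout, the `load_pairs` name, and the padding scheme are illustrative assumptions; check the tutorials for the input format they actually expect.

```python
import csv
import io
from typing import List, TextIO, Tuple


def load_pairs(handle: TextIO, seq_len: int, pad: str = "N") -> List[Tuple[str, float]]:
    """Hypothetical loader: read 'sequence<TAB>expression' rows.

    Sequences are trimmed or right-padded with `pad` so that every input
    has length `seq_len` (an assumed preprocessing choice).
    """
    pairs = []
    for row in csv.reader(handle, delimiter="\t"):
        if not row or row[0].startswith("#"):
            continue  # skip blank lines and comment lines
        seq, expr = row[0].strip().upper(), float(row[1])
        # Trim long sequences, then right-pad short ones to a fixed length.
        seq = seq[:seq_len].ljust(seq_len, pad)
        pairs.append((seq, expr))
    return pairs


example = io.StringIO("ACGTAC\t1.5\nACG\t-0.2\n")
print(load_pairs(example, seq_len=5))
# [('ACGTA', 1.5), ('ACGNN', -0.2)]
```

Here short sequences are padded on the right with `N`; whether to pad or trim on the left or the right depends on where the informative region sits in your constructs.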

If you want to add new model components to prixfixe that further improve the performance of the DREAM-optimized models, please follow the standard GitHub pull request process.

You can also contribute by converting DREAM submissions into the prixfixe framework and reporting their performance. Please open a GitHub issue to let us know which model you want to work on so that we can coordinate.