SAT arXiv BMVC2020 | AdaBits arXiv CVPR2020 | Model Zoo | BibTex

Illustration of neural network quantization with scale-adjusted training and adaptive bit-widths. The same model can run at different bit-widths, permitting instant and adaptive accuracy-efficiency trade-offs.

- Requirements:

- python3, pytorch 1.0, torchvision 0.2.1, pyyaml 3.13.

- Prepare ImageNet-1k data following pytorch example.

- Training and Testing:

- The codebase is a general ImageNet training framework using yaml config under the `apps` dir, based on PyTorch.

- To test, download pretrained models to the `logs` dir and directly run the command.

- To train, comment out `test_only` and `pretrained` in the config file. You will need to manage visible GPUs yourself.

- Command: `python train.py app:{apps/***.yml}`. `{apps/***.yml}` is the config file. Do not omit the `app:` prefix.

- Still have questions?

- If you still have questions, please search closed issues first. If the problem is not solved, please open a new issue.
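The training command above can also be assembled programmatically. The sketch below is only an illustration: the helper name `build_train_command` and the config path `apps/example.yml` are hypothetical, and selecting GPUs through the `CUDA_VISIBLE_DEVICES` environment variable is one common way to "manage visible GPUs", not something this repo prescribes.

```python
import os


def build_train_command(config_path, gpus):
    """Return (argv, env) for launching train.py on the given GPU ids.

    Hypothetical helper: it only builds the command, it does not run it.
    """
    env = dict(os.environ)
    # Restrict which GPUs the training process can see.
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(g) for g in gpus)
    # The config file must be passed with the `app:` prefix.
    argv = ["python", "train.py", f"app:{config_path}"]
    return argv, env


# `apps/example.yml` is a placeholder, not a file shipped with the repo.
argv, env = build_train_command("apps/example.yml", gpus=[0, 1])
print(" ".join(argv))
```

The returned pair can be passed to `subprocess.run(argv, env=env)` from the repository root.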

All settings are:

- `fp_pretrained=True`
- `weight_only=False`
- `rescale=True`
- `clamp=True`
- `rescale_conv=False`

For all adaptive models, `switchbn=True` and `switch_alpha=True`.
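When reproducing results, it may help to check a loaded config against the settings listed above. The following is a minimal sketch, assuming the config has already been parsed into a flat Python dict; the names `COMMON_SETTINGS`, `ADAPTIVE_SETTINGS`, and `find_mismatches` are hypothetical and not part of the codebase.

```python
# Values copied from the settings listed above; the dict layout is an assumption.
COMMON_SETTINGS = {
    "fp_pretrained": True,
    "weight_only": False,
    "rescale": True,
    "clamp": True,
    "rescale_conv": False,
}
ADAPTIVE_SETTINGS = {"switchbn": True, "switch_alpha": True}


def find_mismatches(config, adaptive=False):
    """Return {key: (found, expected)} for every setting that differs."""
    expected = dict(COMMON_SETTINGS)
    if adaptive:
        expected.update(ADAPTIVE_SETTINGS)
    return {
        key: (config.get(key), value)
        for key, value in expected.items()
        if config.get(key) != value
    }


# A config that matches the common settings but lacks the adaptive flags.
print(find_mismatches(dict(COMMON_SETTINGS), adaptive=True))
```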

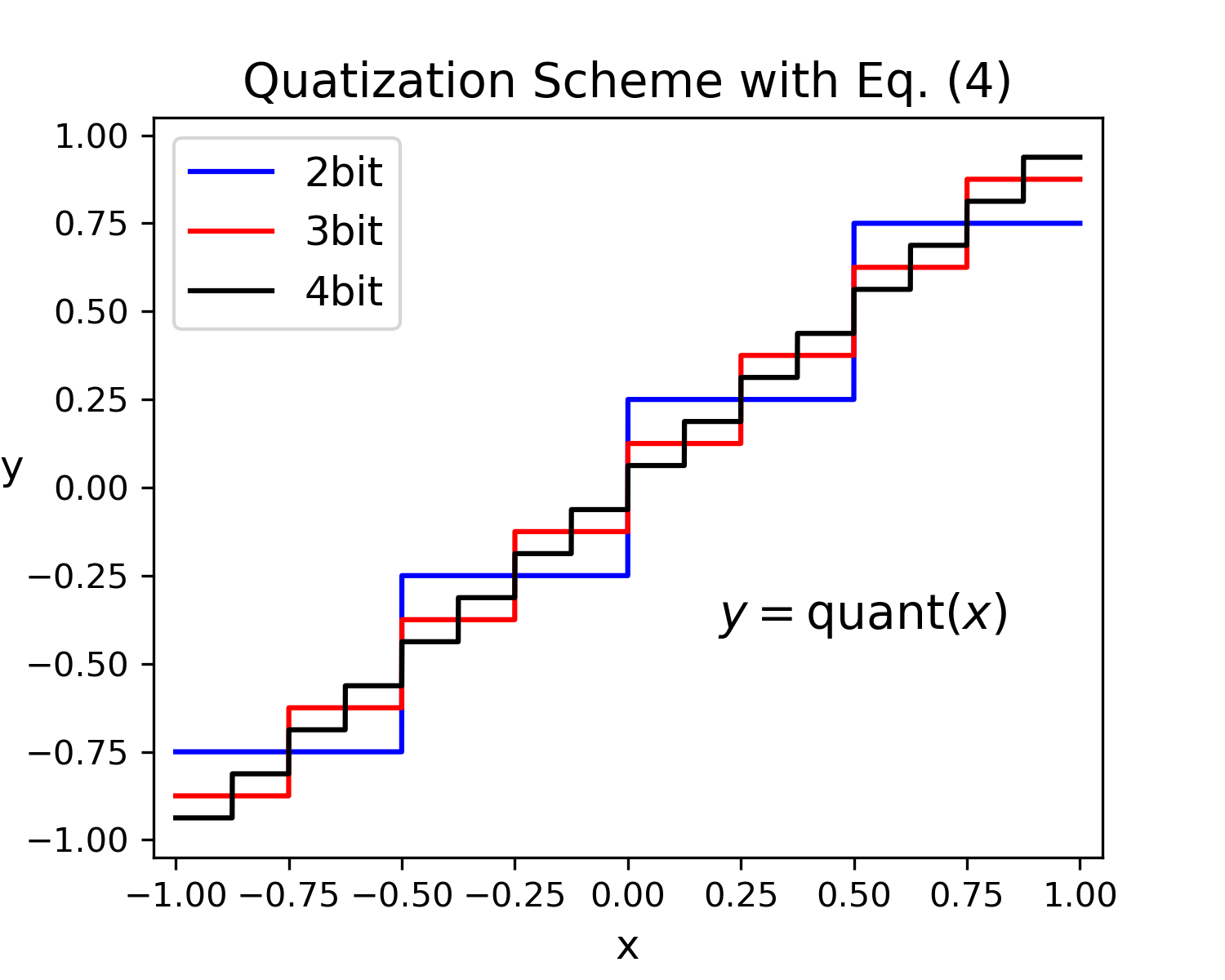

For convergent training, it is beneficial to have vanishing mean for weight distribution, besides proper variance. To this end, we should use the following quantization method for weights:

(also check this)

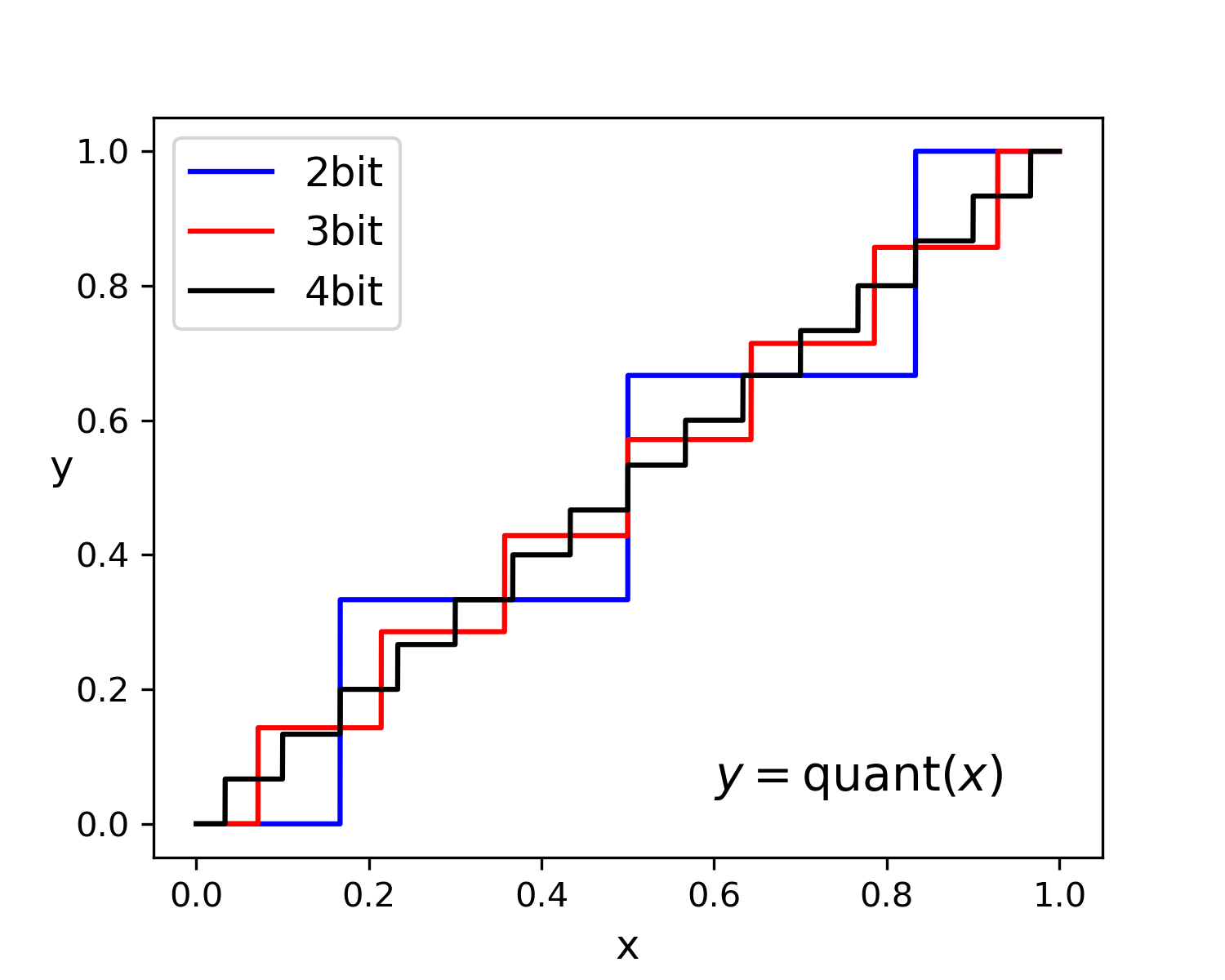

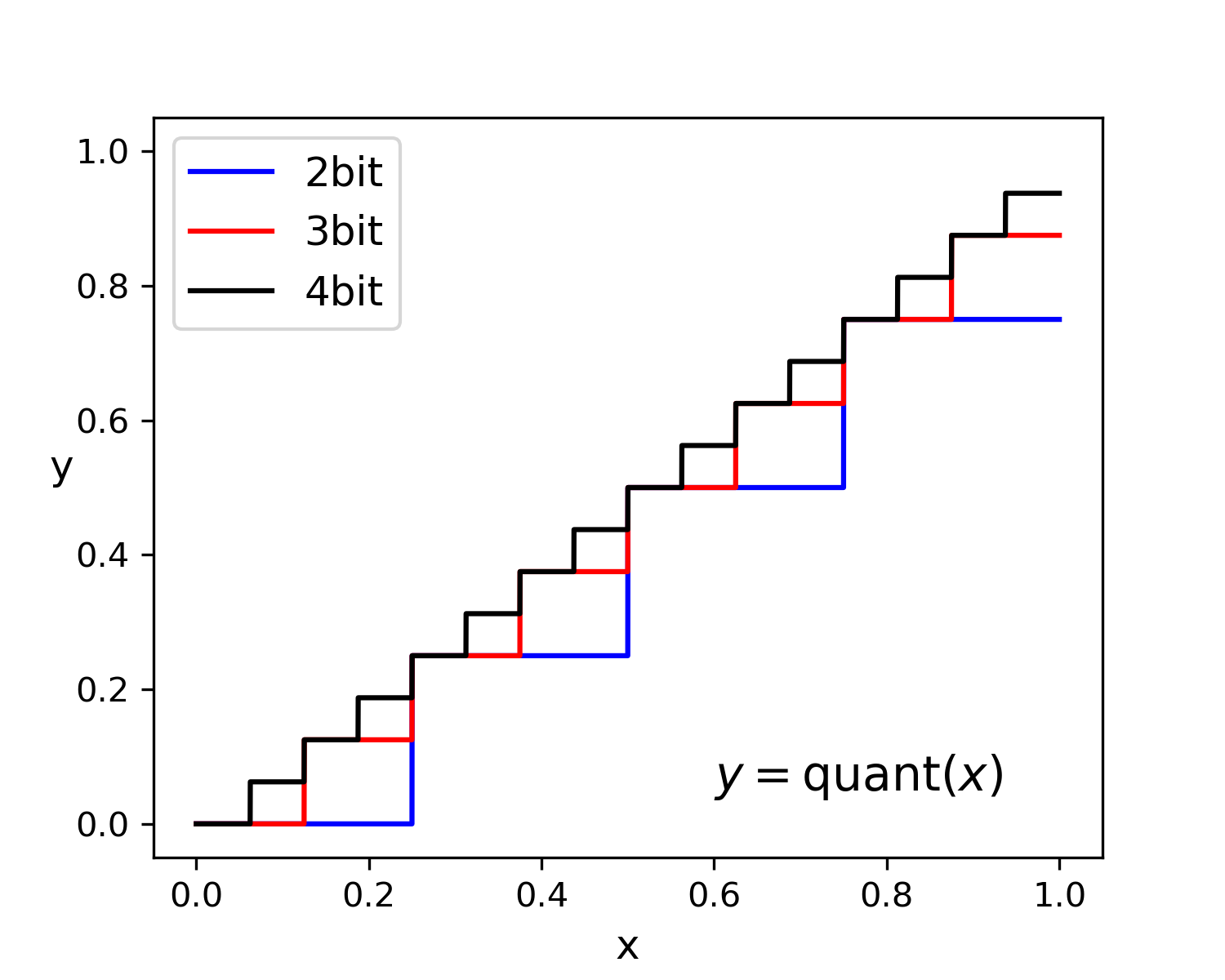

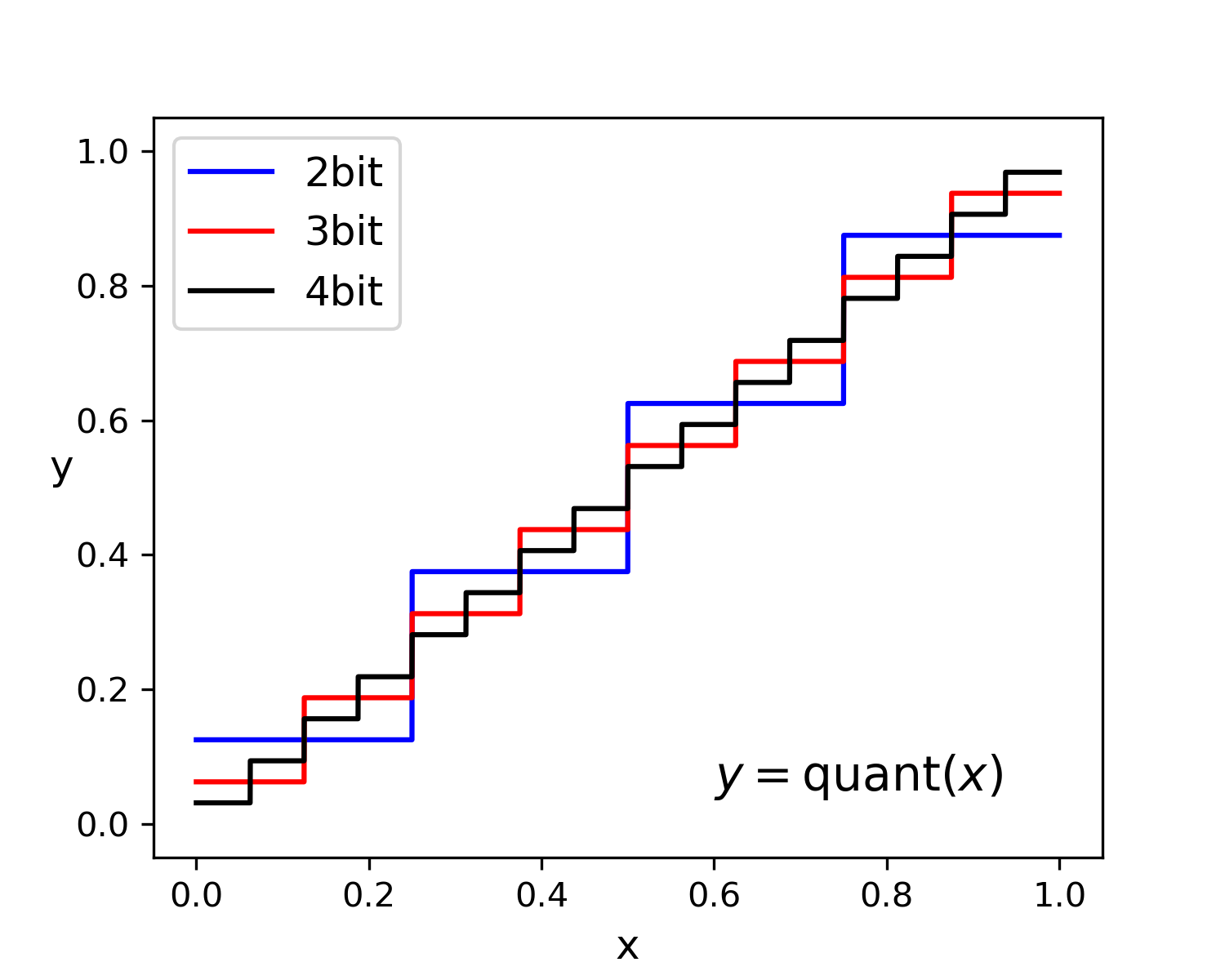
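To make the vanishing-mean idea concrete, here is a small sketch in plain Python. It is not the formula from the figures above: it assumes a uniform quantizer on [-1, 1] with 2^b levels, and compares a floor-based grid with the same grid shifted by half a step so that the levels are symmetric about zero. All function names are hypothetical.

```python
import math


def uncentered_levels(bits):
    """Levels of a floor-based quantizer mapped to [-1, 1): 2k / 2^b - 1."""
    n = 2 ** bits
    return [2 * k / n - 1.0 for k in range(n)]


def centered_levels(bits):
    """Same grid shifted by half a step, so the levels are symmetric about 0."""
    n = 2 ** bits
    return [(2 * k + 1) / n - 1.0 for k in range(n)]


def quantize_centered(w, bits):
    """Clip w to [-1, 1] and return the centered level of the bin it falls in."""
    n = 2 ** bits
    w = min(max(w, -1.0), 1.0)
    k = min(math.floor((w + 1.0) / 2.0 * n), n - 1)
    return (2 * k + 1) / n - 1.0


def mean(values):
    return sum(values) / len(values)


print(uncentered_levels(2), mean(uncentered_levels(2)))
print(centered_levels(2), mean(centered_levels(2)))
```

Under these assumptions the uncentered 2-bit grid has a nonzero mean, while the centered grid averages to zero, which is the property the paragraph above asks of the weight quantizer.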

The following table compares the different quantization schemes.

| Scheme |
| --- |
| Original |
| Modified |
| Centered Symmetric |
| Centered Asymmetric |

The code for the above is also available.

Implementing network quantization with adaptive bit-widths is straightforward:

- Switchable batchnorm and adaptive bit-widths layers are implemented in `models/quant_ops`.

- Quantization training with adaptive bit-widths is implemented in the `run_one_epoch` function in `train.py`.
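The idea of training one model for several bit-widths can be sketched on a toy problem. The example below is not the code in `train.py`: it assumes, in the style of slimmable-network training, that gradients from every bit-width are accumulated before a single update, and it uses a scalar weight, a simple uniform quantizer, and a straight-through gradient. The names `quantize` and `joint_step` are hypothetical.

```python
def quantize(w, bits):
    """Illustrative uniform quantizer on [-1, 1] with 2**bits centered levels."""
    n = 2 ** bits
    w = min(max(w, -1.0), 1.0)
    # (w + 1) / 2 * n is non-negative, so int() acts as floor here.
    k = min(int((w + 1.0) / 2.0 * n), n - 1)
    return (2 * k + 1) / n - 1.0


def joint_step(w, batch, bit_widths, lr=0.1):
    """One update of a scalar weight, summing gradients over all bit-widths."""
    grad = 0.0
    for bits in bit_widths:
        q = quantize(w, bits)
        for x, y in batch:
            # Gradient of (q * x - y)^2 w.r.t. w, treating dq/dw as 1
            # (straight-through estimator), averaged over the batch.
            grad += 2.0 * (q * x - y) * x / len(batch)
    # A single update shared by every bit-width.
    return w - lr * grad


w = 0.0
for _ in range(5):
    w = joint_step(w, batch=[(1.0, 0.75)], bit_widths=[2, 4])
print(w)
```

In a real network the per-bit-width forward passes would also select the matching switchable batchnorm statistics; that part is omitted here.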

This repo is based on slimmable_networks and benefits from the following projects

CC 4.0 Attribution-NonCommercial International

The software is for educational and academic research purposes only.

@article{jin2019quantization,

title={Towards efficient training for neural network quantization},

author={Jin, Qing and Yang, Linjie and Liao, Zhenyu},

journal={arXiv preprint arXiv:1912.10207},

year={2019}

}

@inproceedings{jin2020sat,

title={Neural Network Quantization with Scale-Adjusted Training},

author={Jin, Qing and Yang, Linjie and Liao, Zhenyu and Qian, Xiaoning},

booktitle={The British Machine Vision Conference},

year={2020}

}

@inproceedings{jin2020adabits,

title={AdaBits: Neural Network Quantization with Adaptive Bit-Widths},

author={Jin, Qing and Yang, Linjie and Liao, Zhenyu},

booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},

year={2020}

}