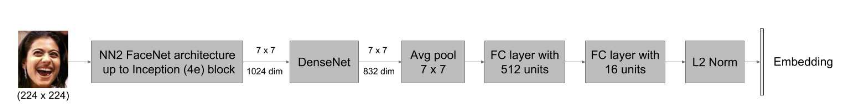

We find that the DenseNet block is not important for the model. You can easily replace it with a 3×3 convolution (Conv3*3).

Our model structure is the same as maskrcnn-benchmark

- PyTorch 1.0

pip3 install torch torchvision

- Easydict

pip3 install easydict

- Apex

- Ninja

sudo apt-get install ninja-build

- tqdm

pip3 install tqdm

- ResNet18

- Inception NN2

- Inception Resnet

- ResNet50

Be careful! Our model does not use all of the triplets, because some data was lost during crawling. In addition, we use our own alignment model. If you need our processed data, please email me at qiulingteng@stu.hit.edu.cn. The original Google dataset is available here.

For everyone's benefit, I have uploaded my processed data to Baidu WebDisk so that anyone can download it for research.

Note that our collected data is slightly incomplete because some links on the original dataset's website are broken. The link address is:

For the annotation format, refer to train_list and test_list.

Key: ndcm

-- PROJECT_ROOT

-- Triple Dataset

|-- train_align

|-- annotations

In annotations, the names of your training triplet data are saved in *.txt files.

All of these paths can be changed in paths_catalog.py.
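As a quick sanity check of the layout above, the annotation lists can be loaded with a few lines of Python. This is only a sketch: the helper name `read_annotation_lists` is hypothetical, it assumes one entry per line, and it does not interpret the per-line format (see train_list and test_list for that).

```python
from pathlib import Path


def read_annotation_lists(annotations_dir):
    """Map each *.txt file name in `annotations_dir` to its non-empty lines.

    Assumption: one entry per line. The per-line format is not
    interpreted here; lines are returned as stripped strings.
    """
    lists = {}
    for txt_path in sorted(Path(annotations_dir).glob("*.txt")):
        with open(txt_path, encoding="utf-8") as handle:
            lists[txt_path.name] = [line.strip() for line in handle if line.strip()]
    return lists
```

Calling it on the annotations directory returns a dictionary keyed by file name, which makes it easy to confirm that every list is non-empty before training starts.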

We must first build the environment for training:

make link

This creates a soft link to result.

| Backbone | ONE_CLASS ACC | TWO_CLASS ACC | THREE_CLASS ACC | ACC |
|---|---|---|---|---|
| InceptionNN2+denseblock | 76.4 | 78.8 | 77.8 | 77.3 |
| InceptionResnet+denseblock | 64.1 | 70.0 | 65.2 | 66.9 |
| Resnet18+Conv3*3 | 78.2 | 80.5 | 80.2 | 79.7 |
| Resnet50+Conv3*3 | 78.5 | 81.3 | 80.9 | 80.3 |

- Create the config file of the dataset: train_list.txt

- Modify config/*.yaml according to your requirements

We use the official torch.distributed.launch in order to launch multi-gpu training. This utility function from PyTorch spawns as many Python processes as the number of GPUs we want to use, and each Python process will only use a single GPU.
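To illustrate the one-process-per-GPU model described above, the sketch below shows how a training script can find out which GPU it was assigned. `torch.distributed.launch` appends a `--local_rank` argument to every process it spawns, and newer launchers can set the `LOCAL_RANK` environment variable instead. The helper name `parse_local_rank` is hypothetical, and this is not necessarily how this repository's train.py handles it.

```python
import argparse
import os


def parse_local_rank(argv=None):
    """Return the index of the GPU this process should use.

    Hypothetical helper: torch.distributed.launch appends
    --local_rank=<i> to the arguments of every process it spawns.
    """
    parser = argparse.ArgumentParser()
    # Fall back to the LOCAL_RANK environment variable (set by newer
    # launchers), then to 0 for a plain single-process run.
    parser.add_argument(
        "--local_rank",
        type=int,
        default=int(os.environ.get("LOCAL_RANK", 0)),
    )
    # parse_known_args ignores any other training options on the command line.
    args, _unknown = parser.parse_known_args(argv)
    return args.local_rank


if __name__ == "__main__":
    local_rank = parse_local_rank()
    # In a real training script this would typically be followed by:
    #   torch.cuda.set_device(local_rank)
    #   torch.distributed.init_process_group(backend="nccl", init_method="env://")
    print(f"this process would use GPU {local_rank}")
```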

For each experiment, you can just run this script:

export NGPUS=8

python -m torch.distributed.launch --nproc_per_node=$NGPUS train.py

The results above were all obtained with non-distributed training. For each experiment, you can just run this script:

bash train.sh

In the evaluator, we have implemented multi-GPU inference based on multiple processes. In the inference phase, the function spawns as many Python processes as the number of GPUs we want to use, and each Python process handles a subset of the whole evaluation dataset on a single GPU.
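One common way to give each inference process its own subset of the evaluation set is a strided split over sample indices. The sketch below shows that idea in isolation. The function name `shard_indices` and the strided scheme are assumptions for illustration, and the evaluator in this repository may partition the data differently.

```python
def shard_indices(num_samples, world_size, rank):
    """Return the dataset indices handled by process `rank`.

    Strided split: process r takes samples r, r + world_size, ...
    so the shards are disjoint and differ in size by at most one.
    """
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    return list(range(rank, num_samples, world_size))


if __name__ == "__main__":
    # 10 evaluation samples split across 4 hypothetical GPU processes.
    for rank in range(4):
        print(rank, shard_indices(10, 4, rank))
```

Each process would then evaluate only its own shard, and the per-shard counts of correct predictions can be summed to obtain accuracy over the whole evaluation set.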

- input arguments in shell:

bash inference.sh