Repository for deploying multiple machine learning models for inference on AWS Lambda and Amazon EFS

In this repo, you will find all the code needed to deploy your application for Machine Learning Inference using AWS Lambda and Amazon EFS.

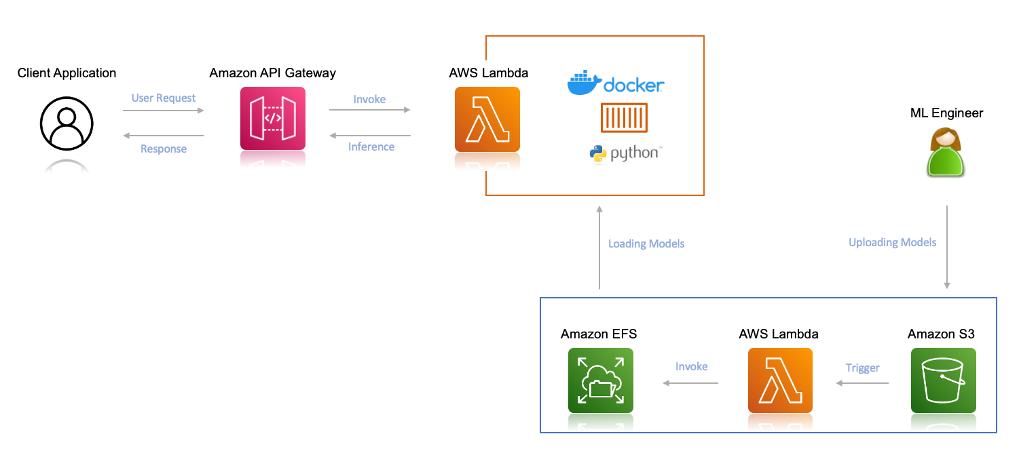

Here is the architectural workflow of our application:

- Create a serverless application that triggers a Lambda function whenever a new model is uploaded to your S3 bucket. The function copies that file from your S3 bucket to the EFS file system.

- Create another Lambda function that loads the model from Amazon EFS and performs a prediction on an image.

- Build and deploy both applications using the AWS Serverless Application Model (AWS SAM).
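As a rough illustration of the first step, here is a minimal sketch of a handler that copies uploaded objects from S3 to EFS. It is not the code shipped in this repo: the `EFS_MOUNT_PATH` environment variable, the `/mnt/ml` default, and the helper names `efs_destination` and `copy_records` are assumptions made for the example. It relies only on the standard S3 event notification shape and the boto3 `download_file` call.

```python
import os
import urllib.parse

# Assumption: the EFS access point is mounted at this local path in the
# Lambda function configuration. The variable name is hypothetical.
EFS_MOUNT_PATH = os.environ.get("EFS_MOUNT_PATH", "/mnt/ml")


def efs_destination(key, mount_path):
    """Map an S3 object key to a file path directly under the EFS mount."""
    return os.path.join(mount_path, os.path.basename(key))


def copy_records(event, s3_client, mount_path):
    """Download every object named in an S3 event notification to EFS."""
    copied = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 event notifications are URL-encoded.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        destination = efs_destination(key, mount_path)
        os.makedirs(os.path.dirname(destination), exist_ok=True)
        s3_client.download_file(bucket, key, destination)
        copied.append(destination)
    return copied


def lambda_handler(event, context):
    """Lambda entry point for the S3 upload trigger."""
    import boto3  # imported lazily so the helpers can be tested without boto3

    return {"copied": copy_records(event, boto3.client("s3"), EFS_MOUNT_PATH)}
```

Keeping the copy logic in `copy_records` with an injected client makes it easy to exercise locally with a stub before deploying.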

To use the Amazon EFS file system from Lambda, you need the following:

- An Amazon Virtual Private Cloud (Amazon VPC)

- An Amazon EFS file system created within that VPC with an access point as an application entry point for your Lambda function.

- A Lambda function (in the same VPC and private subnets) referencing the access point.

The following diagram illustrates the solution architecture:

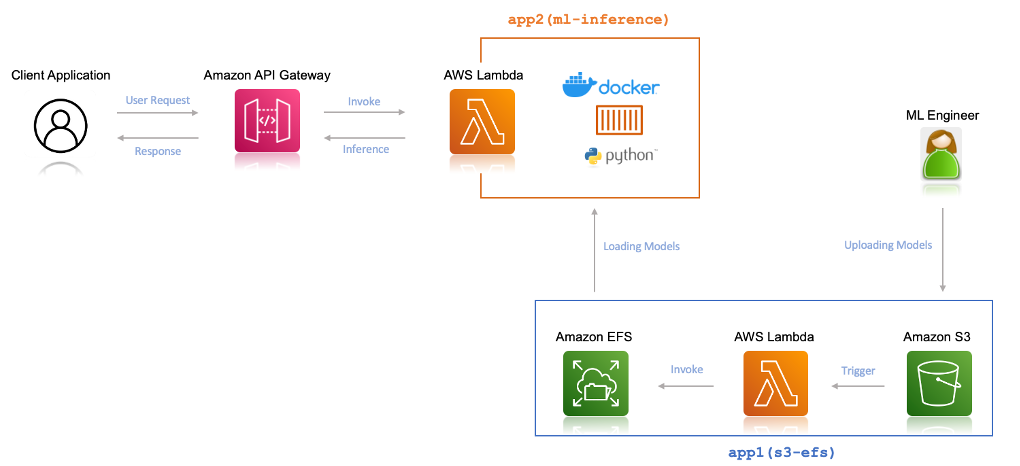

Now, we are going to use a single SAM deployment, which will create the following two serverless applications:

- app1(s3-efs): The serverless application that transfers the uploaded ML models from your S3 bucket to your EFS file system.

- app2(ml-inference): The serverless application that serves ML inference requests from the client.
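Because app2 may serve several models from the same file system, one common pattern is to cache each loaded model in memory so that warm Lambda invocations skip the EFS read. The sketch below is illustrative only: the `MODEL_DIR` variable, the `/mnt/ml` default, the `load_model` helper, and the use of `pickle` as the default file format are all assumptions, and the actual model format in this repo may differ. Only unpickle files you trust.

```python
import os
import pickle

# Assumption: models are stored directly under the EFS mount path.
MODEL_DIR = os.environ.get("MODEL_DIR", "/mnt/ml")

# Module-level cache; it survives across warm invocations of the same
# Lambda execution environment.
_MODEL_CACHE = {}


def load_model(name, model_dir=MODEL_DIR, loader=None):
    """Return the model stored at model_dir/name, reusing a cached copy.

    The cache entry is refreshed when the file's modification time changes,
    so a newly uploaded version of the same model is picked up.
    """
    path = os.path.join(model_dir, name)
    mtime = os.path.getmtime(path)
    cached = _MODEL_CACHE.get(path)
    if cached is not None and cached[0] == mtime:
        return cached[1]

    if loader is None:
        # Default loader assumes a pickled model (hypothetical format).
        with open(path, "rb") as f:
            model = pickle.load(f)
    else:
        # A framework-specific loader can be passed in instead.
        model = loader(path)

    _MODEL_CACHE[path] = (mtime, model)
    return model
```

An inference handler would call `load_model` with the requested model name and then run the prediction on the incoming image with whatever framework the model uses.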

Here is a quick walkthrough of the demo: