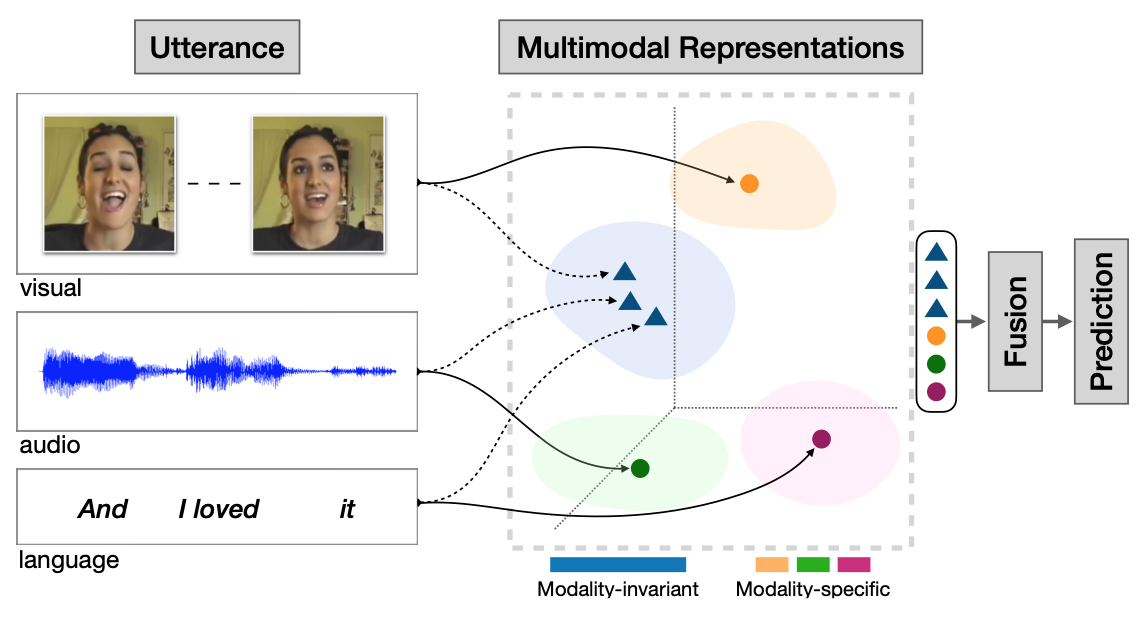

Code for the ACM MM 2020 paper MISA: Modality-Invariant and -Specific Representations for Multimodal Sentiment Analysis

We work with a conda environment.

conda env create -f environment.yml

conda activate misa-code

- Install the CMU Multimodal SDK. Ensure that you can run `from mmsdk import mmdatasdk`.
- Option 1: Download the pre-computed splits and place the contents inside the `datasets` folder.
- Option 2: Re-create the splits by downloading the data from MMSDK. For this, simply run the code as detailed next.
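Before moving on to the run steps, the import check from the first bullet can be scripted. The following is a minimal sketch, not part of this repository: the helper name `sdk_available` is hypothetical, and it only tests whether a top-level `mmsdk` package is discoverable by the active Python interpreter.

```python
import importlib.util


def sdk_available(module_name: str = "mmsdk") -> bool:
    """Return True if a top-level package with this name can be located.

    find_spec looks the package up on sys.path without importing it,
    so this check is cheap and has no side effects.
    """
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    if sdk_available():
        print("mmsdk found; `from mmsdk import mmdatasdk` should be importable.")
    else:
        print("mmsdk not found; check the CMU Multimodal SDK installation.")
```

If the check fails inside the `misa-code` environment, the SDK is most likely not installed or not on the Python path for that environment.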

- `cd src`
- Set `word_emb_path` in `config.py` to the GloVe file.
- Set `sdk_dir` to the path of CMU-MultimodalSDK.
- `python train.py --data mosi`. Replace `mosi` with `mosei` or `ur_funny` for other datasets.
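The two path settings and the dataset choice can be sanity-checked before launching a run. This is a hedged sketch under stated assumptions: `check_paths` and `train_command` are hypothetical helpers that do not exist in this repository, and the sketch assumes `word_emb_path` points to a file and `sdk_dir` to a directory. Only the `--data` flag and the three dataset names come from the instructions above.

```python
from pathlib import Path

# Dataset names accepted by `--data`, as listed in the run instructions.
DATASETS = ("mosi", "mosei", "ur_funny")


def check_paths(word_emb_path, sdk_dir):
    """Return a list of human-readable problems; an empty list means both paths look usable."""
    problems = []
    if not Path(word_emb_path).is_file():
        problems.append(f"word_emb_path is not a file: {word_emb_path}")
    if not Path(sdk_dir).is_dir():
        problems.append(f"sdk_dir is not a directory: {sdk_dir}")
    return problems


def train_command(dataset):
    """Build the argument list for the training command; run it from inside `src`."""
    if dataset not in DATASETS:
        raise ValueError(f"unknown dataset {dataset!r}; expected one of {DATASETS}")
    return ["python", "train.py", "--data", dataset]
```

The list returned by `train_command("mosei")` can be passed to `subprocess.run(..., cwd="src")` once `check_paths` reports no problems for the values you set in `config.py`.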

If this paper is useful for your research, please cite it as:

@article{hazarika2020misa,

title={MISA: Modality-Invariant and -Specific Representations for Multimodal Sentiment Analysis},

author={Hazarika, Devamanyu and Zimmermann, Roger and Poria, Soujanya},

journal={arXiv preprint arXiv:2005.03545},

year={2020}

}

For any questions, please email hazarika@comp.nus.edu.sg.