NTIRE 2021 Depth Guided Relighting Challenge Track 2: Any-to-any relighting (CVPR Workshop 2021) 3rd Solution.

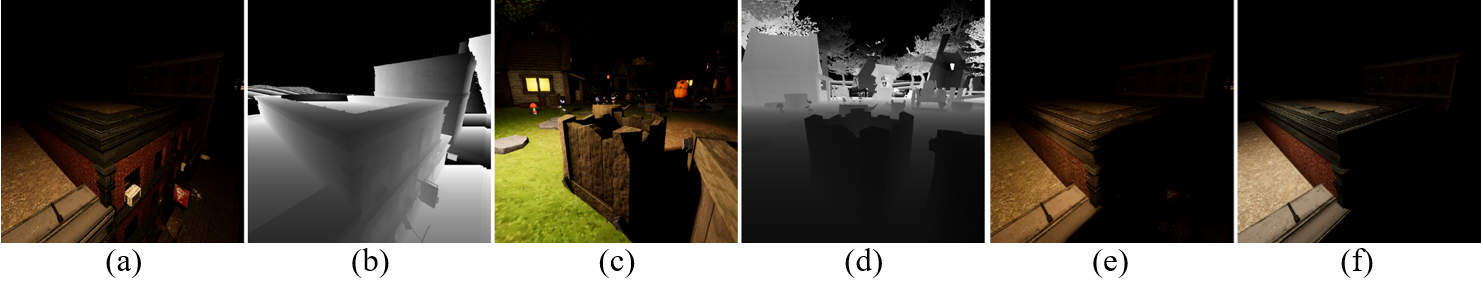

Any-to-any relighting aims to relight an input image, captured under one color temperature and light source position, so that it matches the illumination setting of a second, guide image. The depth maps of both images are also given. The example figure shows: (a) and (c) the original image and its depth map; (b) and (d) the guide image and its depth map; (e) the relit image; (f) the ground truth.

Report paper, S3Net paper [CVPRW 2021, accepted]

$ git clone https://github.com/dectrfov/NTIRE-2021-Depth-Guided-Image-Any-to-Any-relighting.git

$ cd NTIRE-2021-Depth-Guided-Image-Any-to-Any-relighting

2. Download the pre-trained model and testing images

Put the model into model/

Put the images into test/

The images come in two folders: guide and input
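Before running the demo, it can help to confirm that both image folders are in place. The snippet below is a minimal sketch, not part of this repository: the helper name `missing_test_folders` is hypothetical, and it only assumes the `guide` and `input` folder names mentioned above.

```python
from pathlib import Path

# Folder names taken from this README; the helper itself is illustrative
# and is not shipped with the repository.
REQUIRED_SUBFOLDERS = ("input", "guide")


def missing_test_folders(test_root):
    """Return the required subfolders that are absent under test_root."""
    root = Path(test_root)
    return [name for name in REQUIRED_SUBFOLDERS if not (root / name).is_dir()]


# Example usage (run from the repository root):
#   missing = missing_test_folders("test")
#   if missing:
#       print("Missing folders under test/:", ", ".join(missing))
```

An empty list means both folders were found; any name in the returned list still needs to be created or downloaded.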

cd track2/code

python demo.py --input_data_path <'input' folder> --output_dir <output folder> --name <folder name in output folder> --model_path <pre_trained model path>

Ex:

python demo.py --input_data_path ../test/input --output_dir ../submit_result --name test --model_path ../model/weight.pkl

Here are the original images from the VIDIT dataset
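If you prefer to launch the demo from a Python script (for example, to loop over several models), the command line can be assembled programmatically. This is a rough sketch under stated assumptions: `build_demo_command` is a hypothetical helper, and it only uses the four `demo.py` flags documented in this README.

```python
import subprocess
import sys


def build_demo_command(input_dir, output_dir, name, model_path):
    """Assemble the demo.py argument list from the flags listed in this README."""
    return [
        sys.executable, "demo.py",
        "--input_data_path", str(input_dir),
        "--output_dir", str(output_dir),
        "--name", str(name),
        "--model_path", str(model_path),
    ]


# Example usage, mirroring the command shown in this README.
# demo.py lives in track2/code, so the process is started from that folder:
#   cmd = build_demo_command("../test/input", "../submit_result",
#                            "test", "../model/weight.pkl")
#   subprocess.run(cmd, check=True, cwd="track2/code")
```

Passing the arguments as a list avoids shell quoting problems when a path contains spaces.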

We also provide the images relit by our S3Net: val and test.

Another solution from our team (1st Solution, NTIRE 2021 Depth Guided Relighting Challenge Track 1: One-to-one relighting):

https://github.com/weitingchen83/NTIRE2021-Depth-Guided-Image-Relighting-MBNet

Please cite this paper in your publications if it is helpful for your tasks:

Bibtex:

@inproceedings{yang2021S3Net,

title = {{S3N}et: A Single Stream Structure for Depth Guided Image Relighting},

author = {Yang, Hao-Hsiang and Chen, Wei-Ting and Kuo, Sy-Yen},

booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW)},

year = {2021}

}