GAUDI: A Neural Architect for Immersive 3D Scene Generation, arXiv.

Samples from GAUDI (Allow a couple minutes of loading time for videos.)

Miguel Angel Bautista*,

Pengsheng Guo*,

Samira Abnar,

Walter Talbott,

Alexander Toshev,

Zhuoyuan Chen,

Laurent Dinh,

Shuangfei Zhai,

Hanlin Goh,

Daniel Ulbricht,

Afshin Dehghan,

Joshua M. Susskind

Apple (*equal contribution)

- We introduce GAUDI, a generative model that captures the distribution of 3D scenes parametrized as radiance fields.

- We decompose the generative model into two steps: (i) optimizing a latent representation of 3D radiance fields and their corresponding camera poses; (ii) learning a powerful score-based generative model on the latent space.

- GAUDI obtains state-of-the-art performance across multiple datasets for unconditional generation and enables conditional generation of 3D scenes from different modalities such as text or RGB images.


We introduce GAUDI, a generative model capable of capturing the distribution of complex and realistic 3D scenes that can be rendered immersively from a moving camera. We tackle this challenging problem with a scalable yet powerful approach, where we first optimize a latent representation that disentangles radiance fields and camera poses. This latent representation is then used to learn a generative model that enables both unconditional and conditional generation of 3D scenes. Our model generalizes previous works that focus on single objects by removing the assumption that the camera pose distribution can be shared across samples. We show that GAUDI obtains state-of-the-art performance in the unconditional generative setting across multiple datasets and allows for conditional generation of 3D scenes given conditioning variables like sparse image observations or text that describes the scene.

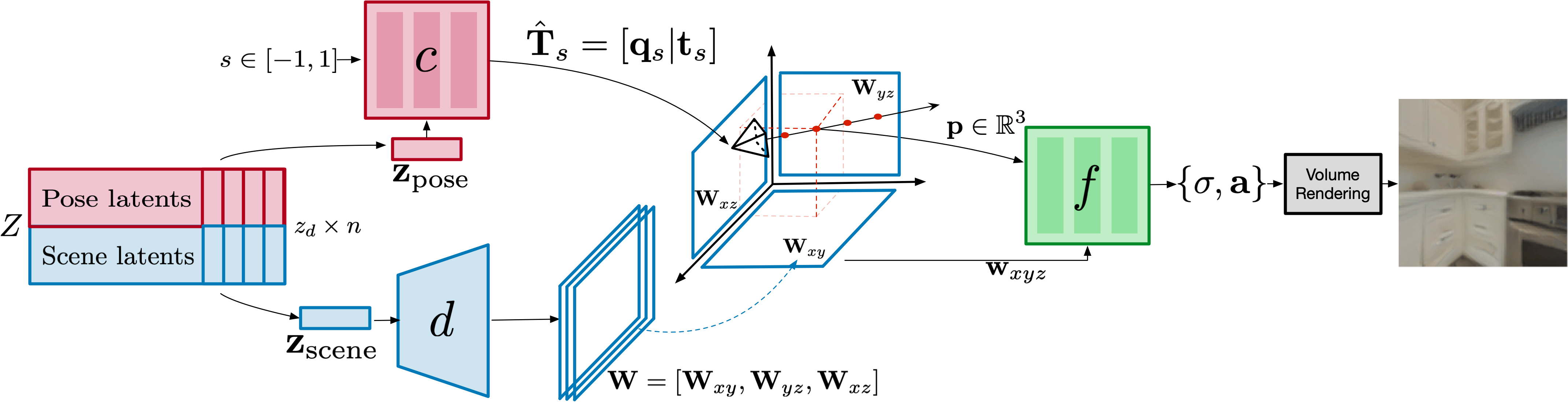

Our model is composed of two stages: latent representation optimization and generative modeling. Finding a powerful latent representation for scene radiance fields and camera poses is critical to obtaining good performance. To achieve this, we design a decoder with three modules:

- A scene decoder $d$ that takes scene latents as input and outputs a tri-plane latent representation used to condition the radiance field MLP.

- A camera pose decoder $c$ that takes a camera pose latent and a timestamp as input and outputs a camera pose.

- A radiance field $f$ that takes a 3D point as input and is conditioned on the tri-plane representation.

The parameters of all the modules and the latents for scene and camera poses are optimized in the first stage. In the second stage, we learn a score-based generative model in latent space.
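The following is a minimal structural sketch of how the three decoder modules could fit together, not the authors' implementation. Everything in it is an assumption made for illustration: the function names, the resolution `RES`, the single-channel planes, the `(x, y, z, yaw)` pose format, and the fixed toy formulas that stand in for learned networks. The tri-plane lookup (projecting a 3D point onto the XY, XZ and YZ planes and summing the features found there) is a common convention in tri-plane methods and is assumed here rather than taken from this page.

```python
import math

RES = 4  # hypothetical tri-plane resolution (cells per side)


def scene_decoder(z_scene):
    """Toy stand-in for d: scene latent -> three RES x RES planes (XY, XZ, YZ)."""
    planes = []
    for p in range(3):
        plane = [
            [
                math.tanh(sum(z * math.sin((p + 1) * (i + 1) + (k + 1) * (j + 1))
                              for k, z in enumerate(z_scene)))
                for j in range(RES)
            ]
            for i in range(RES)
        ]
        planes.append(plane)
    return planes


def camera_pose_decoder(z_pose, t):
    """Toy stand-in for c: (pose latent, timestamp in [0, 1]) -> (x, y, z, yaw)."""
    return (z_pose[0] * t, z_pose[1] * t, 0.0, z_pose[2] * math.pi * t)


def _cell(u):
    """Map a coordinate in [-1, 1] to a plane index in [0, RES - 1]."""
    u = min(max(u, -1.0), 1.0)
    return min(int((u + 1.0) / 2.0 * RES), RES - 1)


def radiance_field(point, planes):
    """Toy stand-in for f: 3D point, conditioned on the tri-plane -> (rgb, density)."""
    x, y, z = point
    ix, iy, iz = _cell(x), _cell(y), _cell(z)
    # Project the point onto each plane and sum the features found there.
    feat = planes[0][ix][iy] + planes[1][ix][iz] + planes[2][iy][iz]
    density = math.log1p(math.exp(feat))  # softplus keeps density positive
    rgb = tuple(1.0 / (1.0 + math.exp(-(feat + s))) for s in (-1.0, 0.0, 1.0))
    return rgb, density


z_scene = [0.3, -0.7, 0.5]   # hypothetical scene latent
z_pose = [1.0, 0.5, 0.25]    # hypothetical camera pose latent
planes = scene_decoder(z_scene)
pose = camera_pose_decoder(z_pose, t=0.5)
rgb, density = radiance_field((0.1, -0.2, 0.9), planes)
```

In the actual first stage, the parameters of all three modules and the per-scene latents would be optimized jointly; the sketch only shows the data flow between them.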

We present qualitative results for both unconditional and conditional generative modeling. During inference, we sample latents from the generative model and feed them through the decoder to obtain a radiance field and a camera path. In the conditional setting, we train the generative model on pairs of latents and conditioning variables (such as text or images) and, during inference, sample latents given the conditioning variables.
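To make the idea of sampling latents from a score-based model concrete, here is a toy sketch. It does not reproduce GAUDI's sampler, which this page does not describe. As a stand-in, it uses unadjusted Langevin dynamics, and it replaces the learned score network with the analytic score of an isotropic Gaussian; `gaussian_score`, `langevin_sample`, `target_mean`, and all step counts and sizes are hypothetical choices for illustration.

```python
import math
import random


def gaussian_score(z, mean, var):
    """Score (gradient of the log-density) of an isotropic Gaussian N(mean, var * I)."""
    return [-(zi - mi) / var for zi, mi in zip(z, mean)]


def langevin_sample(score_fn, dim, num_steps=200, step_size=0.1, rng=None):
    """Draw one latent with unadjusted Langevin dynamics driven by score_fn."""
    rng = rng or random.Random()
    z = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # start from standard normal noise
    for _ in range(num_steps):
        s = score_fn(z)
        # Drift along the score, then add Gaussian noise scaled by sqrt(step_size).
        z = [
            zi + 0.5 * step_size * si + math.sqrt(step_size) * rng.gauss(0.0, 1.0)
            for zi, si in zip(z, s)
        ]
    return z


rng = random.Random(0)
target_mean = [2.0, -1.0]  # hypothetical mean of the latent distribution


def score(z):
    # Stand-in for a trained score network.
    return gaussian_score(z, target_mean, 1.0)


latents = [langevin_sample(score, dim=2, rng=rng) for _ in range(500)]
```

Each sampled latent would then be passed through the decoder to obtain a radiance field and camera path. In a conditional variant, the score function would additionally take the conditioning variable as input.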

Random samples from the unconditional version of GAUDI for 4 different datasets: Vizdoom, Replica, VLN-CE and ARKITScenes.

Random samples from a text-conditional GAUDI model trained on VLN-CE.

Prompt: "go down the stairs"

Prompt: "go through the hallway"

Prompt: "go up the stairs"

Prompt: "walk into the kitchen"

Random samples from an image-conditional GAUDI model trained on VLN-CE.

Image prompt

Image prompt

Image prompt

Image prompt

We can linearly interpolate the latent representation of two scenes (leftmost and rightmost columns) and move the camera to explore the interpolated scene.
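The interpolation described above can be sketched as follows, assuming each scene latent is a flat list of floats of the same length. The function name `lerp_latents` and the example values are hypothetical; in practice each interpolated latent would be decoded into a radiance field before moving the camera through it.

```python
def lerp_latents(z_a, z_b, num_steps):
    """Return num_steps latents linearly spaced from z_a to z_b (endpoints included)."""
    if len(z_a) != len(z_b):
        raise ValueError("latents must have the same dimensionality")
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    path = []
    for i in range(num_steps):
        t = i / (num_steps - 1)  # interpolation weight, from 0.0 to 1.0
        path.append([(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)])
    return path


# Hypothetical two-dimensional latents for two scenes.
path = lerp_latents([0.0, 2.0], [1.0, 4.0], num_steps=5)
# path[0] is the first scene, path[-1] the second, path[2] the midpoint.
```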

@article{bautista2022gaudi,
  title={GAUDI: A Neural Architect for Immersive 3D Scene Generation},
  author={Miguel Angel Bautista and Pengsheng Guo and Samira Abnar and Walter Talbott and Alexander Toshev and Zhuoyuan Chen and Laurent Dinh and Shuangfei Zhai and Hanlin Goh and Daniel Ulbricht and Afshin Dehghan and Josh Susskind},
  journal={arXiv},
  year={2022}
}

The authors' copyright in the videos provided here is licensed under the CC-BY-NC license.

Source code will be made available in the coming weeks.

Check out recent related work on making radiance fields generalize to multiple objects/scenes: