This lab is provided as part of AWS Innovate Data Edition and has been adapted from an AWS Workshop. Click here to explore the full list of hands-on labs.

ℹ️ You will run this lab in your own AWS account and running this lab will incur some costs. Please follow directions at the end of the lab to remove resources to avoid future costs.

- Overview

- Architecture

- Pre-Requisites

- Setting up Amazon Kinesis Data Generator Tool

- Kinesis Analytics Pipeline Application setup

- Configure Output stream to a Destination

- Test E2E Architecture

- Cleanup

- Survey

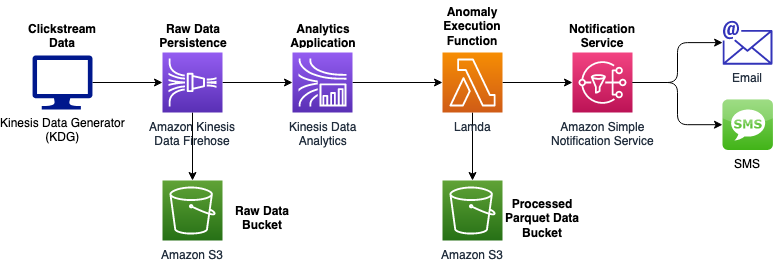

This lab shows you how to use Amazon Kinesis Data Analytics to perform real-time clickstream anomaly detection, using clickstream data as the example use case. Amazon Kinesis Data Analytics is a managed service that makes it easy to analyse streaming data, such as web log traffic, in real time to drive business decisions. The lab uses a few digital advertising concepts:

An impression (also known as a view-through) is when a user sees an advertisement. In practice, an impression occurs any time a user opens an app or website and an advertisement is visible.

A click (or engagement) is when a user clicks on an ad.

The click-through rate (CTR) is computed as Clicks / Impressions * 100 and is a measure of an ad's effectiveness. Digital marketers use the CTR to judge how an ad is performing, and they also want to spot anomalously low CTRs, which could be the result of a bad image or bidding model.
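As a quick check of the formula, here is a minimal Python sketch of the CTR calculation. The function name `ctr` is illustrative only and is not part of the lab.

```python
def ctr(clicks: int, impressions: int) -> float:
    """Return the click-through rate as a percentage: clicks / impressions * 100."""
    if impressions <= 0:
        # With no impressions the CTR is undefined, so refuse rather than divide by zero.
        raise ValueError("impressions must be positive")
    return clicks / impressions * 100
```

For example, `ctr(3, 150)` is 3 / 150 * 100, a CTR of 2%.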

This lab identifies anomalous data points within a data set using RANDOM_CUT_FOREST, an unsupervised learning algorithm that Kinesis Data Analytics provides as a SQL function.
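To build some intuition for how random cuts separate outliers, here is a deliberately simplified Python sketch for one-dimensional data, such as a series of CTR values. It is not the Kinesis Data Analytics implementation. Each tree repeatedly cuts the data at a uniformly random point within its current range, and a value's score depends on how few cuts it takes to isolate it. That is an isolation-depth score, closer to an isolation forest. RANDOM_CUT_FOREST works on streams and uses a different scoring rule. All function names and parameters are hypothetical.

```python
import random
from statistics import mean


def isolation_depth(point, sample, rng, max_depth=50):
    """Count the random cuts needed to isolate `point` within `sample`.

    Tracing a single path like this is equivalent to building one random tree
    on `sample` and following `point` down to its leaf.
    """
    current = list(sample)
    depth = 0
    while len(current) > 1 and depth < max_depth:
        lo, hi = min(current), max(current)
        if lo == hi:
            break  # only copies of the same value remain; no cut can split them
        cut = rng.uniform(lo, hi)
        # Keep only the side of the cut that contains the query point.
        if point <= cut:
            current = [x for x in current if x <= cut]
        else:
            current = [x for x in current if x > cut]
        depth += 1
    return depth


def anomaly_scores(values, num_trees=50, sample_size=64, seed=0):
    """Score each value; a higher score means it was isolated with fewer cuts."""
    rng = random.Random(seed)
    scores = []
    for v in values:
        depths = []
        for _ in range(num_trees):
            # Each tree sees a random subsample of the data plus the value being scored.
            sample = rng.sample(values, min(sample_size, len(values))) + [v]
            depths.append(isolation_depth(v, sample, rng))
        # Fewer cuts (shallower depth) maps to a higher anomaly score.
        scores.append(1.0 / (1.0 + mean(depths)))
    return scores


# Forty ordinary CTR readings around 20% and one suspiciously low reading.
ctr_values = [20.0 + 0.1 * (i % 10) for i in range(40)] + [2.0]
scores = anomaly_scores(ctr_values)
```

The low 2.0 reading is usually separated by the very first cut, so it should receive the highest score in `scores`. The ordinary readings need several cuts before they are isolated.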

The lab uses the Kinesis Data Generator (KDG) tool to generate data and send it to Amazon Kinesis. The tool provides a user-friendly UI that runs directly in your browser.

Duration - Approximately 2 hours

The CloudFormation template below deploys the lab prerequisites in your AWS account. Note:

- The stack is deployed to Asia Pacific (Sydney); change the region as required.

- Deploy the stack:

| Region | CloudFormation |
| --- | --- |
| Asia Pacific (Sydney) | Deploy stack |

- The CloudFormation stack takes the following parameters as input:

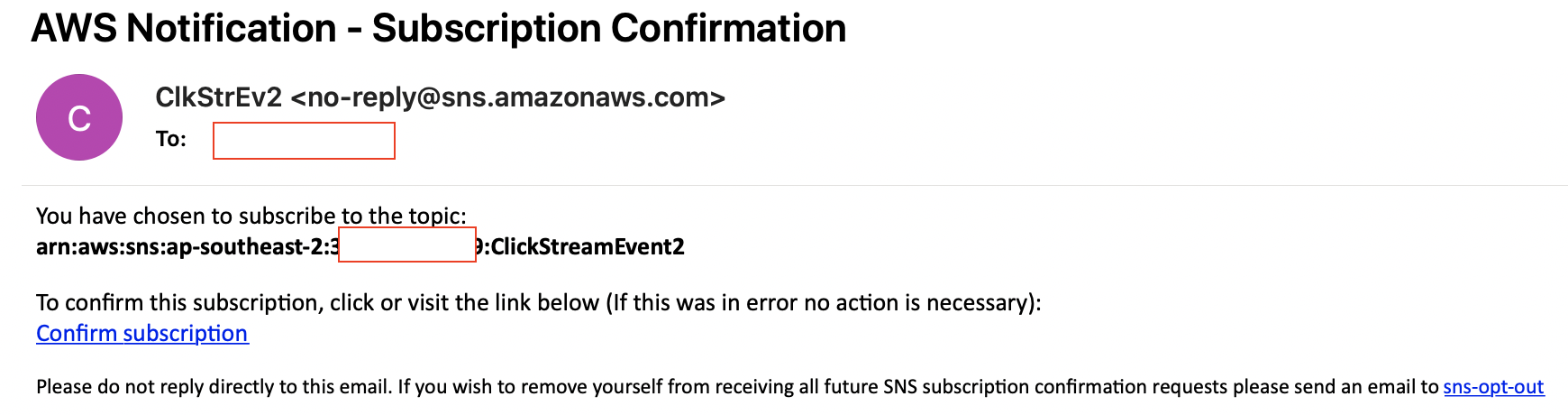

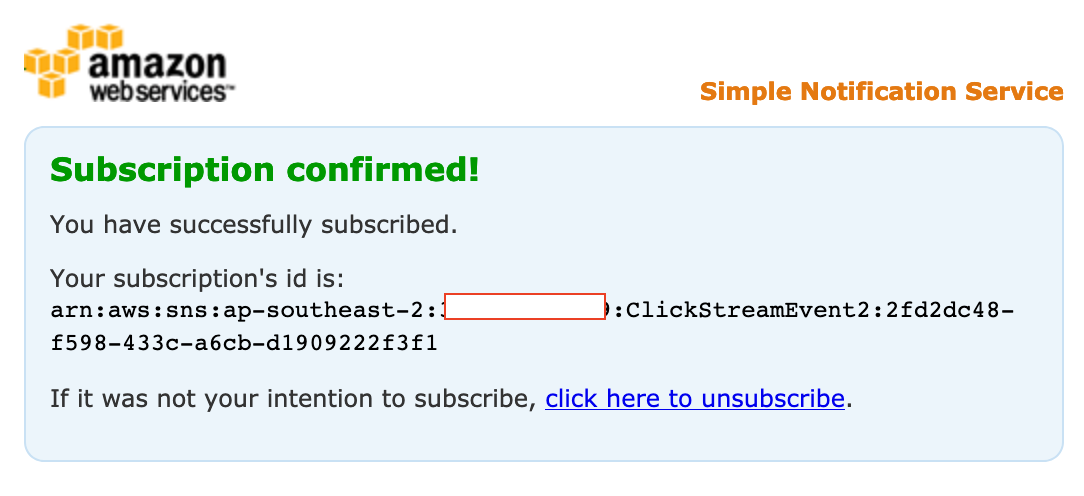

| Parameter | Description |
| --- | --- |
| Username | User name for the KDG tool. Enter kplsetup. This will be a user in Amazon Cognito. |
| Password | Password for the user in Amazon Cognito |
| Email | The email address to send anomaly events to |
| SMS | The mobile phone number to send anomaly events to |

- Acknowledge that AWS CloudFormation might create IAM resources.

-

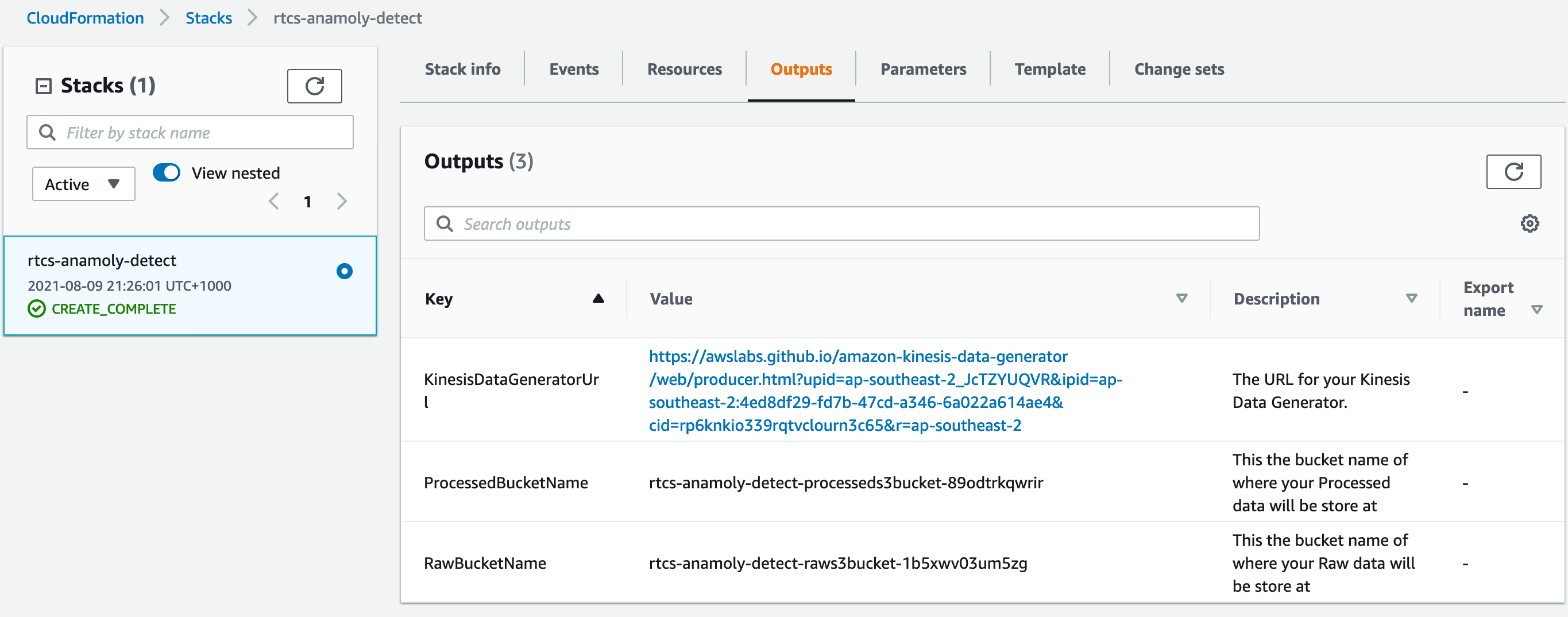

Once the stack is deployed, click the outputs tab to view more information.
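If you prefer to script the deployment, the stack parameters and the IAM acknowledgement map onto the `create_stack` call of the AWS SDK for Python (boto3). The sketch below only builds the request and reads the outputs. The stack name and template URL are placeholders. It assumes the template's parameter keys are exactly `Username`, `Password`, `Email` and `SMS`, as listed in the table. It also assumes `CAPABILITY_IAM` is sufficient; use `CAPABILITY_NAMED_IAM` if the template names its IAM resources. Confirm both against the template.

```python
def build_create_stack_request(stack_name, template_url, username, password, email, sms):
    """Build keyword arguments for boto3's cloudformation.create_stack()."""
    # Assumed parameter keys, taken from the parameter table in this lab.
    params = {"Username": username, "Password": password, "Email": email, "SMS": sms}
    return {
        "StackName": stack_name,
        "TemplateURL": template_url,
        "Parameters": [
            {"ParameterKey": key, "ParameterValue": value} for key, value in params.items()
        ],
        # The API equivalent of acknowledging that CloudFormation might create IAM resources.
        "Capabilities": ["CAPABILITY_IAM"],
    }


def stack_outputs(describe_stacks_response):
    """Turn a describe_stacks() response into a {OutputKey: OutputValue} dict."""
    outputs = describe_stacks_response["Stacks"][0].get("Outputs", [])
    return {o["OutputKey"]: o["OutputValue"] for o in outputs}


# Usage (requires AWS credentials; names and URL are placeholders):
# import boto3
# cfn = boto3.client("cloudformation", region_name="ap-southeast-2")
# cfn.create_stack(**build_create_stack_request("my-lab-stack", "<template URL>",
#                                               "kplsetup", "<password>", "<email>", "<phone>"))
# cfn.get_waiter("stack_create_complete").wait(StackName="my-lab-stack")
# print(stack_outputs(cfn.describe_stacks(StackName="my-lab-stack"))["KinesisDataGeneratorUrl"])
```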

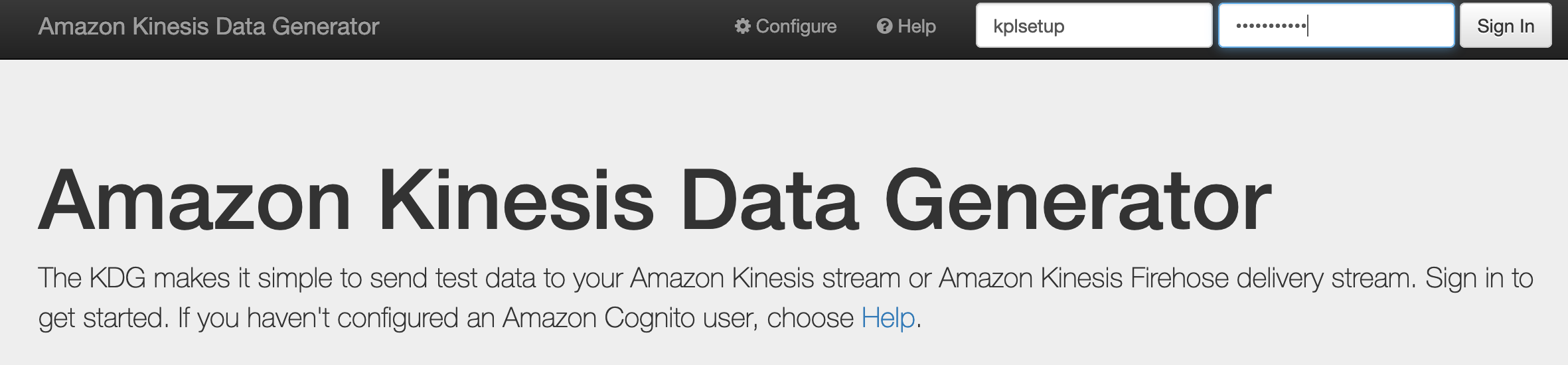

On the Outputs tab of the deployed CloudFormation stack, note the Kinesis Data Generator URL. Navigate to this URL and log in to the Amazon Kinesis Data Generator (KDG) tool. The KDG simplifies the task of generating data and sending it to Amazon Kinesis. With the KDG, you can do the following tasks:

- Create templates that represent records for specific use cases

- Populate the templates with fixed or random data

- Save the templates for future use

- Continuously send thousands of records per second to your Amazon Kinesis data stream or Kinesis Data Firehose delivery stream

- Click the KinesisDataGeneratorUrl link on the stack's Outputs tab.

- Sign in using the username and password you entered in the CloudFormation console.

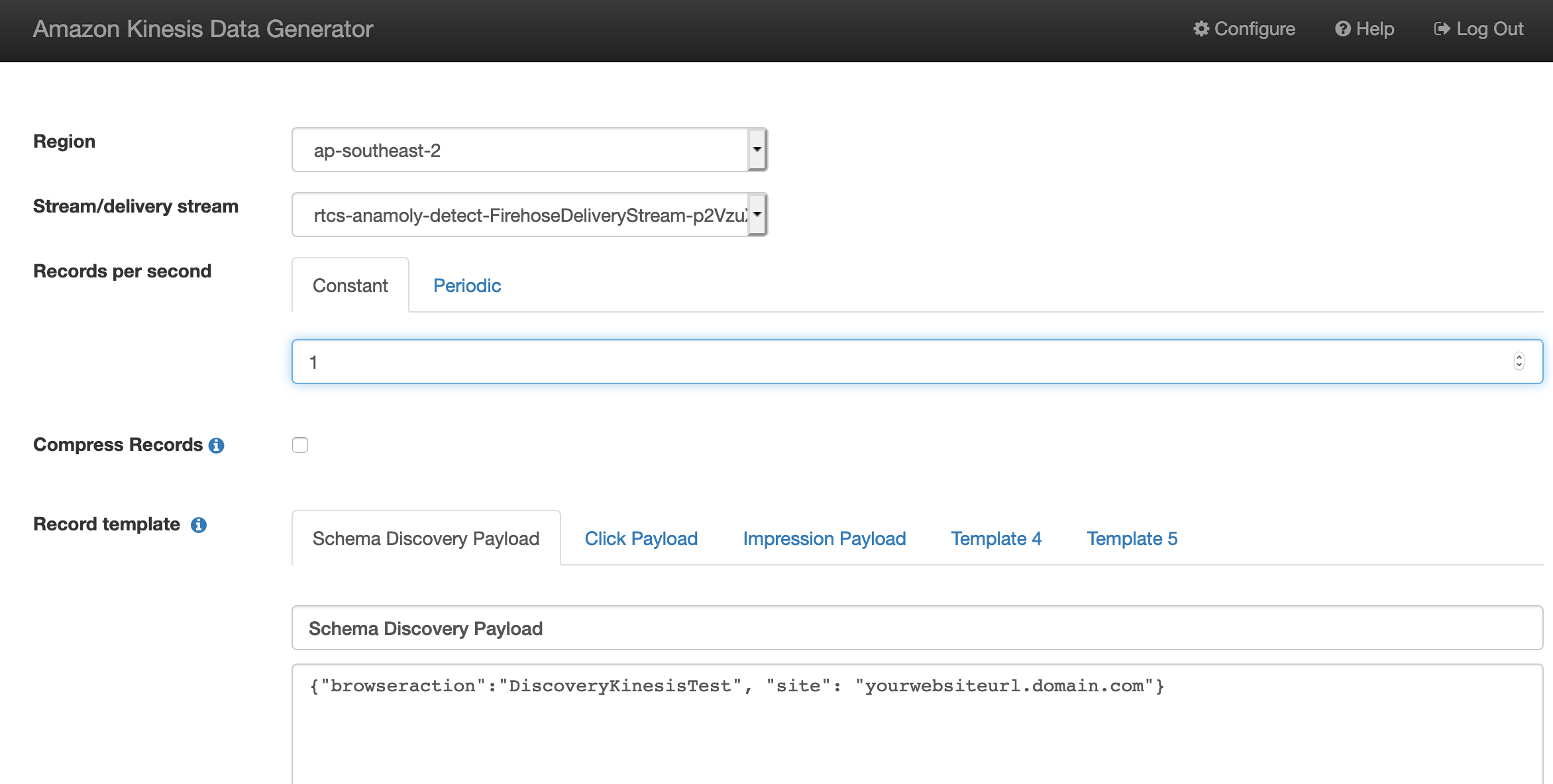

- After you sign in, you should see the KDG console. Select the region where the stack was deployed, e.g. ap-southeast-2.

- You need to set up some templates to mimic the clickstream web payload.

- Create the following templates in the KDG console, but do not click 'Send Data' yet:

| Records per second | Template name | Payload | Description |
| --- | --- | --- | --- |
| 1 | Schema Discovery Payload | {"browseraction":"DiscoveryKinesisTest", "site": "yourwebsiteurl.domain.com"} | Payload used for schema discovery |
| 1 | Click Payload | {"browseraction":"Click", "site": "yourwebsiteurl.domain.com"} | Payload used for clickstream data |
| 1 | Impression Payload | {"browseraction":"Impression", "site": "yourwebsiteurl.domain.com"} | Payload used for impression stream data |
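Each KDG template ends up as a record in the delivery stream. As a rough scripted equivalent (not how the KDG itself is implemented), the sketch below holds the three payloads from the table and sends one of them with the boto3 Firehose `put_record` call. The client is passed in so the function can be tried without AWS. The delivery stream name in the usage comment is a placeholder, and `TEMPLATES` and `send_template` are hypothetical names.

```python
import json

# Payloads from the template table above, keyed by template name.
TEMPLATES = {
    "Schema Discovery Payload": {"browseraction": "DiscoveryKinesisTest", "site": "yourwebsiteurl.domain.com"},
    "Click Payload": {"browseraction": "Click", "site": "yourwebsiteurl.domain.com"},
    "Impression Payload": {"browseraction": "Impression", "site": "yourwebsiteurl.domain.com"},
}


def send_template(firehose_client, delivery_stream_name, template_name):
    """Serialize one template payload as JSON and send it as a single Firehose record."""
    payload = json.dumps(TEMPLATES[template_name])
    return firehose_client.put_record(
        DeliveryStreamName=delivery_stream_name,
        Record={"Data": payload.encode("utf-8")},
    )


# Usage (requires AWS credentials):
# import boto3
# firehose = boto3.client("firehose", region_name="ap-southeast-2")
# send_template(firehose, "<stack-name>-FirehoseDeliveryStream-<random string>", "Click Payload")
```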

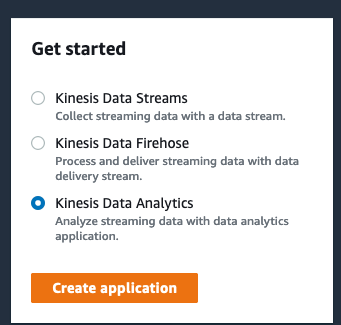

- Navigate to the Amazon Kinesis console

- On the Get Started portlet, select Kinesis Data Analytics, and click on Create Application.

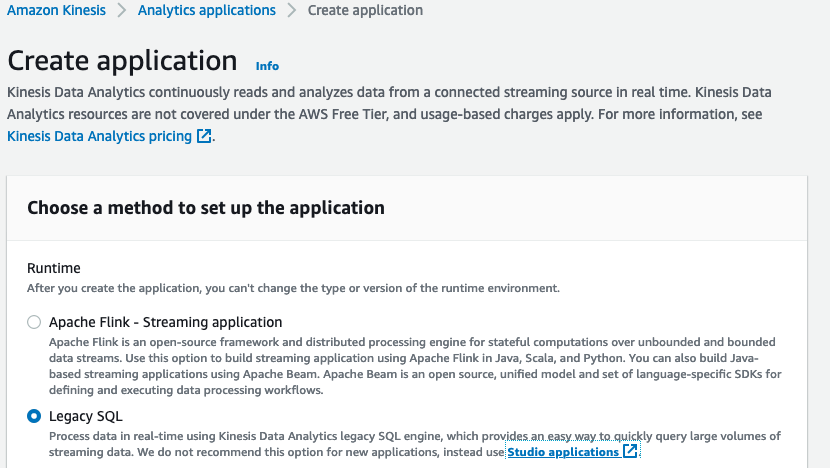

- On the Kinesis Data Analytics - Create application page:

-

Select Legacy SQL as the runtime and click on Create Application.

-

For application name, type

anomaly-detection-application. -

Type a description for the analytics application and click on Create Application

-

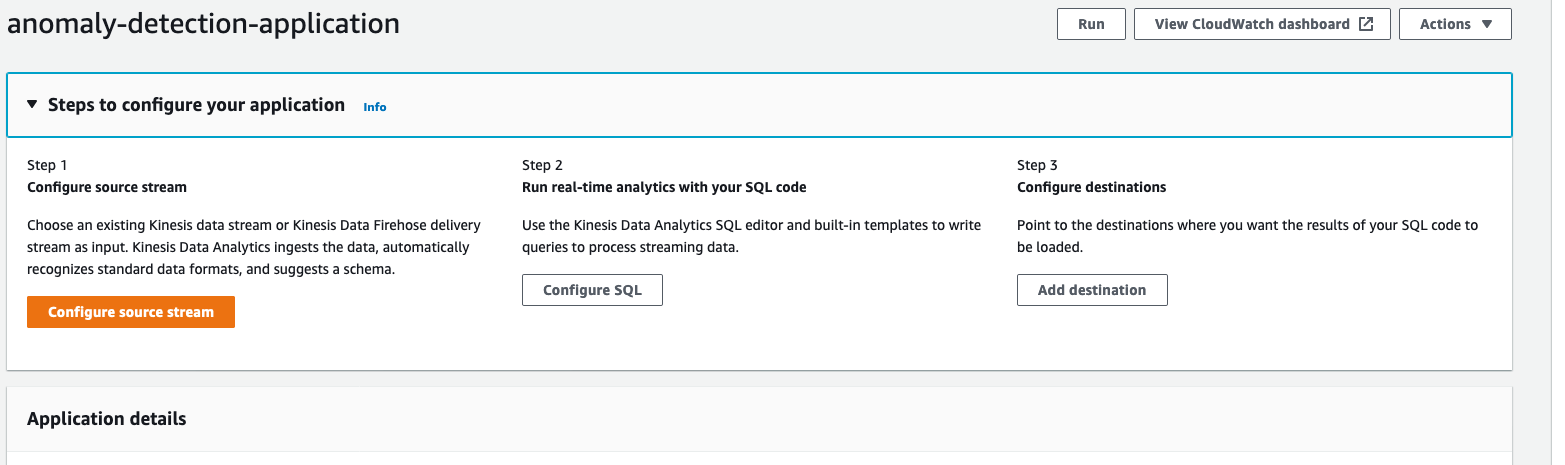

Once created, Open analytics application and expand the 'Steps to configure application' and click on 'Configure source stream'.

-

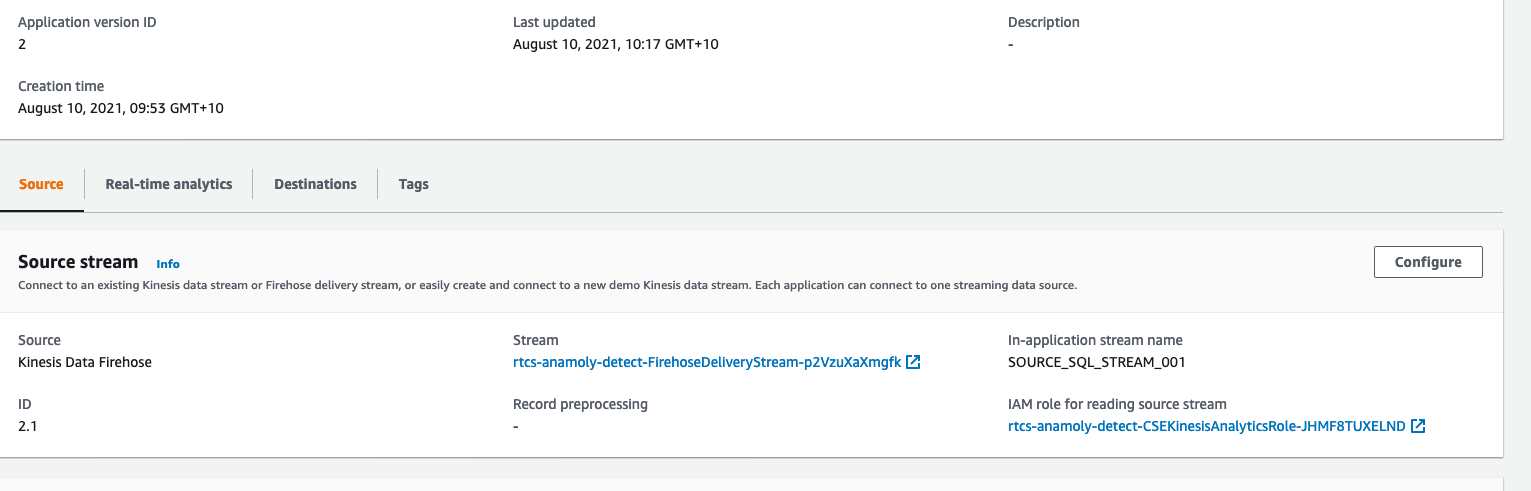

Select the Source as Kinesis Data Firehose delivery stream and select the Delivery Stream created by the CloudFormation template: {stack-name}-FirehoseDeliveryStream-{random string}

-

Select 'Off' for the Record preprocessing with AWS Lambda for this lab.

-

Select 'Choose from IAM roles that Kinesis Data Analytics can assume' and associate the following service role: {stack-name}-CSEKinesisAnalyticsRole-{random string}

-

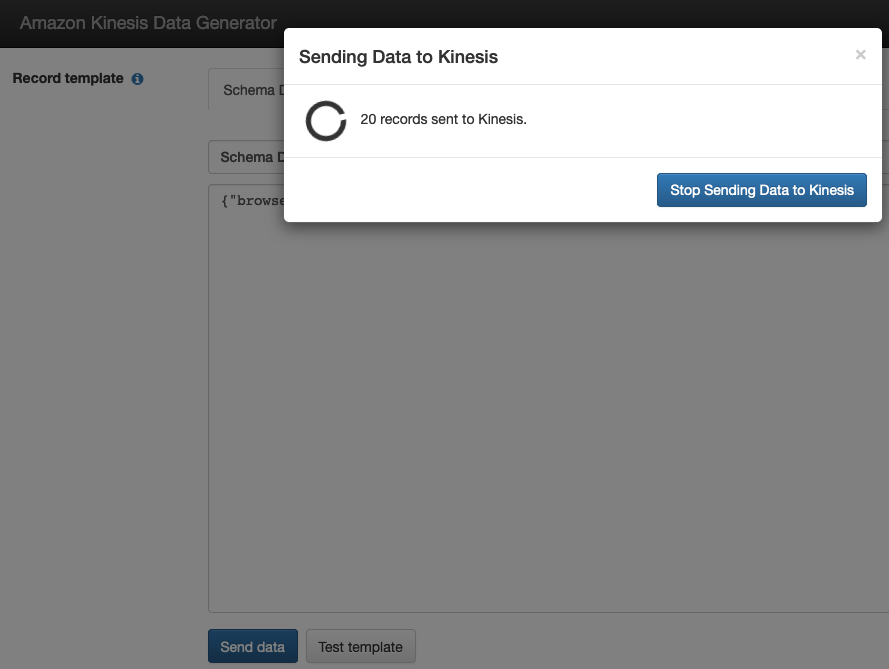

Do not Click on Discover Schema at this point. Open the KDG tool, and click on 'Send Data' for the 'Schema Discovery Payload' template. This will send the sample data to the Kinesis Data Firehose delivery stream. Make sure to login to the tool.

-

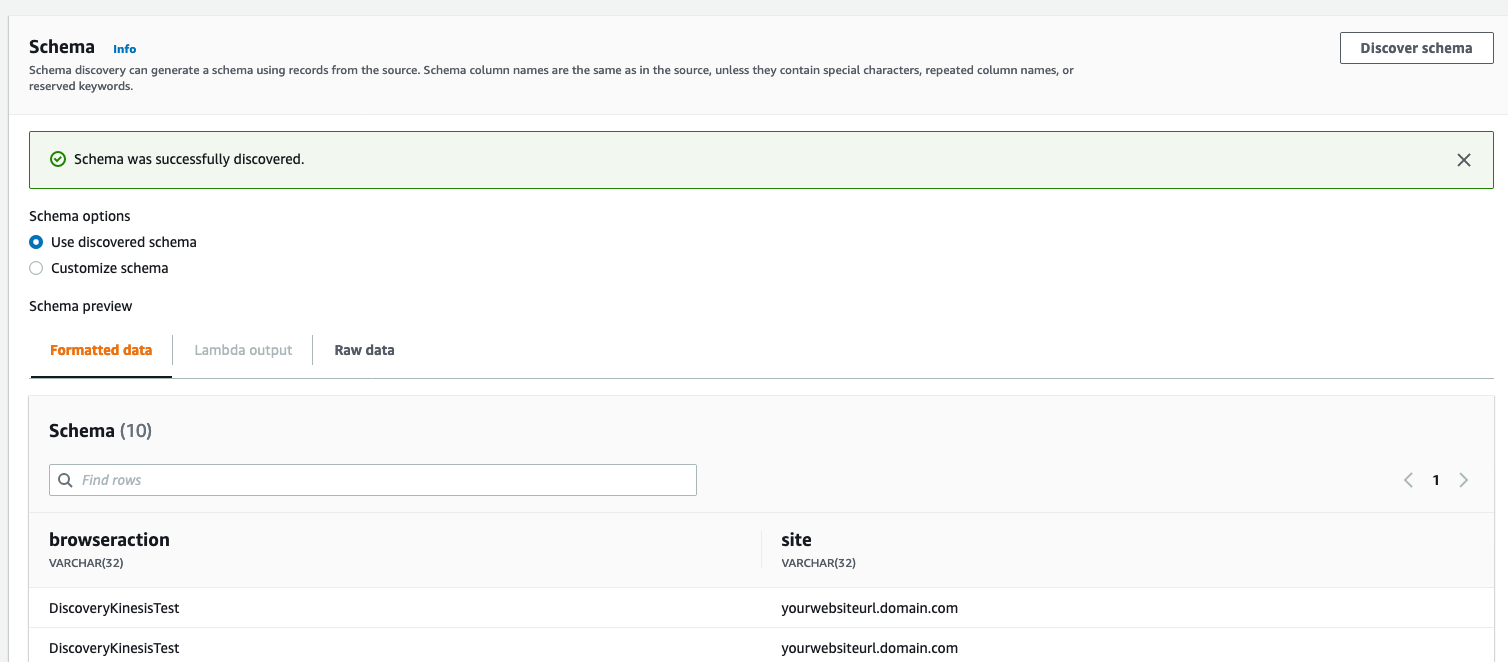

Now go back to the AWS console and click on Discover Schema to display the schema and the formatted data received.
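The Discover Schema button corresponds to the `DiscoverInputSchema` operation of the Kinesis Data Analytics (SQL) API, available as `discover_input_schema` on the boto3 `kinesisanalytics` client. Here is a minimal sketch. The delivery stream and role ARNs are placeholders, the client is passed in so it can be exercised without AWS, and reading from `NOW` (records arriving from now on) is an assumption about which data you want to sample.

```python
def discover_columns(kda_client, firehose_arn, role_arn):
    """Sample the delivery stream and return the inferred (column name, SQL type) pairs."""
    response = kda_client.discover_input_schema(
        ResourceARN=firehose_arn,
        RoleARN=role_arn,
        # Sample records arriving from now on, i.e. the KDG data you just started sending.
        InputStartingPositionConfiguration={"InputStartingPosition": "NOW"},
    )
    columns = response["InputSchema"]["RecordColumns"]
    return [(c["Name"], c["SqlType"]) for c in columns]


# Usage (requires AWS credentials; ARNs are placeholders):
# import boto3
# kda = boto3.client("kinesisanalytics", region_name="ap-southeast-2")
# print(discover_columns(kda, "<delivery stream ARN>", "<CSEKinesisAnalyticsRole ARN>"))
```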

-

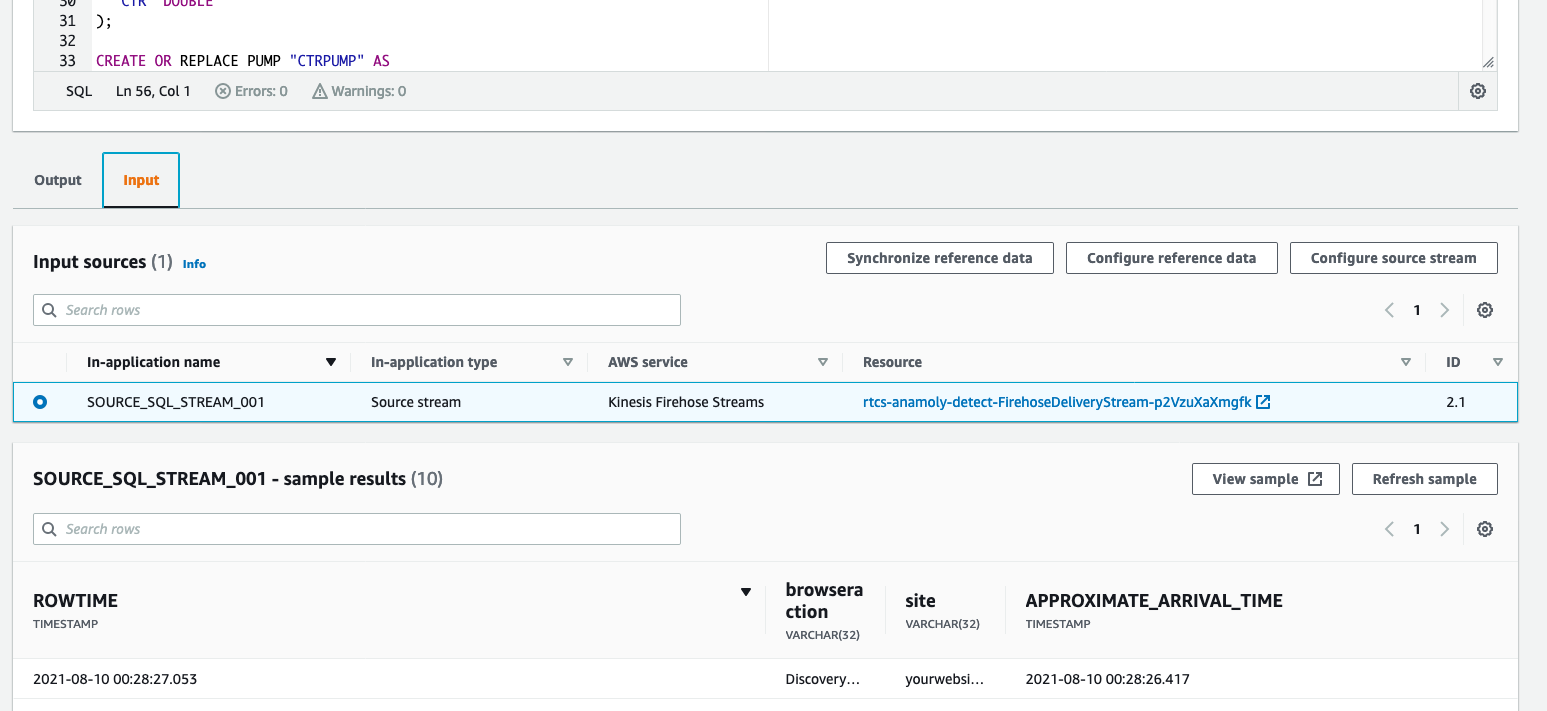

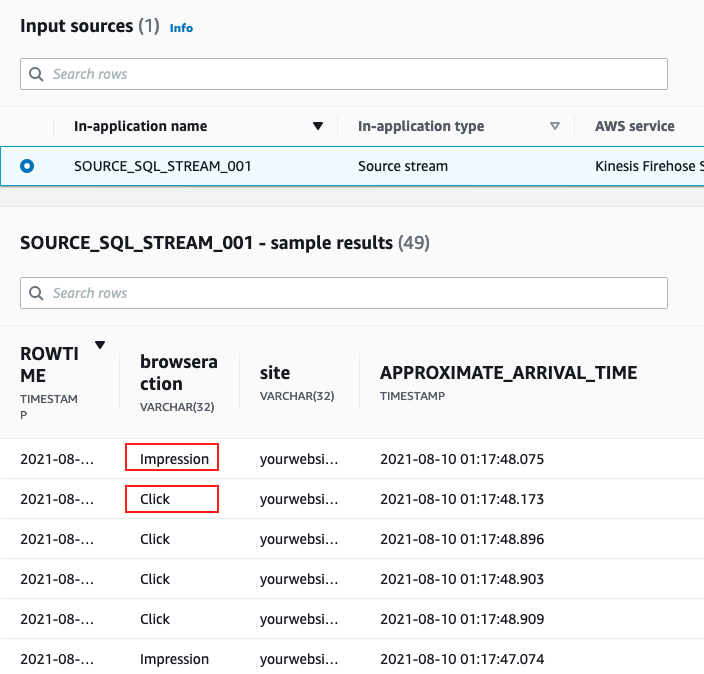

Leave the 'Use discovered schema' option selected for 'Schema options' and click on Save changes. The source stream should show as below:
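For reference, the source settings above correspond roughly to the `Input` structure accepted by the Kinesis Data Analytics (SQL) `AddApplicationInput` API (`add_application_input` in boto3). The sketch below only builds that structure, and the ARNs are placeholders. The column names, the `VARCHAR` sizes and the `SOURCE_SQL_STREAM` name prefix are assumptions, so compare them with what Discover Schema actually shows.

```python
def build_firehose_input(firehose_arn, role_arn):
    """Build the Input dict for kinesisanalytics.add_application_input()."""
    # Assumed columns for the KDG payload {"browseraction": ..., "site": ...}.
    columns = [
        {"Name": "browseraction", "Mapping": "$.browseraction", "SqlType": "VARCHAR(32)"},
        {"Name": "site", "Mapping": "$.site", "SqlType": "VARCHAR(64)"},
    ]
    # InputProcessingConfiguration is omitted: no Lambda preprocessing, matching 'Off'.
    return {
        # The in-application stream becomes SOURCE_SQL_STREAM_001.
        "NamePrefix": "SOURCE_SQL_STREAM",
        # The delivery stream, and the role Kinesis Data Analytics assumes to read it.
        "KinesisFirehoseInput": {"ResourceARN": firehose_arn, "RoleARN": role_arn},
        "InputSchema": {
            "RecordFormat": {
                "RecordFormatType": "JSON",
                "MappingParameters": {"JSONMappingParameters": {"RecordRowPath": "$"}},
            },
            "RecordEncoding": "UTF-8",
            "RecordColumns": columns,
        },
    }


# Usage (requires AWS credentials):
# import boto3
# kda = boto3.client("kinesisanalytics", region_name="ap-southeast-2")
# version = kda.describe_application(ApplicationName="anomaly-detection-application")[
#     "ApplicationDetail"]["ApplicationVersionId"]
# kda.add_application_input(ApplicationName="anomaly-detection-application",
#                           CurrentApplicationVersionId=version,
#                           Input=build_firehose_input("<delivery stream ARN>", "<role ARN>"))
```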

- Now navigate to the 'Real-time analytics' tab and click Configure.

-

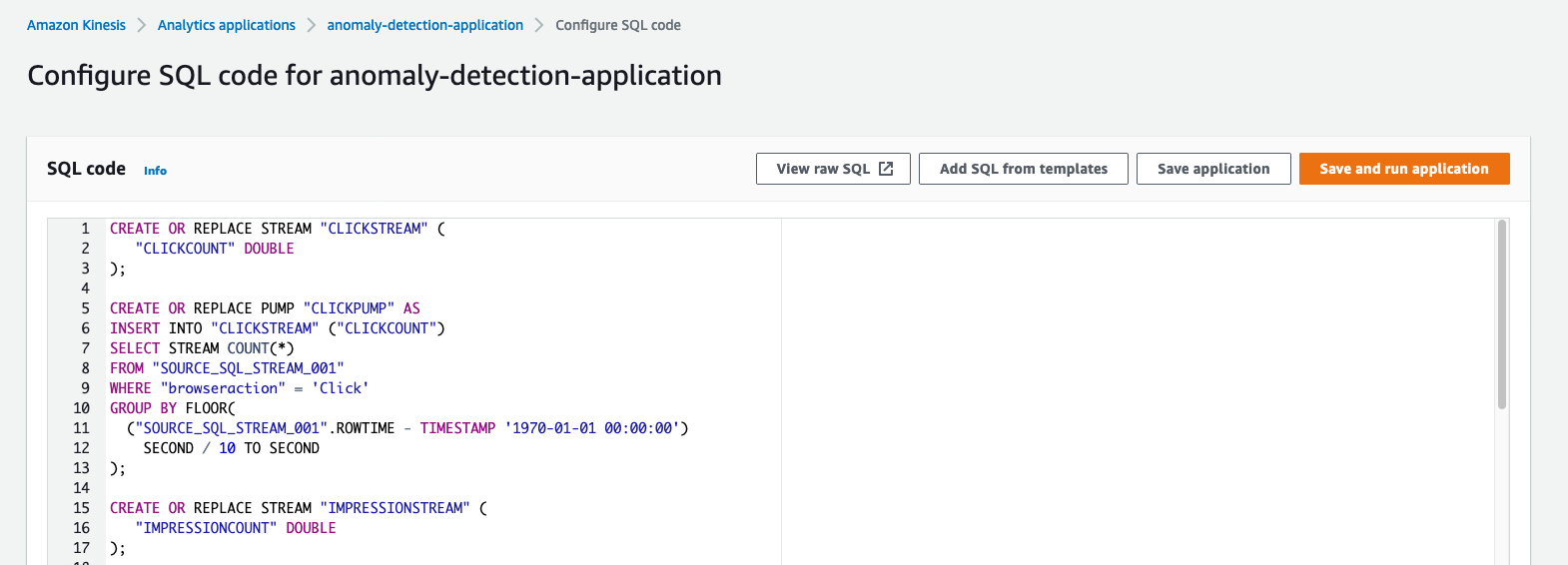

Replace the sql with the contents of the following file: anomaly_detection.sql and click on 'Save and run application'. This should take a minute to run.

-

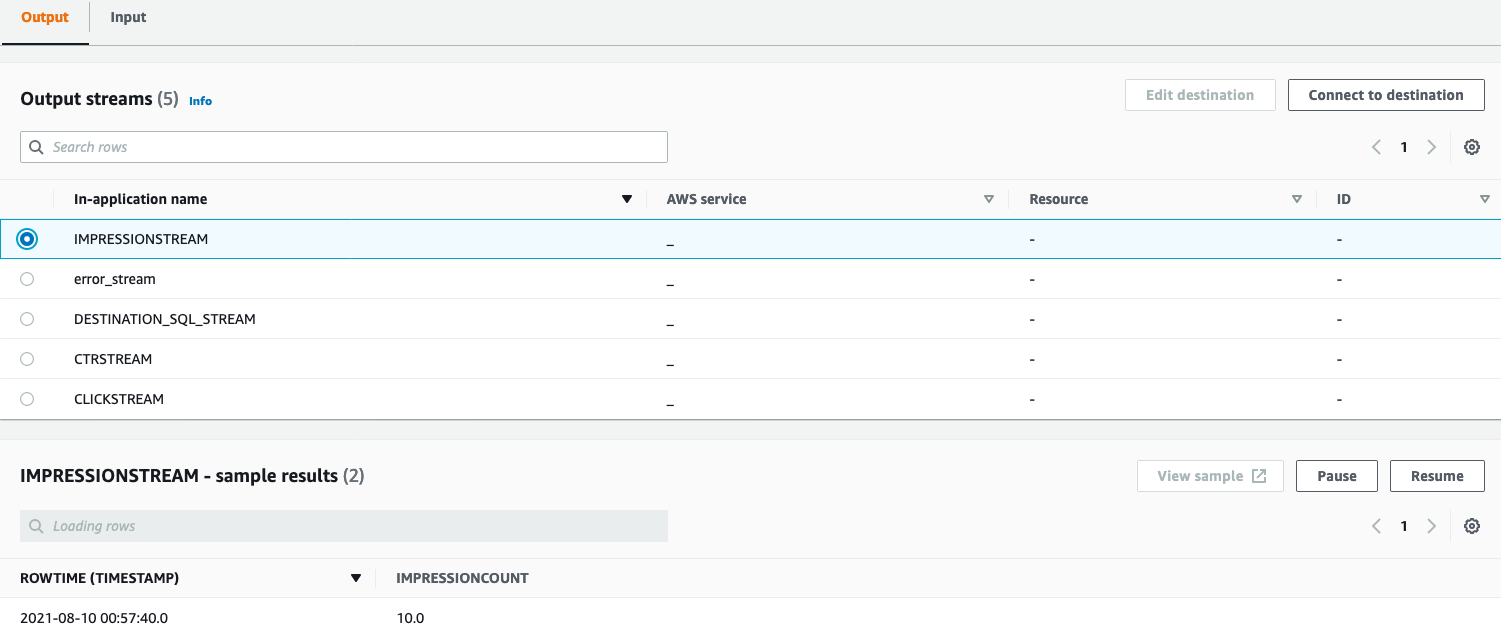

On the same page scroll down to view the Input and Output sub tab. The Input sub tab will list the source stream and the Output sub-tab will display the output streams created by the SQL run before.

At this point you have created:

- A Kinesis Data Analytics application schema for the clickstream and impression stream data, discovered from the 'Schema Discovery Payload' sent from the KDG tool

- An output stream schema, defined by anomaly_detection.sql, that converts the input stream to the output streams in real time.
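The contents of anomaly_detection.sql are not reproduced here, so the following is not its logic. It is a hypothetical Python sketch of the general idea behind combining the click and impression data. It counts clicks and impressions per fixed time window and computes a CTR for each window, which an anomaly detector such as RANDOM_CUT_FOREST could then score. The 10-second window and the `(timestamp, browseraction)` event format are assumptions.

```python
from collections import defaultdict


def ctr_per_window(events, window_seconds=10):
    """Group (timestamp_seconds, browseraction) events into tumbling windows and
    return {window_start: CTR percentage} for every window that has impressions."""
    clicks = defaultdict(int)
    impressions = defaultdict(int)
    for ts, action in events:
        window_start = int(ts // window_seconds) * window_seconds
        if action == "Click":
            clicks[window_start] += 1
        elif action == "Impression":
            impressions[window_start] += 1
        # Other actions (e.g. DiscoveryKinesisTest) are ignored.
    # CTR = Clicks / Impressions * 100; windows without impressions are skipped.
    return {w: clicks[w] / impressions[w] * 100 for w in sorted(impressions)}
```

For example, two impressions and one click in the first 10 seconds give a CTR of 50% for that window. One impression and three clicks in the next window give 300%, the kind of jump the detector is meant to flag.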

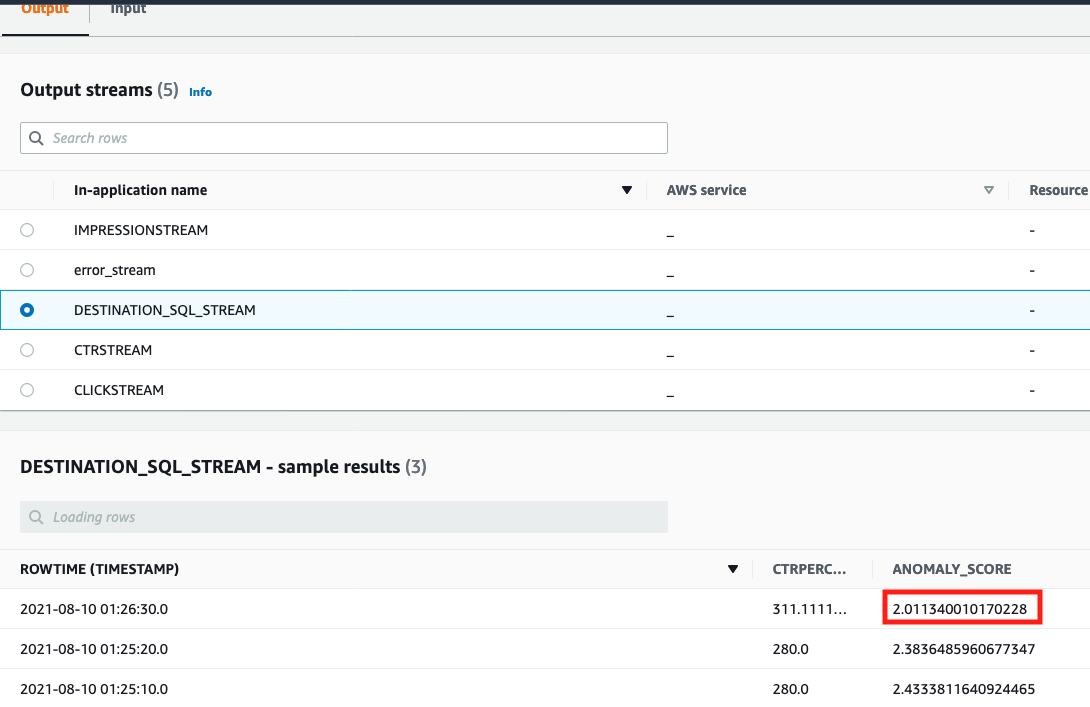

Note: The DESTINATION_SQL_STREAM uses the RANDOM_CUT_FOREST function to detect anomalies within the input data stream. You can read more about the function here.

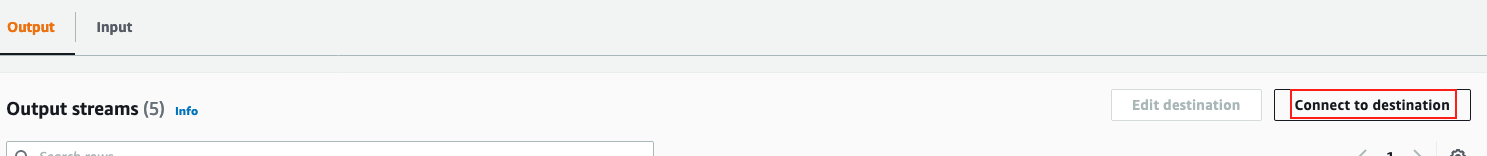

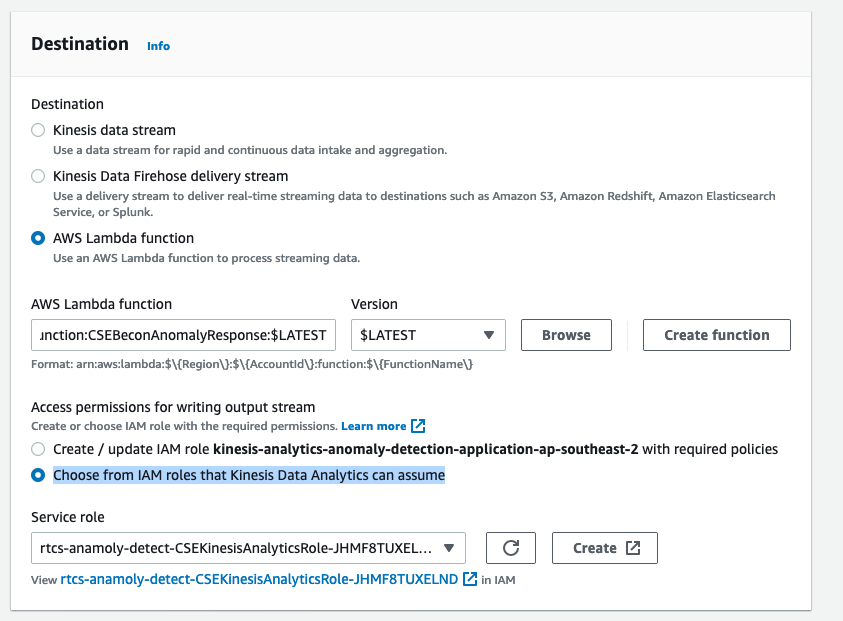

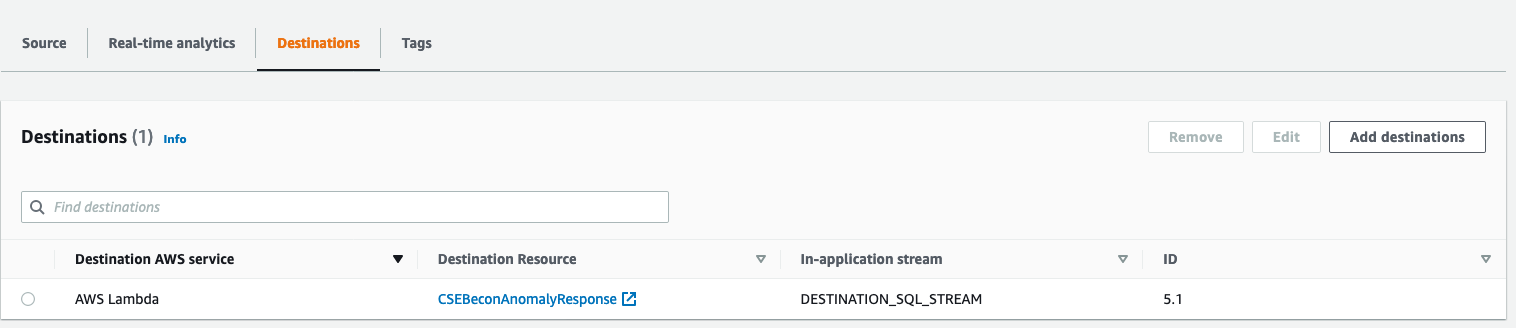

The DESTINATION_SQL_STREAM output stream contains records where anomalies have been found and need to be reported. In this section, you configure a new destination (an AWS Lambda function) that sends the anomalies to an Amazon SNS topic.

- Stop sending the data you started from the KDG tool in the previous step.

- In the Output sub-tab, click 'Connect to destination'.

- Select 'AWS Lambda function' as the destination and browse for the Lambda function CSEBeconAnomalyResponse created by the CloudFormation template, with the version set to $LATEST.

- For Access Permissions, select 'Choose from IAM roles that Kinesis Data Analytics can assume' and select {stack-name}-CSEKinesisAnalyticsRole-{random-string} as the service role.

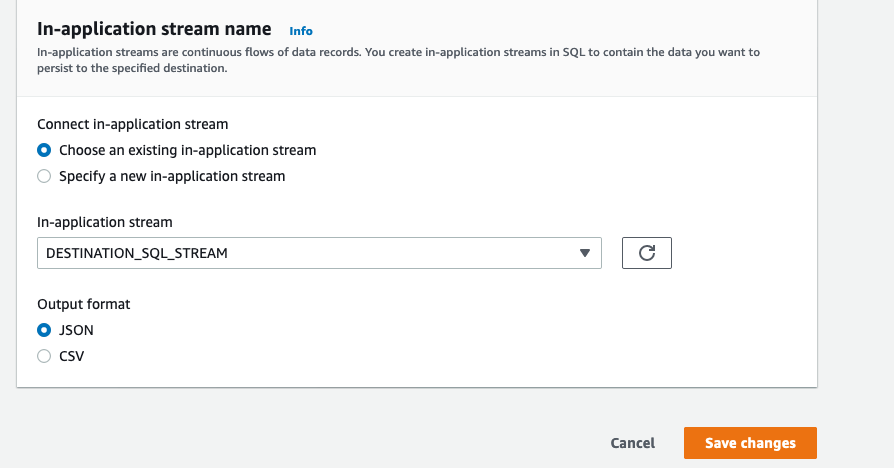

- For the in-application stream name, select 'Choose an existing in-application stream', select 'DESTINATION_SQL_STREAM' and leave the output format as JSON. Click Save changes.

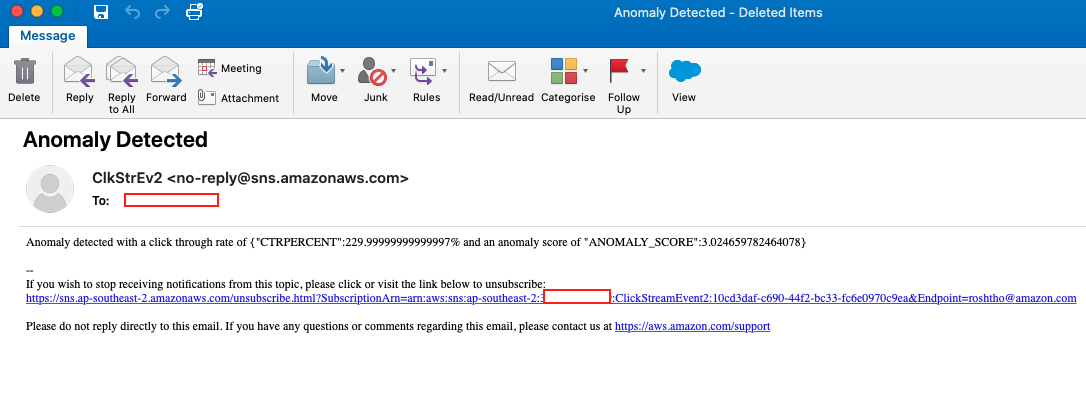
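The CSEBeconAnomalyResponse function is created by the CloudFormation template, and its code is not shown in this lab. The following is a minimal Python sketch of what a Kinesis Data Analytics Lambda destination generally looks like. It receives a batch of base64-encoded output records, forwards each one (here to an Amazon SNS topic), and returns a result for each `recordId`. The `TOPIC_ARN` environment variable, the message text and the `make_handler` factory are hypothetical. The publish function is injected so the logic can be tested locally.

```python
import base64
import json
import os


def make_handler(publish):
    """Build a Lambda handler that hands each anomaly record to `publish(message)`."""

    def handler(event, context):
        results = []
        for record in event["records"]:
            try:
                # Each record's data is a base64-encoded JSON row from DESTINATION_SQL_STREAM.
                row = json.loads(base64.b64decode(record["data"]))
                publish("Anomaly detected: " + json.dumps(row))
                results.append({"recordId": record["recordId"], "result": "Ok"})
            except Exception:
                # Report this record as undelivered instead of failing the whole batch.
                results.append({"recordId": record["recordId"], "result": "DeliveryFailed"})
        return {"records": results}

    return handler


def sns_publish(message):
    """Publish to the topic named in the hypothetical TOPIC_ARN environment variable."""
    import boto3  # bundled with the AWS Lambda Python runtime

    boto3.client("sns").publish(TopicArn=os.environ["TOPIC_ARN"], Message=message)


# Entry point to configure in Lambda (hypothetical name).
lambda_handler = make_handler(sns_publish)
```

Because SNS delivers to every subscriber of the topic, one publish call can reach both the email address and the phone number given as stack parameters.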

This section tests the end-to-end architecture. The KDG tool is configured to send impression data and multiple clickstreams to imitate an anomaly. The Kinesis Data Analytics application detects the anomalies and sends them to the Lambda function. The Lambda function then sends a notification to an SNS topic, which in turn sends an email and an SMS. Note: Stop sending the extra clickstream data after 20-30 seconds to prevent a large number of SMS messages being sent.

- To send the click and impression data, log in to the KDG tool in five browser tabs and send data to the Kinesis Data Firehose delivery stream as listed in the table below. Follow the notes for tabs 3, 4 and 5 to prevent excess notifications:

| Tab | Template | Records per second | Payload | Notes |
| --- | --- | --- | --- | --- |
| 1 | Impression | 1 | {"browseraction":"Impression", "site": "yourwebsiteurl.domain.com"} | Can leave running |
| 2 | Click | 1 | {"browseraction":"Click", "site": "yourwebsiteurl.domain.com"} | Can leave running |
| 3 | Click | 1 | {"browseraction":"Click", "site": "yourwebsiteurl.domain.com"} | Stop after 20-30 seconds to prevent a large number of emails and SMS messages from the SNS topic |
| 4 | Click | 1 | {"browseraction":"Click", "site": "yourwebsiteurl.domain.com"} | Stop after 20-30 seconds to prevent a large number of emails and SMS messages from the SNS topic |
| 5 | Click | 1 | {"browseraction":"Click", "site": "yourwebsiteurl.domain.com"} | Stop after 20-30 seconds to prevent a large number of emails and SMS messages from the SNS topic |
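To see why tabs 3 to 5 look anomalous, apply the CTR formula to the send rates in the table. With only tabs 1 and 2 running, there is one click per second for every impression. Tabs 3, 4 and 5 add three more clicks per second. A short Python check of that arithmetic (the function name `ctr_percent` is illustrative):

```python
def ctr_percent(clicks_per_second, impressions_per_second):
    """CTR = Clicks / Impressions * 100, using the per-second rates from the KDG tabs."""
    return clicks_per_second / impressions_per_second * 100


baseline = ctr_percent(clicks_per_second=1, impressions_per_second=1)  # tabs 1 and 2 only
spike = ctr_percent(clicks_per_second=1 + 3, impressions_per_second=1)  # tabs 3, 4 and 5 also sending
print(f"baseline CTR: {baseline:.0f}%, during the spike: {spike:.0f}%")
# prints: baseline CTR: 100%, during the spike: 400%
```

The absolute values are unrealistic for real ads. What matters for the demonstration is the sudden fourfold jump relative to the baseline.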

With the KDG tool sending data to Kinesis, the input data can be viewed in the AWS Console.

-

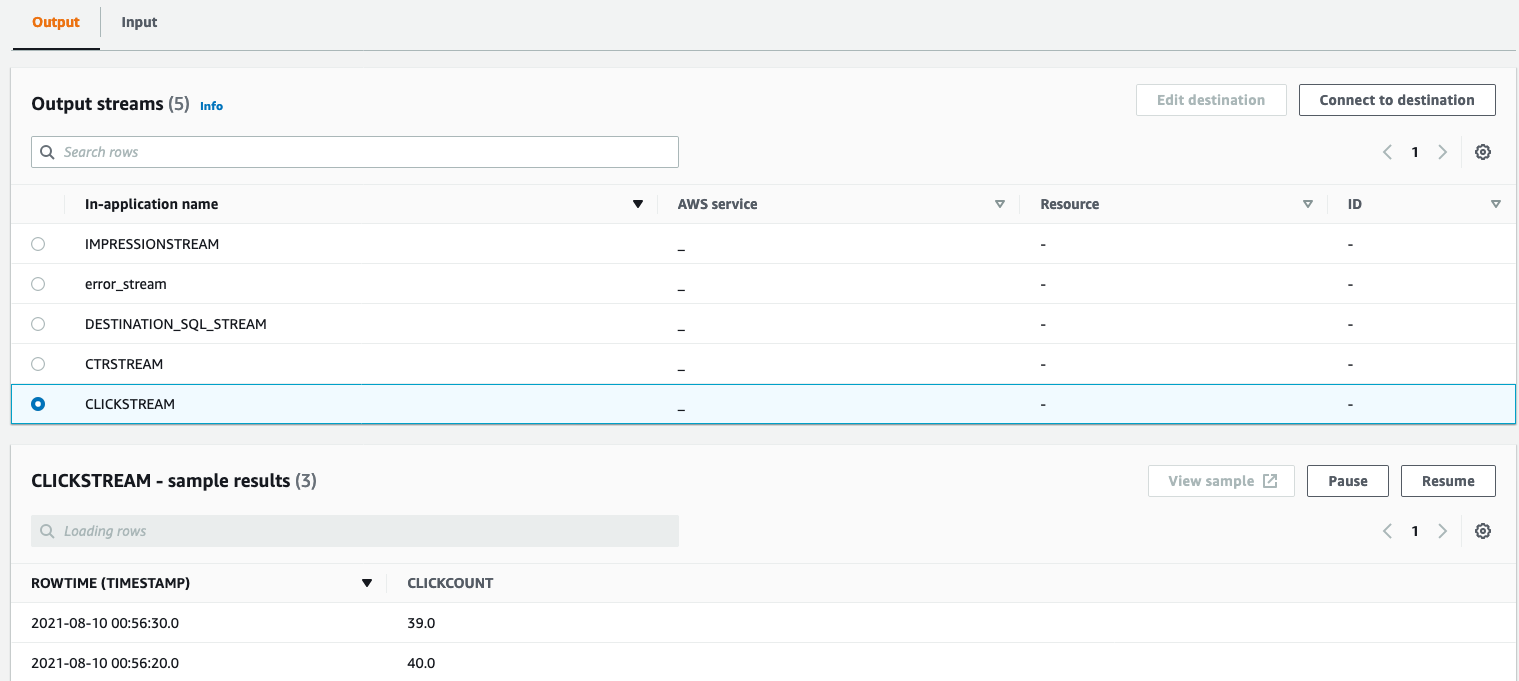

Similarly the data can be viewed in the output data stream. E.g. IMPRESSIONSTREAM, CLICKSTREAM. DESTINATION_SQL_STREAM.

An email and an SMS are sent for the detected anomalies. These anomalies come from the DESTINATION_SQL_STREAM stream, whose destination is the Lambda function.

Follow the steps below to clean up your account and prevent any additional charges:

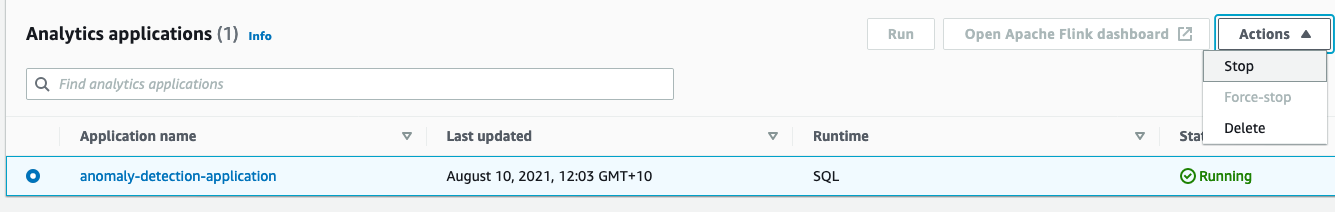

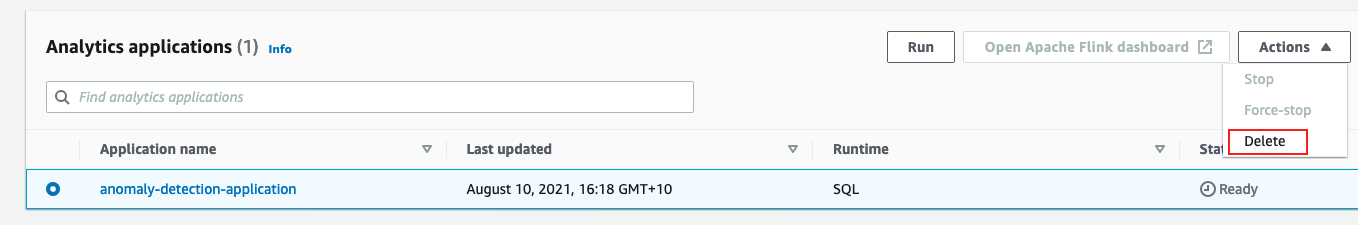

- Navigate to the Kinesis Data Analytics application.

- Select the 'anomaly-detection-application' application and click on Stop.

- Select the 'anomaly-detection-application' application and click on Delete.

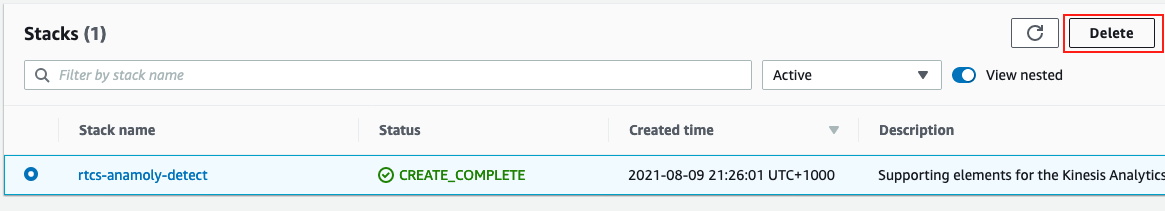

- Navigate to the CloudFormation console and find the stack that was deployed as part of the prerequisites.

- Select the stack and click Delete. This deletes all the resources that were created as part of the lab so that they stop incurring charges.
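The same cleanup can also be scripted. Here is a minimal sketch that assumes boto3 clients for `kinesisanalytics` (the SQL application API) and `cloudformation` are passed in. The stack name is whichever name you chose when deploying. The SQL API's `DeleteApplication` requires the application's creation timestamp, which the sketch reads with `DescribeApplication`. `DeleteApplication` is documented as halting a running application, so the sketch does not make a separate stop call.

```python
def cleanup(kda_client, cfn_client, stack_name, app_name="anomaly-detection-application"):
    """Delete the Kinesis Data Analytics (SQL) application, then the lab stack."""
    detail = kda_client.describe_application(ApplicationName=app_name)["ApplicationDetail"]
    # DeleteApplication needs CreateTimestamp and halts a running application itself,
    # so this sketch skips the separate Stop step used in the console.
    kda_client.delete_application(
        ApplicationName=app_name,
        CreateTimestamp=detail["CreateTimestamp"],
    )
    # Deleting the stack removes the resources the template created.
    cfn_client.delete_stack(StackName=stack_name)


# Usage (requires AWS credentials and the region you deployed to):
# import boto3
# cleanup(boto3.client("kinesisanalytics", region_name="ap-southeast-2"),
#         boto3.client("cloudformation", region_name="ap-southeast-2"),
#         stack_name="<your stack name>")
```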

Please provide your feedback here. Participants who complete the surveys from AWS Innovate Online Conference - Data Edition will receive a gift code for USD25 in AWS credits. AWS credits will be sent via email by 30 September, 2021.