Convert any image or PDF to Markdown text or a structured JSON document with very high accuracy, including tabular data, numbers, and math formulas.

The API is built with FastAPI and uses Celery for asynchronous task processing. Redis is used for caching OCR results.

- No cloud/external dependencies: everything you need, PyTorch-based OCR (Marker) plus Ollama, is shipped and configured via docker-compose, so no data is sent outside your dev/server environment

- PDF to Markdown conversion with very high accuracy using different OCR strategies, including marker, surya-ocr, or tesseract

- PDF to JSON conversion using Ollama-supported models (e.g. Llama 3.1)

- LLM-improved OCR results: Llama is quite good at fixing spelling and text issues in the OCR output

- PII removal: this tool can remove Personally Identifiable Information (PII) from PDFs; see the examples

- Distributed queue processing using [Celery]()

- Caching using Redis - the OCR results can be easily cached prior to LLM processing

- CLI tool for sending tasks and processing results
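To illustrate the caching idea in the feature list, here is a minimal sketch assuming OCR output is cached under a hash of the file contents, so LLM post-processing can be re-run without repeating OCR. The `cache_key` and `cached_ocr` helpers, the key format, and the plain dict standing in for Redis are all hypothetical; the project's actual cache keys are not documented here.

```python
import hashlib
from typing import Callable, Dict


def cache_key(file_bytes: bytes, strategy: str) -> str:
    """Build a cache key from the OCR strategy and a SHA-256 of the file (hypothetical format)."""
    digest = hashlib.sha256(file_bytes).hexdigest()
    return f"ocr:{strategy}:{digest}"


def cached_ocr(
    file_bytes: bytes,
    strategy: str,
    run_ocr: Callable[[bytes], str],
    cache: Dict[str, str],
) -> str:
    """Return cached OCR text if present; otherwise run OCR and store the result.

    `cache` is a plain dict standing in for Redis (an assumption for this sketch);
    a real deployment would call a Redis client's get/set instead.
    """
    key = cache_key(file_bytes, strategy)
    if key in cache:
        return cache[key]
    text = run_ocr(file_bytes)
    cache[key] = text
    return text


if __name__ == "__main__":
    calls = []

    def fake_ocr(data: bytes) -> str:
        # Stand-in for a real OCR strategy such as marker or tesseract.
        calls.append(data)
        return "# Extracted text"

    store: Dict[str, str] = {}
    pdf = b"%PDF-1.4 example"
    cached_ocr(pdf, "marker", fake_ocr, store)
    cached_ocr(pdf, "marker", fake_ocr, store)
    print(len(calls))  # the second call is served from the cache
```

Including the strategy in the key keeps results from different OCR engines apart, since marker and tesseract can produce different text for the same file.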

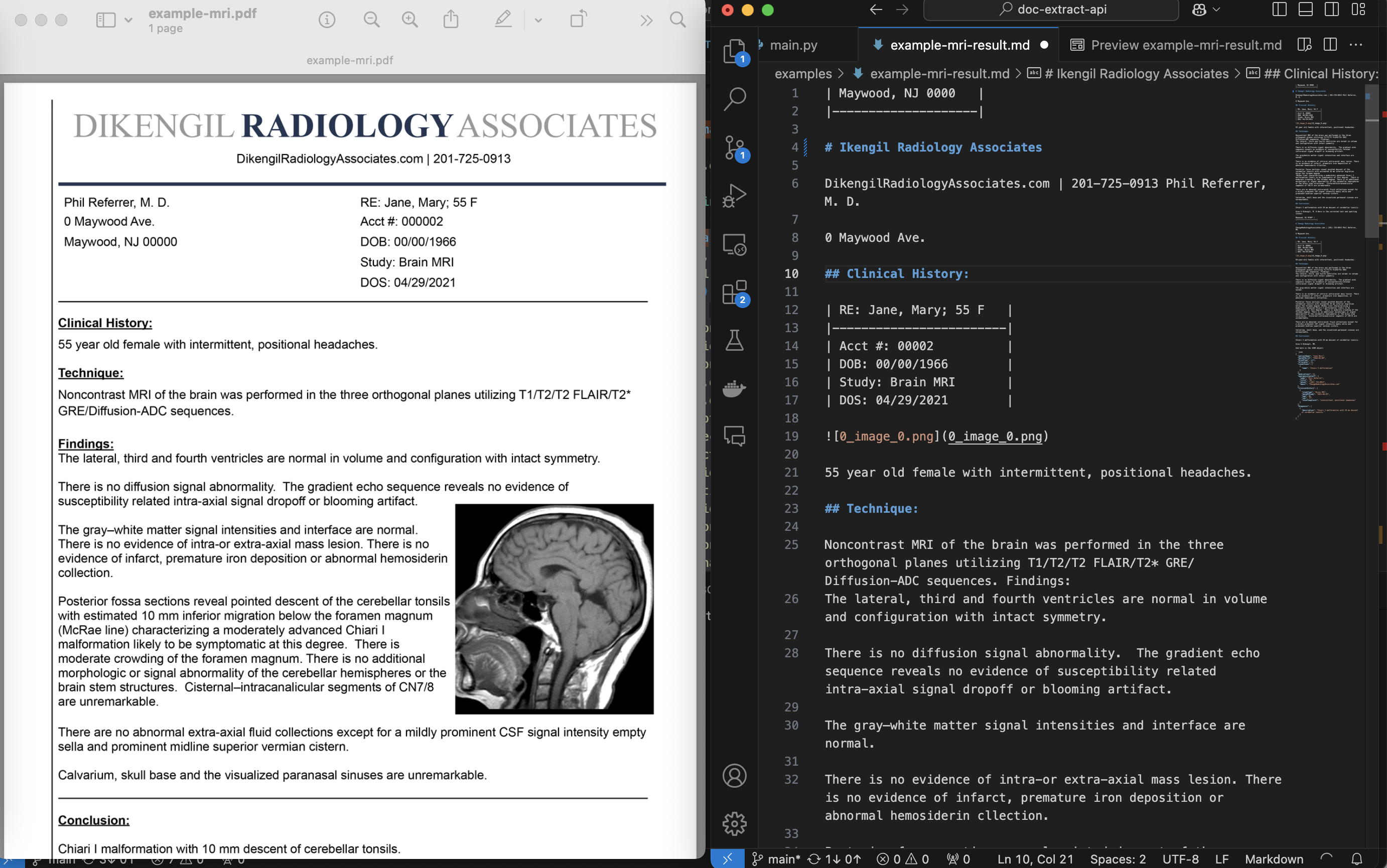

Converting an MRI report to Markdown + JSON.

`python client/cli.py ocr --file examples/example-mri.pdf --prompt_file examples/example-mri-2-json-prompt.txt`

Before running the example, see the getting-started steps below.

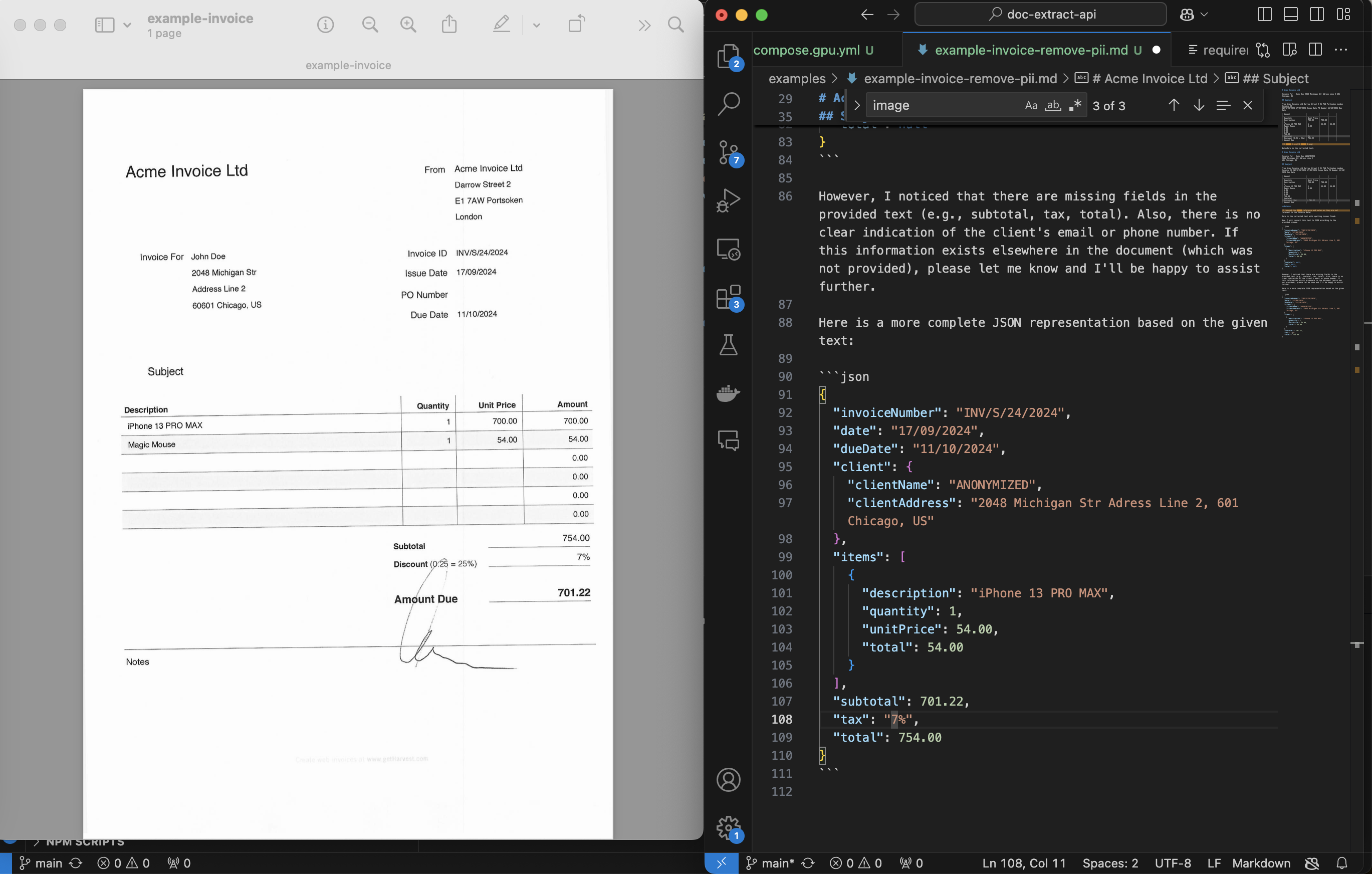

Converting an invoice to JSON and removing PII

`python client/cli.py ocr --file examples/example-invoice.pdf --prompt_file examples/example-invoice-remove-pii.txt`

Before running the example, see the getting-started steps below.

Note: As you may observe in the example above, marker-pdf sometimes mismatches columns and rows, which can significantly affect data accuracy. To address this, feature request #3 proposes adding alternative support for the tabled model, which is optimized for tables.
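One way to catch the row/column mismatches described above is to check that every row of a Markdown table in the OCR output has the same number of cells as its header row. The `find_inconsistent_rows` helper below is a hypothetical post-processing check, not part of pdf-extract-api, and it assumes simple pipe tables whose rows start with `|` and contain no escaped `|` characters.

```python
from typing import List, Tuple


def count_cells(row: str) -> int:
    """Count cells in a pipe-table row such as '| a | b |' (no escaped pipes assumed)."""
    stripped = row.strip()
    if stripped.startswith("|"):
        stripped = stripped[1:]
    if stripped.endswith("|"):
        stripped = stripped[:-1]
    return len(stripped.split("|"))


def find_inconsistent_rows(markdown: str) -> List[Tuple[int, int, int]]:
    """Return (line_number, expected_cells, actual_cells) for table rows whose
    cell count differs from the header row of their table."""
    problems = []
    expected = None  # header cell count of the current table; None outside tables
    for lineno, line in enumerate(markdown.splitlines(), start=1):
        if not line.strip().startswith("|"):
            expected = None  # any non-table line ends the current table
            continue
        cells = count_cells(line)
        if expected is None:
            expected = cells  # the first row of a table is treated as its header
        elif cells != expected:
            problems.append((lineno, expected, cells))
    return problems


if __name__ == "__main__":
    sample = "| Item | Qty | Price |\n|---|---|---|\n| Widget | 2 | 10.00 |\n| Gadget | 5 |\n"
    print(find_inconsistent_rows(sample))  # flags line 4: expected 3 cells, found 2
```

A check like this only flags structural damage; a table whose cells were shifted while keeping the same count per row would still pass.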

Requirements:

- Docker

- Docker Compose

Clone the repository:

`git clone https://github.com/CatchTheTornado/pdf-extract-api.git`

`cd pdf-extract-api`

Create a `.env` file in the root directory and set the necessary environment variables. You can use the `.env.example` file as a template:

`cp .env.example .env`

Then modify the variables inside the file:

    REDIS_CACHE_URL=redis://redis:6379/1
    OLLAMA_API_URL=http://ollama:11434/api

    # CLI settings
    OCR_URL=http://localhost:8000/ocr
    RESULT_URL=http://localhost:8000/ocr/result/{task_id}
    CLEAR_CACHE_URL=http://localhost:8000/ocr/clear_cache

Build and run the Docker containers using Docker Compose:

`docker-compose up --build`

Or, for GPU support, run:

`docker-compose -f docker-compose.gpu.yml up --build`

This will start the following services:

- FastAPI App: Runs the FastAPI application.

- Celery Worker: Processes asynchronous OCR tasks.

- Redis: Caches OCR results.

- Ollama: Runs the Ollama model.

If the on-premises setup is too much hassle, ask us about the hosted/cloud edition of pdf-extract-api: we can set it up for you, billed only for usage.

The project includes a CLI for interacting with the API. To make it work, first run:

`cd client`

`pip install -r requirements.txt`

You might want to try out different models supported by Ollama.

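Common CLI commands:

- Pull the llama3.1 model: `python client/cli.py llm_pull --model llama3.1`

- Upload a file for OCR (conversion to Markdown) with OCR caching enabled: `python client/cli.py ocr --file examples/example-mri.pdf --ocr_cache`

- Upload a file for OCR and LLM processing (here, PII removal): `python client/cli.py ocr --file examples/example-mri.pdf --ocr_cache --prompt_file=examples/example-mri-remove-pii.txt`

- Get the OCR result by task ID: `python client/cli.py result --task_id {your_task_id_from_upload_step}`

- Clear the OCR cache: `python client/cli.py clear_cache`

- Test the LLM directly: `python client/cli.py llm_generate --prompt "Your prompt here"`

The API exposes the following endpoints.

- URL: /ocr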

- Method: POST

- Parameters:

  - file: PDF file to be processed.

  - strategy: OCR strategy to use (`marker` or `tesseract`).

  - ocr_cache: Whether to cache the OCR result (`true` or `false`).

  - prompt: When provided, used by Ollama to process the OCR result.

  - model: When provided along with the prompt, this model is used for LLM processing.
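For callers who prefer Python over curl, a request with these form fields can be assembled using only the standard library. This is a minimal sketch assuming the field names listed above and a server at `http://localhost:8000`; `build_ocr_request` is a hypothetical helper, not part of the project's client, and the request is only built here, not sent.

```python
import urllib.request
import uuid
from typing import Dict


def build_ocr_request(
    pdf_bytes: bytes,
    filename: str,
    strategy: str = "marker",
    ocr_cache: bool = True,
    prompt: str = "",
    model: str = "",
    base_url: str = "http://localhost:8000",  # assumed local deployment
) -> urllib.request.Request:
    """Build a multipart/form-data POST to /ocr with the documented form fields."""
    boundary = uuid.uuid4().hex
    fields: Dict[str, str] = {
        "strategy": strategy,
        "ocr_cache": "true" if ocr_cache else "false",
        "prompt": prompt,
        "model": model,
    }
    parts = []
    # Plain text fields, one multipart section each.
    for name, value in fields.items():
        header = f'--{boundary}\r\nContent-Disposition: form-data; name="{name}"\r\n\r\n'
        parts.append(header.encode("utf-8") + value.encode("utf-8") + b"\r\n")
    # The PDF goes in the "file" field as binary content.
    file_header = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
        "Content-Type: application/pdf\r\n\r\n"
    )
    parts.append(file_header.encode("utf-8") + pdf_bytes + b"\r\n")
    parts.append(f"--{boundary}--\r\n".encode("utf-8"))  # closing boundary
    return urllib.request.Request(
        f"{base_url}/ocr",
        data=b"".join(parts),
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
        method="POST",
    )
```

To send it against a running instance, pass the result to `urllib.request.urlopen` and read the response body, which should contain the task ID used by the result endpoint.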

Example:

`curl -X POST -H "Content-Type: multipart/form-data" -F "file=@examples/example-mri.pdf" -F "strategy=marker" -F "ocr_cache=true" -F "prompt=" -F "model=" "http://localhost:8000/ocr"`

- URL: /ocr/result/{task_id}

- Method: GET

- Parameters:

  - task_id: Task ID returned by the OCR endpoint.
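Because OCR runs asynchronously in a Celery worker, a client typically polls this endpoint until the task finishes. A minimal sketch, assuming the `RESULT_URL` template from `.env` and a JSON response carrying a `state` field with Celery-style values (`SUCCESS`, `FAILURE`, and so on); that response shape and the `poll_result` helper are assumptions, so check the payload your deployment actually returns. The HTTP call is passed in as a function so the loop can be exercised offline.

```python
import json
import time
import urllib.request
from typing import Any, Callable, Dict

RESULT_URL = "http://localhost:8000/ocr/result/{task_id}"  # template from .env

# Celery's terminal ("ready") task states.
FINAL_STATES = ("SUCCESS", "FAILURE", "REVOKED")


def http_get_json(url: str) -> Dict[str, Any]:
    """Fetch a URL and decode its JSON body."""
    with urllib.request.urlopen(url) as resp:
        return json.loads(resp.read().decode("utf-8"))


def poll_result(
    task_id: str,
    fetch: Callable[[str], Dict[str, Any]] = http_get_json,
    interval: float = 2.0,
    timeout: float = 300.0,
) -> Dict[str, Any]:
    """Poll the result endpoint until the task reaches a final state or times out."""
    url = RESULT_URL.format(task_id=task_id)
    deadline = time.monotonic() + timeout
    while True:
        payload = fetch(url)
        # "state" is an assumed field name for the task status.
        if payload.get("state") in FINAL_STATES:
            return payload
        if time.monotonic() >= deadline:
            raise TimeoutError(f"task {task_id} did not finish within {timeout} s")
        time.sleep(interval)
```

A fixed polling interval keeps the sketch simple; for long documents an increasing interval would reduce load on the API.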

Example:

`curl -X GET "http://localhost:8000/ocr/result/{task_id}"`

- URL: /ocr/clear_cache

- Method: POST

Example:

`curl -X POST "http://localhost:8000/ocr/clear_cache"`

- URL: /llm_pull

- Method: POST

- Parameters:

  - model: The model to pull; pull the model you intend to use first.
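The LLM endpoints take small JSON bodies, so a single helper can build requests for both. A minimal sketch assuming the `/llm_pull` and `/llm_generate` paths from the URL lines in this section, the JSON keys used in the curl examples, and a server at `http://localhost:8000`; `BASE_URL` and `build_json_post` are hypothetical names, and the requests are built here rather than sent.

```python
import json
import urllib.request
from typing import Any, Dict

BASE_URL = "http://localhost:8000"  # assumed local deployment


def build_json_post(path: str, payload: Dict[str, Any]) -> urllib.request.Request:
    """Build a POST request with a JSON body for one of the LLM endpoints."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        BASE_URL + path,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Pull the model first; afterwards it can be queried for generation.
pull_req = build_json_post("/llm_pull", {"model": "llama3.1"})
generate_req = build_json_post(
    "/llm_generate", {"prompt": "Your prompt here", "model": "llama3.1"}
)
# To send one against a running instance: urllib.request.urlopen(pull_req)
```

Pulling a model can take a while on first use, so a generous timeout on `urlopen` is advisable for the pull request.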

Example:

`curl -X POST "http://localhost:8000/llm_pull" -H "Content-Type: application/json" -d '{"model": "llama3.1"}'`

- URL: /llm_generate

- Method: POST

- Parameters:

  - prompt: Prompt for the Ollama model.

  - model: Model you would like to query.

Example:

`curl -X POST "http://localhost:8000/llm_generate" -H "Content-Type: application/json" -d '{"prompt": "Your prompt here", "model": "llama3.1"}'`

This project is licensed under the GNU General Public License. See the LICENSE file for details.

Important note on the marker license:

The weights for the models are licensed cc-by-nc-sa-4.0, but Marker's author will waive that for any organization under $5M USD in gross revenue in the most recent 12-month period AND under $5M in lifetime VC/angel funding raised. You also must not be competitive with the Datalab API. If you want to remove the GPL license requirements (dual-license) and/or use the weights commercially over the revenue limit, check out the options here.

If you have any questions, please contact us at: info@catchthetornado.com