This example shows how to create a simple custom MuJoCo model and train a reinforcement learning agent on it, using the Gymnasium environment interface and algorithms from Stable-Baselines3.

To reproduce the results, you will need the Python packages MuJoCo, Gymnasium, and Stable-Baselines3 at the versions listed in requirements.txt:

pip install -r requirements.txt

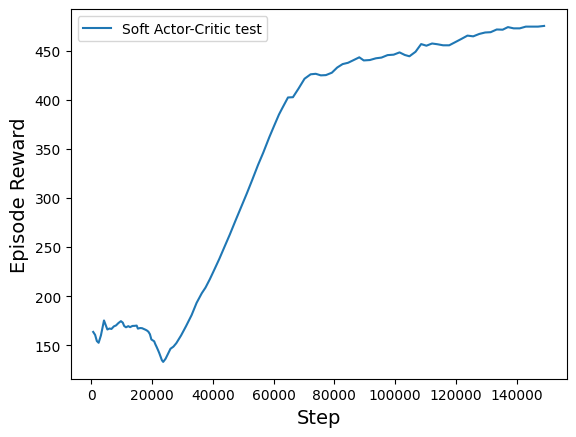

A simple ball-balancing environment is implemented as a starting point. The observation consists of 10 values: position and velocity for the platform's rotation about two axes and for the ball's motion along three axes. At each step the agent receives a reward of 1 until the ball falls off the platform or the episode ends (500 steps by default). The Soft Actor-Critic (SAC) algorithm learned an acceptable policy in 150,000 steps.
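The reward and episode-end rule described above can be sketched as a small standalone function. This is only an illustration under stated assumptions: the name `step_outcome`, the height-based "fell off" test, and the zero reward on the falling step are all hypothetical and are not taken from the repository's `BallBalanceEnv`.

```python
def step_outcome(ball_height, platform_height, step_index, max_steps=500):
    """Return (reward, terminated, truncated) for a single environment step.

    Assumptions (hypothetical, for illustration only):
    - the ball counts as "fallen" once its height drops below the platform;
    - the step on which the ball falls yields no reward;
    - step_index is zero-based, so the last allowed step is max_steps - 1.
    """
    # Episode terminates when the ball has fallen off the platform.
    terminated = ball_height < platform_height
    # Episode is truncated when the step limit is reached without a fall.
    truncated = (not terminated) and (step_index + 1 >= max_steps)
    # Reward of 1 for every step the ball is still on the platform.
    reward = 0.0 if terminated else 1.0
    return reward, terminated, truncated
```

Under these assumptions, an episode in which the ball never falls collects a total reward of 500 with the default step limit.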

- The model in MJCF format is located in the assets folder. Place the XML file of your own model there. Configure it with the necessary joints and actuators.

- Running model_viewer.py lets you test all actuators, and the physics of the model in general, in an interactive viewer. To do this, run the following command:

python model_viewer.py --path path/towards/your/xml/file

- Create your own environment class similar to BallBalanceEnv.

  - In the `__init__` method, replace the model path with your own, and insert your observation shape into `observation_space` (size of observation).

  - In the `step` method, define the reward for each step, as well as the condition for the end of the episode.

  - In the `reset_model` method, define what should happen at the beginning of each episode (when the model is reset). For example, initial states, adding noise, etc.

  - In the `_get_obs` method, return your observations, such as the velocities and coordinates of certain joints.
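As a rough sketch of what `reset_model` and `_get_obs` typically compute, the two helpers below add uniform noise to an initial state and pack positions and velocities into one observation vector. The helper names, the noise scale, and the five-position/five-velocity layout are assumptions made for illustration. If your class derives from Gymnasium's `MujocoEnv` (the method names above suggest that, but it is an assumption here), the inputs would come from `self.init_qpos`, `self.init_qvel`, `self.data.qpos`, and `self.data.qvel`, and the noisy state would be applied with `self.set_state(qpos, qvel)`.

```python
import numpy as np


def noisy_initial_state(init_qpos, init_qvel, rng, noise_scale=0.01):
    """Perturb the initial positions and velocities with small uniform noise.

    noise_scale is a hypothetical value; tune it for your own model.
    """
    qpos = init_qpos + rng.uniform(-noise_scale, noise_scale, size=init_qpos.shape)
    qvel = init_qvel + rng.uniform(-noise_scale, noise_scale, size=init_qvel.shape)
    return qpos, qvel


def build_observation(qpos, qvel):
    """Concatenate positions and velocities into one flat float32 vector."""
    return np.concatenate([np.asarray(qpos), np.asarray(qvel)]).astype(np.float32)


# Example with 5 positions and 5 velocities, matching the 10-value
# observation described for the ball-balancing environment.
rng = np.random.default_rng(0)
qpos, qvel = noisy_initial_state(np.zeros(5), np.zeros(5), rng)
obs = build_observation(qpos, qvel)
```

The length of `obs` is the number that has to match the shape declared in `observation_space`.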

- In the file learn.py, create an instance of your class (instead of BallBalanceEnv). You can then choose a different algorithm or use your own, because your environment now behaves like any other Gymnasium environment.

- In the file test.py, you can test your agent by specifying the path to the model saved after training. You can also create a GIF from frames (commented code) :)
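The training and testing steps above might look roughly like the sketch below. It is not the content of learn.py or test.py: the function names `train_sac` and `run_episode` and the default save path are hypothetical. The Stable-Baselines3 calls used (`SAC("MlpPolicy", env)`, `learn`, `save`, `predict`) are part of its public API, and the environment is assumed to follow the Gymnasium `reset`/`step` interface.

```python
def train_sac(env, total_timesteps=150_000, save_path="sac_model"):
    """Train a SAC agent on env and save it (save_path is a hypothetical name)."""
    # Imported inside the function so the evaluation helper below can be
    # used even where Stable-Baselines3 is not installed.
    from stable_baselines3 import SAC

    model = SAC("MlpPolicy", env, verbose=1)
    model.learn(total_timesteps=total_timesteps)
    model.save(save_path)
    return model


def run_episode(env, model, max_steps=500):
    """Run one episode with a trained model and return the total reward.

    env must follow the Gymnasium API: reset() returns (obs, info) and
    step(action) returns (obs, reward, terminated, truncated, info).
    model is any object with a Stable-Baselines3 style predict() method.
    """
    obs, _info = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        # deterministic=True uses the policy's mean action, without sampling.
        action, _state = model.predict(obs, deterministic=True)
        obs, reward, terminated, truncated, _info = env.step(action)
        total_reward += float(reward)
        if terminated or truncated:
            break
    return total_reward
```

A saved model can be restored with `SAC.load(path)` and passed to `run_episode` together with an instance of your environment class.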