
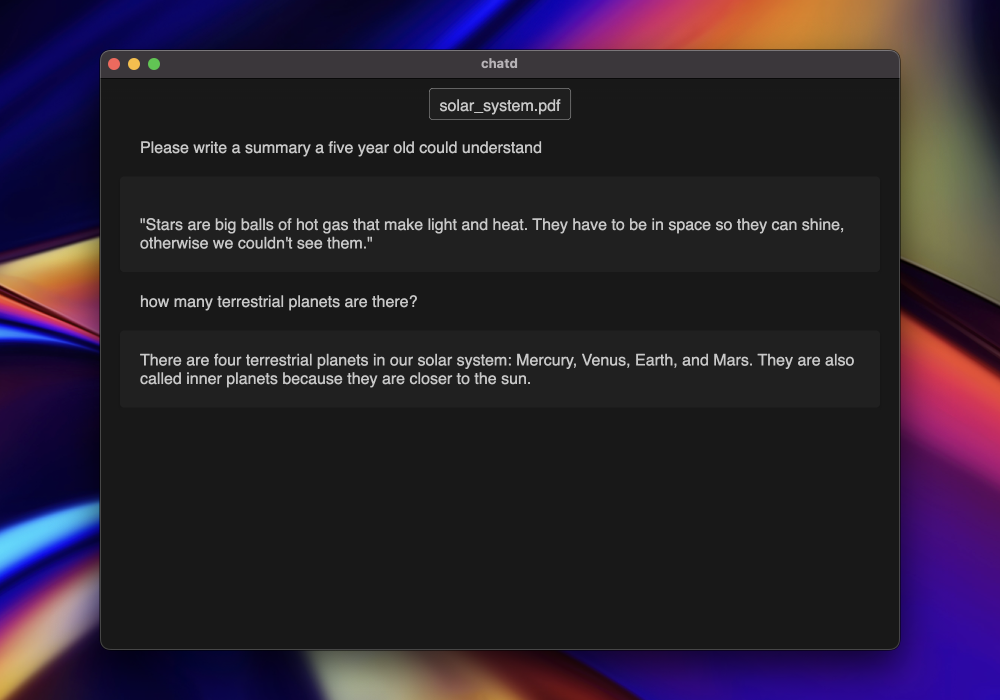

Chat with your documents using local AI. All your data stays on your computer and is never sent to the cloud. Chatd is a completely private and secure way to interact with your documents.

Chatd is a desktop application that lets you use a local large language model (Mistral-7B) to chat with your documents. What makes chatd different from other "chat with local documents" apps is that it comes with the local LLM runner packaged in. This means you don't need to install anything else to use chatd: just run the executable.

Chatd uses Ollama to run the LLM. Ollama is an LLM server that provides a cross-platform LLM runner API. If you already have an Ollama instance running locally, chatd will automatically use it. Otherwise, chatd will start an Ollama server for you and manage its lifecycle.
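
The reuse-or-start behavior described above can be sketched in shell as follows. This is not chatd's actual implementation; it assumes Ollama's default address `http://127.0.0.1:11434` and that `curl` and `ollama` are on the `PATH`. The names `OLLAMA_URL`, `ollama_is_running`, `ensure_ollama`, and `stop_ollama` are hypothetical.

```shell
#!/usr/bin/env sh
# Hypothetical sketch of a "reuse or start" policy for Ollama (not chatd's code).
# Assumes Ollama's default listen address; override with OLLAMA_URL.
OLLAMA_URL="${OLLAMA_URL:-http://127.0.0.1:11434}"
OLLAMA_PID=""  # set only when this script starts the server itself

# Exit status 0 if an HTTP server answers at the given URL within 2 seconds.
ollama_is_running() {
  curl --silent --fail --max-time 2 "${1:-$OLLAMA_URL}" > /dev/null 2>&1
}

# Reuse an already-running server, or start one in the background.
ensure_ollama() {
  if ollama_is_running "$OLLAMA_URL"; then
    echo "reusing existing Ollama server"
  else
    ollama serve > /dev/null 2>&1 &
    OLLAMA_PID=$!
    echo "started Ollama server (pid $OLLAMA_PID)"
  fi
}

# Lifecycle management: stop the server only if this script started it.
stop_ollama() {
  if [ -n "$OLLAMA_PID" ]; then
    kill "$OLLAMA_PID" 2> /dev/null
    OLLAMA_PID=""
  fi
}
```

A caller would run `ensure_ollama` at startup and register `trap stop_ollama EXIT`, so a server it started is shut down on exit while a pre-existing server is left running.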

- Download the latest release from chatd.ai or the releases page.
- Unzip the downloaded file.
- Run the `chatd` executable.

To run chatd from source, run the following commands in the root directory of the project:

```shell
npm install
npm run start
```

To package the app for macOS:

- Download the latest `ollama-darwin` release for macOS from here.
- Make the downloaded binary executable:

  ```shell
  chmod +x path/to/ollama-darwin
  ```

- Copy the `ollama-darwin` executable to the `chatd/src/service/ollama/runners` directory.
- Optional: The Electron app must be signed to run on macOS systems other than the one it was built on, which requires an Apple Developer certificate. To sign the app, set the following environment variables:

  ```shell
  APPLE_ID=your_apple_id@example.com
  APPLE_IDENTITY="Developer ID Application: Your Name (ABCDEF1234)"
  APPLE_ID_PASSWORD=your_apple_id_app_specific_password
  APPLE_TEAM_ID=ABCDEF1234
  ```

  You can find your Apple ID, Apple Team ID, and Developer ID Application identity in your Apple Developer account. You can create an app-specific password here.

- Run `npm run package` to package the app.
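
The file-handling steps above (making `ollama-darwin` executable and copying it into the runners directory) and a check of the four signing variables can be scripted roughly as below. This is a hypothetical helper, not part of chatd; the function names and the example download path are assumptions. In a shell session, variables reach child processes such as `npm run package` only when exported (for example `export APPLE_TEAM_ID=ABCDEF1234`), so the check inspects the environment rather than plain shell variables.

```shell
#!/usr/bin/env sh
# Hypothetical helpers for the macOS packaging steps; not part of chatd.

# chmod +x the downloaded binary and copy it into chatd's runners directory.
#   $1: path to the downloaded ollama-darwin
#   $2: runners directory (defaults to the path given in the steps above)
install_darwin_runner() {
  src="$1"
  runners_dir="${2:-chatd/src/service/ollama/runners}"
  chmod +x "$src" || return 1
  mkdir -p "$runners_dir" || return 1
  cp "$src" "$runners_dir/ollama-darwin"
}

# Print each signing variable that is missing from the environment;
# no output means all four are exported.
missing_signing_vars() {
  for var in APPLE_ID APPLE_IDENTITY APPLE_ID_PASSWORD APPLE_TEAM_ID; do
    if ! printenv "$var" > /dev/null; then
      echo "$var"
    fi
  done
}
```

From the directory that contains the `chatd` checkout, `install_darwin_runner ~/Downloads/ollama-darwin` would perform the chmod and copy steps (the download path is only an example). Any name that `missing_signing_vars` prints still needs to be exported before running `npm run package` if you want a signed build.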

To package the app for Windows:

- Build Ollama from source for Windows; this build supports CPU only. See here.
- Copy the `ollama.exe` executable to `chatd/src/service/ollama/runners/ollama.exe`.
- Run `npm run package` to package the app.

Note: The Windows app is not signed, so you will get a warning when you run it.

To package the app for Linux:

- Build Ollama from source for Linux x64 with CPU-only support, which keeps the packaged executable smaller. See here.
- Copy the `ollama` executable to `chatd/src/service/ollama/runners/ollama-linux`.
- Run `npm run package` to package the app.
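
Before running `npm run package` on any of the three platforms, a small pre-flight check can confirm that the runner file named in the steps above is in place. This sketch is hypothetical: mapping `uname -s` output to a platform (including the `MINGW*`, `MSYS*`, and `CYGWIN*` prefixes reported by Git Bash, MSYS2, and Cygwin on Windows) is an assumption, and `RUNNERS_DIR`, `runner_name`, and `check_runner` are illustrative names.

```shell
#!/usr/bin/env sh
# Hypothetical pre-flight check: is this platform's Ollama runner in place?
RUNNERS_DIR="${RUNNERS_DIR:-chatd/src/service/ollama/runners}"

# Map a `uname -s` value to the runner file name used in the steps above.
runner_name() {
  case "$1" in
    Darwin)               echo "ollama-darwin" ;;
    Linux)                echo "ollama-linux" ;;
    MINGW*|MSYS*|CYGWIN*) echo "ollama.exe" ;;
    *)                    return 1 ;;
  esac
}

# Succeed only if the runner for the given (or current) platform exists.
check_runner() {
  # Plain assignment keeps runner_name's exit status (`local x=$(...)` would not).
  name="$(runner_name "${1:-$(uname -s)}")" || { echo "unsupported platform" >&2; return 1; }
  if [ -f "$RUNNERS_DIR/$name" ]; then
    echo "found $RUNNERS_DIR/$name"
  else
    echo "missing $RUNNERS_DIR/$name" >&2
    return 1
  fi
}
```

Running `check_runner && npm run package` from the directory that contains the `chatd` checkout would stop early if the runner was not copied into place.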