This repository contains the DAG code used in the LLMOps: Automatic retrieval-augmented generation with Airflow, GPT-4 and Weaviate use case. The pipeline was modelled after the Ask Astro reference architecture.

The DAGs in this repository use the following tools:

This section explains how to run this repository with Airflow. Note that you will need to copy the contents of the `.env_example.txt` file to a newly created `.env` file and add your own credentials.

The following credentials are necessary to use this repository:

- Alpha Vantage API key: available for free at Alpha Vantage.

- OpenAI API key: see the OpenAI API documentation.
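Once you have these credentials, it can help to confirm that your `.env` file defines a non-empty value for every key listed in `.env_example.txt` before starting the project. The following is a minimal sketch, not part of the repository: the helpers `parse_env` and `missing_keys` are hypothetical, and the parser assumes simple `KEY=VALUE` lines (blank lines and `#` comments ignored) without quoting, `export` prefixes or multi-line values. It also cannot tell whether a value is still an unchanged placeholder.

```python
"""Hypothetical helper (not part of the repo): compare .env with .env_example.txt."""
from pathlib import Path


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments.

    Assumes no quoting, escaping, `export` prefixes or multi-line values.
    """
    values: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first "=" only, so values may themselves contain "=".
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def missing_keys(example_text: str, env_text: str) -> list[str]:
    """Return keys from the example file that are absent or empty in .env."""
    example = parse_env(example_text)
    env = parse_env(env_text)
    return sorted(key for key in example if not env.get(key))


if __name__ == "__main__":
    example_path, env_path = Path(".env_example.txt"), Path(".env")
    if example_path.exists() and env_path.exists():
        problems = missing_keys(example_path.read_text(), env_path.read_text())
        if problems:
            print("Missing or empty in .env:", ", ".join(problems))
        else:
            print(".env defines every key from .env_example.txt")
    else:
        print("Run this from the repository root after creating .env")
```

Run it from the root of your cloned repository; it only reads the two files and prints which keys still need a value.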

- Run

git clone https://github.com/astronomer/use-case-airflow-llm-rag-finance.giton your computer to create a local clone of this repository. - Install the Astro CLI by following the steps in the Astro CLI documentation. Docker Desktop/Docker Engine is a prerequisite, but you don't need in-depth Docker knowledge to run Airflow with the Astro CLI.

- Create the

.envfile with the contents from.env_example.txtplus your own credentials. - Run

astro dev startin your cloned repository. - After your Astro project has started. View the Airflow UI at

localhost:8080, the Weaviate endpoint atlocalhost:8081and the Streamlit app atlocalhost:8501. - In order to fill the local Weaviate instance with data, run the

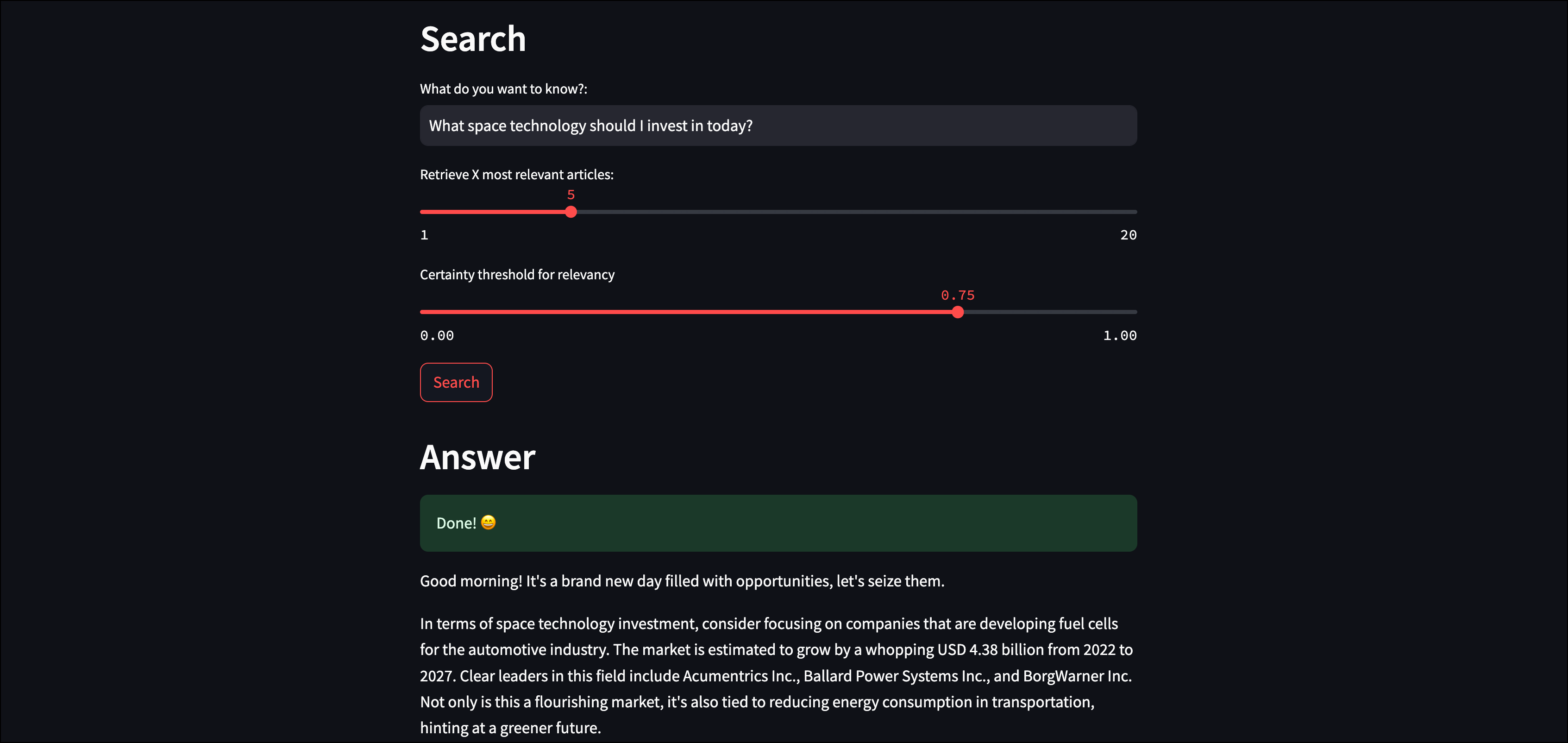

finbuddy_load_newsDAG to get the latest news articles or thefinbuddy_load_pre_embeddedDAG to load a set of preembedded (with ada-200) articles for quick development. - After the DAGrun has completed, ask a question about current financial developments in the Streamlit app at

localhost:8501.
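After `astro dev start` finishes, you can check that the three local services answer on the ports listed in the steps above. This is a minimal sketch using only the Python standard library; the `reachable` helper and the `ENDPOINTS` mapping are hypothetical. It treats any HTTP response, including error statuses, as a sign that a server is listening, so it does not verify that Airflow, Weaviate or Streamlit is actually healthy.

```python
"""Hypothetical check that the local Airflow, Weaviate and Streamlit ports respond."""
import http.client
import urllib.error
import urllib.request

# Ports taken from the steps above; adjust them if you changed the defaults.
ENDPOINTS = {
    "Airflow UI": "http://localhost:8080",
    "Weaviate": "http://localhost:8081",
    "Streamlit": "http://localhost:8501",
}


def reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if an HTTP server answers at url, whatever the status code."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server replied with an error status (e.g. 401 or 404), so it is up.
        # HTTPError must be caught before OSError, because it is a subclass.
        return True
    except (OSError, http.client.HTTPException):
        # Connection refused, timeouts and other URLErrors are all OSError
        # subclasses; HTTPException covers a non-HTTP reply on the port.
        return False


if __name__ == "__main__":
    for name, url in ENDPOINTS.items():
        status = "up" if reachable(url) else "not reachable"
        print(f"{name:<11} {url:<24} {status}")
```

If a service shows as not reachable right after startup, give the containers a little more time and run the check again.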

Note that if you switch between OpenAI and local embeddings, you need to run the `create_schema` DAG to delete the old schema and create a new one, because the two models produce embeddings of different dimensions.
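The toy sketch below illustrates why the schema has to be recreated. It mimics a vector collection whose dimension is fixed when it is created and which rejects vectors of any other length. It only illustrates the idea and is not Weaviate's API; the `ToyCollection` class is hypothetical. OpenAI's `text-embedding-ada-002` returns 1,536-dimensional vectors. The value 384 for the local model is an assumed example, because the real dimension depends on which local model you configure.

```python
"""Toy illustration (not Weaviate's API) of why mixing embedding sizes fails."""
from dataclasses import dataclass, field

ADA_002_DIM = 1536      # output size of OpenAI's text-embedding-ada-002
LOCAL_MODEL_DIM = 384   # hypothetical: depends on the local model you use


@dataclass
class ToyCollection:
    """Stores vectors of one fixed dimension, like a schema created for one model."""

    dim: int
    vectors: list[list[float]] = field(default_factory=list)

    def add(self, vector: list[float]) -> None:
        """Append a vector, rejecting any whose length differs from self.dim."""
        if len(vector) != self.dim:
            raise ValueError(
                f"vector has {len(vector)} dimensions, collection expects {self.dim}"
            )
        self.vectors.append(vector)


if __name__ == "__main__":
    collection = ToyCollection(dim=ADA_002_DIM)
    collection.add([0.0] * ADA_002_DIM)          # OpenAI-sized vector: accepted
    try:
        collection.add([0.0] * LOCAL_MODEL_DIM)  # local-model vector: rejected
    except ValueError as err:
        print("Rejected:", err)

    # Switching models means starting over with a collection of the new size,
    # which is the role re-running create_schema plays for the real schema.
    collection = ToyCollection(dim=LOCAL_MODEL_DIM)
    collection.add([0.0] * LOCAL_MODEL_DIM)
    print("New collection holds", len(collection.vectors), "vector(s)")
```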