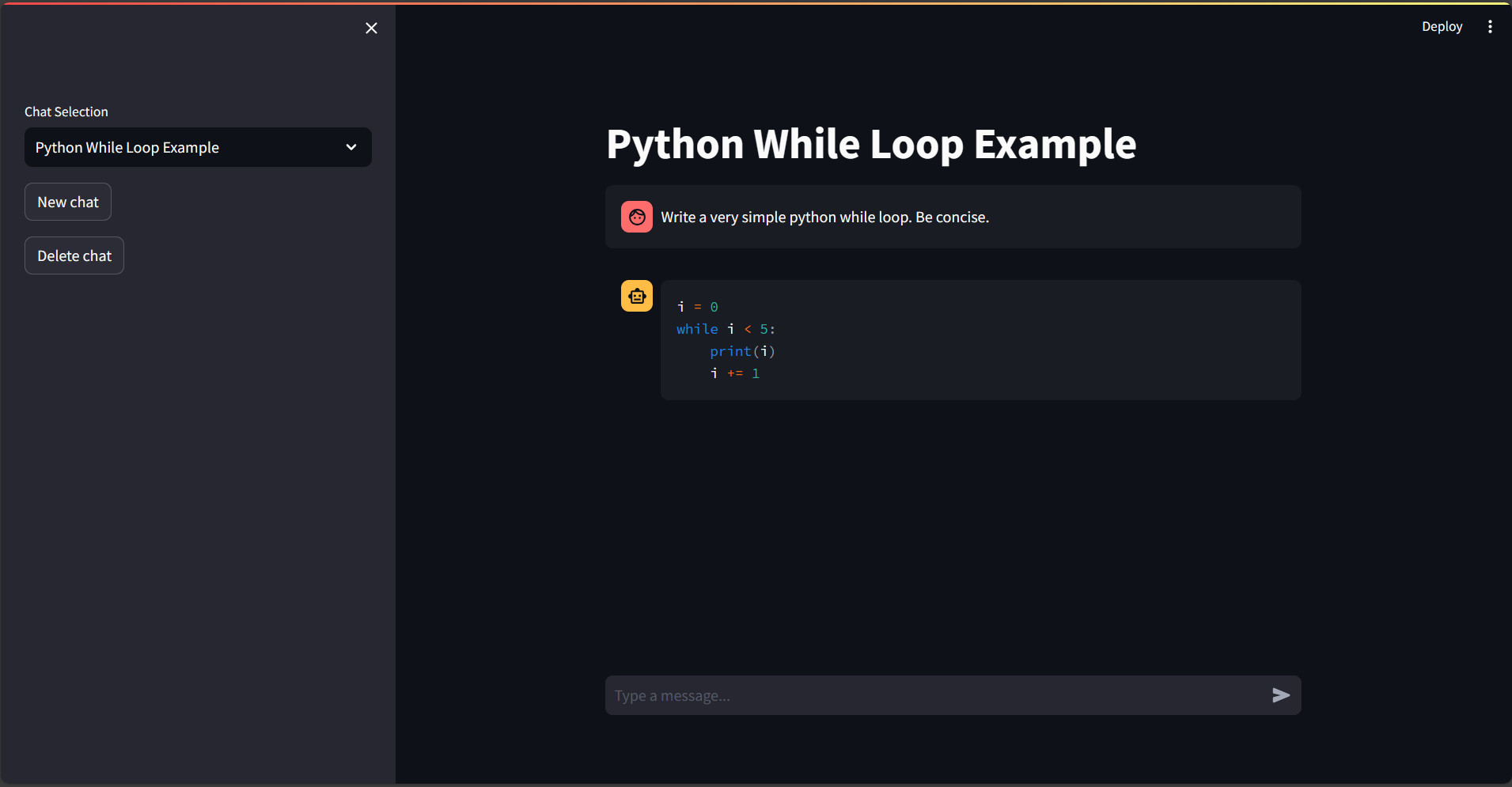

This project demonstrates how to use Streamlit to build a simple chat app that uses Prompt flow to generate responses with a model deployed in Azure OpenAI Service. The app uses two flows:

- `chat` - for generating responses to user input, given the context of the conversation

- `make_title` - for generating a title for a conversation from the initial user question

You can install the dependencies using pip:

```shell
pip install -r requirements.txt
```

The app requires access to an existing Azure OpenAI Service deployment with API access.

By default, the app expects a deployment named `gpt-4-turbo`.

You can change this by setting `deployment_name` in `flows/chat/flow.dag.yaml` and `flows/make_title/flow.dag.yaml`.
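If your deployment has a different name, one way to update both files at once is a `sed` substitution. This is a minimal sketch that assumes each `flow.dag.yaml` stores the value on a line of the form `deployment_name: <name>` (as Prompt flow LLM nodes typically do). `set_deployment_name` and `my-deployment` are hypothetical names used only for illustration.

```shell
# Hypothetical helper: rewrite every `deployment_name: <value>` line in the
# given flow files to use a new Azure OpenAI deployment name.
# Assumes the new name contains only letters, digits, '-', '_' or '.'
# (characters such as '/' or '&' would break the sed expression).
set_deployment_name() {
  new_name="$1"
  shift
  for file in "$@"; do
    # -i.bak works with both GNU and BSD sed; the backup is removed afterwards.
    sed -i.bak "s/^\([[:space:]]*deployment_name:[[:space:]]*\).*/\1${new_name}/" "$file" &&
      rm -f "$file.bak"
  done
}

# Usage, from the repository root:
# set_deployment_name my-deployment flows/chat/flow.dag.yaml flows/make_title/flow.dag.yaml
```

Only the value after `deployment_name:` changes; indentation and the other keys in each file are left as they were.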

You must set the following environment variables:

- `OPENAI_API_KEY` - The API key for your Azure OpenAI Service resource
- `OPENAI_API_BASE` - The base URL for your Azure OpenAI Service resource, in the form `https://<resource-name>.openai.azure.com`
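For a local run, one way to provide these variables is to export them in the shell before starting the app. The values below are placeholders (`<your-api-key>`, `my-resource`), and `check_openai_env` is a hypothetical convenience for catching a missing variable early; it is not part of the project.

```shell
# Placeholder values: replace them with the key and endpoint of your own resource.
export OPENAI_API_KEY="<your-api-key>"
export OPENAI_API_BASE="https://my-resource.openai.azure.com"

# Hypothetical helper: report every required variable that is unset or empty
# and return a non-zero status if any is missing.
check_openai_env() {
  missing=0
  for name in OPENAI_API_KEY OPENAI_API_BASE; do
    if [ -z "$(printenv "$name")" ]; then
      echo "Missing environment variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

check_openai_env
```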

You can run the app using the following command:

```shell
streamlit run app.py
```

The repository uses Pulumi and GitHub Actions to deploy the app to Azure App Service. To run the GitHub Actions workflow, you must set the following secrets:

- `ARM_CLIENT_ID` - The client ID of the service principal used to deploy the app
- `ARM_CLIENT_SECRET` - The client secret of the service principal used to deploy the app
- `ARM_LOCATION_NAME` - The Azure region to deploy the app to
- `ARM_SUBSCRIPTION_ID` - The Azure subscription ID to deploy the app to
- `ARM_TENANT_ID` - The Azure tenant ID to deploy the app to
- `OPENAI_API_BASE` - The base URL for your Azure OpenAI Service resource, in the form `https://<resource-name>.openai.azure.com`
- `OPENAI_API_KEY` - The API key for your Azure OpenAI Service resource
- `PULUMI_ACCESS_TOKEN` - The Pulumi access token to use for deployment
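One way to create these secrets is with the GitHub CLI (`gh secret set`), reading each value from an environment variable of the same name. This sketch assumes `gh` is installed and authenticated for this repository and that every value has been exported beforehand; `missing_secrets` and `upload_secrets` are hypothetical helper names.

```shell
# Secret names expected by the GitHub Actions deployment workflow.
REQUIRED_SECRETS="ARM_CLIENT_ID ARM_CLIENT_SECRET ARM_LOCATION_NAME ARM_SUBSCRIPTION_ID ARM_TENANT_ID OPENAI_API_BASE OPENAI_API_KEY PULUMI_ACCESS_TOKEN"

# Print the name of every required secret that is unset or empty in the environment.
missing_secrets() {
  for name in $REQUIRED_SECRETS; do
    [ -n "$(printenv "$name")" ] || echo "$name"
  done
}

# Upload each secret to the current repository with the GitHub CLI,
# refusing to run if any value is missing.
upload_secrets() {
  missing=$(missing_secrets | tr '\n' ' ')
  if [ -n "$missing" ]; then
    echo "Set these variables first: $missing" >&2
    return 1
  fi
  for name in $REQUIRED_SECRETS; do
    gh secret set "$name" --body "$(printenv "$name")"
  done
}
```

Checking for missing values first avoids a partially configured repository in which the workflow fails halfway through a deployment.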

Your service principal must have permission to create app registrations and managed identities.
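A service principal for the `ARM_*` secrets can be created with the Azure CLI. The sketch below assumes `az login` has already been run, uses a hypothetical display name `streamlit-promptflow-deploy`, and grants the built-in Contributor role on the subscription. The Microsoft Entra permission to create app registrations (for example, a Microsoft Graph application permission such as `Application.ReadWrite.OwnedBy`, with admin consent) must be granted separately and is not covered here.

```shell
# Hypothetical helper: create a deployment service principal with the
# Contributor role on the current subscription and print the values to
# store as the ARM_SUBSCRIPTION_ID, ARM_CLIENT_ID, ARM_CLIENT_SECRET and
# ARM_TENANT_ID secrets.
create_deploy_sp() {
  sp_name="${1:-streamlit-promptflow-deploy}"
  subscription_id=$(az account show --query id --output tsv) || return 1
  echo "ARM_SUBSCRIPTION_ID=$subscription_id"
  # The JMESPath query renames the appId, password and tenant output fields.
  az ad sp create-for-rbac \
    --name "$sp_name" \
    --role Contributor \
    --scopes "/subscriptions/$subscription_id" \
    --query "{ARM_CLIENT_ID: appId, ARM_CLIENT_SECRET: password, ARM_TENANT_ID: tenant}" \
    --output json
}

# Usage:
# create_deploy_sp my-deploy-sp
```

The client secret is only shown once, so copy it into the `ARM_CLIENT_SECRET` secret right away.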