Learning to Do or Learning While Doing: Reinforcement Learning and Bayesian Optimisation for Online Continuous Tuning

This repository contains the code used to construct the environment and to evaluate the reinforcement-learning-trained optimiser (RLO) and the Bayesian optimiser (BO) for the paper "Learning to Do or Learning While Doing: Reinforcement Learning and Bayesian Optimisation for Online Continuous Tuning".

The preprint is available on arXiv: arXiv:2306.03739

- The ARES-EA Transverse Beam Tuning Task

- Repository Structure

- The RL/BO control loop

- Gym Environments

- Installation Guide

We consider the Experimental Area of ARES at DESY, Hamburg. Toward the downstream end of the Experimental Area is the screen AREABSCR1, where we would like to achieve a variable set of beam parameters, consisting of the beam position and size in both the x- and y-directions.

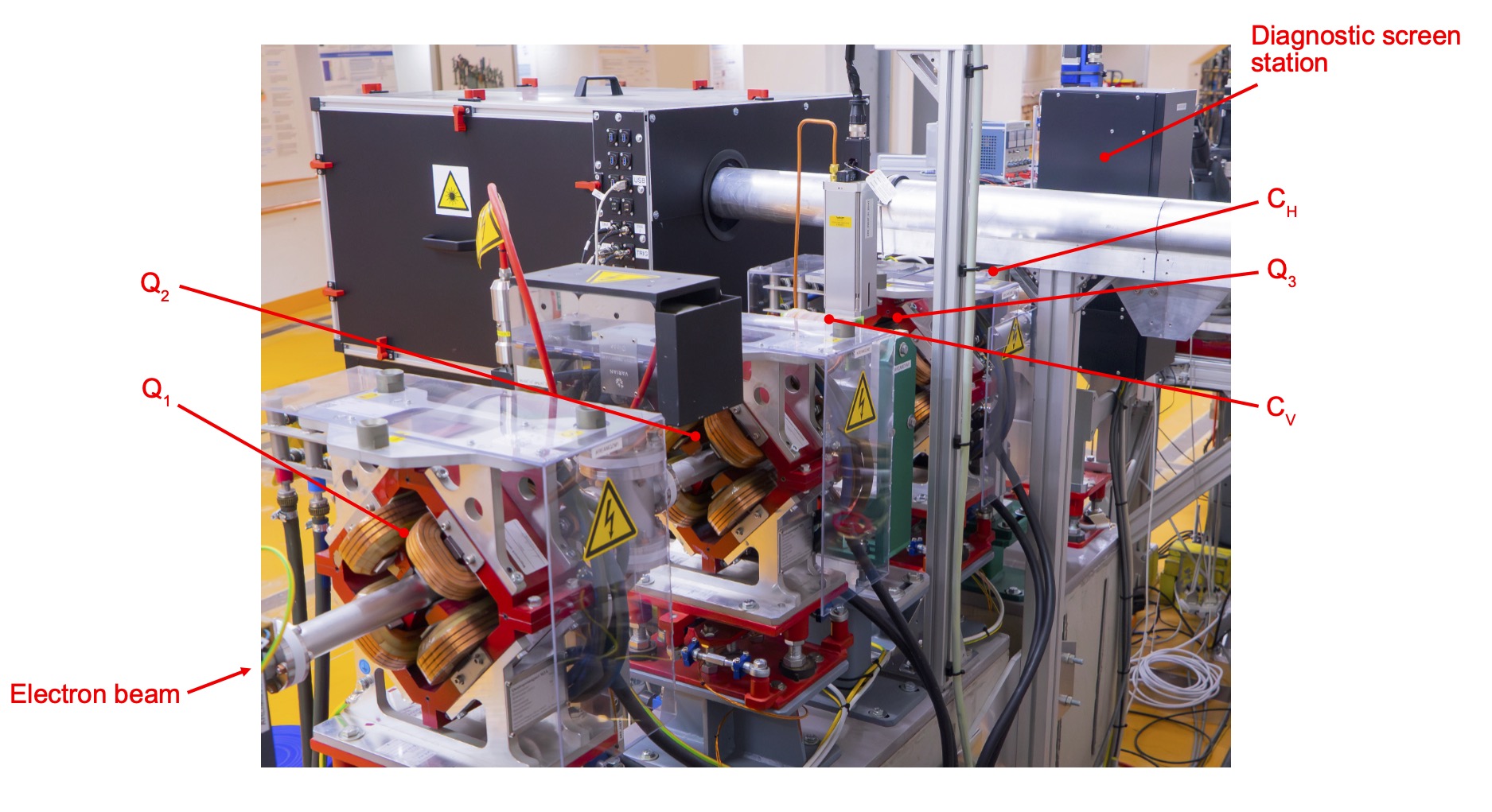

To this end, we can set the values of three quadrupoles AREAMQZM1, AREAMQZM2 and AREAMQZM3, as well as two steerers (dipole corrector magnets) AREAMCVM1 and AREAMCHM1. Below is a simplified overview of the lattice that we consider.

Note that simplified versions of this problem may be considered, where only the quadrupoles are used to achieve a certain beam size, or only the steerers are used to position the beam. Another simplification that may be considered is that the target beam parameters need not be variable but rather the goal is to achieve the smallest and/or most centred beam possible.

Below are the most important scripts:

- `environment.py` contains the Gym environments `TransverseTuningEnv` and its derived class `EATransverseTuning`.
- `backend.py` contains the different backends that the Gym environments can use to interact with either the simulation model or the real particle accelerator.
- `bayesopt.py` implements a custom Bayesian optimisation routine, `BayesianOptimizationAgent`, which acts similarly to an RL agent.
- `ea_train.py` is an example script to train an RLO agent. Note: the RLO agent used in the paper was trained using TD3, whereas the current version of the script uses PPO, which might not fully reproduce the results reported in the paper.
- The files `evaluation/eval_*.py` generate the simulation results under different conditions.
- The notebooks `evaluation/eval_*.ipynb` are used to analyse and evaluate the results and to generate the final plots.
- `trails.yaml` contains the problem configurations that are used to generate the evaluation results.
- `eval.py` contains utility functions to load evaluation results and produce plots.

Note: To run these files, move them out of the `evaluation` folder so that their import paths resolve correctly.

The Gym environments are built on the base class `TransverseTuningEnv`, which defines a general transverse beam tuning task using a set of magnets as actuators and a screen for observation, with the goal of positioning and focusing the beam to specified values.

The derived class `EATransverseTuning` describes the specific task at the ARES Experimental Area (EA).

The backends `EACheetahBackend` and `EADOOCSBackend` are used to interact with the Cheetah simulation model and the real ARES accelerator, respectively.
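The separation between environment logic and backend can be illustrated with a minimal, self-contained sketch. This is not the repository's code: the classes `Backend`, `ToyBackend` and `ToyTuningEnv` and the methods `set_magnets`, `get_magnets` and `get_beam_parameters` are hypothetical names chosen for illustration, and the toy backend uses a made-up linear response instead of Cheetah or DOOCS.

```python
from abc import ABC, abstractmethod


class Backend(ABC):
    """Hypothetical backend interface: hides whether we talk to a simulation or the machine."""

    @abstractmethod
    def set_magnets(self, values: list[float]) -> None: ...

    @abstractmethod
    def get_magnets(self) -> list[float]: ...

    @abstractmethod
    def get_beam_parameters(self) -> list[float]:
        """Return (mu_x, sigma_x, mu_y, sigma_y) in m."""


class ToyBackend(Backend):
    """Hypothetical stand-in for a simulation backend (made-up response, NOT Cheetah physics)."""

    def __init__(self) -> None:
        self._magnets = [0.0] * 5  # (k_Q1, k_Q2, theta_CV, k_Q3, theta_CH)

    def set_magnets(self, values: list[float]) -> None:
        self._magnets = list(values)

    def get_magnets(self) -> list[float]:
        return list(self._magnets)

    def get_beam_parameters(self) -> list[float]:
        k1, k2, cv, k3, ch = self._magnets
        # Arbitrary toy response so the example runs: steerers move the beam,
        # quadrupoles change its size.
        mu_x = 1e-3 * ch
        mu_y = 1e-3 * cv
        sigma_x = 1e-4 * (1.0 + abs(k1 - k3))
        sigma_y = 1e-4 * (1.0 + abs(k2))
        return [mu_x, sigma_x, mu_y, sigma_y]


class ToyTuningEnv:
    """Hypothetical environment written once against the Backend interface."""

    def __init__(self, backend: Backend, target: list[float]) -> None:
        self.backend = backend
        self.target = list(target)

    def step(self, magnet_values: list[float]) -> dict[str, list[float]]:
        """Apply magnet settings via the backend and return a dict observation."""
        self.backend.set_magnets(magnet_values)
        return {
            "beams": self.backend.get_beam_parameters(),
            "magnets": self.backend.get_magnets(),
            "target": list(self.target),
        }
```

For example, `ToyTuningEnv(ToyBackend(), target=[0.0, 1e-4, 0.0, 1e-4]).step([0.0] * 5)` returns a beam of `[0.0, 1e-4, 0.0, 1e-4]`. Swapping `ToyBackend` for another implementation of the same interface leaves the environment untouched, which is the role the simulation and machine backends play here.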

Whilst most aspects of the environment are fixed by the problem, some can be changed as design decisions: the action and observation spaces, the reward composition (the weighting of the individual components), the objective function, and the way that actions are defined (deltas vs. absolute values).
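The difference between delta and absolute actions can be sketched as follows. The helper `apply_action`, its `mode` strings and the magnet limits in the usage example are hypothetical; the actual limits and action scaling in `environment.py` may differ.

```python
def apply_action(
    current: list[float],
    action: list[float],
    mode: str,
    lower: list[float],
    upper: list[float],
) -> list[float]:
    """Return new magnet settings for an action (hypothetical helper).

    mode == "delta":    the action is a change added to the current settings.
    mode == "absolute": the action is the new setting itself.
    In both cases the result is clipped to the given magnet limits.
    """
    if mode == "delta":
        proposed = [c + a for c, a in zip(current, action)]
    elif mode == "absolute":
        proposed = list(action)
    else:
        raise ValueError(f"unknown action mode: {mode!r}")
    return [min(max(p, lo), hi) for p, lo, hi in zip(proposed, lower, upper)]


# Made-up limits for (k_Q1, k_Q2, theta_CV, k_Q3, theta_CH), for illustration only.
lower = [-30.0, -30.0, -6.0, -30.0, -6.0]
upper = [30.0, 30.0, 6.0, 30.0, 6.0]
now = [10.0, -10.0, 1.0, 10.0, 0.0]
print(apply_action(now, [1.0, 1.0, 6.0, 0.0, 0.0], "delta", lower, upper))
# [11.0, -9.0, 6.0, 10.0, 0.0]
```

Delta actions let an agent make incremental corrections relative to the current settings, whereas absolute actions set the magnets directly; the same action vector therefore means different things in the two modes.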

The default observation is a dictionary with the keys `["beams", "magnets", "target"]`. After applying the `FlattenObservation` wrapper, and provided that no `filter_observation` is used, the observation has shape `(13,)`:

- size `(4,)`, indices `(0, 1, 2, 3)`: observed beam $(\mu_x, \sigma_x, \mu_y, \sigma_y)$ in m
- size `(5,)`, indices `(4, 5, 6, 7, 8)`: magnet values $(k_{Q1}, k_{Q2}, \theta_{CV}, k_{Q3}, \theta_{CH})$ in $1/\textrm{m}^2$ (quadrupoles) and mrad (steerers), respectively
- size `(4,)`, indices `(9, 10, 11, 12)`: target beam $(\mu_x, \sigma_x, \mu_y, \sigma_y)$ in m
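The index layout above can be reproduced with a small, dependency-free sketch that concatenates the three dictionary entries in the listed order and slices them back out. The helpers `flatten` and `unflatten` and the example numbers are hypothetical; in the repository the flattening itself is done by the `FlattenObservation` wrapper.

```python
# Index ranges of the flattened (13,) observation, as listed above.
OBS_SLICES = {
    "beams": slice(0, 4),    # (mu_x, sigma_x, mu_y, sigma_y) in m
    "magnets": slice(4, 9),  # (k_Q1, k_Q2, theta_CV, k_Q3, theta_CH)
    "target": slice(9, 13),  # target (mu_x, sigma_x, mu_y, sigma_y) in m
}


def flatten(obs: dict[str, list[float]]) -> list[float]:
    """Concatenate the dict entries in the documented order (hypothetical helper)."""
    flat = [*obs["beams"], *obs["magnets"], *obs["target"]]
    if len(flat) != 13:
        raise ValueError(f"expected 4 + 5 + 4 = 13 values, got {len(flat)}")
    return flat


def unflatten(flat: list[float]) -> dict[str, list[float]]:
    """Recover the named parts from a flat observation (hypothetical helper)."""
    return {key: flat[s] for key, s in OBS_SLICES.items()}


obs = {
    "beams": [1e-4, 2e-4, -1e-4, 3e-4],
    "magnets": [10.0, -10.0, 0.1, 10.0, -0.2],
    "target": [0.0, 5e-5, 0.0, 5e-5],
}
flat = flatten(obs)
print(len(flat), flat[4:9])  # 13 [10.0, -10.0, 0.1, 10.0, -0.2]
```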

The reward function of ARES-EA is composed of the following components, each multiplied by a configurable weight:

| Component | Reward |
|---|---|
| beam reward | +1 |
| beam on screen | +1 |
| x pos in threshold | +1 |
| y pos in threshold | +1 |
| x size in threshold | +1 |
| y size in threshold | +1 |
| finished | +1 |
| time steps | -1 |
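Assuming the weighted components are simply added, the composition can be sketched as follows. The component keys, the helper `total_reward` and the convention that the threshold, on-screen and finished terms are 1.0/0.0 flags are assumptions for illustration; only the signs are taken from the table, and the exact definitions in `environment.py` may differ.

```python
# Sign of each component, following the table above (keys are hypothetical names).
SIGNS = {
    "beam_reward": +1.0,
    "beam_on_screen": +1.0,
    "x_pos_in_threshold": +1.0,
    "y_pos_in_threshold": +1.0,
    "x_size_in_threshold": +1.0,
    "y_size_in_threshold": +1.0,
    "finished": +1.0,
    "time_step": -1.0,
}


def total_reward(components: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted sum of reward components (hypothetical helper).

    `components` holds the raw value of each term: the continuous beam reward,
    1.0/0.0 for the boolean conditions (assumed), and 1.0 for the per-step time term.
    Components without an entry in `weights` get weight 0, i.e. they are switched off.
    """
    return sum(
        SIGNS[name] * weights.get(name, 0.0) * value
        for name, value in components.items()
    )


components = {"beam_reward": 0.25, "beam_on_screen": 1.0, "x_pos_in_threshold": 0.0, "time_step": 1.0}
weights = {"beam_reward": 1.0, "beam_on_screen": 0.5, "time_step": 0.1}
print(total_reward(components, weights))
```

With these made-up numbers the result is 0.25 + 0.5 - 0.1 = 0.65 (up to floating-point rounding). Setting a weight to zero removes that component, which is how the reward composition can be tuned as a design decision.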

The beam distance metric can be chosen to be either the L1 loss (mean absolute error) or the L2 loss (mean squared error) between the current and target beam parameters.
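A minimal sketch of the two metrics over the four beam parameters; the function name `beam_distance` and the plain-list representation are assumptions for illustration.

```python
def beam_distance(current: list[float], target: list[float], metric: str = "L1") -> float:
    """Distance between current and target (mu_x, sigma_x, mu_y, sigma_y) (hypothetical helper)."""
    if len(current) != len(target):
        raise ValueError("current and target must have the same length")
    diffs = [c - t for c, t in zip(current, target)]
    if metric == "L1":  # mean absolute error
        return sum(abs(d) for d in diffs) / len(diffs)
    if metric == "L2":  # mean squared error
        return sum(d * d for d in diffs) / len(diffs)
    raise ValueError(f"unknown metric: {metric!r}")


# Arbitrary units for readability: L1 = (1+2+3+4)/4, L2 = (1+4+9+16)/4.
print(beam_distance([1.0, 2.0, 3.0, 4.0], [0.0] * 4, "L1"))  # 2.5
print(beam_distance([1.0, 2.0, 3.0, 4.0], [0.0] * 4, "L2"))  # 7.5
```

Note that squaring changes the scale: for differences of order $10^{-4}$ m, L2 values are of order $10^{-8}$ m², so the two metrics are not directly comparable without normalisation.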

The beam reward mode can be either "differential" or "feedback". For example, for the L1 loss:

- differential, i.e. the improvement over the previous step:

  $r = \left(|v_\textrm{previous} - v_\textrm{target}| - |v_\textrm{current} - v_\textrm{target}| \right) / |v_\textrm{initial} - v_\textrm{target}|$,

  where $v$ stands for each of the beam parameters $\mu_x, \mu_y, \sigma_x, \sigma_y$ in turn.

- feedback, i.e. the remaining difference to the target:

  $r = - |v_\textrm{current} - v_\textrm{target}| / |v_\textrm{initial} - v_\textrm{target}|$
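Both modes can be written out per beam parameter $v$ for the L1 case, following the two formulas above. The function `beam_reward` and its arguments are hypothetical, and combining the per-parameter rewards by a plain mean is an assumption, not necessarily what `environment.py` does.

```python
def beam_reward(
    previous: list[float],
    current: list[float],
    initial: list[float],
    target: list[float],
    mode: str = "differential",
) -> float:
    """L1 beam reward over (mu_x, sigma_x, mu_y, sigma_y) (hypothetical helper).

    Per-parameter rewards are averaged; this combination is an assumption.
    """
    rewards = []
    for v_prev, v_cur, v_init, v_tgt in zip(previous, current, initial, target):
        norm = abs(v_init - v_tgt)  # initial distance to target; assumed non-zero
        if mode == "differential":
            # improvement over the previous step, normalised by the initial distance
            r = (abs(v_prev - v_tgt) - abs(v_cur - v_tgt)) / norm
        elif mode == "feedback":
            # remaining distance to the target, normalised and negated
            r = -abs(v_cur - v_tgt) / norm
        else:
            raise ValueError(f"unknown mode: {mode!r}")
        rewards.append(r)
    return sum(rewards) / len(rewards)


prev, cur, init, tgt = [2.0] * 4, [1.0] * 4, [4.0] * 4, [0.0] * 4
print(beam_reward(prev, cur, init, tgt, "differential"))  # 0.25
print(beam_reward(prev, cur, init, tgt, "feedback"))      # -0.25
```

If the first step's "previous" value is the initial one, the differential rewards telescope: summed over an episode they give $(|v_\textrm{initial} - v_\textrm{target}| - |v_\textrm{final} - v_\textrm{target}|) / |v_\textrm{initial} - v_\textrm{target}|$, i.e. the total normalised improvement.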

- Install conda or miniconda.

- (Suggested) Create a virtual environment and activate it:

  ```shell
  conda create -n ares-ea-rl python=3.9
  conda activate ares-ea-rl
  ```

- Install the dependencies:

  ```shell
  pip install -r requirements.txt
  ```

We acknowledge the contributions of the authors to this work: Jan Kaiser, Chenran Xu, Annika Eichler, Andrea Santamaria Garcia, Oliver Stein, Erik Bründermann, Willi Kuropka, Hannes Dinter, Frank Mayet, Thomas Vinatier, Florian Burkart and Holger Schlarb.

This work has in part been funded by the IVF project InternLabs-0011 (HIR3X) and the Initiative and Networking Fund by the Helmholtz Association (Autonomous Accelerator, ZT-I-PF-5-6). The authors acknowledge support from DESY (Hamburg, Germany) and KIT (Karlsruhe, Germany), members of the Helmholtz Association HGF, as well as support through the Maxwell computational resources operated at DESY and the bwHPC at SCC, KIT.