A complete Retrieval-Augmented Generation (RAG) application that answers questions about Ultimate Frisbee rules and strategies. This project shows how to build a production-ready RAG system with FastAPI, LlamaIndex, PostgreSQL + pgvector, Ollama, and React.

This repository is a tutorial project for YouTube viewers learning how to implement RAG.

- 📚 Intelligent Document Processing: Automatically indexes and processes the official WFDF Ultimate Frisbee Rules (2025-2028)

- 🤖 AI-Powered Q&A: Ask natural language questions about Ultimate Frisbee rules and get accurate, context-aware answers

- 📊 Source Attribution: Every answer includes relevant source documents with similarity scores and page references
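As a rough illustration of what source attribution could look like in code, the sketch below pairs an answer with the chunks that back it. The `SourceRef` and `Answer` classes and their field names are hypothetical, chosen for this example; they are not taken from this project's API.

```python
from dataclasses import dataclass, field


@dataclass
class SourceRef:
    """One retrieved chunk backing an answer (hypothetical shape)."""
    text: str
    score: float  # similarity score; higher means closer to the question
    page: int     # page of the rules document the chunk came from


@dataclass
class Answer:
    """A generated answer plus the sources it was built from."""
    text: str
    sources: list[SourceRef] = field(default_factory=list)


def format_sources(answer: Answer) -> list[str]:
    """Render the sources as display lines, best match first."""
    ranked = sorted(answer.sources, key=lambda s: s.score, reverse=True)
    return [f"p. {s.page} (score {s.score:.2f}): {s.text}" for s in ranked]
```

Sorting by score before rendering keeps the most relevant passage at the top of the list shown next to the answer.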

- FastAPI: High-performance API framework with automatic OpenAPI documentation

- SQLModel: Modern Python SQL toolkit combining SQLAlchemy + Pydantic

- LlamaIndex: RAG framework for document processing and querying

- PostgreSQL + pgvector: Vector database for embeddings storage

- Ollama: Local LLM serving (supports Llama 3.2, Mistral, etc.)

- React 19: Modern React with latest features

- Vite: Lightning-fast build tool

- TailwindCSS: Utility-first CSS framework

- SWR: Data fetching with caching and revalidation

- Radix UI: Accessible, unstyled UI components

- Docker Compose: Multi-container orchestration

- pgvector: PostgreSQL extension for vector operations

- uv: Fast Python package management

- Docker & Docker Compose (required)

- 8GB+ RAM (for running local LLMs)

- NVIDIA GPU (optional, for faster inference)

- Git (for cloning the repository)

# Clone the repository

git clone https://github.com/yourusername/UltimateAdvisor.git

cd UltimateAdvisor

# Copy environment template

cp .env.example .env

Edit .env with your preferred settings:

# Database Configuration

APP_PG_USER=postgres

APP_PG_PASSWORD=your_secure_password

APP_PG_DATABASE=ultimate_advisor

APP_PG_PORT=5432

# Ollama Models (you can change these)

APP_CHAT_MODEL=llama3.2:3b

APP_EMBEDDING_MODEL=nomic-embed-text:latest

# Start all services (this will download models automatically)

docker-compose up -d

# Monitor the logs to see when everything is ready

docker-compose logs -f

Note: First startup takes 5-10 minutes as it downloads the LLM models.
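Instead of watching the logs by hand, readiness can also be polled from a script. The following is a minimal sketch, assuming the backend answers on `http://localhost:8000/docs` once it is up; the helper names `http_ok` and `wait_until_ready` and the default timeout are assumptions made for this example.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_ok(url: str) -> bool:
    """Return True if a GET on `url` answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error: not ready yet.
        return False


def wait_until_ready(
    url: str,
    timeout_s: float = 900.0,
    interval_s: float = 5.0,
    probe: Callable[[str], bool] = http_ok,
) -> bool:
    """Poll `url` until `probe` succeeds or `timeout_s` has elapsed."""
    deadline = time.monotonic() + timeout_s
    while True:
        if probe(url):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

Calling `wait_until_ready("http://localhost:8000/docs")` blocks until the API responds or the timeout expires. The `probe` parameter is injectable so the loop can be exercised without a running server.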

# Index the WFDF Ultimate Frisbee Rules document

uv run ./src/scripts/run_load_embeddings.py

- UI: http://localhost:8000

- API Documentation: http://localhost:8000/docs

- API Redoc: http://localhost:8000/redoc

Try asking these questions in the chat interface:

- "What happens if the disc goes out of bounds?"

- "How many players are on the field for each team?"

- "What is a turnover in Ultimate Frisbee?"

- "Explain the spirit of the game rule"

- "What are the dimensions of an Ultimate field?"

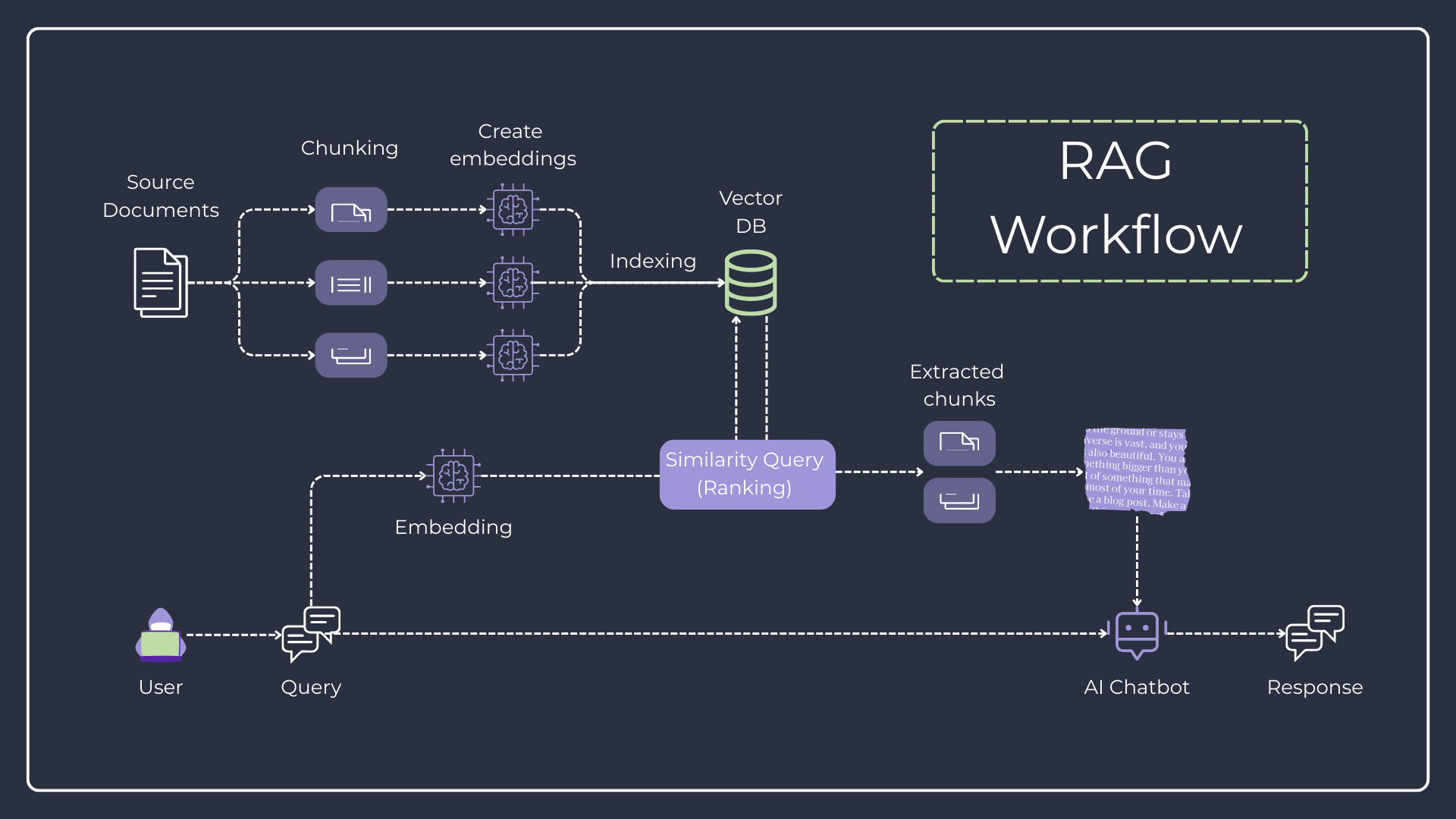

- Document Processing: PDF documents are chunked and embedded using Ollama

- Vector Storage: Embeddings are stored in PostgreSQL with pgvector extension

- Query Processing: User questions are embedded and matched against stored vectors

- Response Generation: Retrieved context is sent to the chat model for answer generation

- History Tracking: All conversations are persisted for future reference
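The chunking, retrieval, and prompt-building steps above can be sketched in plain Python. This is an illustration only: the project delegates this work to LlamaIndex, Ollama, and pgvector, whereas the sketch uses character-based chunks and hand-written toy vectors in place of real embeddings. All function names and the prompt wording are assumptions made for the example.

```python
import math


def chunk_text(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into overlapping character windows."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    step = size - overlap
    return [text[i:i + size] for i in range(0, len(text), step)]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(
    query_vec: list[float],
    stored: list[tuple[str, list[float]]],
    k: int = 3,
) -> list[tuple[float, str]]:
    """Return the k stored chunks most similar to the query, best first."""
    scored = [(cosine_similarity(query_vec, vec), chunk) for chunk, vec in stored]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def build_prompt(question: str, hits: list[tuple[float, str]]) -> str:
    """Assemble the retrieved chunks and the question into one prompt."""
    context = "\n\n".join(chunk for _, chunk in hits)
    return f"Answer using only this context:\n\n{context}\n\nQuestion: {question}"
```

In a real deployment the vectors would come from the embedding model and the similarity search would run inside PostgreSQL rather than in a Python loop; the overall flow (embed, rank, assemble context, generate) stays the same.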

If you prefer to run services locally:

- Install Python dependencies:

# Install uv package manager

curl -LsSf https://astral.sh/uv/install.sh | sh

# Install dependencies

uv sync

- Start PostgreSQL with pgvector:

docker run -d \
  --name postgres-pgvector \
  -p 5432:5432 \
  -e POSTGRES_USER=postgres \
  -e POSTGRES_PASSWORD=password \
  -e POSTGRES_DB=ultimate_advisor \
  pgvector/pgvector:pg17

- Start Ollama:

# Install Ollama (see https://ollama.ai)

ollama serve

# Pull required models

ollama pull gemma3:4b

ollama pull embeddinggemma:latest

- Initialize the database:

uv run python src/scripts/run_init_db.py

- Load embeddings:

uv run python src/scripts/run_load_embeddings.py

- Start the backend:

uv run fastapi dev src/main.py --host 0.0.0.0 --port 8000

- Start the frontend:

cd frontend

pnpm install

pnpm run dev

This project is featured in a YouTube tutorial covering RAG development: YouTube Tutorial

🔔 Subscribe to @DevItWithMe for more!

🙏 If you find this project helpful, consider Buying Me a Coffee

⭐ Star this repository if it helps you learn RAG development!

🐛 Found a bug? Open an issue

💬 Have questions? Start a discussion