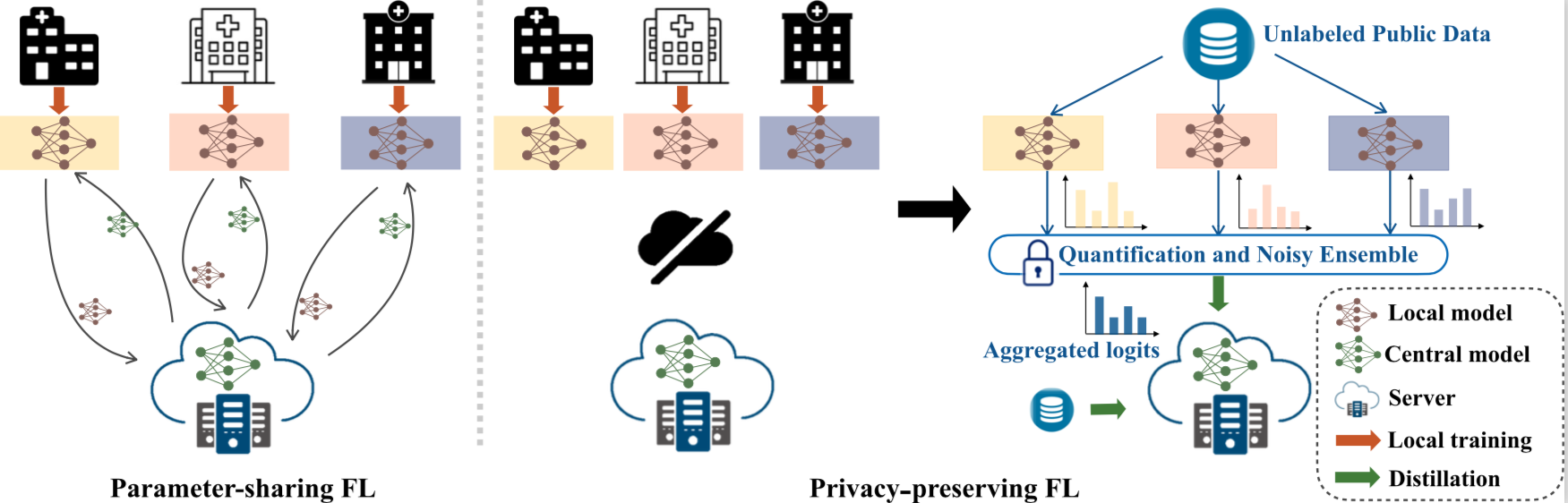

This repository contains the implementation of *Preserving Privacy in Federated Learning with Ensemble Cross-Domain Knowledge Distillation* (AAAI 2022).

Contact: Xuan Gong (xuangong@buffalo.edu)

Example usage:

```shell
python main.py --alpha 1.0 --seed 1 --C 1
python main.py --alpha 1.0 --seed 1 --C 1 --oneshot
python main.py --alpha 1.0 --seed 1 --C 1 --oneshot --noisescale 1.0 --quantify 100
python main.py --alpha 0.1 --seed 1 --C 1 --oneshot --noisescale 1.0 --quantify 100
python main.py --alpha 1.0 --seed 1 --C 1 --dataset cifar100 --oneshot --noisescale 1.0 --quantify 100
python main.py --alpha 0.1 --seed 1 --C 1 --dataset cifar100 --oneshot --noisescale 1.0 --quantify 100
```

Citation:

```bibtex
@inproceedings{gong2022preserving,
  title     = {Preserving Privacy in Federated Learning with Ensemble Cross-Domain Knowledge Distillation},
  author    = {Gong, Xuan and Sharma, Abhishek and Karanam, Srikrishna and Wu, Ziyan and Chen, Terrence and Doermann, David and Innanje, Arun},
  booktitle = {Proceedings of the AAAI Conference on Artificial Intelligence},
  pages     = {11891--11899},
  year      = {2022}
}
```
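The last four example commands above form a small grid: `--alpha` takes the values `1.0` and `0.1`, and each value is run once with the default dataset and once with `--dataset cifar100`, with all other flags held fixed. The script below is a minimal convenience sketch for reproducing that grid, assuming it is run from the repository root next to `main.py`. The helper names `build_commands` and `run_all` and the `--run` switch are hypothetical and not part of this repository. Only the flags that appear in the commands above are used; what each flag does is defined by `main.py`.

```python
import itertools
import shlex
import subprocess
import sys


def build_commands():
    """Return argument lists for the one-shot runs over alpha and dataset.

    Hypothetical helper: flag names and values are copied from the README
    example commands; their semantics are defined by main.py.
    """
    commands = []
    # None means "use main.py's default dataset" (no --dataset flag).
    for dataset, alpha in itertools.product([None, "cifar100"], ["1.0", "0.1"]):
        cmd = [sys.executable, "main.py", "--alpha", alpha, "--seed", "1", "--C", "1"]
        if dataset is not None:
            cmd += ["--dataset", dataset]
        cmd += ["--oneshot", "--noisescale", "1.0", "--quantify", "100"]
        commands.append(cmd)
    return commands


def run_all(dry_run=True):
    """Print each command; actually execute it only when dry_run is False."""
    for cmd in build_commands():
        print(shlex.join(cmd))
        if not dry_run:
            # check=True stops the sweep if any run exits with an error.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical switch: pass --run to execute; otherwise only print commands.
    run_all(dry_run="--run" not in sys.argv)
```

Running the script with no arguments only prints the four commands, which makes it easy to check them before launching; `python sweep.py --run` (with `sweep.py` being whatever name you save it under) executes them one after another.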