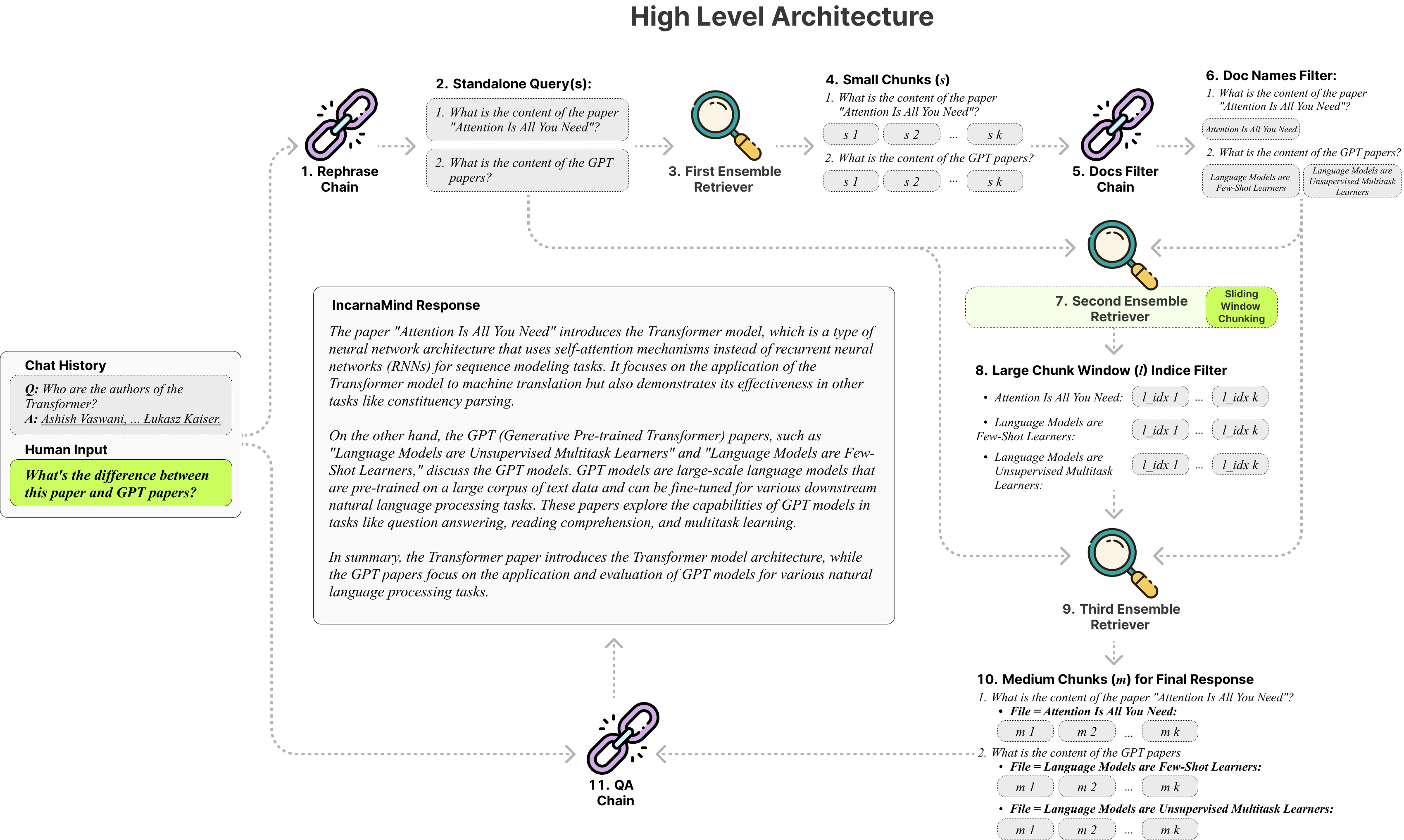

IncarnaMind enables you to chat with your personal documents 📁 (PDF, TXT) using Large Language Models (LLMs) like GPT (see the architecture overview). OpenAI has recently launched a fine-tuning API for GPT models, but it doesn't enable the base pretrained models to learn new data, and the responses can still be prone to factual hallucinations. Our Sliding Window Chunking mechanism and Ensemble Retriever enable efficient querying of both fine-grained and coarse-grained information within your ground-truth documents, augmenting the LLMs with that content.

Feel free to use it; we welcome any feedback and new feature suggestions 🙌.

Powered by Langchain and Chroma DB.


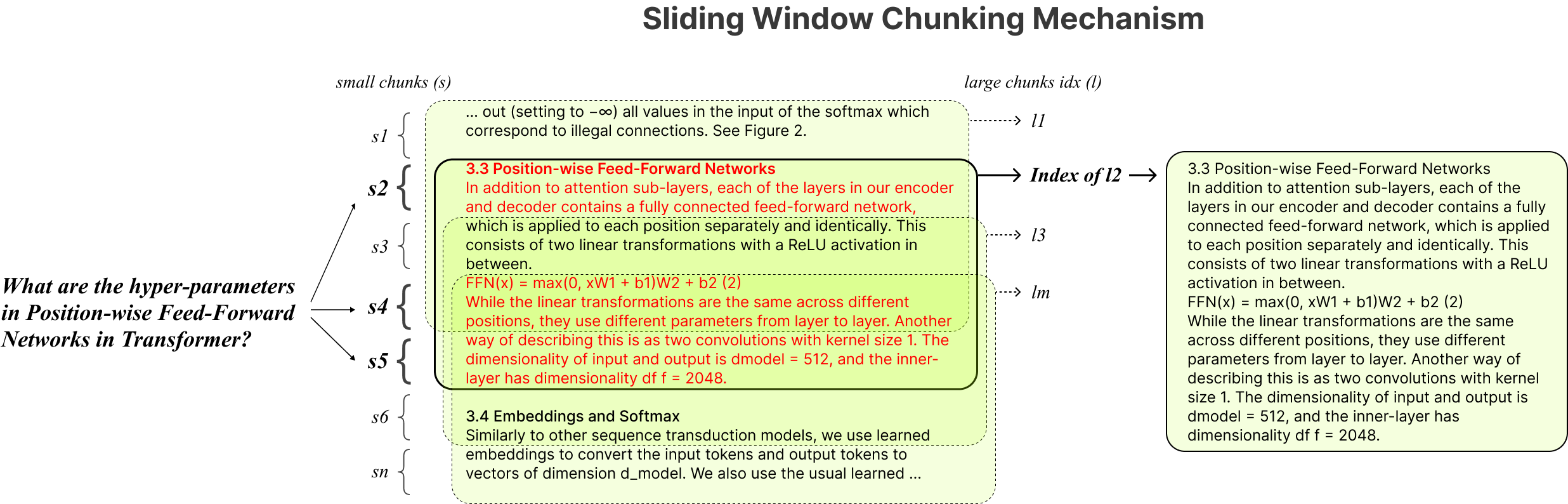

IncarnaMind addresses the following challenges:

- Fixed Chunking: Rather than relying on fixed-size chunks, our Sliding Window Chunking technique strikes a balance between time, computing power, and performance.
- Precision vs. Semantics: Small chunks enable fine-grained information retrieval, while large chunks capture coarse-grained information. We combine embedding-based and BM25 methods in a hybrid search approach.
- Single-Document Limitation: IncarnaMind supports multi-document querying, breaking the one-document-at-a-time barrier.
- Stability: We use Chains instead of Agents to ensure stable parsing across different LLMs.
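The hybrid search described above can be sketched in plain Python. This is a minimal, self-contained illustration, not IncarnaMind's code: the bag-of-words cosine similarity is a stand-in for a real embedding model and vector store, and merging the BM25 and similarity rankings with weighted Reciprocal Rank Fusion (RRF) is an assumption on our part, chosen because it is the fusion method LangChain's EnsembleRetriever uses. All function names are hypothetical.

```python
# Hybrid retrieval sketch: Okapi BM25 (lexical) + cosine similarity (semantic
# stand-in), merged with weighted Reciprocal Rank Fusion. Hypothetical helpers,
# not IncarnaMind's implementation.
import math
import re
from collections import Counter
from typing import Dict, List, Sequence


def tokenize(text: str) -> List[str]:
    # Lowercase and keep alphanumeric runs only.
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_scores(query: str, docs: List[str], k1: float = 1.5, b: float = 0.75) -> List[float]:
    # Okapi BM25 with the non-negative IDF variant log(1 + (N - df + 0.5) / (df + 0.5)).
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n_docs
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            freq = tf[term]
            if freq == 0:
                continue
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            norm = freq + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * freq * (k1 + 1) / norm
        scores.append(score)
    return scores


def cosine_scores(query: str, docs: List[str]) -> List[float]:
    # Stand-in for embedding similarity: cosine over term-count vectors.
    q = Counter(tokenize(query))
    q_norm = math.sqrt(sum(v * v for v in q.values()))
    scores = []
    for doc in docs:
        d = Counter(tokenize(doc))
        d_norm = math.sqrt(sum(v * v for v in d.values()))
        dot = sum(count * d[term] for term, count in q.items())
        scores.append(dot / (q_norm * d_norm) if q_norm and d_norm else 0.0)
    return scores


def rank(scores: List[float]) -> List[int]:
    # Document indices from best to worst score; ties keep original order.
    return sorted(range(len(scores)), key=lambda i: -scores[i])


def reciprocal_rank_fusion(rankings: List[List[int]], weights: Sequence[float], k: int = 60) -> List[int]:
    # Each ranking adds weight / (k + position) to a document, positions starting at 1.
    fused: Dict[int, float] = {}
    for ranking, weight in zip(rankings, weights):
        for position, doc_id in enumerate(ranking, start=1):
            fused[doc_id] = fused.get(doc_id, 0.0) + weight / (k + position)
    return sorted(fused, key=lambda doc_id: -fused[doc_id])


def hybrid_search(query: str, docs: List[str], weights: Sequence[float] = (0.5, 0.5), top_k: int = 3) -> List[int]:
    # Return the indices of the top_k chunks after fusing both rankings.
    rankings = [rank(bm25_scores(query, docs)), rank(cosine_scores(query, docs))]
    return reciprocal_rank_fusion(rankings, weights)[:top_k]


if __name__ == "__main__":
    chunks = [
        "IncarnaMind uses sliding window chunking for documents.",
        "BM25 is a lexical ranking function based on term frequency.",
        "Chroma DB stores embeddings for semantic search.",
    ]
    print(hybrid_search("how does bm25 ranking work", chunks, top_k=2))  # prints [1, 0]
```

Because RRF only looks at rank positions, the raw BM25 and similarity scores never have to be put on a common scale; k = 60 is the constant commonly used with RRF, and the weights let one retriever count more than the other.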

Key features:

- Adaptive Chunking: Dynamically adjusts the size and position of text chunks to improve retrieval-augmented generation (RAG).
- Multi-Document Conversational QA: Performs simple and multi-hop queries across multiple documents simultaneously.
- File Compatibility: Supports both PDF and TXT file formats.
- LLM Compatibility: Supports both OpenAI GPT and Anthropic Claude models.
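The chunking ideas above (small versus large chunks, overlapping windows that move across the text) can be illustrated with a generic sliding-window chunker. This is a minimal sketch under stated assumptions, not IncarnaMind's algorithm: text is split on whitespace, the window/stride pairs in `multi_granularity_chunks` are hypothetical values, and both function names are hypothetical.

```python
# Generic sliding-window chunker (hypothetical helpers, not IncarnaMind's code).
from typing import Dict, List, Sequence, Tuple


def sliding_window_chunks(tokens: List[str], window: int, stride: int) -> List[Tuple[int, str]]:
    # Return (start_token_index, chunk_text) pairs. Consecutive chunks overlap by
    # window - stride tokens, so text cut at one border also appears in the next chunk.
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    if stride > window:
        raise ValueError("stride > window would skip tokens between chunks")
    if not tokens:
        return []
    chunks = []
    start = 0
    while True:
        chunks.append((start, " ".join(tokens[start:start + window])))
        if start + window >= len(tokens):  # this window already reaches the end
            break
        start += stride
    return chunks


def multi_granularity_chunks(
    text: str, sizes: Sequence[Tuple[int, int]] = ((64, 32), (256, 128))
) -> Dict[int, List[Tuple[int, str]]]:
    # Build fine-grained (small window) and coarse-grained (large window) chunk sets
    # from the same text, keyed by window size. The default sizes are illustrative.
    tokens = text.split()
    return {window: sliding_window_chunks(tokens, window, stride) for window, stride in sizes}


if __name__ == "__main__":
    words = "a b c d e f g h i j".split()
    print(sliding_window_chunks(words, window=4, stride=2))
    # [(0, 'a b c d'), (2, 'c d e f'), (4, 'e f g h'), (6, 'g h i j')]
```

A smaller stride means more overlap and therefore more chunks to embed and store; a larger window carries more context per chunk but dilutes precise matches, which is why small and large chunk sets are useful side by side.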

Installation is simple; you only need to run a few commands. Prerequisites:

- 3.8 ≤ Python < 3.11 with Conda

- OpenAI API Key or Anthropic Claude API Key

- And of course, your own documents.
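To confirm that the interpreter you plan to use falls within the supported range, a tiny check like the following can help. The helper name is hypothetical; it simply encodes the 3.8 ≤ Python < 3.11 requirement listed above.

```python
import sys


def python_version_supported(version_info=sys.version_info) -> bool:
    # Compare only (major, minor) against the README's range: 3.8 <= Python < 3.11.
    return (3, 8) <= tuple(version_info[:2]) < (3, 11)


if __name__ == "__main__":
    version = ".".join(str(part) for part in sys.version_info[:3])
    status = "supported" if python_version_supported() else "NOT supported"
    print(f"Python {version}: {status}")
```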

Clone the repository:

```shell
git clone https://github.com/junruxiong/IncarnaMind
cd IncarnaMind
```

Create a Conda virtual environment:

```shell
conda create -n IncarnaMind python=3.10
```

Activate the environment:

```shell
conda activate IncarnaMind
```

Install all requirements:

```shell
pip install -r requirements.txt
```

Set up your API keys in the configparser.ini file:

```ini
[tokens]
OPENAI_API_KEY = sk-(replace_me)
; and/or
ANTHROPIC_API_KEY = sk-(replace_me)
```

(Optional) Set up your custom parameters in the configparser.ini file:

```ini
[parameters]
PARAMETERS 1 = (replace_me)
PARAMETERS 2 = (replace_me)
...
PARAMETERS n = (replace_me)
```

Put all your files into the /data directory (give each file a clear, accurate name to maximize performance; you can delete the example files in /data first), then run the following command to ingest all data:

```shell
python docs2db.py
```

To start the conversation, run:

```shell
python main.py
```

Wait for the script to prompt for your input, as shown below:

```
Human:
```

When you start a chat, the system automatically generates an IncarnaMind.log file. To change the logging settings, edit the [logging] section of the configparser.ini file:

```ini
[logging]
enabled = True
level = INFO
filename = IncarnaMind.log
format = %(asctime)s [%(levelname)s] %(name)s: %(message)s
```

Known limitations:

- Citation is not supported in the current version but will be released soon.
- Limited asynchronous capabilities.

Planned features:

- Frontend UI
- OCR support
- Asynchronous optimization
- Support for open-source LLMs
- Support for more document formats
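IncarnaMind reads its API keys and logging settings from configparser.ini. Its own loader is not shown in this README, so the following is a minimal sketch using only Python's standard `configparser` and `logging` modules, assuming the [tokens] and [logging] layout from the installation steps; `load_config`, `read_api_keys`, and `setup_logging` are hypothetical names. One detail matters if you read the file yourself: the `format` value contains `%(...)s` placeholders, which configparser's default interpolation would try to resolve, so interpolation is disabled.

```python
# Hypothetical loader for configparser.ini; not IncarnaMind's actual code.
import configparser
import logging
from typing import Dict


def load_config(path: str = "configparser.ini") -> configparser.ConfigParser:
    # interpolation=None keeps "%(asctime)s ..." literal; the default
    # BasicInterpolation would raise InterpolationMissingOptionError on it.
    config = configparser.ConfigParser(interpolation=None)
    config.read(path)  # a missing file is silently skipped
    return config


def read_api_keys(path: str = "configparser.ini") -> Dict[str, str]:
    # Option lookups are case-insensitive, so "OPENAI_API_KEY" matches as written.
    config = load_config(path)
    keys = {}
    for name in ("OPENAI_API_KEY", "ANTHROPIC_API_KEY"):
        value = config.get("tokens", name, fallback="").strip()
        if value and "replace_me" not in value:  # skip unfilled placeholders
            keys[name] = value
    return keys


def setup_logging(path: str = "configparser.ini") -> bool:
    # Returns False when logging is disabled or the [logging] section is absent.
    config = load_config(path)
    if not config.getboolean("logging", "enabled", fallback=False):
        return False
    section = config["logging"]
    logging.basicConfig(  # no effect if the root logger already has handlers
        filename=section.get("filename", "IncarnaMind.log"),
        level=getattr(logging, section.get("level", "INFO").upper(), logging.INFO),
        format=section.get("format", "%(asctime)s [%(levelname)s] %(name)s: %(message)s"),
    )
    return True
```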

If you want to cite our work, please use the following bibtex entry:

```bibtex
@misc{IncarnaMind2023,
  author = {Junru Xiong},
  title = {IncarnaMind},
  year = {2023},
  publisher = {GitHub},
  journal = {GitHub Repository},
  howpublished = {\url{https://github.com/junruxiong/IncarnaMind}}
}
```