Overview

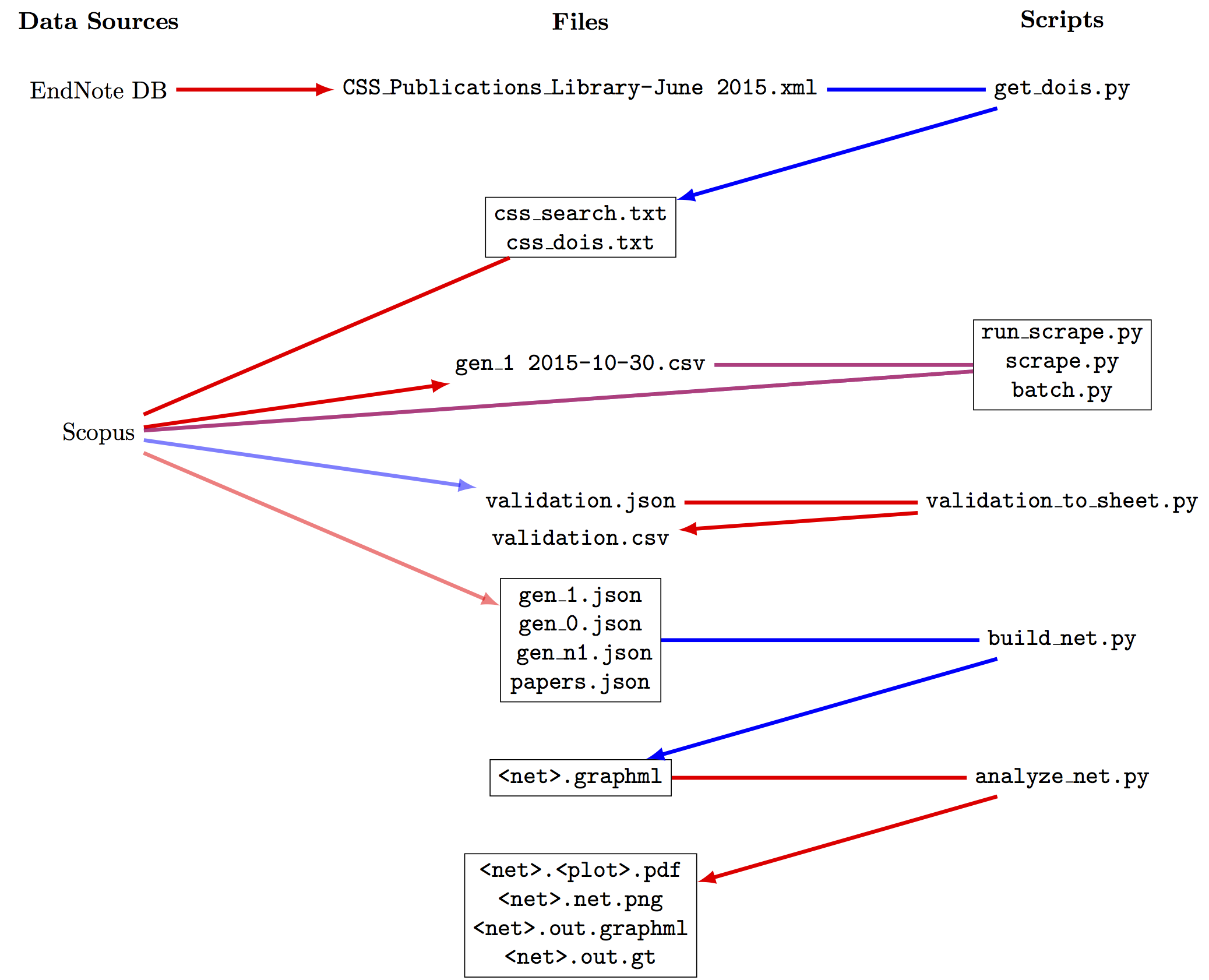

This repository contains several tools for citation network construction and analysis, originally developed for the project described in bibliometrics.pdf. The folders contain a series of Python 3 scripts, intended to be used sequentially as follows:

- `get_dois`: Starting with an `xml` file exported from EndNote, extract a list of DOIs and a search string that can be copied and pasted directly into Scopus' advanced search box.

- In Scopus, manually retrieve "generation 1." (See the project description for an explanation of this term.)

- `scrape`: Starting with the `csv` file for generation 1, retrieve the desired metadata. `scrape.get_meta_by_doi`, which actually builds the query for the Scopus API, assumes that an API key has been defined as `MY_API_KEY` in `api_key.py`. A new Scopus API key can be generated by registering for free on the Scopus API page.
    - My project required retrieving metadata for something like 30,000-50,000 articles.
    - Since the Scopus API being used has a cap of something like 2,000 articles per week, I contacted Scopus to arrange for a higher cap. It took a few weeks to negotiate the raise.
    - The raised cap was still too low to retrieve all of the required metadata in one run. The module `batch.py` was written to break the retrieval list into manageable chunks. `run_scrape.py` actually works through the metadata retrieval process.

- `build_net`: Using the metadata retrieved from Scopus, build citation and coauthor networks. Each of the resulting `graphml` files contains a single connected network.
    - Installing `graph_tool` is nontrivial. However, especially if compiled with the `--enable-openmp` flag, it is significantly faster than any of the other major Python network analysis packages.

- `analyze_net`: Using the `graphml` files and two "comparison networks," conduct the actual network analysis.

- `ida.R`: IMO, Python is better for manipulating complex data structures, but R has better tools for generating publication-quality tables and plots, and a nicer interactive IDE. This R file does so using the `graphml` files generated by `analyze_net`.

- In review, an ad hoc text analysis of the core paper abstracts was added. This analysis, and the supplement text, are found in `text analysis`.
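
As a rough illustration of two of the steps above (building a search string from a list of DOIs, and breaking a long retrieval list into chunks), here is a minimal sketch. It is not the code in `get_dois` or `batch.py`: the function names `build_scopus_query` and `chunk`, the example DOIs, and the chunk size are all hypothetical, and the sketch assumes only that Scopus' advanced search accepts `DOI(...)` terms joined with `OR`.

```python
from typing import Iterator, List, Sequence


def build_scopus_query(dois: Sequence[str]) -> str:
    """Join DOIs into one advanced-search string: DOI(a) OR DOI(b) ...

    Hypothetical helper; the format produced by get_dois may differ.
    """
    return " OR ".join(f"DOI({doi})" for doi in dois)


def chunk(items: Sequence[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of `items`, each at most `size` long.

    Hypothetical helper; batch.py may split the list differently.
    """
    if size < 1:
        raise ValueError("size must be a positive integer")
    for start in range(0, len(items), size):
        yield list(items[start:start + size])


# Placeholder DOIs, for illustration only.
dois = ["10.1000/a", "10.1000/b", "10.1000/c", "10.1000/d", "10.1000/e"]

query = build_scopus_query(dois[:2])
print(query)  # DOI(10.1000/a) OR DOI(10.1000/b)

batches = list(chunk(dois, 2))
print(len(batches))  # 3
```

In practice the chunk size would be chosen to fit under whatever weekly cap applies to the API key, so that each chunk can be retrieved in a separate run.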