Collecting, parsing and analyzing machine data is very common these days. Applications like Sumo Logic, Splunk or Loggly help tens of thousands of organizations make sense of data (often in unpredictable formats) and gain business and operational insights that can help diagnose problems across services, detect security threats, and much more.

This challenge is all about creating a 100% serverless log parser using CloudWatch Logs, Lambda, and Athena.

We are providing you with a log generator that uses the Twitter Streaming API to create logs on your behalf; you are responsible for parsing the data so that it can be queried using Athena.

The following tools and accounts are required to complete these instructions.

The following steps walk you through setting up a CloudWatch log group and the provided Lambda function that will parse the log stream.

In the LogGenerator directory, paste one of the following credential sets into `credentials.json`.

{

"consumer_key" : "AvWcq75KC8jJuKzNF7TOiLv5z",

"consumer_secret" : "okVzxcW7H7FV9Wn7HUzl3t2x2L7kxpCztWAhht58aMXWqHXVUW",

"access_token" : "38059844-yqm6BewaPT03c9GLaIACUcBNZnnyCmnS0OmNQ9PWs",

"access_secret" : "IVM8XlWF35SxBVPacQ2cKCBoKO6brImtzLqlZZjyYbSXD"

}

{

"consumer_key" : "kOr9jFO0N0oFWEilI74ZTmaop",

"consumer_secret" : "eI1Tjv0tgO9nkYxAJfMslVJRRwJqKsosFwEjEbewYOZDRZhGll",

"access_token" : "820470222-yHssA3Qt7qNDX5A47VrR0UQJXuWARlcTL6Gok9Ut",

"access_secret" : "DK1d9WzGKBEAqH0HzFTChDdgsxUpjMhcUzDA2nmy6j0xX"

}

{

"consumer_key" : "Dcy5c0uufheP6eSbjvnlzyceP",

"consumer_secret" : "AmxndTnMunx2K2jroikBK5UiG8dABbtTkcl0pvdOMTY1nwHZpV",

"access_token" : "897963891975634944-PITAa4klOVP7fVF2nJ8pWVpX8XAA6AV",

"access_secret" : "nOlyeblXqCAqW9k1H4NorGWUWR1Z9DqFXUgFYjgbGBxs6"

}

{

"consumer_key" : "KdsdVpbRI5hJY8iYlje3tYbTn",

"consumer_secret" : "KF6Sck4fyq1544kBfs8VaGF0qoFwygYykZ1HSrVjjeREauJfV9",

"access_token" : "717226425745612800-iTJSiXRe3Wg4IdXrN88kJZfOrkZRRAK",

"access_secret" : "P2IF0vQOHWpyFdkDGPIpg1DzILszi9bvcMoUzjZNqmIBq"

}

NOTE: Only one Twitter stream is allowed per set of credentials. To use your own credentials instead, create a Twitter application:

- Sign into Twitter and navigate to https://apps.twitter.com/

- Click on Create New App

- Fill in the name, description, and a placeholder URL, then create the Twitter application

- Navigate to the `Keys and Access Tokens` tab and click `Create my access token`

- Save the following values:

  - Consumer Key

  - Consumer Secret

  - Access Token

  - Access Token Secret

- Open `/LogGenerator/credentials.json` and fill in the fields (`consumer_key`, `consumer_secret`, `access_token`, `access_secret`)

By default, the project uses the `lambdasharp` AWS profile. If you need to set up a new profile, follow these steps.

- Create a `lambdasharp` profile: `aws configure --profile lambdasharp`

- Configure the profile with the AWS credentials and region you want to use

Set up the CloudWatch log group `/lambda-sharp/log-parser/dev` and the log stream `test-log-stream` via the AWS Console or by executing the following AWS CLI commands.

aws logs create-log-group --log-group-name '/lambda-sharp/log-parser/dev' --profile lambdasharp

aws logs create-log-stream --log-group-name '/lambda-sharp/log-parser/dev' --log-stream-name test-log-stream --profile lambdasharp

The LogParser Lambda function requires an IAM role. You can create the `LambdaSharp-LogParserRole` role via the AWS Console or by executing the following AWS CLI commands.

aws iam create-role --role-name LambdaSharp-LogParserRole --assume-role-policy-document file://assets/lambda-role-policy.json --profile lambdasharp

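aws iam attach-role-policy --role-name LambdaSharp-LogParserRole --policy-arn arn:aws:iam::aws:policy/AWSLambdaFullAccess --profile lambdasharp

Create the S3 bucket that will hold the parsed logs. Bucket names must be globally unique and lowercase; if your region is `us-east-1`, omit the `--create-bucket-configuration` option.

aws s3api create-bucket --bucket "<USERNAME>-lambda-sharp-s3-logs" --create-bucket-configuration LocationConstraint=<AWS_REGION> --profile lambdasharp

Deploy the LogParser Lambda function:

- Navigate into the LogParser folder: `cd LogParser`

- Run: `dotnet restore`

- Edit `aws-lambda-tools-defaults.json` and make sure its settings (for example the profile, region, and `function-role`) match your setup

- Run: `dotnet lambda deploy-function`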

- In the AWS Console, open the Lambda console

- Find the deployed function and open it to find the `Triggers` tab

- Add a trigger and select `CloudWatch Logs` as the trigger

- Select the log group `/lambda-sharp/log-parser/dev` and enter a filter name

The included LogGenerator application can stream live tweets directly into the CloudWatch log group configured above.

NOTE: The log group and stream names that the generator uses can be changed by editing the values in the `LogGenerator/application_vars.json` file.

- Navigate into the LogGenerator folder: `cd LogGenerator`

- Run: `./lambdasharp generate logs <filter>`

  - `filter` is a keyword used to select related tweets, e.g. `cats`, `dogs`, `running`, `music`, etc.

  - This streams log entries continuously to your CloudWatch log stream

  - Press `Ctrl-C` to stop the stream

- OR run: `./lambdasharp generate sample <number> <filter>`

  - `number` is the number of tweets you want to stream to CloudWatch Logs

Data will begin streaming from Twitter into the CloudWatch log.

Note: CloudWatch log events must be sent to a log stream in chronological order. You will run into issues if multiple instances of the LogGenerator are streaming to the same log stream at the same time.

The data the Lambda function receives is base64-encoded and gzip-compressed. You must implement the `DecompressLogData` method so that it returns the JSON containing the log records.

See the documentation for more information.
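A CloudWatch Logs subscription hands the batch to Lambda as a base64 string (under `awslogs.data` in the event) that wraps gzip-compressed JSON. The project's `DecompressLogData` lives in the .NET Lambda function; the Python below is only a sketch of the same steps (base64-decode, gunzip, parse JSON). The function name `decompress_log_data` and the sample payload are hypothetical.

```python
import base64
import gzip
import json


def decompress_log_data(encoded: str) -> dict:
    """Decode a CloudWatch Logs subscription payload (base64 + gzip) into a dict."""
    compressed = base64.b64decode(encoded)  # step 1: undo the base64 encoding
    raw_json = gzip.decompress(compressed)  # step 2: undo the gzip compression
    return json.loads(raw_json)             # step 3: parse the JSON with the log records


if __name__ == "__main__":
    # Hypothetical payload built the way CloudWatch Logs does it: JSON -> gzip -> base64.
    sample = {
        "messageType": "DATA_MESSAGE",
        "logGroup": "/lambda-sharp/log-parser/dev",
        "logStream": "test-log-stream",
        "logEvents": [{"id": "1", "timestamp": 1500000000000, "message": "hello"}],
    }
    encoded = base64.b64encode(gzip.compress(json.dumps(sample).encode("utf-8"))).decode("ascii")
    for event in decompress_log_data(encoded)["logEvents"]:
        print(event["message"])  # prints "hello"
```

The log entries you need to parse are the `message` fields inside `logEvents`.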

Each log entry contains several sections separated by a special character (you must find out what this character is). Each section contains useful information about the tweet and the user, such as the user name, total number of tweets, number of retweets, creation date, and more. Extract this information and turn it into a JSON object.

Repeat these steps for each entry in your logs.

NOTE: Not all tweets have the same sections, for example, not all tweets have location information. You must account for missing data!
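A minimal sketch of per-entry parsing, in Python for illustration. Finding the real separator is part of the challenge, so `SEPARATOR` is a placeholder; the `label:value` section layout, the labels, and the assumption that dates use Twitter's `created_at` format are all hypothetical. It shows the shape only: split on the separator, keep the sections you want, convert values to match the Athena column types, and emit `null` for missing sections. For the `timestamp` column, the Hive-style `yyyy-MM-dd HH:mm:ss` layout is a safe choice.

```python
import json
from datetime import datetime, timezone
from typing import Callable, Dict, Optional

SEPARATOR = "|"  # HYPOTHETICAL placeholder: use the character you find in the log entries


def to_athena_timestamp(value: str) -> str:
    """Convert an (assumed) Twitter-style date to Hive's 'yyyy-MM-dd HH:mm:ss' in UTC."""
    parsed = datetime.strptime(value, "%a %b %d %H:%M:%S %z %Y")
    return parsed.astimezone(timezone.utc).strftime("%Y-%m-%d %H:%M:%S")


# HYPOTHETICAL section labels, each mapped to a converter matching its Athena column type.
FIELDS: Dict[str, Callable[[str], object]] = {
    "user_name": str,
    "friends": int,
    "date_created": to_athena_timestamp,
}


def parse_entry(message: str, separator: str = SEPARATOR) -> Dict[str, Optional[object]]:
    """Turn one log entry into a dict; any section that is absent becomes None."""
    sections: Dict[str, str] = {}
    for section in message.split(separator):
        # HYPOTHETICAL 'label:value' layout; partition splits on the first ':' only,
        # so values containing ':' (such as times) stay intact.
        label, found, value = section.partition(":")
        if found:
            sections[label.strip()] = value.strip()
    record: Dict[str, Optional[object]] = {}
    for name, convert in FIELDS.items():
        raw = sections.get(name)
        record[name] = convert(raw) if raw is not None else None  # missing -> null
    return record


if __name__ == "__main__":
    entry = "user_name:alice|friends:42|date_created:Tue Aug 01 12:00:00 +0000 2017"
    print(json.dumps(parse_entry(entry)))
    # {"user_name": "alice", "friends": 42, "date_created": "2017-08-01 12:00:00"}
    print(json.dumps(parse_entry("user_name:bob")))
    # {"user_name": "bob", "friends": null, "date_created": null}
```

Keeping every expected field in the output, with `null` for gaps, means each JSON line has the same keys regardless of which sections a tweet had.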

Put each JSON object in a file in S3. Each JSON object must be a single line, and each file can contain one or more lines.

This is important because Athena will count each line as a new record.
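Since Athena reads one record per line, a file body is just the JSON objects joined by newlines (the JSON Lines layout). `json.dumps` without `indent` never emits a raw newline (newlines inside strings are escaped as `\n`), so each object stays on a single line. The helper names and the key naming scheme below are hypothetical; the `users/` prefix is taken from the table `LOCATION` in the Athena example. Uploading the body would then be one S3 `PutObject` call, e.g. boto3's `put_object(Bucket=..., Key=..., Body=...)`.

```python
import json
import uuid
from datetime import datetime, timezone
from typing import Iterable, Mapping


def to_json_lines(records: Iterable[Mapping]) -> str:
    """Serialize records as JSON Lines: one compact JSON object per line."""
    return "".join(json.dumps(record) + "\n" for record in records)


def object_key(prefix: str = "users/") -> str:
    """HYPOTHETICAL naming scheme: a unique key under the table's S3 prefix."""
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    return f"{prefix}{stamp}-{uuid.uuid4().hex}.json"


if __name__ == "__main__":
    body = to_json_lines([
        {"user_name": "alice", "friends": 42, "date_created": None},
        {"user_name": "bob", "bio": "line one\nline two"},  # embedded newline gets escaped
    ])
    print(body, end="")  # two lines, one JSON object each
    print(object_key())
```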

BONUS 1: Find out a "serverless" way to accumulate records across different executions of the lambda function and save them to S3 as a single file!

BONUS 2: Compress the file before putting it into S3. (Bonus 1 and 2 are not mutually exclusive)
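For BONUS 2, a minimal sketch (Python, for illustration) of gzip-compressing a JSON Lines body before upload. Athena can query gzip-compressed JSON, and for JSON data it determines the compression from the file extension, so the object key should end in `.gz` (for example a hypothetical `users/batch.json.gz`). The function name is hypothetical.

```python
import gzip
import json
from typing import Iterable, Mapping


def gzip_json_lines(records: Iterable[Mapping]) -> bytes:
    """Return gzip-compressed JSON Lines bytes, ready to upload as a .json.gz object."""
    body = "".join(json.dumps(record) + "\n" for record in records)
    return gzip.compress(body.encode("utf-8"))


if __name__ == "__main__":
    records = [{"user_name": "alice", "friends": 42}] * 100
    blob = gzip_json_lines(records)
    # Round trip to show nothing is lost; repetitive log records compress well.
    restored = gzip.decompress(blob).decode("utf-8").splitlines()
    print(len(restored), "records in", len(blob), "compressed bytes")
```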

Set up an Athena database called `lambdasharp_logs`, and create a table that defines the schema of the parsed JSON. The following is a minimal example; add fields for the other information found in the log streams. Run the two statements separately, since Athena executes one statement per query.

CREATE DATABASE lambdasharp_logs;CREATE EXTERNAL TABLE IF NOT EXISTS lambdasharp_logs.users (

`user_name` string,

`friends` int,

`date_created` timestamp

)

ROW FORMAT SERDE 'org.openx.data.jsonserde.JsonSerDe'

WITH SERDEPROPERTIES (

'serialization.format' = '1'

) LOCATION 's3://<USERNAME>-lambda-sharp-s3-logs/users/'

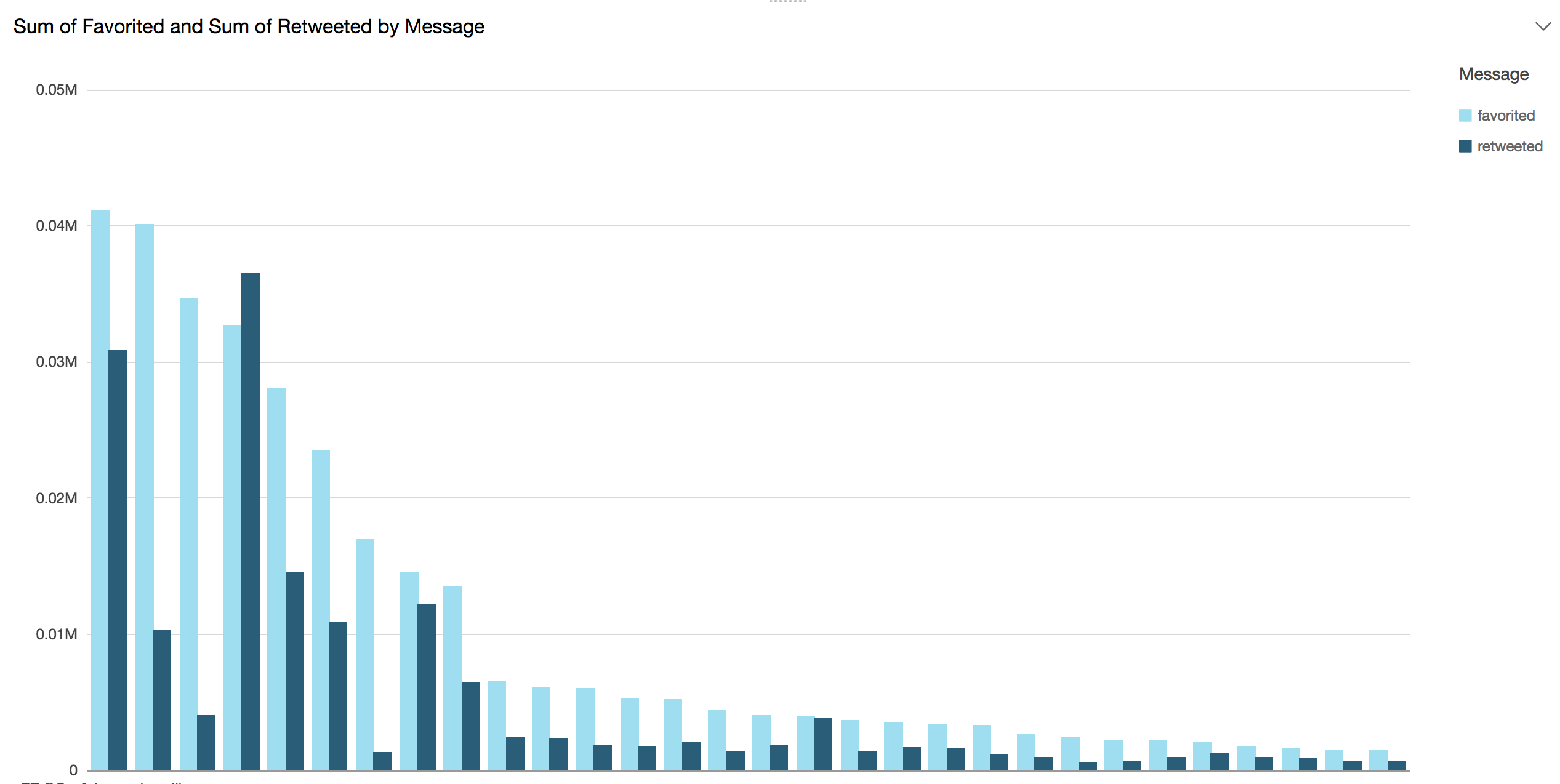

TBLPROPERTIES ('has_encrypted_data'='false');You can use Amazon QuickSight to create visualizations of many data sources including Athena.

NOTE: To use QuickSight, you must complete a one-click sign-up. It is free for 60 days and $9 after that.

See docs for more information.

- Make sure you are in the same region as the rest of your project

- Click on `Connect to another data source...`

- Select Athena and give your data source a name. Click `Create`

- Select the Athena database `lambdasharp_logs`

- Select the table you want to visualize, e.g. `users` or `tweet_info`

- Select `Direct query your data` and click `Create`

You are now ready to create different visualizations based on the data you have in Athena.

Happy Hacking!

- Erik Birkfeld for organizing.

- MindTouch for hosting.

- Copyright (c) 2017 Juan Manuel Torres, Katherine Marino, Daniel Lee

- MIT License