This project was developed as part of the DD2424 Deep Learning in Data Science course at KTH Royal Institute of Technology, spring 2017.

We attach the full report and our slides.

| Author | GitHub |
|---|---|
| Federico Baldassarre | baldassarreFe |
| Diego Gonzalez Morin | diegomorin8 |
| Lucas Rodés Guirao | lucasrodes |

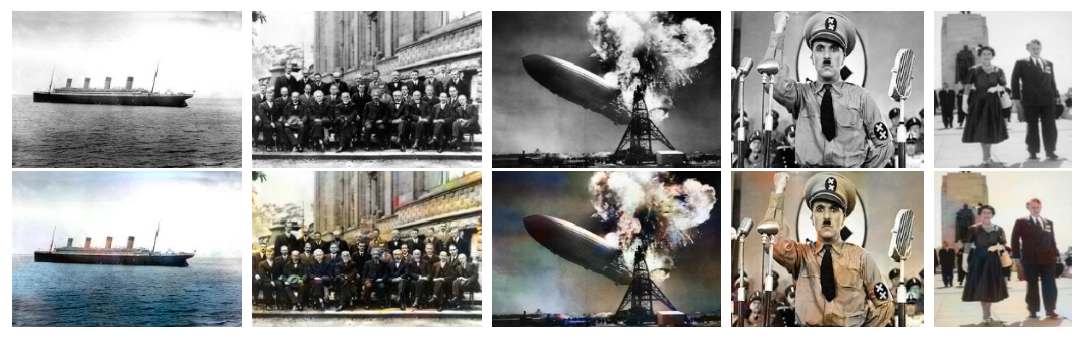

We were inspired by the work of Richard Zhang, Phillip Isola and Alexei A. Efros, who built a network able to colorize black-and-white images (blog post and paper). They trained it on ImageNet pictures converted to grayscale, using the original color images as output targets.
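The following minimal sketch shows how such (input, target) training pairs can be built from color images. It assumes RGB images with values in [0, 1] and uses the ITU-R BT.601 luminance weights for the grayscale conversion; this is an illustrative choice, not necessarily the conversion used by Zhang et al. or by this project (colorization work often uses the L channel of the CIE Lab color space instead). The function name `make_training_pair` is hypothetical.

```python
import numpy as np

# Assumption: ITU-R BT.601 luminance weights for RGB -> gray.
# Other pipelines may use the L channel of CIE Lab instead.
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])

def make_training_pair(rgb):
    """Hypothetical helper: turn one RGB image (H, W, 3, values in [0, 1])
    into an (input, target) pair of a gray image and the original colors."""
    rgb = np.asarray(rgb, dtype=np.float64)
    gray = rgb @ LUMA_WEIGHTS          # weighted sum over the channel axis -> (H, W)
    return gray[..., np.newaxis], rgb  # input (H, W, 1), target (H, W, 3)

# Usage on a random stand-in for a real photo
image = np.random.default_rng(0).random((4, 4, 3))
x, y = make_training_pair(image)
print(x.shape, y.shape)  # (4, 4, 1) (4, 4, 3)
```

The network only ever sees `x`; the colors in `y` are what it has to learn to reproduce.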

We then came across the experiments of Satoshi Iizuka, Edgar Simo-Serra and Hiroshi Ishikawa, who combined image classification features with the raw pixels fed to the network, improving the overall results (YouTube review, blog post and paper).

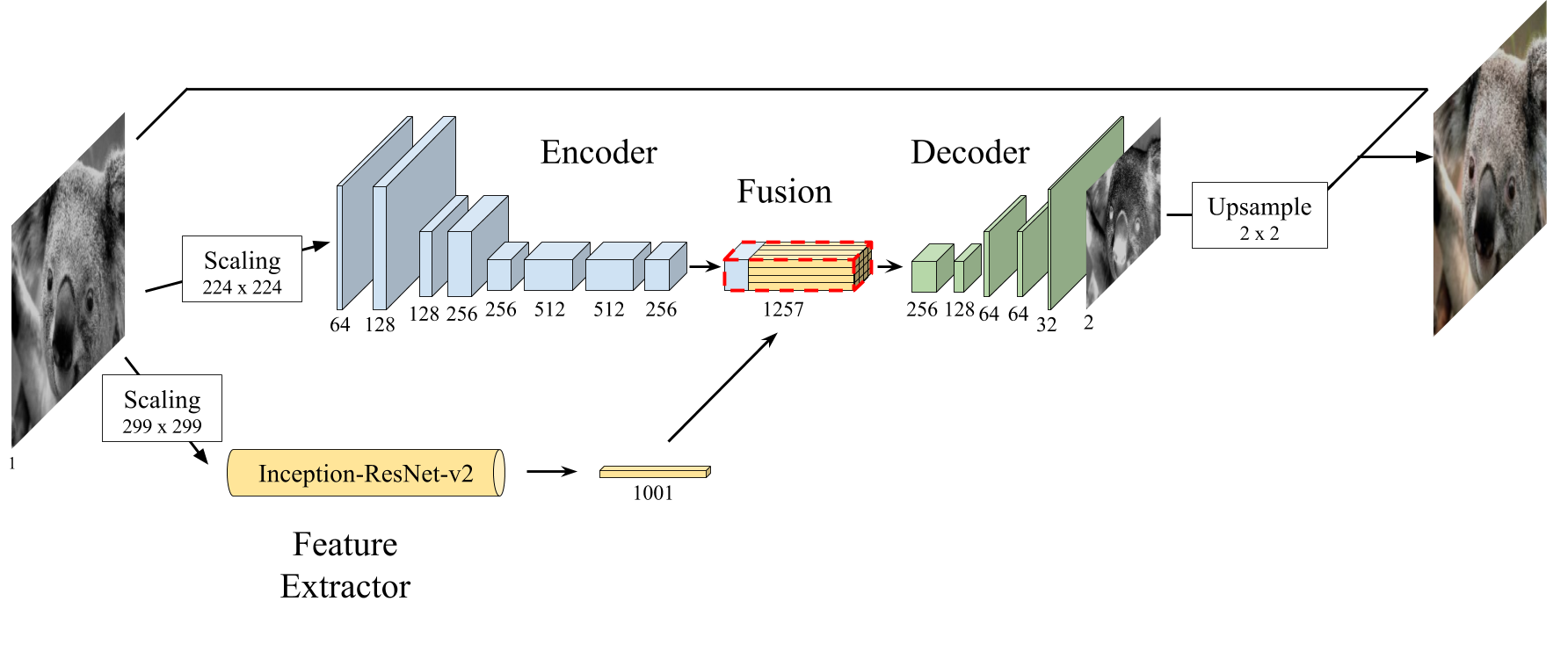

In our work we implement a similar architecture, enriching the pixel information of each image with semantic information about its content. However, object recognition is already a complex problem that would be worth a project of its own, so we do not build an image classifier from scratch. Instead, we use a network that has already been trained on a large variety of images: Google’s Inception-ResNet-v2, trained on ImageNet.

The hidden layers of such models learn a semantic representation of the image, which the final layer (fully connected + softmax) then uses to label the objects in the image. By “cutting” the model at one of its final layers, we obtain a high-dimensional representation of image features that our network uses to perform the colorization task (TensorFlow tutorial on transfer learning, another tutorial and arXiv paper).
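To illustrate what “cutting” a classifier means, here is a minimal NumPy sketch. The tiny network below is a hypothetical stand-in with random weights and toy sizes, not Inception-ResNet-v2: `classify` runs the full model up to the softmax, while `embed` stops before the final fully connected layer and returns the feature vector instead of class probabilities. In a transfer-learning setting these weights would come pre-trained and stay frozen.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy classifier: two hidden layers + fully connected/softmax head.
# Sizes (64 -> 32 -> 16 -> 10 classes) are illustrative only.
W1 = rng.standard_normal((64, 32))
W2 = rng.standard_normal((32, 16))
W_out = rng.standard_normal((16, 10))

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def embed(x):
    """Model 'cut' before its final layer: one 16-dim feature vector per input."""
    return relu(relu(x @ W1) @ W2)

def classify(x):
    """Full model: the final layer maps the embedding to class probabilities."""
    return softmax(embed(x) @ W_out)

batch = rng.standard_normal((5, 64))  # 5 flattened toy "images"
print(classify(batch).shape)  # (5, 10)
print(embed(batch).shape)     # (5, 16)
```

Defining `classify` on top of `embed` makes the point explicit: the embedding is exactly the information the classifier relies on, minus the final labeling step.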

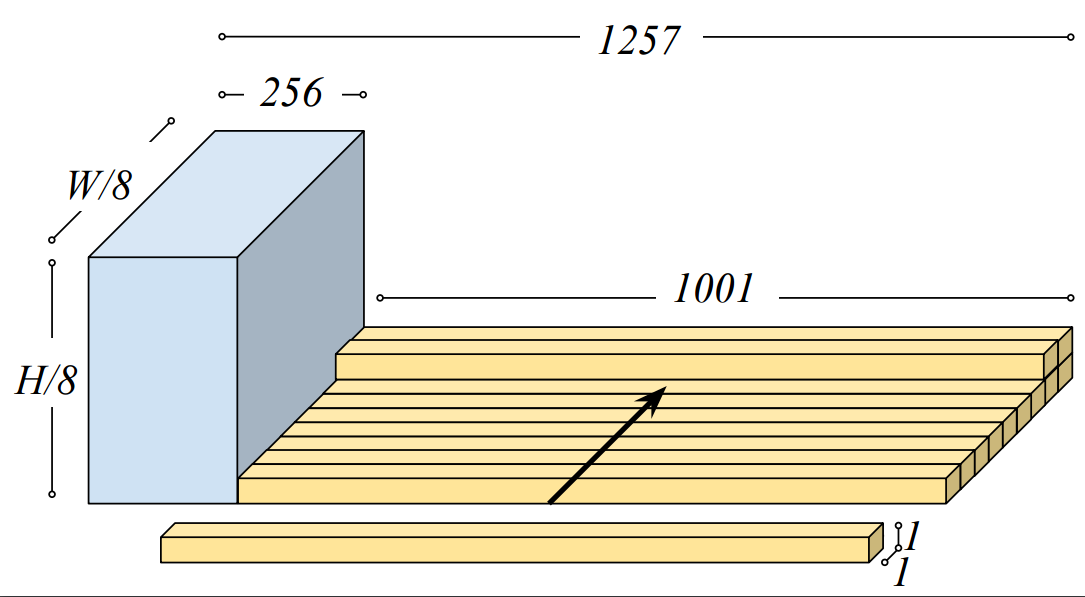

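The fusion between the fixed-size embedding and the intermediate result of the convolutions is performed by replication and stacking, as described in the original paper: the embedding is replicated at every spatial location of the convolutional feature map and stacked with it along the depth axis.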
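A minimal NumPy sketch of replication and stacking, assuming channels-last tensors. The function name `fuse` and the sizes in the usage example (an 8×8×256 feature map and a 1000-dim embedding) are illustrative assumptions, not the project's actual layer dimensions.

```python
import numpy as np

def fuse(features, embedding):
    """Hypothetical fusion helper.

    features:  (batch, H, W, C) intermediate result of the convolutions
    embedding: (batch, D)       fixed-size image-level feature vector
    returns:   (batch, H, W, C + D)
    """
    batch, h, w, _ = features.shape
    # Replication: (batch, D) -> (batch, 1, 1, D) -> (batch, H, W, D)
    tiled = np.broadcast_to(embedding[:, np.newaxis, np.newaxis, :],
                            (batch, h, w, embedding.shape[-1]))
    # Stacking: concatenate along the depth (channel) axis
    return np.concatenate([features, tiled], axis=-1)

features = np.zeros((2, 8, 8, 256))
embedding = np.random.default_rng(1).random((2, 1000))
fused = fuse(features, embedding)
print(fused.shape)  # (2, 8, 8, 1256)
```

After fusion, every spatial location carries both its local convolutional features and the same global description of the image, so subsequent layers can use the semantic information wherever it is needed.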

We use the mean squared error (MSE) as the loss function.
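For reference, the MSE between predicted and target color values can be written in a few lines of NumPy. This is a minimal sketch that averages over every pixel, channel and image in the batch; the exact reduction used during training (for example, per-image averaging first) is an assumption here.

```python
import numpy as np

def mse_loss(predicted, target):
    """Mean squared error averaged over all elements (pixels, channels, batch)."""
    predicted = np.asarray(predicted, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    return float(np.mean((predicted - target) ** 2))

pred = np.array([[0.5, 0.25], [0.0, 0.0]])
true = np.array([[0.5, 0.0], [0.25, 0.0]])
print(mse_loss(pred, true))  # 0.03125
```

Here two of the four values are off by 0.25, so the loss is (0.0625 + 0.0625) / 4 = 0.03125.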

Training data for this experiment could come from any source, but we prefer images from ImageNet, which is nowadays considered the de facto reference for image tasks. Using ImageNet also makes our experiments easier to reproduce than using our own images would.

Our project is implemented in Python 3, which is widely used in the data science and machine learning communities. For machine learning, deep learning and image manipulation we rely on Keras/TensorFlow, NumPy/SciPy and Scikit. Refer to requirements.txt for a complete list of dependencies.