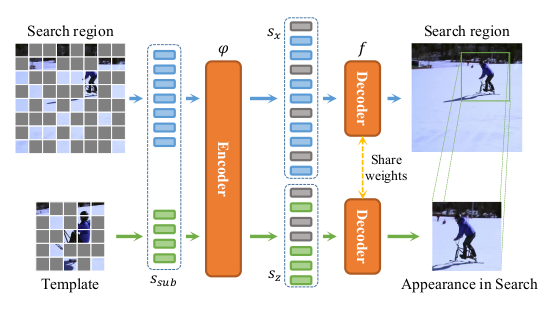

Representation Learning for Visual Object Tracking by Masked Appearance Transfer

- Clone the repository locally

- Create a conda environment

  ```bash
  conda env create -f env.yaml
  conda activate mat
  pip install --upgrade git+https://github.com/got-10k/toolkit.git@master
  # (You can use `pipreqs ./root` to analyse the requirements of this project.)
  ```

- Prepare the training data:

  We use LMDB to store the training data. Please check `./data/parse_<DATA_NAME>` and generate the LMDB datasets.

- Specify the paths in `./lib/register/paths.py`.
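Before launching training, it can help to confirm that every configured dataset directory actually exists. The following is a minimal sketch only: the `DATASET_ROOTS` dictionary, its keys, and its example paths are hypothetical placeholders, and the real variable names in `./lib/register/paths.py` may differ.

```python
from pathlib import Path

# Hypothetical dataset roots for illustration only; replace them with the
# values you actually set in ./lib/register/paths.py.
DATASET_ROOTS = {
    "got10k": "/data/lmdb/got10k",
    "lasot": "/data/lmdb/lasot",
}


def missing_roots(roots):
    """Return the sorted names whose configured directory does not exist."""
    return sorted(name for name, root in roots.items() if not Path(root).is_dir())


if __name__ == "__main__":
    for name in missing_roots(DATASET_ROOTS):
        print(f"missing dataset directory for {name}: {DATASET_ROOTS[name]}")
```

Running the script prints one line per dataset whose directory is missing and prints nothing when all paths resolve.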

All of our models are trained on a single machine with two RTX3090 GPUs. For distributed training on a single node with 2 GPUs:

- MAT pre-training

  ```bash
  python -m torch.distributed.launch --nproc_per_node=2 train.py --experiment=translate_template --train_set=common_pretrain
  ```

- Tracker training

  Modify the `cfg.model.backbone.weights` in `./config/cfg_translation_track.py` to be the last checkpoint of the MAT pre-training.

  ```bash
  python -m torch.distributed.launch --nproc_per_node=2 train.py --experiment=translate_track --train_set=common
  ```

We provide a multi-process testing script for evaluation on several benchmarks.

Please modify the paths to your datasets in `./lib/register/paths.py`.

Download this checkpoint and put it into `./checkpoints/translate_track_common/`, and download this checkpoint and put it into `./checkpoints/translate_template_common_pretrain/`.
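The two directory names above appear to follow an `<experiment>_<train_set>` pattern (`translate_track` + `common`, `translate_template` + `common_pretrain`). Assuming that pattern holds, the sketch below builds the expected directory and picks the most recently modified checkpoint in it, which may also be handy when setting `cfg.model.backbone.weights`. The helper names and the `*.pth` file pattern are assumptions for illustration, not part of this repository.

```python
from pathlib import Path
from typing import Optional


def checkpoint_dir(experiment: str, train_set: str, root: str = "./checkpoints") -> Path:
    """Build the checkpoint directory, assuming the <experiment>_<train_set> pattern."""
    return Path(root) / f"{experiment}_{train_set}"


def latest_checkpoint(directory, pattern: str = "*.pth") -> Optional[Path]:
    """Return the most recently modified file matching `pattern`, or None.

    The "*.pth" extension is an assumption; adjust it to the files you have.
    """
    files = [p for p in Path(directory).glob(pattern) if p.is_file()]
    return max(files, key=lambda p: p.stat().st_mtime, default=None)


if __name__ == "__main__":
    for experiment, train_set in [
        ("translate_track", "common"),
        ("translate_template", "common_pretrain"),
    ]:
        directory = checkpoint_dir(experiment, train_set)
        print(directory, "->", latest_checkpoint(directory))
```

Selecting by modification time is one simple choice; if your checkpoint file names encode an epoch number, sorting on that number would be more robust to files being copied or touched.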

```bash
python test.py --gpu_id=0,1 --num_process=0 --experiment=translate_track --train_set=common --benchmark=lasot --vis
```

```bibtex
@InProceedings{Zhao_2023_CVPR,
    author    = {Zhao, Haojie and Wang, Dong and Lu, Huchuan},
    title     = {Representation Learning for Visual Object Tracking by Masked Appearance Transfer},
    booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
    month     = {June},
    year      = {2023},
    pages     = {18696-18705}
}
```

- Thanks for the great MAE, MixFormer, pysot.

- For data augmentation, we use Albumentations.

This work is released under the GPL 3.0 license. Please see the LICENSE file for more information.