🏷️ Recognize Anything: A Strong Image Tagging Model & Tag2Text: Guiding Vision-Language Model via Image Tagging

Official PyTorch Implementation of the Recognize Anything Model (RAM) and the Tag2Text Model.

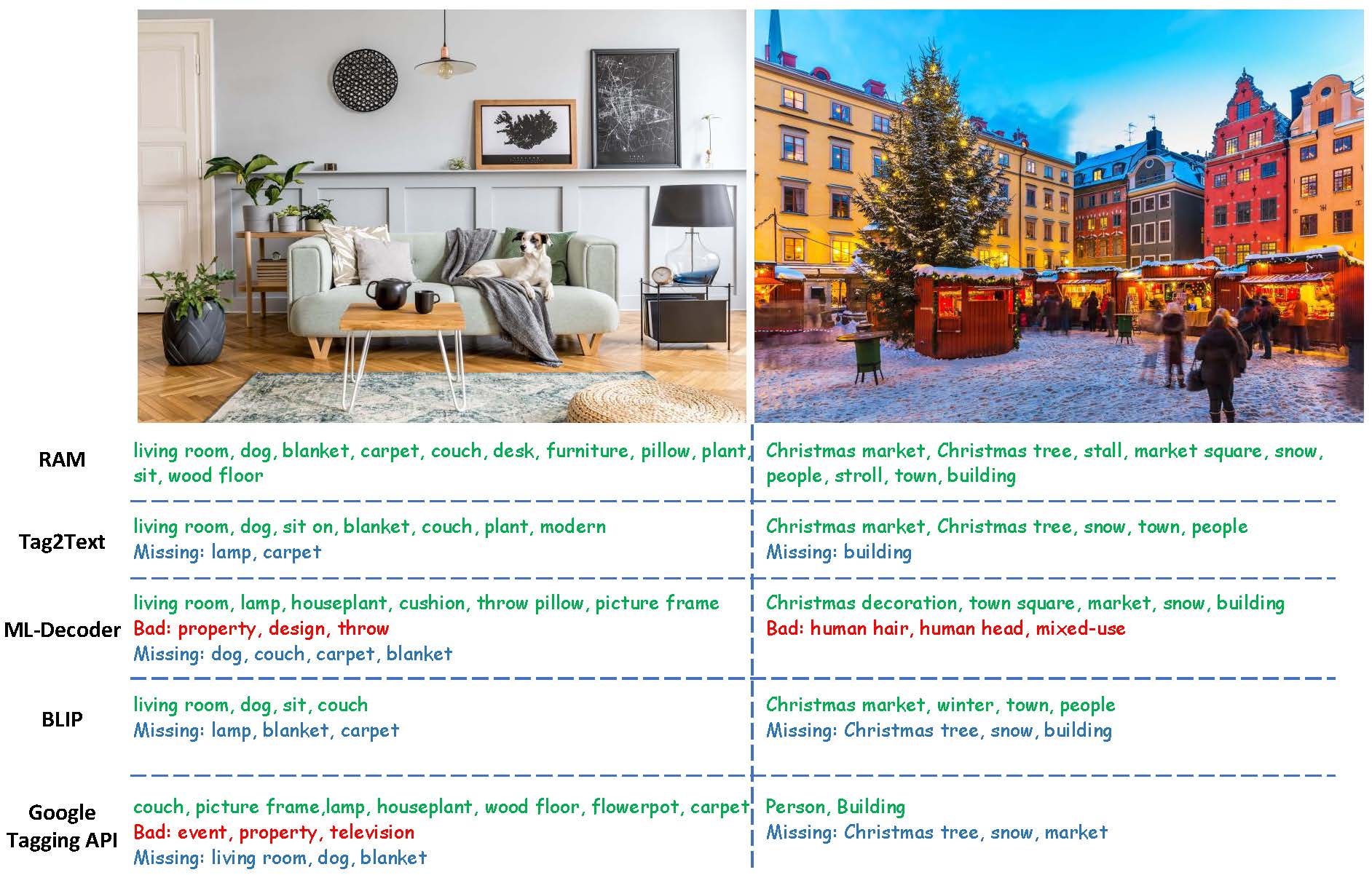

- RAM is an image tagging model, which can recognize any common category with high accuracy.

- Tag2Text is a vision-language model guided by tagging, which supports captioning, retrieval, and tagging.

Welcome to try our RAM & Tag2Text web Demo! 🤗

Both Tag2Text and RAM exhibit strong recognition ability. We have combined Tag2Text and RAM with localization models (Grounding-DINO and SAM) to build a strong visual semantic analysis pipeline in the Grounded-SAM project.

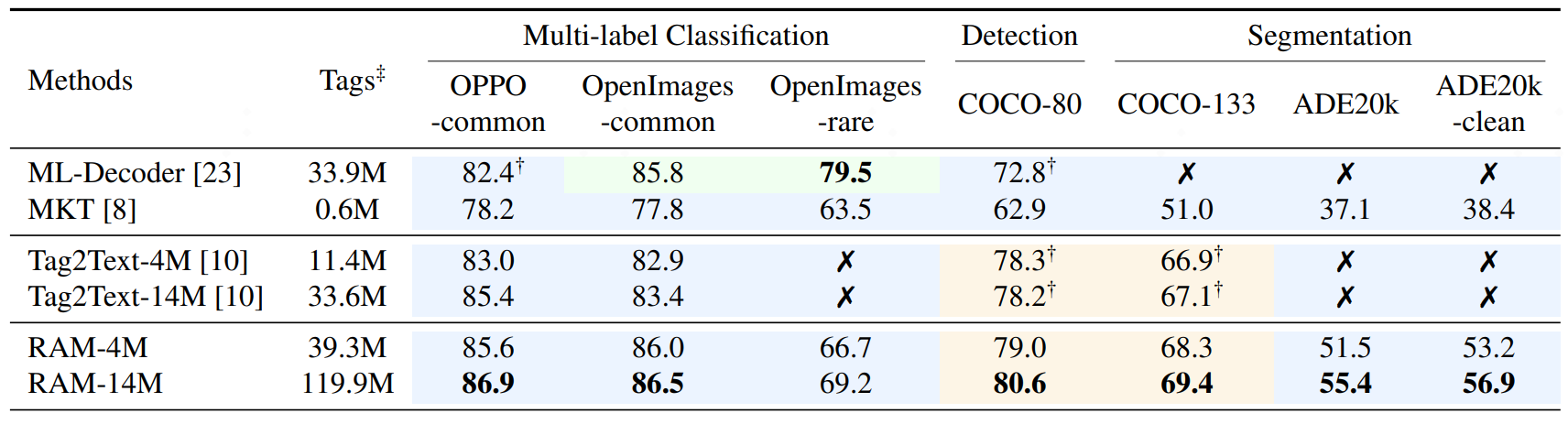

- The Recognize Anything Model (RAM) and Tag2Text exhibit exceptional recognition abilities, in terms of both accuracy and scope.

- Notably, RAM’s zero-shot generalization outperforms the fully supervised ML-Decoder on the OpenImages-common categories test set.

(Green denotes fully supervised learning; blue denotes zero-shot performance.)


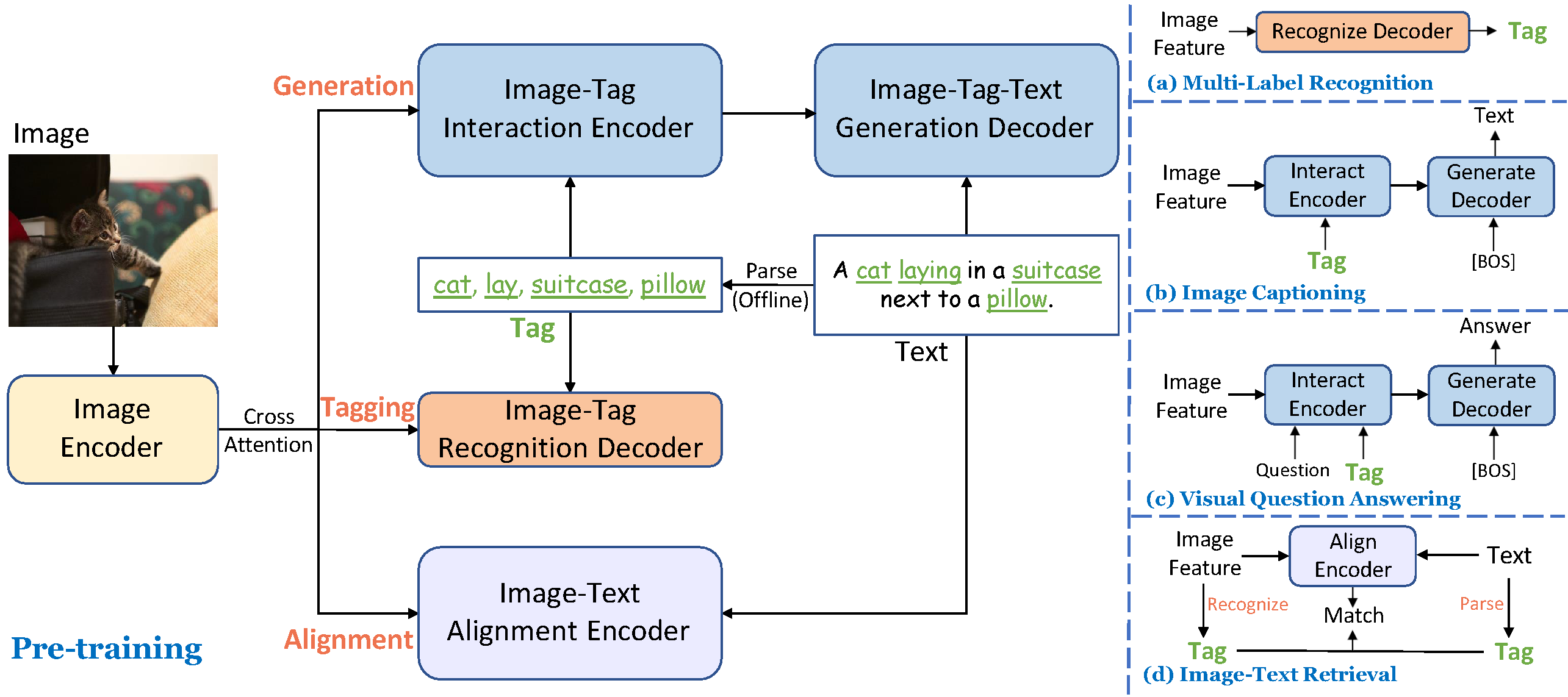

Tag2Text for Vision-Language Tasks.

- Tagging. Without manual annotations, Tag2Text achieves strong image tag recognition across 3,429 categories commonly used by humans.

- Captioning. Tag2Text integrates recognized image tags into text generation as guiding elements, resulting in more comprehensive text descriptions.

- Retrieval. Tag2Text provides tags as additional visible alignment indicators.


Advancements of RAM over Tag2Text.

- Accuracy. RAM utilizes a data engine to generate additional annotations and clean incorrect ones, resulting in higher accuracy compared to Tag2Text.

- Scope. Tag2Text recognizes 3,400+ fixed tags. RAM raises this number to 6,400+, covering more valuable categories. With its open-set capability, RAM can recognize any common category.


- Release Tag2Text demo.

- Release checkpoints.

- Release inference code.

- Release RAM demo and checkpoints.

- Release training code (by July 8th at the latest).

- Release training datasets (by July 15th at the latest).

| | Name | Backbone | Data | Illustration | Checkpoint |
|---|---|---|---|---|---|
| 1 | RAM-14M | Swin-Large | COCO, VG, SBU, CC-3M, CC-12M | Provide strong image tagging ability. | Download link |
| 2 | Tag2Text-14M | Swin-Base | COCO, VG, SBU, CC-3M, CC-12M | Support comprehensive captioning and tagging. | Download link |
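The inference commands in this README load checkpoints from a local `pretrained/` directory. As a minimal sketch, the following Python helper reports which of the expected files are not yet in place. The file names are taken from the commands in this README; the helper name `missing_checkpoints` and its directory argument are hypothetical and not part of the repository.

```python
from pathlib import Path

# Checkpoint file names as they appear in the inference commands of this README.
EXPECTED_CHECKPOINTS = {
    "RAM-14M": "ram_swin_large_14m.pth",
    "Tag2Text-14M": "tag2text_swin_14m.pth",
}


def missing_checkpoints(pretrained_dir="pretrained"):
    """Return the model names whose checkpoint file is not in pretrained_dir."""
    root = Path(pretrained_dir)
    return [
        name
        for name, filename in EXPECTED_CHECKPOINTS.items()
        if not (root / filename).is_file()
    ]
```

Calling `missing_checkpoints()` from the repository root returns an empty list once both files have been downloaded.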

- Install the dependencies:

      pip install -r requirements.txt

- Download the RAM pretrained checkpoints.

- Get the English and Chinese outputs of the images:

      python inference_ram.py --image images/1641173_2291260800.jpg \
          --pretrained pretrained/ram_swin_large_14m.pth
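To tag several images, the command above can be driven from a short script. The sketch below builds one `inference_ram.py` command per image, using only the `--image` and `--pretrained` flags shown above. The helper names `build_ram_command` and `tag_directory`, and the assumption that the images are `.jpg` files in a single folder, are illustrative and not part of the repository.

```python
import subprocess
import sys
from pathlib import Path


def build_ram_command(image_path, checkpoint="pretrained/ram_swin_large_14m.pth"):
    """Build the argument list for one inference_ram.py run."""
    return [
        sys.executable,
        "inference_ram.py",
        "--image", str(image_path),
        "--pretrained", str(checkpoint),
    ]


def tag_directory(image_dir="images"):
    """Run inference_ram.py once per .jpg file in image_dir, sorted by name."""
    for image_path in sorted(Path(image_dir).glob("*.jpg")):
        # check=True stops at the first failing run instead of continuing silently.
        subprocess.run(build_ram_command(image_path), check=True)
```

Each run starts a new process and reloads the checkpoint, so this approach is best suited to a small number of images.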

- Install the dependencies:

      pip install -r requirements.txt

- Download the RAM pretrained checkpoints.

- Customize the recognition categories in build_zeroshot_label_embedding.

- Get the tags of the images:

      python inference_ram_zeroshot_class.py --image images/zeroshot_example.jpg \
          --pretrained pretrained/ram_swin_large_14m.pth
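This README only states that custom categories are set inside `build_zeroshot_label_embedding`; it does not describe how the embeddings are computed. One common pattern for open-set label embeddings is prompt-template ensembling, as used in CLIP-style zero-shot classification: each category name is filled into several prompt templates, the prompts are encoded, and the normalized vectors are averaged. The sketch below illustrates that general pattern only. The templates, the function names, and the toy hash-based encoder are all assumptions and are not the repository's implementation.

```python
import hashlib
import math

# Illustrative prompt templates (assumed, not taken from the repository).
TEMPLATES = ["a photo of a {}.", "a picture of a {}."]


def toy_text_encoder(text, dim=8):
    """Stand-in for a real text encoder: maps text to a deterministic vector."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return [byte / 255.0 - 0.5 for byte in digest[:dim]]


def l2_normalize(vector):
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def build_label_embeddings(categories, encoder=toy_text_encoder):
    """Return one unit-length embedding per category, averaged over templates."""
    embeddings = {}
    for category in categories:
        prompt_vectors = [
            l2_normalize(encoder(template.format(category)))
            for template in TEMPLATES
        ]
        # Average the per-template vectors component by component.
        mean = [sum(values) / len(prompt_vectors) for values in zip(*prompt_vectors)]
        embeddings[category] = l2_normalize(mean)
    return embeddings
```

With a real text encoder passed as `encoder`, the resulting vectors would play the role of the label embeddings for the custom categories.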

- Install the dependencies:

      pip install -r requirements.txt

- Download the Tag2Text pretrained checkpoints.

- Get the tagging and captioning results:

      python inference_tag2text.py --image images/1641173_2291260800.jpg \
          --pretrained pretrained/tag2text_swin_14m.pth

  Or get the tagging results and captions guided by specified tags (optional):

      python inference_tag2text.py --image images/1641173_2291260800.jpg \
          --pretrained pretrained/tag2text_swin_14m.pth \
          --specified-tags "cloud,sky"
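The `--specified-tags` value in the command above is a single comma-separated string. A small helper, sketched below, can build that string from a Python list. The function name `format_specified_tags` is hypothetical, and the whitespace trimming and de-duplication are illustrative conveniences rather than behavior documented by the repository.

```python
def format_specified_tags(tags):
    """Join tags into a comma-separated string, dropping blanks and repeats."""
    seen = set()
    cleaned = []
    for tag in tags:
        tag = tag.strip()
        if tag and tag not in seen:
            seen.add(tag)
            cleaned.append(tag)
    return ",".join(cleaned)


print(format_specified_tags(["cloud", " sky ", "cloud"]))  # prints: cloud,sky
```

The returned string can be passed directly as the argument that follows `--specified-tags`.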

If you find our work to be useful for your research, please consider citing.

@article{zhang2023recognize,

title={Recognize Anything: A Strong Image Tagging Model},

author={Zhang, Youcai and Huang, Xinyu and Ma, Jinyu and Li, Zhaoyang and Luo, Zhaochuan and Xie, Yanchun and Qin, Yuzhuo and Luo, Tong and Li, Yaqian and Liu, Shilong and others},

journal={arXiv preprint arXiv:2306.03514},

year={2023}

}

@article{huang2023tag2text,

title={Tag2Text: Guiding Vision-Language Model via Image Tagging},

author={Huang, Xinyu and Zhang, Youcai and Ma, Jinyu and Tian, Weiwei and Feng, Rui and Zhang, Yuejie and Li, Yaqian and Guo, Yandong and Zhang, Lei},

journal={arXiv preprint arXiv:2303.05657},

year={2023}

}

This work was done with the help of the amazing BLIP code base. Thanks very much!

We want to thank @Cheng Rui @Shilong Liu @Ren Tianhe for their help in marrying RAM/Tag2Text with Grounded-SAM.

We also want to thank Ask-Anything and Prompt-can-anything for integrating RAM/Tag2Text, which greatly expands the application boundaries of RAM/Tag2Text.