Project 3 of the Collaborative Multi-Agent training from Udacity's Nanodegree program

In the Tennis environment, two agents move rackets on a tennis court to bounce a ball over a net that separates their respective areas. The goal of the agents is to keep the ball in the air, not to score a point by making it land in the opponent's area. This environment is highly similar to the one Unity provides in its set of learning environments: Tennis

To capture its knowledge of the environment, each agent has an observation space of size 24. The variables making up this vector include the position of the ball and the velocity of the racket.

state = [ 0. 0. 0. 0. 0. 0.
0. 0. 0. 0. 0. 0.
0. 0. 0. 0. -6.65278625 -1.5
-0. 0. 6.83172083 6. -0. 0. ]

The reward of the agent is pretty straightforward:

An agent receives a reward of +0.1 every time it hits the ball over the net. If an agent lets the ball hit the ground or sends it out of bounds, it receives a reward of -0.01. The reward is shared between the agents, which encourages them to collaborate.

To collect these rewards, each agent can move its racket. These movements are represented as 2 vectors of 2 values (one vector per agent), with each value between -1 and 1:

action = [

[0.06428211, 1. ],

[0.06536206, 1. ]

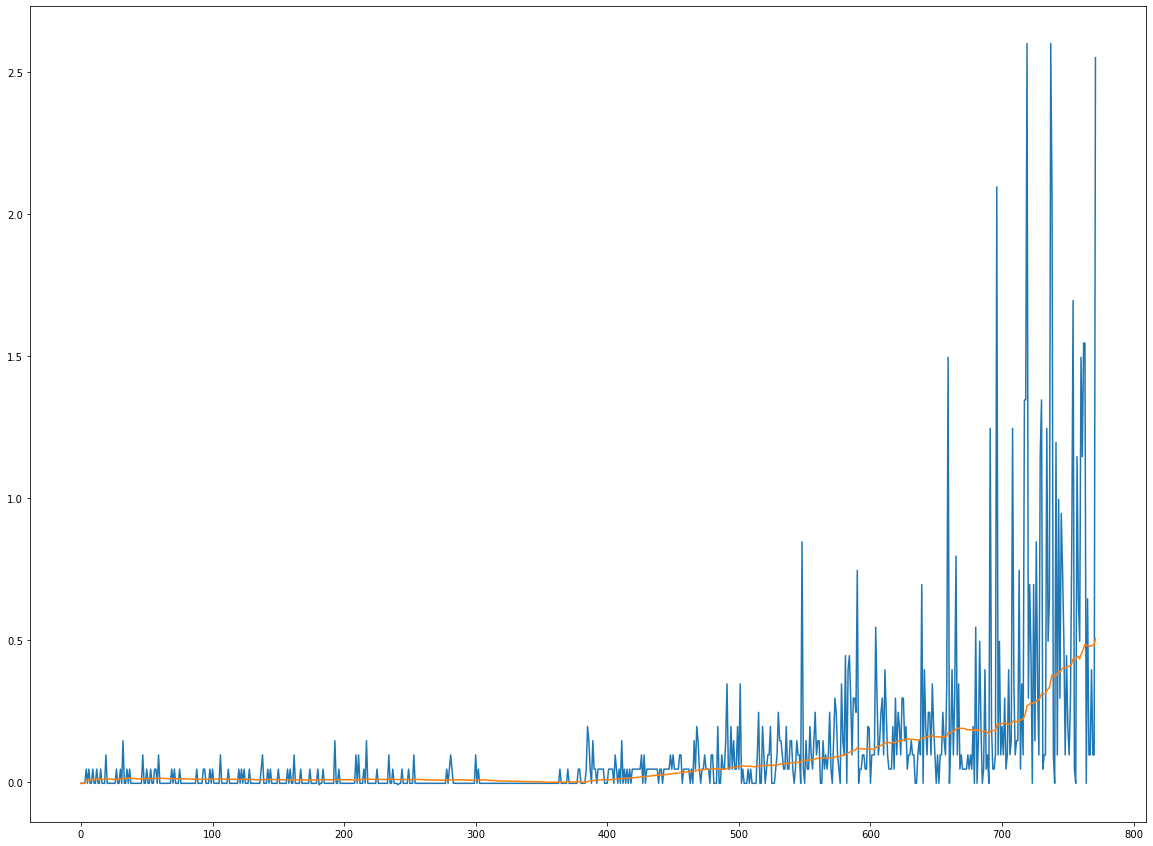

]When training the agent, it is considered successful to the eyes of the assignment when it gathers a reward of +0.5 over 100 consecutive episodes.
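The success criterion can be checked with a rolling window over the per-episode scores. The following is a minimal sketch, not the notebook's actual code: the function name `first_solved_episode` is hypothetical, and it assumes each episode has already been reduced to a single score (for example, the higher of the two agents' totals).

```python
from collections import deque


def first_solved_episode(episode_scores, window=100, target=0.5):
    """Return the 1-based episode at which the rolling mean over the last
    `window` episodes first reaches `target`, or None if it never does."""
    recent = deque(maxlen=window)  # keeps only the most recent `window` scores
    for episode, score in enumerate(episode_scores, start=1):
        recent.append(score)
        # Only judge once a full window of episodes has been collected.
        if len(recent) == window and sum(recent) / window >= target:
            return episode
    return None


# Made-up scores for illustration: 100 weak episodes, then 100 strong ones.
scores = [0.0] * 100 + [1.0] * 100
solved_at = first_solved_episode(scores)
```

With these made-up scores the rolling mean reaches 0.5 once half of the window is filled with strong episodes.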

Clone this GitHub repository, create a new conda environment and install all the required libraries using the frozen conda_requirements.txt file.

$ git clone https://github.com/dimartinot/P3-Collaborative.git

$ cd P3-Collaborative/

$ conda create --name drlnd --file conda_requirements.txt

If you would rather use pip than conda to install the libraries, execute the following:

$ pip install -r requirements.txt

In this case, you may need to create your own environment (it is, at least, highly recommended).

Provided that you used the conda installation, your environment name should be drlnd. If not, run conda activate <your_env_name> instead.

$ conda activate drlnd

$ jupyter notebook

This project is made of two main Jupyter notebooks using classes spread across multiple Python files:

DDPG.ipynb: Training of the MADDPG agent;

ddpg_agent.py: Contains the class definition of the basic DDPG agent and the MADDPG wrapper. Uses soft updates (for weight transfer between the local and target networks), a replay buffer sampled uniformly, and a standard normal random variable to handle the exploration/exploitation dilemma;

model.py: Contains the PyTorch class definitions of the Actor and Critic neural networks, each used in both a local and a target version;
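The three mechanisms named for ddpg_agent.py can be illustrated without PyTorch. This is a simplified sketch under stated assumptions, not the repository's implementation: weights are plain lists of floats rather than network parameters, and the names `soft_update`, `UniformReplayBuffer`, and `noisy_action`, as well as the `tau`, `capacity`, and `scale` values, are hypothetical.

```python
import random
from collections import deque


def soft_update(local_weights, target_weights, tau):
    """Blend local weights into the target: target <- tau*local + (1-tau)*target."""
    return [tau * l + (1.0 - tau) * t for l, t in zip(local_weights, target_weights)]


class UniformReplayBuffer:
    """Fixed-size memory; every stored transition is equally likely to be sampled."""

    def __init__(self, capacity, seed=0):
        self.memory = deque(maxlen=capacity)  # oldest transitions are dropped first
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.memory.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement from the stored transitions.
        return self.rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


def noisy_action(action, rng, scale=1.0):
    """Add standard normal noise to each component, then clip to [-1, 1]."""
    return [max(-1.0, min(1.0, a + scale * rng.gauss(0.0, 1.0))) for a in action]


# Illustrative usage with made-up values.
new_target = soft_update([1.0, 2.0], [0.0, 0.0], tau=0.5)

buffer = UniformReplayBuffer(capacity=3)
for step in range(5):
    buffer.add(state=step, action=[0.0, 0.0], reward=0.0, next_state=step + 1, done=False)
batch = buffer.sample(2)

explored = noisy_action([0.06428211, 1.0], random.Random(0))
```

A small `tau` makes the target networks trail the local ones slowly, which is the usual reason for preferring a soft update over a hard copy. The clipping step keeps the noisy action inside the [-1, 1] range the environment expects.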

Four weight files are provided, two for each agent (critic + actor):

checkpoint_actor_local_{agent_id}.pth & checkpoint_critic_local_{agent_id}.pth: these are the weights of a standard DDPG agent trained with a uniformly sampled replay buffer, where {agent_id} is to be replaced by either 0 or 1, depending on the agent to instantiate.
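As a small illustration of the naming scheme, the sketch below builds the four file names from the agent id. The helper `checkpoint_names` is hypothetical and not part of the repository. Loading the weights would typically go through PyTorch's `torch.load` and `load_state_dict` on the Actor and Critic classes from model.py, whose constructor arguments are not shown here.

```python
def checkpoint_names(agent_id):
    """Return the actor and critic weight file names for agent 0 or 1."""
    if agent_id not in (0, 1):
        raise ValueError("agent_id must be 0 or 1")
    return {
        "actor": f"checkpoint_actor_local_{agent_id}.pth",
        "critic": f"checkpoint_critic_local_{agent_id}.pth",
    }


# Collect the file names for both agents (actor + critic each).
all_files = [
    name
    for agent_id in (0, 1)
    for name in checkpoint_names(agent_id).values()
]
```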