- Overview

- Summary

- Scikit-learn Pipeline

- AutoML

- Pipeline comparison

- Future work

- Proof of cluster clean up

- Citation

- References

This project is part of the Udacity Azure ML Nanodegree. In this project, I had the opportunity to build and optimize an Azure ML pipeline using the Python SDK and a custom Scikit-learn Logistic Regression model. I optimized the hyperparameters of this model using HyperDrive. Then, I used Azure AutoML to find an optimal model using the same dataset, so that I could compare the results of the two methods.

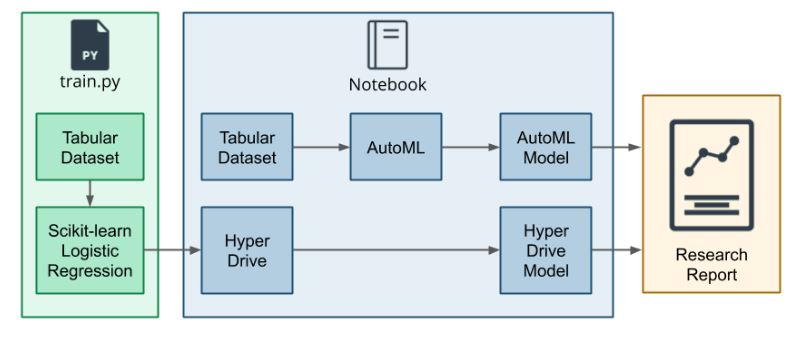

Below you can see an image illustrating the main steps I followed during the project.

Step 1: Set up the training script, create a Tabular Dataset from the data, and evaluate it with the custom-code Scikit-learn logistic regression model.

Step 2: Create a Jupyter Notebook and use HyperDrive to find the best hyperparameters for the logistic regression model.

Step 3: Load the same dataset in the Notebook with TabularDatasetFactory and use AutoML to find another optimized model.

Step 4: Compare the results of the two methods and write a research report, i.e. this README file.

This dataset contains marketing data about individuals. The data is related to direct marketing campaigns (phone calls) of a Portuguese banking institution. The classification goal is to predict whether the client will subscribe to a bank term deposit (column y).

The best performing model was the HyperDrive model with ID HD_fda34223-a94c-456b-8bf7-52e84aa1d17e_14. It was derived from a Scikit-learn pipeline and had an accuracy of 0.91760. In contrast, the AutoML model with ID AutoML_ee4a685e-34f2-4031-a4f9-fe96ff33836c_13 had an accuracy of 0.91618, and the algorithm used was VotingEnsemble.

Explain the pipeline architecture, including data, hyperparameter tuning, and classification algorithm.

Parameter sampler

I specified the parameter sampler as such:

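    from azureml.train.hyperdrive import RandomParameterSampling, choice

    # Randomly sample C and max_iter from the discrete sets below.
    ps = RandomParameterSampling(
        {
            '--C': choice(0.001, 0.01, 0.1, 1, 10, 20, 50, 100, 200, 500, 1000),
            '--max_iter': choice(50, 100, 200, 300)
        }
    )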

I chose discrete values with choice for both parameters, C and max_iter.

C is the inverse of the regularization strength (smaller values mean stronger regularization), while max_iter is the maximum number of iterations.
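
HyperDrive passes each sampled value to the training script as a command-line argument, which is why the sampler keys are written as --C and --max_iter. A minimal sketch of how a training script might read them follows. The helper name `parse_hyperparameters` and the default values are assumptions made for illustration, not necessarily what this project's training script does.

```python
import argparse


def parse_hyperparameters(argv=None):
    """Parse the two hyperparameters supplied by the sampler (hypothetical helper)."""
    parser = argparse.ArgumentParser()
    # Inverse of regularization strength: smaller values mean stronger regularization.
    parser.add_argument("--C", type=float, default=1.0)
    # Maximum number of solver iterations.
    parser.add_argument("--max_iter", type=int, default=100)
    return parser.parse_args(argv)


if __name__ == "__main__":
    # Simulate one configuration drawn by the sampler.
    args = parse_hyperparameters(["--C", "0.1", "--max_iter", "200"])
    print(args.C, args.max_iter)
```

The parsed values would then typically be handed to the model constructor, for example as the C and max_iter arguments of a logistic regression.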

RandomParameterSampling is one of the choices available for the sampler. I chose it because it is faster and supports early termination of low-performance runs. If budget is not an issue, we could use GridParameterSampling to exhaustively search over the search space or BayesianParameterSampling to explore the hyperparameter space.
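
To make the budget argument concrete, the sketch below counts how many runs an exhaustive grid over the same discrete values would need and contrasts it with a small random draw. It only mimics the idea in plain Python; it does not reproduce Azure ML's internal sampling, and the run budget of 5 is an arbitrary assumption.

```python
import itertools
import random

# Discrete values copied from the sampler definition in this README.
C_VALUES = [0.001, 0.01, 0.1, 1, 10, 20, 50, 100, 200, 500, 1000]
MAX_ITER_VALUES = [50, 100, 200, 300]


def full_grid():
    """Every (C, max_iter) pair that an exhaustive grid search would have to run."""
    return list(itertools.product(C_VALUES, MAX_ITER_VALUES))


def random_sample(n_runs, seed=0):
    """Draw n_runs configurations, choosing each value uniformly at random."""
    rng = random.Random(seed)
    return [(rng.choice(C_VALUES), rng.choice(MAX_ITER_VALUES)) for _ in range(n_runs)]


if __name__ == "__main__":
    print(len(full_grid()))    # 11 * 4 = 44 combinations
    print(random_sample(5))    # 5 randomly drawn configurations (assumed budget)
```

With 11 values for C and 4 for max_iter, a full grid means 44 training runs, whereas random sampling lets the number of runs be capped at whatever the budget allows.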

Early stopping policy

An early stopping policy automatically terminates poorly performing runs, thus improving computational efficiency. I chose the BanditPolicy, which I specified as follows:

    from azureml.train.hyperdrive import BanditPolicy

    policy = BanditPolicy(evaluation_interval=2, slack_factor=0.1)

evaluation_interval: This is optional and represents the frequency for applying the policy. Each time the training script logs the primary metric counts as one interval.

slack_factor: The amount of slack allowed with respect to the best performing training run. This factor specifies the slack as a ratio.

Any run that doesn't fall within the slack factor or slack amount of the evaluation metric with respect to the best performing run will be terminated. This means that with this policy, the best performing runs will execute until they finish and this is the reason I chose it.
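
As a worked example of the slack rule, the sketch below computes the termination threshold for a metric that is being maximized, using the formula best / (1 + slack_factor) described in the Azure ML documentation for the Bandit policy. The function names and the metric values are assumptions chosen for illustration.

```python
def bandit_cutoff(best_metric, slack_factor):
    """Lowest metric a run may report without being terminated (maximize goal)."""
    return best_metric / (1 + slack_factor)


def should_terminate(run_metric, best_metric, slack_factor=0.1):
    """True if the run falls outside the allowed slack of the best run so far."""
    return run_metric < bandit_cutoff(best_metric, slack_factor)


if __name__ == "__main__":
    best = 0.91  # hypothetical best accuracy reported so far
    print(round(bandit_cutoff(best, 0.1), 4))
    print(should_terminate(0.80, best))  # below the cutoff
    print(should_terminate(0.90, best))  # within the slack
```

With slack_factor=0.1 and a best accuracy of 0.91, the cutoff is roughly 0.827, so only runs clearly behind the leader are stopped.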

Model and hyperparameters generated by AutoML.

I defined the following configuration for the AutoML run:

    from azureml.train.automl import AutoMLConfig

    automl_config = AutoMLConfig(
        compute_target=compute_target,
        experiment_timeout_minutes=15,
        task='classification',
        primary_metric='accuracy',
        training_data=ds,
        label_column_name='y',
        enable_onnx_compatible_models=True,
        n_cross_validations=2)

experiment_timeout_minutes=15

This is an exit criterion and is used to define how long, in minutes, the experiment should continue to run. To help avoid experiment time out failures, I used the minimum of 15 minutes.

task='classification'

This defines the experiment type which in this case is classification.

primary_metric='accuracy'

I chose accuracy as the primary metric.

enable_onnx_compatible_models=True

I chose to enforce ONNX-compatible models. Open Neural Network Exchange (ONNX) is an open standard created by Microsoft and a community of partners for representing machine learning models.

n_cross_validations=2

This parameter sets how many cross-validations to perform, based on the same number of folds (number of subsets). Because a single validation split could lead to overfitting, I chose 2 folds for cross-validation; the metrics are therefore calculated as the average of the 2 validation metrics.
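
The idea behind n_cross_validations=2 can be sketched in plain Python: split the rows into 2 folds, hold out each fold once for validation, and average the per-fold metric. This is a simplified illustration with contiguous, unshuffled folds; how AutoML actually builds its splits is not shown here, and the fold scores below are made-up numbers.

```python
def k_fold_indices(n_samples, n_folds):
    """Return (training_indices, validation_indices) pairs for contiguous folds."""
    fold_sizes = [
        n_samples // n_folds + (1 if i < n_samples % n_folds else 0)
        for i in range(n_folds)
    ]
    folds, start = [], 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        training = [i for i in range(n_samples) if i < start or i >= start + size]
        folds.append((training, validation))
        start += size
    return folds


def cross_validated_score(fold_scores):
    """Average the metric obtained on each validation fold."""
    return sum(fold_scores) / len(fold_scores)


if __name__ == "__main__":
    for training, validation in k_fold_indices(6, 2):
        print("train:", training, "validate:", validation)
    # Hypothetical per-fold accuracies.
    print(cross_validated_score([0.90, 0.92]))
```

Every row is used for validation exactly once, which is why the averaged metric is less dependent on one particular split than a single hold-out set.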

Comparison of the two models and their performance. Differences in accuracy & architecture - comments

| HyperDrive Model | |
|---|---|
| id | HD_fda34223-a94c-456b-8bf7-52e84aa1d17e_14 |
| Accuracy | 0.9176024279210926 |

| AutoML Model | |
|---|---|
| id | AutoML_ee4a685e-34f2-4031-a4f9-fe96ff33836c_13 |
| Accuracy | 0.916176024279211 |
| AUC_weighted | 0.9469939634729121 |
| Algorithm | VotingEnsemble |

The difference in accuracy between the two models is small. Although the HyperDrive model performed slightly better in terms of accuracy, I am of the opinion that the AutoML model is actually better because of its AUC_weighted metric, which equals 0.9469939634729121 and is better suited to the highly imbalanced data that we have here. If we were given more time to run AutoML, the resulting model would likely be even better. The best thing is that AutoML performs all the necessary calculations, training, validation, etc. without the need for us to do anything. This is the difference from the Scikit-learn Logistic Regression pipeline, in which we have to make any adjustments and changes ourselves and arrive at a final model after much trial and error.

Some areas of improvement for future experiments and how these improvements might help the model

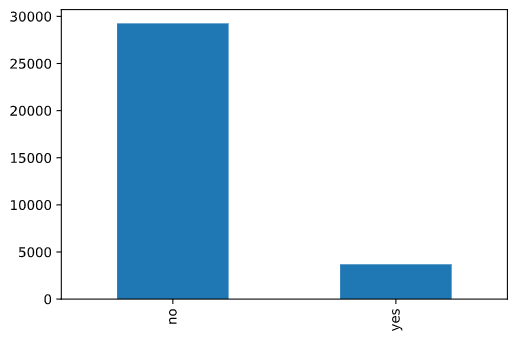

- Our data is highly imbalanced:

Class imbalance is a very common issue in classification problems in machine learning. Imbalanced data make accuracy an unreliable measure, because a model can score a high accuracy just by predicting the majority class while performing very poorly on the minority class. This means that relying on a simple metric like accuracy to judge how good our model is can be misleading.
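
A small sketch shows the effect. The labels below are hypothetical (90 negatives and 10 positives, not the actual class counts of this dataset), and the "model" simply predicts the majority class for every row.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, positive=1):
    """Fraction of truly positive rows that were predicted positive."""
    predictions_for_positives = [p for t, p in zip(y_true, y_pred) if t == positive]
    return sum(p == positive for p in predictions_for_positives) / len(predictions_for_positives)


# Hypothetical imbalanced labels: 90 "no" (0) and 10 "yes" (1).
y_true = [0] * 90 + [1] * 10
# A trivial model that always predicts the majority class.
y_pred = [0] * 100

if __name__ == "__main__":
    print(accuracy(y_true, y_pred))  # high, despite learning nothing
    print(recall(y_true, y_pred))    # no positive case is ever found
```

The trivial model reaches 90% accuracy while identifying none of the positive cases, which is the situation that metrics focused on the minority class are meant to expose.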

There are many ways to deal with imbalanced data. These include using:

- A different metric; for example, AUC_weighted which is more fit for imbalanced data

- A different algorithm

- Random Under-Sampling of majority class

- Random Over-Sampling of minority class

- The imbalanced-learn package
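
As one illustration of the options listed above, random over-sampling of the minority class can be sketched in plain Python as follows. This is a simplified stand-in written for this README, with a hypothetical function name and toy data; the imbalanced-learn package offers ready-made resamplers for real use.

```python
import random
from collections import Counter


def random_oversample(rows, labels, seed=0):
    """Duplicate randomly chosen rows of smaller classes until all classes are equal in size."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    new_rows, new_labels = list(rows), list(labels)
    for label, count in counts.items():
        candidates = [row for row, lab in zip(rows, labels) if lab == label]
        # Sample with replacement until this class reaches the majority size.
        for _ in range(target - count):
            new_rows.append(rng.choice(candidates))
            new_labels.append(label)
    return new_rows, new_labels


if __name__ == "__main__":
    rows = list(range(10))        # toy feature rows
    labels = [0] * 8 + [1] * 2    # toy imbalanced labels
    balanced_rows, balanced_labels = random_oversample(rows, labels)
    print(Counter(balanced_labels))
```

Resampling of this kind should be applied to the training split only, so that validation metrics still reflect the original class distribution.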

There are many other methods as well, but I will not go into much detail here as it is out of scope.

In conclusion, the high data imbalance is something that can be handled in a future execution, which should improve the model.

Another factor that I would improve is n_cross_validations. Cross-validation is the process of taking many subsets of the full training data and training a model on each subset, so a higher number of cross-validations generally gives a more reliable estimate of the model's performance. However, a higher number also raises computation time (i.e. training time) and therefore cost, so there must be a balance between the two factors.

Note: If I increased n_cross_validations, I would also have to increase experiment_timeout_minutes, as the current setting of 15 minutes would not be enough.

[Moro et al., 2014] S. Moro, P. Cortez and P. Rita. A Data-Driven Approach to Predict the Success of Bank Telemarketing. Decision Support Systems, Elsevier, 62:22-31, June 2014

- Udacity Nanodegree material

- Tutorial: Create a classification model with automated ML in Azure Machine Learning

- How to Set Up an AutoML Process: Example Step-by-Step Instructions

- Bank Marketing, UCI Dataset: Original source of data

- Create and run machine learning pipelines with Azure Machine Learning SDK

- A Simple Guide to Scikit-learn Pipelines

- Building and optimizing pipelines in Scikit-Learn (Tutorial)

- I had no idea how to build a Machine Learning Pipeline. But here’s what I figured.

- Using Azure Machine Learning for Hyperparameter Optimization

- hyperdrive Package

- Tune hyperparameters for your model with Azure Machine Learning

- Configure data splits and cross-validation in automated machine learning

- train-hyperparameter-tune-deploy-with-sklearn

- classification-bank-marketing-all-features

- Resolving issue with Azure SDK version 1.19

- Prevent overfitting and imbalanced data with automated machine learning - Handle imbalanced data

- 10 Techniques to deal with Imbalanced Classes in Machine Learning