VPoser: Variational Human Pose Prior

Description

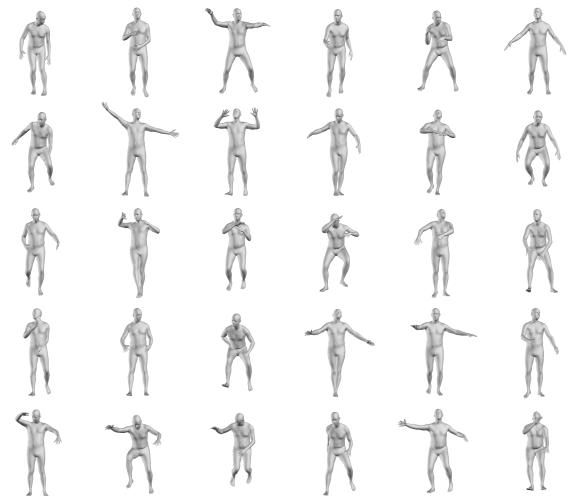

The articulated 3D pose of the human body is high-dimensional and complex. Many applications rely on a prior distribution over valid human poses, but modeling this distribution is difficult. This repository provides a learned prior trained on a large dataset of human poses represented as SMPL bodies.

The prior is the one used in SMPLify-X. Our variational human pose prior, named VPoser:

- defines a prior of SMPL pose parameters

- is end-to-end differentiable

- provides a way to penalize impossible poses while admitting valid ones

- effectively models correlations among the joints of the body

- introduces an efficient, low-dimensional representation for human pose

- can be used to generate valid 3D human poses for data-dependent tasks
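To make the "penalize impossible poses" point concrete, here is a minimal sketch. It assumes the latent code is regularized toward a standard normal distribution, as described under Train VPoser, so the negative log-density of a latent code is (up to a constant) half its squared norm. The function name `latent_prior_penalty` and the 4-dimensional example codes are hypothetical and are not part of the human_body_prior API.

```python
def latent_prior_penalty(z):
    """Negative log-density of N(0, I) at z, dropping the constant term."""
    return 0.5 * sum(v * v for v in z)

# Hypothetical latent codes; a real code would come from the VPoser encoder.
near_origin = [0.1, -0.2, 0.0, 0.3]      # likely under the prior
far_from_origin = [4.0, -5.0, 3.5, 6.0]  # unlikely under the prior

low_penalty = latent_prior_penalty(near_origin)
high_penalty = latent_prior_penalty(far_from_origin)
print(low_penalty < high_penalty)
```

In an optimization-based fitting pipeline, a term of this form can be added to the objective so that the optimizer prefers latent codes the prior considers plausible.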

Table of Contents

- Description

- Installation

- Loading trained models

- Train VPoser

- Tutorials

- Citation

- License

- Acknowledgments

- Contact

Installation

To install the model, use one of the following options:

- To install from PyPI, run:

pip install human_body_prior

- Alternatively, clone this repository and install it using the setup.py script:

git clone https://github.com/nghorbani/human_body_prior

python setup.py install

Loading Trained Models

To download the trained VPoser models, go to the SMPL-X project website and register to get access to the downloads section. Afterwards, you can follow the model loading tutorial to load and use the trained VPoser models.

Train VPoser

We train VPoser using a variational autoencoder that learns a latent representation of human pose and regularizes the distribution of the latent code toward a normal distribution. We train our prior on data from the AMASS dataset, specifically the SMPL pose parameters of various publicly available human motion capture datasets. You can follow the data preparation tutorial to learn how to download and prepare AMASS for VPoser. Afterwards, you can train VPoser from scratch.
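The two ingredients a variational autoencoder adds to a plain autoencoder can be sketched in a few lines. This is a generic illustration using only the Python standard library, not the VPoser training code: it assumes a diagonal Gaussian posterior and a standard normal prior, and the function names and 4-dimensional latent size are hypothetical.

```python
import math
import random

def reparameterize(mean, log_var, rng):
    """Sample z = mean + std * eps with eps ~ N(0, I) (reparameterization trick)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, log_var)]

def kl_to_standard_normal(mean, log_var):
    """KL( N(mean, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mean, log_var))

rng = random.Random(0)
unit_log_var = [0.0, 0.0, 0.0, 0.0]  # log-variance 0 means unit variance

# A posterior that already equals the prior incurs no KL cost.
z = reparameterize([0.0, 0.0, 0.0, 0.0], unit_log_var, rng)
kl_matched = kl_to_standard_normal([0.0, 0.0, 0.0, 0.0], unit_log_var)

# Shifting the posterior mean away from zero is penalized.
kl_shifted = kl_to_standard_normal([1.0, -1.0, 0.5, 0.0], unit_log_var)
print(kl_matched, kl_shifted)
```

During training, a KL term of this kind is typically added to a reconstruction loss on the decoded pose, which is what pulls the latent codes toward the normal distribution mentioned above.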

Tutorials

- VPoser PoZ Space for Body Models

- Sampling Novel Body Poses from VPoser

- Preparing VPoser Training Dataset

- Train VPoser from Scratch

Citation

Please cite the following paper if you use this code directly or indirectly in your research/projects:

@inproceedings{SMPL-X:2019,

title = {Expressive Body Capture: 3D Hands, Face, and Body from a Single Image},

author = {Pavlakos, Georgios and Choutas, Vasileios and Ghorbani, Nima and Bolkart, Timo and Osman, Ahmed A. A. and Tzionas, Dimitrios and Black, Michael J.},

booktitle = {Proceedings IEEE Conf. on Computer Vision and Pattern Recognition (CVPR)},

year = {2019}

}

If you train your own VPoser on the AMASS dataset for your research, please also follow the AMASS citation guidelines.

License

Software Copyright License for non-commercial scientific research purposes. Please read carefully the terms and conditions and any accompanying documentation before you download and/or use the SMPL-X/SMPLify-X model, data and software, (the "Model & Software"), including 3D meshes, blend weights, blend shapes, textures, software, scripts, and animations. By downloading and/or using the Model & Software (including downloading, cloning, installing, and any other use of this github repository), you acknowledge that you have read these terms and conditions, understand them, and agree to be bound by them. If you do not agree with these terms and conditions, you must not download and/or use the Model & Software. Any infringement of the terms of this agreement will automatically terminate your rights under this License.

Contact

The code in this repository is developed by Nima Ghorbani.

If you have any questions you can contact us at smplx@tuebingen.mpg.de.

For commercial licensing, contact ps-licensing@tue.mpg.de.

Acknowledgments

We thank the authors of AMASS for the early release of their dataset for this project. We thank Partha Ghosh for discussions and insights that helped with this project.