In this project we used Reddit comments to explore several features of Apache Spark, HDFS and Docker. The main objective was to gain hands-on experience with setting up containerized Apache Spark and HDFS clusters using Docker. The project was carried out as a graded assignment for a Data Engineering course.

The collaborators of this project are:

The main phases of the project were as follows:

- Set up a multi-container Spark-HDFS cluster using `docker-compose`.
- Run multiple analyses on the dataset provided (Reddit comments).
- Choose a few analysis pipelines and run experiments to measure their performance with a varying number of worker nodes.

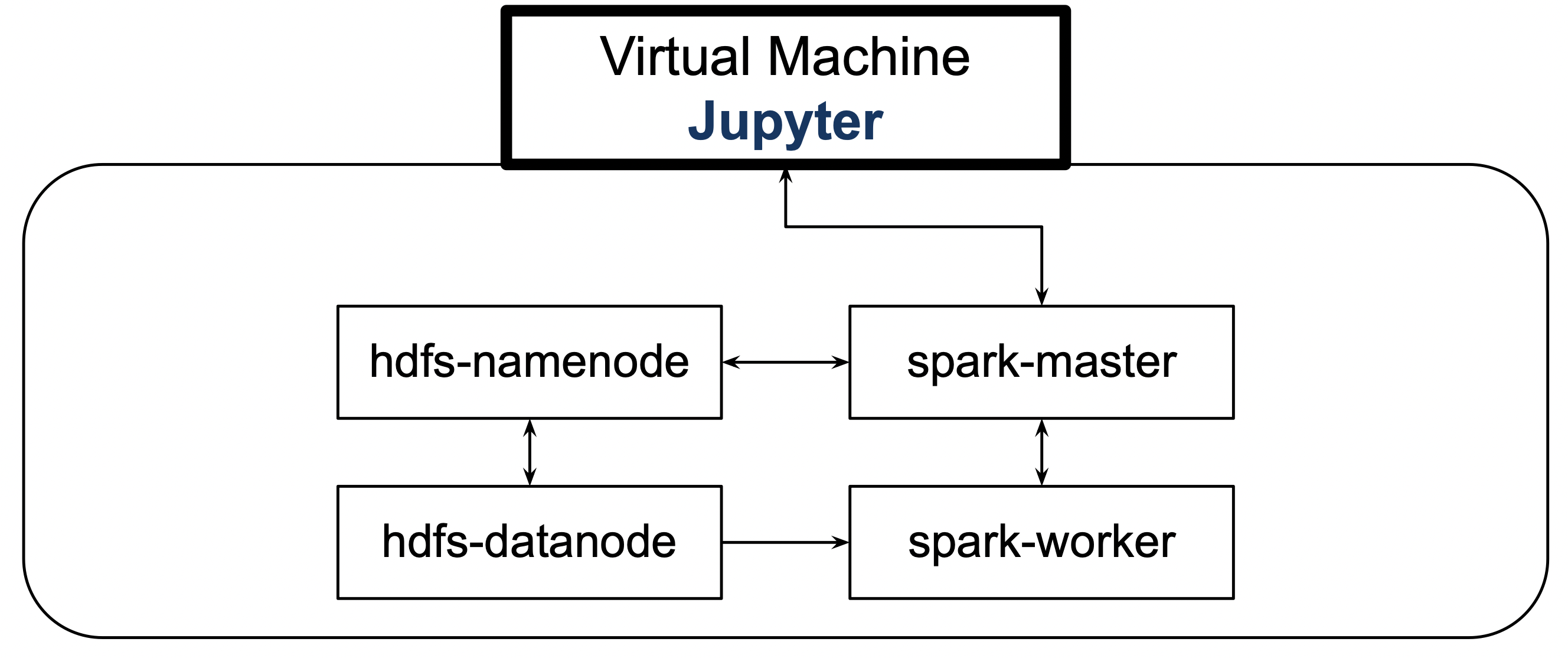

The base architecture we setup looks as follows:

- Install pip, Jupyter and PySpark:

  `sudo apt install python3-pip`

  `pip3 install jupyter`

  `pip install pyspark`

- Spark installation:

  Download the tarball for Spark 3.2.3:

  `wget https://dlcdn.apache.org/spark/spark-3.2.3/spark-3.2.3-bin-hadoop3.2.tgz`

  Extract the tarball:

  `tar -xvf spark-3.2.3-bin-hadoop3.2.tgz`

  Remove the zipped file:

  `rm spark-3.2.3-bin-hadoop3.2.tgz`

  Move the directory to `/opt/` and rename it as `spark`:

  `sudo mv spark-3.2.3-bin-hadoop3.2/ /opt/spark`

  Save `SPARK_HOME` in the environment variables (`.bashrc`):

  `nano .bashrc`

  Add the following to the `.bashrc` file:

  `export SPARK_HOME=/opt/spark`

  `export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin`

- Install Docker using the official documentation: Docker Installation

- Clone the reddit-explore-uu repository:

  `git clone https://github.com/dininduviduneth/reddit-explore-uu.git`

- Build and start the base cluster:

  `docker-compose up -d`

- Verify successful completion:

  Check that the required images have been created:

  `docker images`

  The images `bde2020/hadoop-namenode`, `bde2020/hadoop-datanode`, `docker-config_spark-master` and `docker-config_spark-worker` should be listed.

  Check that the containers are up and running:

  `docker ps`

  There should be four containers running, one each for `spark-master`, `spark-worker`, `hadoop namenode` and `hadoop datanode`.
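The container check above can also be scripted. The following is a minimal sketch, not part of the repository: it assumes that each running container's name contains its service name (for example `spark-master`), which depends on how the project's compose file names things. The fragments in `EXPECTED_SERVICES` and both function names are hypothetical.

```python
import subprocess

# Assumed name fragments; the real container names depend on the project's
# docker-compose.yml and may differ.
EXPECTED_SERVICES = ("spark-master", "spark-worker", "namenode", "datanode")


def missing_services(container_names, expected=EXPECTED_SERVICES):
    """Return the expected fragments that match no running container name."""
    return [
        service
        for service in expected
        if not any(service in name for name in container_names)
    ]


def running_container_names():
    """List the names of running containers using `docker ps`."""
    result = subprocess.run(
        ["docker", "ps", "--format", "{{.Names}}"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.split()
```

Calling `missing_services(running_container_names())` on the VM should return an empty list when all four containers are up, provided the naming assumption holds.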

- Download the required Reddit dataset to the VM:

  `wget https://files.pushshift.io/reddit/comments/[REDDIT_DATASET_FILENAME].zst`

- Extract the dataset:

  `unzstd --ultra --memory=2048MB -d [REDDIT_DATASET_FILENAME].zst`

- Delete the zipped file:

  `rm [REDDIT_DATASET_FILENAME].zst`

- Push the file to HDFS using the `-put` command:

  `hdfs dfs -put [REDDIT_DATASET_FILENAME] /`

- Once uploaded, the dataset should be visible in the HDFS Web UI at `http://[PUBLIC IP OF VM]:9870/`
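Besides the Web UI, the upload can be checked programmatically. The sketch below is an illustration rather than part of the project: it assumes WebHDFS is enabled on the NameNode and reachable on the same port as the Web UI (9870), and both function names are hypothetical.

```python
import json
import urllib.request


def webhdfs_status_url(host, hdfs_path, port=9870):
    """Build the WebHDFS GETFILESTATUS URL for a path on the NameNode."""
    return (
        f"http://{host}:{port}/webhdfs/v1/"
        f"{hdfs_path.lstrip('/')}?op=GETFILESTATUS"
    )


def hdfs_file_length(host, hdfs_path, port=9870):
    """Return the size in bytes that the NameNode reports for the file."""
    url = webhdfs_status_url(host, hdfs_path, port)
    with urllib.request.urlopen(url) as response:
        status = json.load(response)
    return status["FileStatus"]["length"]
```

For example, `hdfs_file_length("[PUBLIC IP OF VM]", "/[REDDIT_DATASET_FILENAME]")` should return a value equal to the size of the extracted file once the upload has finished.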

- Start a Jupyter server:

  `python3 -m notebook --ip=* --no-browser`

- Navigate to `data-analysis` within the `reddit-explore-uu` directory and run the analysis notebooks.
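For orientation, a notebook could load the uploaded dataset along the following lines. This is a sketch under stated assumptions, not the code from the analysis notebooks: the NameNode host `namenode`, its RPC port `9000`, the master URL `spark://spark-master:7077` and the helper names are all assumed and may need adjusting to the actual cluster configuration. It relies on the dataset being newline-delimited JSON, which `spark.read.json` reads by default.

```python
def hdfs_uri(path, host="namenode", port=9000):
    """Build an hdfs:// URI; host and port are assumed defaults."""
    return f"hdfs://{host}:{port}/{path.lstrip('/')}"


def load_comments(dataset_filename, master_url="spark://spark-master:7077"):
    """Read the newline-delimited JSON dump from HDFS into a DataFrame."""
    # Imported here so hdfs_uri() stays usable without PySpark installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .master(master_url)  # assumed master URL
        .appName("reddit-explore-sketch")
        .getOrCreate()
    )
    return spark.read.json(hdfs_uri(dataset_filename))
```

In a notebook, `load_comments("[REDDIT_DATASET_FILENAME]").printSchema()` would then show the fields that Spark inferred from the comments.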

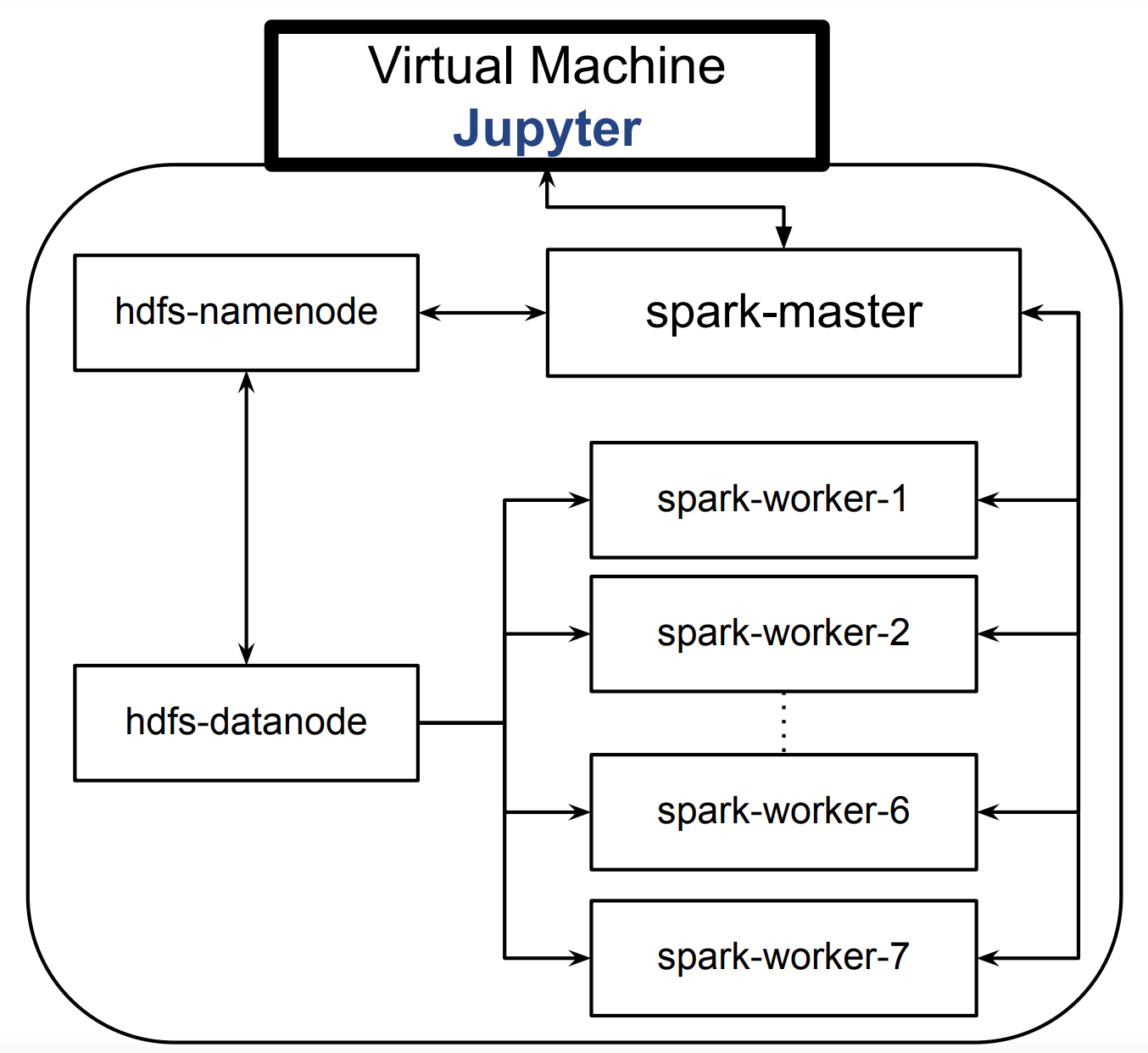

We have used Docker's docker-compose scale functionality to autoscale our spark worker nodes. The scaled setup for seven worker nodes looks as follows:

- To scale to X workers:

  `docker-compose scale spark-worker=X`

- To stop a scaled cluster, first scale down to 1 worker node:

  `docker-compose scale spark-worker=1`

- To stop the containers:

  `docker-compose stop`
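The scaling commands above lend themselves to a small experiment loop that times one analysis job per worker count. The following is a minimal sketch of that idea, not the procedure the project actually followed: `job` stands for any zero-argument callable that runs an analysis pipeline, and all function names are hypothetical.

```python
import subprocess
import time


def scale_command(workers, service="spark-worker"):
    """Return the docker-compose command that scales `service` to `workers`."""
    if workers < 1:
        raise ValueError("need at least one worker")
    return ["docker-compose", "scale", f"{service}={workers}"]


def time_job(job):
    """Run a zero-argument callable and return its wall-clock time in seconds."""
    start = time.perf_counter()
    job()
    return time.perf_counter() - start


def run_experiment(worker_counts, job, run=subprocess.run):
    """Scale the cluster to each worker count and time `job` once per setting."""
    timings = {}
    for workers in worker_counts:
        # `run` is injectable so the loop can be dry-run without Docker.
        run(scale_command(workers), check=True)
        timings[workers] = time_job(job)
    return timings
```

The `run` parameter defaults to `subprocess.run`, so the script has to be started from the directory containing the compose file. Newer Compose releases report `scale` as deprecated in favour of `docker-compose up -d --scale spark-worker=X`, so `scale_command` may need adapting there.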