
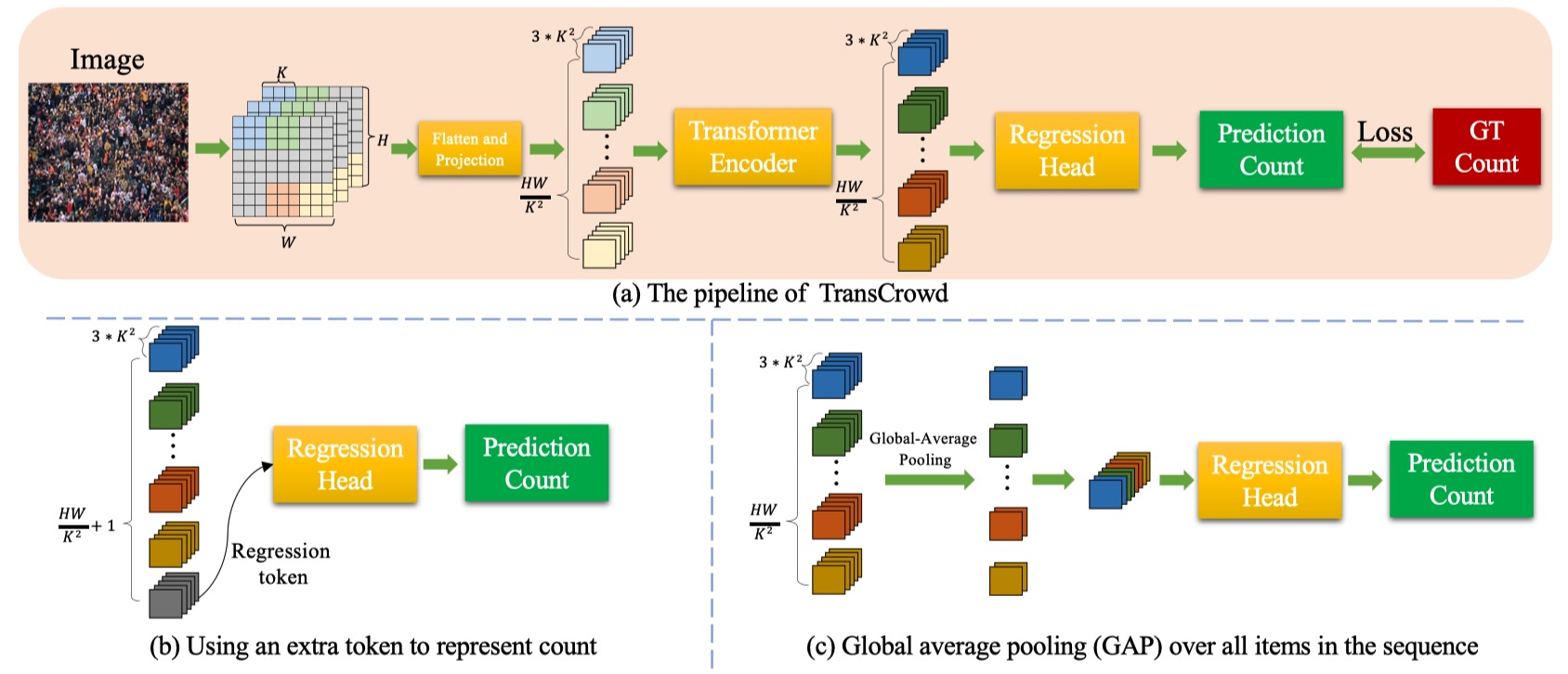

The official implementation of TransCrowd: Weakly-Supervised Crowd Counting with Transformers. To the best of our knowledge, this is the first work to adopt a pure Transformer for crowd counting. We observe that TransCrowd can effectively extract semantic crowd information through the self-attention mechanism of the Transformer.
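Self-attention lets every token of the image sequence attend to every other token, so each output token can draw on global context. As an illustration only, here is a generic single-head scaled dot-product self-attention in pure Python. It is not the repository's model code: real Transformers use multiple heads, learned projections and batched tensor operations, and the helper names below are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    """Single-head scaled dot-product self-attention.

    x: (num_tokens x d_model) token features.
    w_q, w_k, w_v: (d_model x d_k) query/key/value projection matrices.
    Returns a (num_tokens x d_k) matrix; each row is a softmax-weighted
    mix of the value vectors of all tokens.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(w_k[0]))
    scores = matmul(q, transpose(k))  # (num_tokens x num_tokens)
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v)


if __name__ == "__main__":
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    identity = [[1.0, 0.0], [0.0, 1.0]]
    print(self_attention(tokens, identity, identity, identity))
```

Because each output row is a convex combination of value vectors, every token's output reflects the whole sequence rather than a local neighbourhood.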


Paper Link

Requirements:

- python >= 3.6
- pytorch >= 1.5
- opencv-python >= 4.0
- scipy >= 1.4.0
- h5py >= 2.10
- pillow >= 7.0.0
- imageio >= 1.18
- timm == 0.1.30

- Download the ShanghaiTech dataset from Baidu-Disk (password: cjnx) or Google-Drive

- Download the UCF-QNRF dataset from here

- Download the JHU-CROWD++ dataset from here

- Download the NWPU-CROWD dataset from Baidu-Disk (password: 3awa) or Google-Drive

cd data

python predataset_xx.py

Replace `xx` with the dataset name: `sh`, `jhu`, `qnrf`, or `nwpu`. Change the dataset path to match your local copy before running the script.

Generate the image file list:

python make_npydata.py
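`make_npydata.py` builds the image lists used for training and testing; its name suggests it stores them as NumPy `.npy` files. The sketch below only illustrates the idea with the standard library, collecting image paths under a directory in a deterministic order. The function name `list_images` and the accepted file extensions are assumptions, not the script's actual behaviour.

```python
from pathlib import Path
from typing import List

# Assumption: dataset images are stored as JPEG or PNG files.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def list_images(root: str) -> List[str]:
    """Return a sorted list of image file paths found recursively under `root`."""
    return sorted(
        str(p)
        for p in Path(root).rglob("*")
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


if __name__ == "__main__":
    # Hypothetical placeholder path; point it at your preprocessed images.
    print(len(list_images("path/to/train/images")))
```

Sorting the paths keeps the list identical across runs, which makes training and evaluation splits reproducible.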

Training examples:

python train.py --dataset ShanghaiA --save_path ./save_file/ShanghaiA --batch_size 24 --model_type 'token'

python train.py --dataset ShanghaiA --save_path ./save_file/ShanghaiA --batch_size 24 --model_type 'gap'

Training requires a single GPU with 24 GB of memory or multiple GPUs. Alternatively, reduce the batch size to fit the available GPU memory.
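The two training commands differ only in `--model_type`. In the TransCrowd paper, these correspond to two ways of turning the Transformer's output sequence into a single count: an extra learnable regression token (`token`) and global average pooling over the output tokens (`gap`). The pure-Python sketch below contrasts the two pooling choices followed by a linear regression head. It assumes, for simplicity, that both variants see a sequence whose first token is the regression token and that `gap` averages only the remaining patch tokens; `pool_tokens` and `predict_count` are hypothetical names, not the repository's API.

```python
from typing import List, Sequence

Vector = List[float]


def pool_tokens(tokens: Sequence[Vector], model_type: str) -> Vector:
    """Reduce Transformer output tokens to one feature vector.

    Assumption: tokens[0] is the output of a learnable regression token
    prepended to the input; tokens[1:] are the patch tokens.
    """
    if model_type == "token":
        # Use the regression token's output directly.
        return list(tokens[0])
    if model_type == "gap":
        # Global average pooling over the patch tokens.
        patches = tokens[1:]
        dim = len(patches[0])
        return [sum(t[i] for t in patches) / len(patches) for i in range(dim)]
    raise ValueError(f"unknown model_type: {model_type!r}")


def predict_count(feature: Vector, weights: Vector, bias: float) -> float:
    """Linear regression head mapping the pooled feature to a crowd count."""
    return sum(f * w for f, w in zip(feature, weights)) + bias


if __name__ == "__main__":
    tokens = [[2.0, 2.0], [1.0, 3.0], [3.0, 5.0]]
    print(pool_tokens(tokens, "token"))  # [2.0, 2.0]
    print(pool_tokens(tokens, "gap"))    # [2.0, 4.0]
```

The `token` variant lets attention decide what to summarize, while `gap` weights every patch equally; both feed a regression head supervised only by the image-level count.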

Test example:

Download the pretrained model from Baidu-Disk (password: 8a8n).

python test.py --dataset ShanghaiA --pre model_best.pth --model_type 'gap'
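Crowd-counting results are conventionally reported as MAE and MSE of the per-image counts, where "MSE" is by convention the root of the mean squared error. A minimal sketch of both metrics, assuming you already have lists of predicted and ground-truth counts; `counting_errors` is a hypothetical helper, not the code inside `test.py`.

```python
import math
from typing import Sequence, Tuple


def counting_errors(pred: Sequence[float], gt: Sequence[float]) -> Tuple[float, float]:
    """Return (MAE, MSE) over per-image crowd counts.

    Following common crowd-counting practice, "MSE" is the square root
    of the mean squared error.
    """
    if len(pred) != len(gt) or not pred:
        raise ValueError("pred and gt must be non-empty and the same length")
    diffs = [p - g for p, g in zip(pred, gt)]
    mae = sum(abs(d) for d in diffs) / len(diffs)
    mse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return mae, mse


if __name__ == "__main__":
    print(counting_errors([10.0, 20.0], [12.0, 18.0]))  # (2.0, 2.0)
```

MAE reflects average accuracy, while the root-squared variant penalizes large errors on individual images more heavily.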

If you find this project useful for your research, please cite:

@article{liang2022transcrowd,
  title={TransCrowd: weakly-supervised crowd counting with transformers},
  author={Liang, Dingkang and Chen, Xiwu and Xu, Wei and Zhou, Yu and Bai, Xiang},
  journal={Science China Information Sciences},
  volume={65},
  number={6},
  pages={1--14},
  year={2022},
  publisher={Springer}
}