Note to reviewers: all required generated files are in the `generated_files` folder.

- New data files (`.csv`) are deposited into a specified folder.

- All files in the specified folder should be combined into a single dataset.

- Feature columns are `lastmonth_activity`, `lastyear_activity`, and `number_of_employees`.

- Features are numerical.

- The label column to predict is `exited`.

- Labels are binary.

The following items are set in `config.json`:

| Key | Description |
| --- | --- |
| `input_folder_path` | contains all files to be used in the current dataset |
| `output_folder_path` | a copy of the current dataset will be written here |
| `test_data_path` | a withheld dataset in CSV format for model testing |
| `output_model_path` | a copy of the current model will be written here |
| `prod_deployment_path` | the model stored here is served by a web API |
| `db_path` | path to a SQLite database |
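A minimal sketch of reading these settings, assuming `config.json` is a flat JSON object whose keys are exactly the names in the table and whose values are paths relative to the folder holding `config.json`. The helper name `load_config` is hypothetical, not taken from the project code.

```python
import json
from pathlib import Path

# Keys from the config table; this sketch treats all of them as required.
REQUIRED_KEYS = (
    "input_folder_path",
    "output_folder_path",
    "test_data_path",
    "output_model_path",
    "prod_deployment_path",
    "db_path",
)


def load_config(config_file="config.json"):
    """Hypothetical helper: load config.json and resolve every path value."""
    config_file = Path(config_file)
    with config_file.open() as f:
        raw = json.load(f)
    missing = [key for key in REQUIRED_KEYS if key not in raw]
    if missing:
        raise KeyError(f"config.json is missing keys: {missing}")
    # Assumption: values are relative to the directory containing config.json.
    base = config_file.resolve().parent
    return {key: base / raw[key] for key in REQUIRED_KEYS}
```

Resolving paths once at load time means the other scripts do not depend on the working directory the cron job happens to start in.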

- Locate all files in the input data folder.

- Compile the data in all files into a single dataset.

- Deduplicate the rows.
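The three ingestion steps can be sketched with pandas as follows. It assumes every `.csv` file in the input folder shares the same columns; the function name `ingest` and the output file name `finaldata.csv` are hypothetical, while `ingestedfiles.txt` is the file name listed among the deployed files.

```python
from pathlib import Path

import pandas as pd


def ingest(input_folder, output_folder, output_name="finaldata.csv"):
    """Combine every CSV in input_folder into one deduplicated dataset.

    Hypothetical helper; output_name is an assumed file name.
    """
    input_folder, output_folder = Path(input_folder), Path(output_folder)
    # 1. Locate all CSV files (sorted so the record is reproducible).
    csv_files = sorted(input_folder.glob("*.csv"))
    if not csv_files:
        raise FileNotFoundError(f"no .csv files in {input_folder}")
    # 2. Compile them into a single DataFrame.
    combined = pd.concat((pd.read_csv(f) for f in csv_files), ignore_index=True)
    # 3. Drop rows that appear more than once across (or within) files.
    combined = combined.drop_duplicates().reset_index(drop=True)

    output_folder.mkdir(parents=True, exist_ok=True)
    combined.to_csv(output_folder / output_name, index=False)
    # Record which source files went into this dataset, one name per line.
    (output_folder / "ingestedfiles.txt").write_text(
        "\n".join(f.name for f in csv_files) + "\n"
    )
    return combined
```

Deduplicating after concatenation (rather than per file) is what removes records that were delivered in more than one drop.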

The model is a logistic regression classifier, `sklearn.linear_model.LogisticRegression`.
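A minimal training sketch, assuming the ingested dataset is a pandas DataFrame containing the feature and label columns named earlier. The project's actual hyperparameters are not stated here, so this uses scikit-learn defaults except for `max_iter` (an assumption); `train_model` is a hypothetical name.

```python
import pickle
from pathlib import Path

from sklearn.linear_model import LogisticRegression

FEATURES = ["lastmonth_activity", "lastyear_activity", "number_of_employees"]
LABEL = "exited"


def train_model(data, model_folder):
    """Fit a logistic regression on the feature columns and pickle it.

    Hypothetical helper; writes trainedmodel.pkl into model_folder.
    """
    X = data[FEATURES].to_numpy()
    y = data[LABEL].to_numpy()
    # Assumption: max_iter raised from the default of 100 to reduce
    # convergence warnings on unscaled activity counts.
    model = LogisticRegression(max_iter=1000)
    model.fit(X, y)

    model_folder = Path(model_folder)
    model_folder.mkdir(parents=True, exist_ok=True)
    with (model_folder / "trainedmodel.pkl").open("wb") as f:
        pickle.dump(model, f)
    return model
```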

The following diagnostic items are tracked for each dataset.

- Column-wise mean, median, and standard deviation.

- Column-wise percent of missing values.

- Time required to ingest dataset.

- Time required to train on the dataset.

- Model F1 score.

- All installed pip packages, with their current and latest-available versions.
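The diagnostics listed above could be computed roughly as below. This is a sketch under assumptions: the helper names are hypothetical, `f1_score` uses scikit-learn's default binary averaging (which suits the binary `exited` label), and the package check shells out to `pip list --outdated --format=json`, which reports only packages that have a newer release rather than every installed package.

```python
import json
import subprocess
import sys
import time

import pandas as pd
from sklearn.metrics import f1_score


def summary_statistics(data, columns):
    """Column-wise mean, median and standard deviation (one row per column)."""
    numeric = data[columns]
    return pd.DataFrame({
        "mean": numeric.mean(),
        "median": numeric.median(),
        "std": numeric.std(),
    })


def percent_missing(data):
    """Percentage of missing values in each column."""
    return data.isna().mean() * 100


def timed(func, *args, **kwargs):
    """Run func and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    return result, time.perf_counter() - start


def model_f1(model, X_test, y_test):
    """F1 score of the model on the withheld test data."""
    return f1_score(y_test, model.predict(X_test))


def outdated_packages():
    """Installed vs. latest-available versions of outdated pip packages."""
    out = subprocess.run(
        [sys.executable, "-m", "pip", "list", "--outdated", "--format=json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return pd.DataFrame(json.loads(out), columns=["name", "version", "latest_version"])
```

Wrapping ingestion and training calls in `timed` yields the two timing figures without changing those functions.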

To serve the model and its associated metrics, the following files are written to the deployment folder specified in `config.json` (`prod_deployment_path`):

| File | Contents |
| --- | --- |
| `trainedmodel.pkl` | the trained model |
| `dataset_summary.csv` | summary statistics of the dataset used during training |
| `ingestedfiles.txt` | list of the source files used for training |
| `latestscore.txt` | F1 score as well as timing information |
| `packages.csv` | installed vs. latest-available pip package versions, generated during training |
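Copying these artifacts into the deployment folder can be as simple as the sketch below. It assumes each file has already been written to one of a few known source folders; which folder holds which file is an assumption, as is the helper name `deploy`.

```python
import shutil
from pathlib import Path

# File names from the deployment table.
DEPLOYED_FILES = (
    "trainedmodel.pkl",
    "dataset_summary.csv",
    "ingestedfiles.txt",
    "latestscore.txt",
    "packages.csv",
)


def deploy(source_folders, prod_deployment_path):
    """Copy each deployed file from the first source folder that contains it.

    Hypothetical helper: source_folders is an ordered list of directories,
    e.g. the model output folder and the dataset output folder.
    """
    target = Path(prod_deployment_path)
    target.mkdir(parents=True, exist_ok=True)
    for name in DEPLOYED_FILES:
        for folder in map(Path, source_folders):
            candidate = folder / name
            if candidate.exists():
                shutil.copy2(candidate, target / name)
                break
        else:  # no folder contained this file
            raise FileNotFoundError(f"{name} not found in {source_folders}")
    return sorted(p.name for p in target.iterdir())
```

Failing loudly on a missing file avoids serving a new model next to stale metrics.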

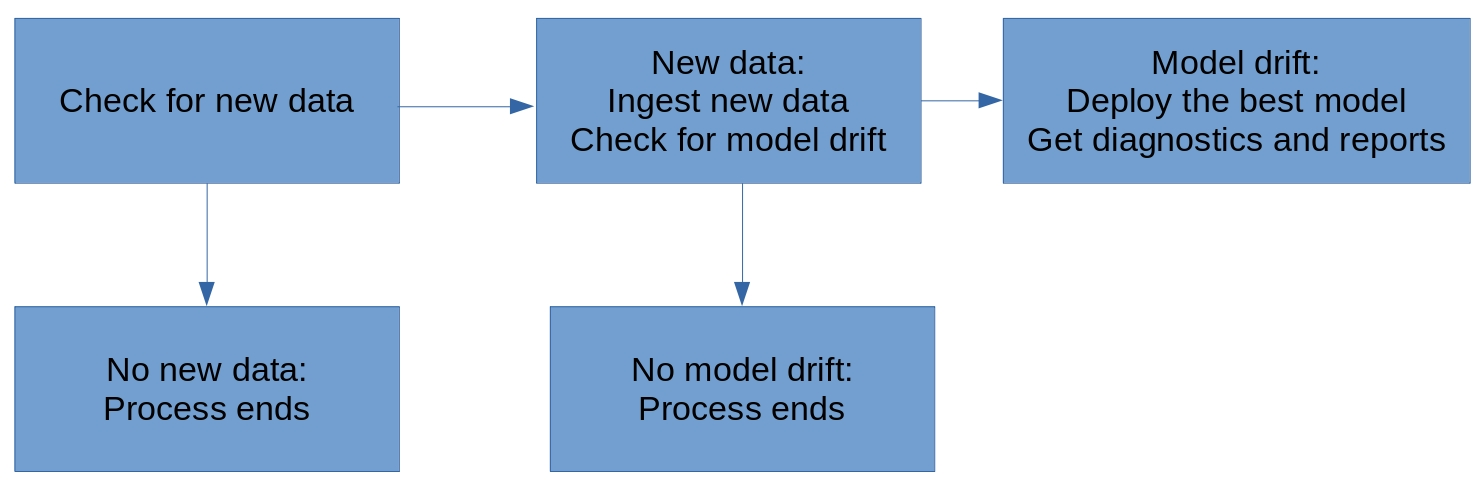

Automation is accomplished with a cron job that runs `fullprocess.py` at regular intervals.
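One check a periodically scheduled script commonly needs is whether any new data has arrived since the last run. A minimal sketch, assuming the comparison is between the `.csv` files currently in the input folder and the names recorded in `ingestedfiles.txt` (one per line); the function name `find_new_files` is hypothetical and this is not taken from `fullprocess.py`.

```python
from pathlib import Path


def find_new_files(input_folder, ingested_record):
    """Return CSV files in input_folder that are not listed in ingested_record.

    Hypothetical helper: ingested_record is assumed to hold one file name per
    line; a missing record means nothing has been ingested yet.
    """
    record = Path(ingested_record)
    already = set()
    if record.exists():
        already = {line.strip() for line in record.read_text().splitlines() if line.strip()}
    current = {p.name for p in Path(input_folder).glob("*.csv")}
    return sorted(current - already)
```

An empty result lets the script exit early, so a frequent cron schedule stays cheap when no data has been deposited.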

Deployed files are served through an API implemented with Flask in `app.py`.

Tests for this API are provided in `apicalls.py`.

*Note:* One limitation of this implementation, as specified by the project rubric, is that the API can only provide predictions for files residing on the web app's file system.
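A minimal Flask sketch of a prediction endpoint that illustrates this limitation: the client sends a path, and the server opens that path on its own disk. The route `/prediction`, the JSON field `filepath`, and the `MODEL_PATH` config entry are assumptions, not taken from `app.py`.

```python
import pickle

import pandas as pd
from flask import Flask, jsonify, request

FEATURES = ["lastmonth_activity", "lastyear_activity", "number_of_employees"]

app = Flask(__name__)
# Assumed location of the deployed model; in the project this would be
# derived from prod_deployment_path in config.json.
app.config["MODEL_PATH"] = "production_deployment/trainedmodel.pkl"


@app.route("/prediction", methods=["POST"])
def prediction():
    payload = request.get_json(silent=True) or {}
    filepath = payload.get("filepath")
    if not filepath:
        return jsonify(error="JSON body must include 'filepath'"), 400
    # The path is resolved on the server, so only files on the web app's
    # own file system can be scored.
    try:
        data = pd.read_csv(filepath)
    except FileNotFoundError:
        return jsonify(error=f"{filepath} not found on the server"), 404
    with open(app.config["MODEL_PATH"], "rb") as f:
        model = pickle.load(f)
    preds = model.predict(data[FEATURES].to_numpy())
    return jsonify(predictions=[int(p) for p in preds])
```

Accepting uploaded file contents instead of a path would lift the limitation, at the cost of handling request size and parsing on the server.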