What it is. Yet another implementation of Ultralytics's yolov5, with modules refactored to make it deployable on backends such as LibTorch, ONNX Runtime, TVM and so on.

About the code. We follow the design principle of DETR:

object detection should not be more difficult than classification, and should not require complex libraries for training and inference.

yolort is very simple to implement and experiment with. You like the implementation of torchvision's faster-rcnn, retinanet or detr? You like yolov5? You love yolort!

- Support exporting to TorchScript model. Oct. 8, 2020.
- Support inferring with LibTorch cpp interface. Oct. 10, 2020.
- Add TorchScript cpp inference example. Nov. 4, 2020.
- Refactor YOLO modules and support dynamic batching inference. Nov. 16, 2020.
- Support exporting to ONNX, and inferring with ONNXRuntime interface. Nov. 17, 2020.
- Add graph visualization tools. Nov. 21, 2020.
- Add TVM compile and inference notebooks. Feb. 5, 2021.

There are no extra compiled components in yolort and package dependencies are minimal, so the code is very simple to use.

- Above all, follow the official instructions to install PyTorch 1.7.0+ and torchvision 0.8.1+.

- Installation via pip

  Simple installation from PyPI:

  ```bash
  pip install -U yolort
  ```

  Or from source:

  ```bash
  # clone yolort repository locally
  git clone https://github.com/zhiqwang/yolov5-rt-stack.git
  cd yolov5-rt-stack
  # install in editable mode
  pip install -e .
  ```

- Install pycocotools (for evaluation on COCO):

  ```bash
  pip install -U 'git+https://github.com/ppwwyyxx/cocoapi.git#subdirectory=PythonAPI'
  ```
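If you want to confirm that the installed PyTorch and torchvision meet the minimum versions stated above, a small check like the following can help. This is a minimal sketch using only the standard library; `parse_version`, `meets_minimum` and `check_installation` are hypothetical helpers, not part of yolort. The parser is deliberately simplified: it drops local suffixes such as `+cu101` and keeps only the leading digits of each release segment, so it does not implement full PEP 440 ordering.

```python
import re

# Minimum versions taken from the installation notes above.
MIN_TORCH = (1, 7, 0)
MIN_TORCHVISION = (0, 8, 1)


def parse_version(version: str) -> tuple:
    """Turn a version string like '1.7.0+cu101' into a (major, minor, patch) tuple.

    Simplified parsing (hypothetical helper): the local suffix after '+' is
    dropped and only the leading digits of each of the first three segments
    are kept, so '2.1.0a0' becomes (2, 1, 0).
    """
    public = str(version).split("+", 1)[0]
    parts = []
    for piece in public.split(".")[:3]:
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    # Pad short versions such as '1.7' to three components.
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts)


def meets_minimum(version: str, minimum: tuple) -> bool:
    """Return True if `version` is at least `minimum` (numeric tuple comparison)."""
    return parse_version(version) >= minimum


def check_installation() -> None:
    """Print whether the installed torch and torchvision are recent enough."""
    import torch
    import torchvision

    for name, version, minimum in (
        ("torch", torch.__version__, MIN_TORCH),
        ("torchvision", torchvision.__version__, MIN_TORCHVISION),
    ):
        status = "ok" if meets_minimum(version, minimum) else "too old"
        print(f"{name} {version}: {status}")
```

After installing, calling `check_installation()` prints one line per package. Comparing integer tuples rather than raw strings avoids the classic pitfall where `"1.10"` sorts before `"1.7"`.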

To read a source of image(s) and detect its objects 🔥

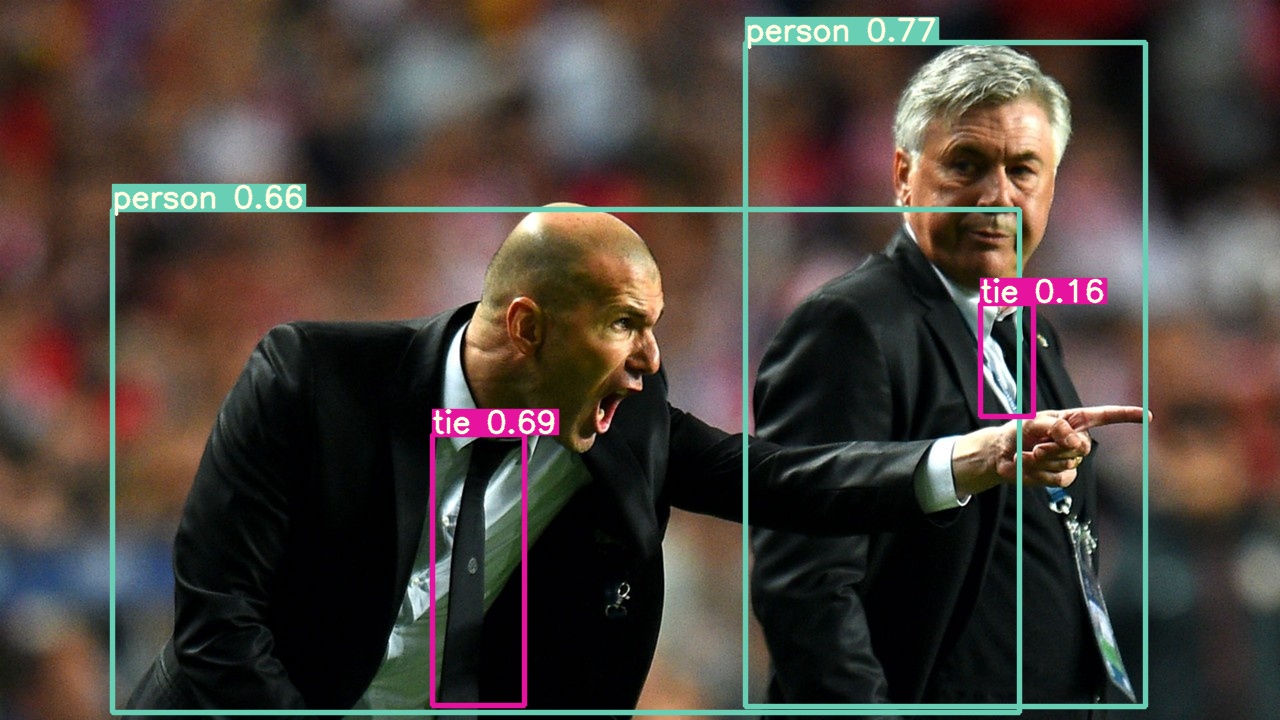

```python
from yolort.models import yolov5s

# Load model
model = yolov5s(pretrained=True, score_thresh=0.45)
model.eval()

# Perform inference on an image file
predictions = model.predict('bus.jpg')
# Perform inference on a list of image files
predictions = model.predict(['bus.jpg', 'zidane.jpg'])
```
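To post-process the output of `model.predict`, something like the helper below could be used. This sketch assumes that `predictions` is a list with one dict per image holding `"boxes"`, `"scores"` and `"labels"`, following the torchvision detection convention; check the actual yolort output before relying on it. `summarize_detections` and the `class_names` list are hypothetical, and mapping a label to `class_names[label]` assumes labels index directly into that list. Since the model already discards detections below `score_thresh`, `min_score` is only useful for applying a stricter threshold afterwards.

```python
def summarize_detections(predictions, class_names, min_score=0.0):
    """Flatten per-image detections into (image_index, class_name, score, box) rows.

    Assumes (not confirmed by yolort's docs here) that each element of
    `predictions` is a dict with "boxes", "scores" and "labels" entries.
    Works with plain Python lists as well as tensors, because every value
    is converted with float()/int() before use.
    """
    rows = []
    for image_index, detections in enumerate(predictions):
        for box, score, label in zip(
            detections["boxes"], detections["scores"], detections["labels"]
        ):
            score = float(score)
            # Skip detections weaker than the extra threshold.
            if score < min_score:
                continue
            name = class_names[int(label)]
            rows.append((image_index, name, score, [float(v) for v in box]))
    return rows
```

For example, `summarize_detections(predictions, class_names, min_score=0.6)` keeps only detections scoring at least 0.6 and returns plain Python values that are easy to log or serialize.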

The models are also available via PyTorch Hub. To load yolov5s with pretrained weights, simply do:

```python
import torch

model = torch.hub.load('zhiqwang/yolov5-rt-stack', 'yolov5s', pretrained=True)
```

The module state of yolort differs in places from that of ultralytics/yolov5, but checkpoints trained with ultralytics can be loaded with minor changes, and we have converted ultralytics's releases v3.1 and v4.0. For example, to convert a yolov5s (release 4.0) model, just run the following script:

```python
import torch
from yolort.utils import update_module_state_from_ultralytics

# Update module state from ultralytics
model = update_module_state_from_ultralytics(arch='yolov5s', version='v4.0')
# Save updated module
torch.save(model.state_dict(), 'yolov5s_updated.pt')
```

We provide a notebook that demonstrates how the model is transformed into TorchScript, and a C++ example of how to run inference with the transformed TorchScript model. For details, see the GitHub Actions workflows.
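Conceptually, the checkpoint conversion done by `update_module_state_from_ultralytics` involves mapping ultralytics parameter names onto yolort's module layout. The sketch below shows only that general idea of renaming state-dict keys by prefix; it assumes the differences are purely naming ones, and both `PREFIX_MAP` and `remap_state_dict` are hypothetical illustrations, not yolort's actual mapping or code.

```python
from collections import OrderedDict

# Hypothetical prefix mapping, for illustration only. It is NOT the real
# correspondence between ultralytics/yolov5 and yolort parameter names.
PREFIX_MAP = {
    "model.0.": "backbone.body.0.",
    "model.24.": "head.",
}


def remap_state_dict(state_dict, prefix_map):
    """Return a new ordered state dict with matching key prefixes renamed.

    Keys that match no prefix are copied unchanged; values (tensors in a
    real checkpoint) are passed through untouched.
    """
    remapped = OrderedDict()
    for key, value in state_dict.items():
        new_key = key
        for old_prefix, new_prefix in prefix_map.items():
            if key.startswith(old_prefix):
                new_key = new_prefix + key[len(old_prefix):]
                break
        remapped[new_key] = value
    return remapped
```

With a real checkpoint, the renamed dict would then be passed to `model.load_state_dict(...)`. Keeping the trailing dot in each prefix prevents `model.2.` from accidentally matching `model.24.`.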

yolort can now draw the model graph directly; check out our visualize-jit-models notebook to see how to visualize the model graph.

- The implementation of yolov5 borrows code from ultralytics.
- This repo borrows the architecture design and part of the code from torchvision.

We appreciate all contributions. If you are planning to contribute back bug fixes, please do so without any further discussion. If you plan to contribute new features, utility functions or extensions, please first open an issue and discuss the feature with us. BTW, leave a 🌟 if you like it; this means a lot to us :)