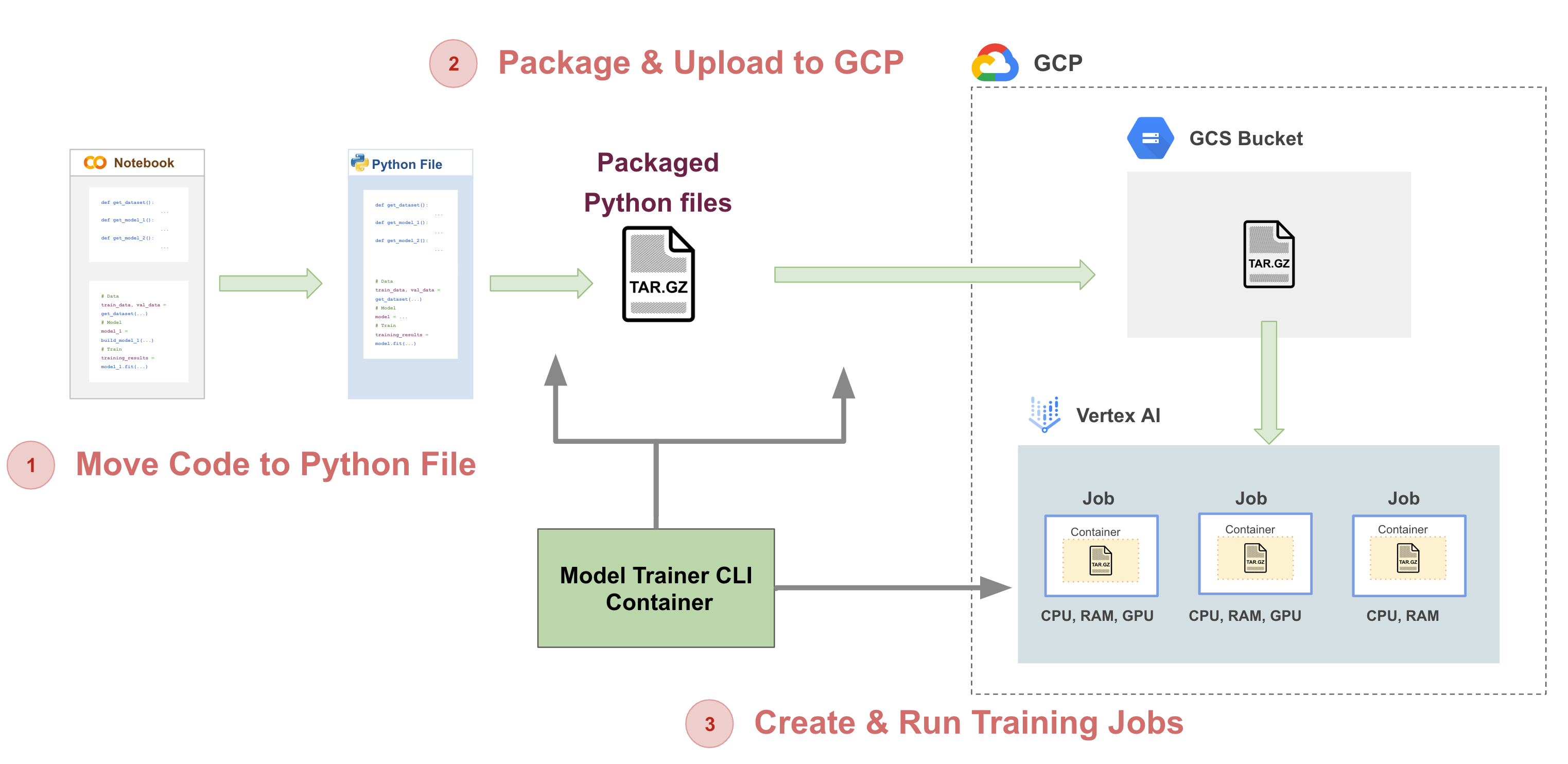

In this tutorial we will move code from notebooks to a Python file and then run serverless training jobs using Vertex AI. Before you begin, make sure you:

- Have Docker installed

- Cloned this repository to your local machine with a terminal up and running

- Check that Docker is running with the following command: `docker run hello-world`

Install Docker Desktop

- To make sure we can run multiple containers, go to Docker > Preferences > Resources and, under "Memory", make sure you have selected more than 4 GB

Follow the instructions for your operating system.

If you already have a preferred text editor, skip this step.

In this tutorial we will set up a container to manage packaging Python code for training and creating jobs on Vertex AI (AI Platform) to run training tasks.

To complete this tutorial you will need a GCP account and a WandB account, both already set up.

- Clone or download from here

Search for each of these in the GCP search bar and click "Enable" to enable these APIs:

- Vertex AI API

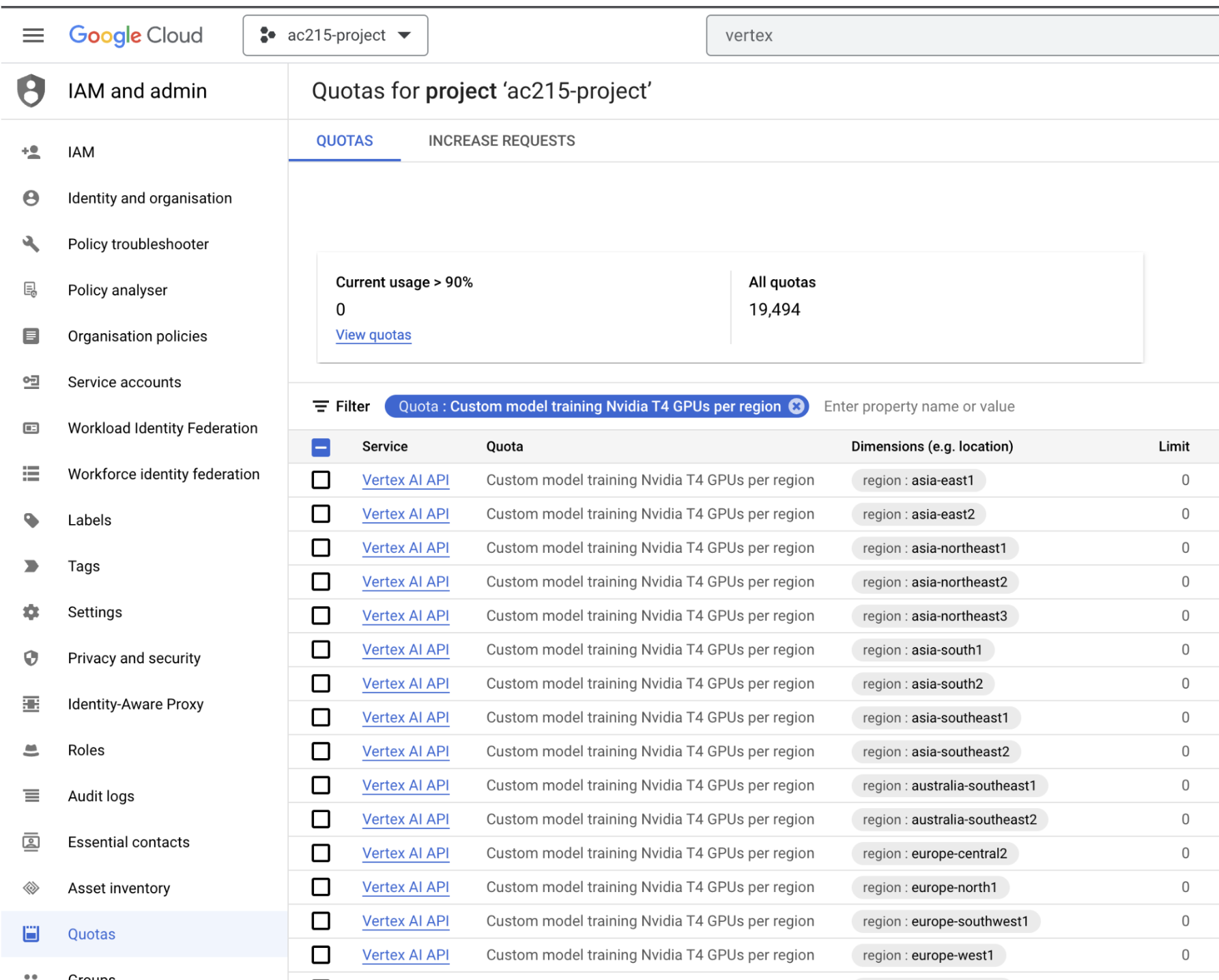

In order to do serverless training we need access to GPUs from GCP.

- Go to Quotas in your GCP console

- Filter by

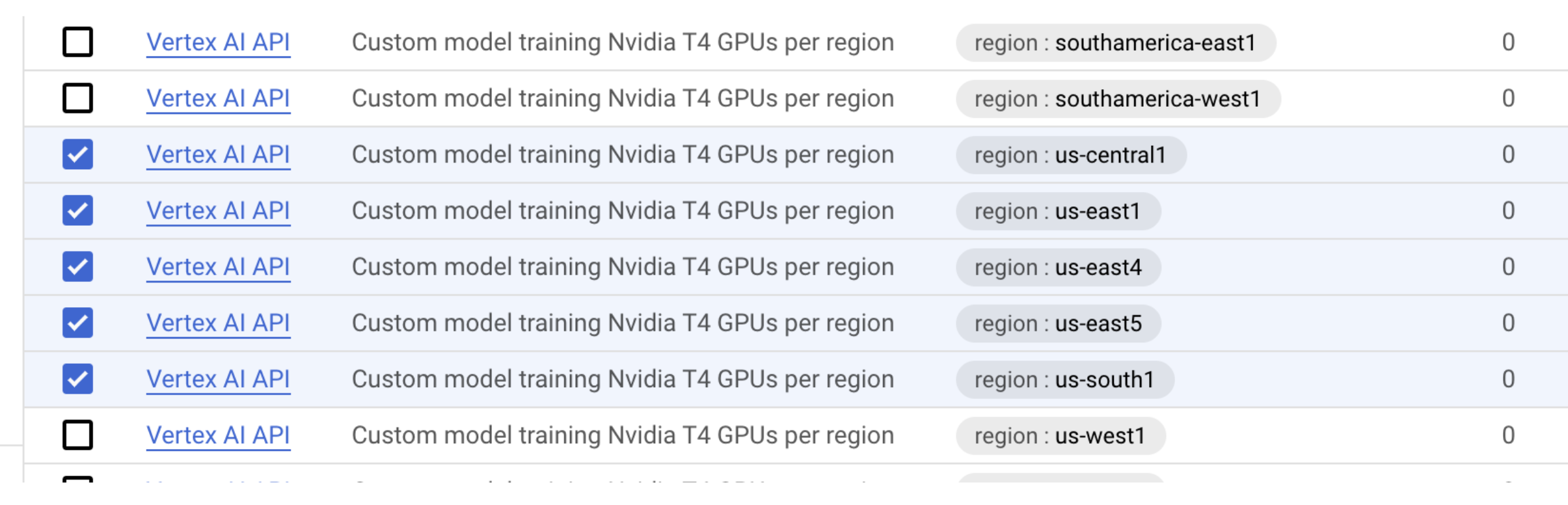

Quota: Custom model trainingand select a GPU type, e.g:Custom model training Nvidia T4 GPUs per region

- Select a few regions

- Click on

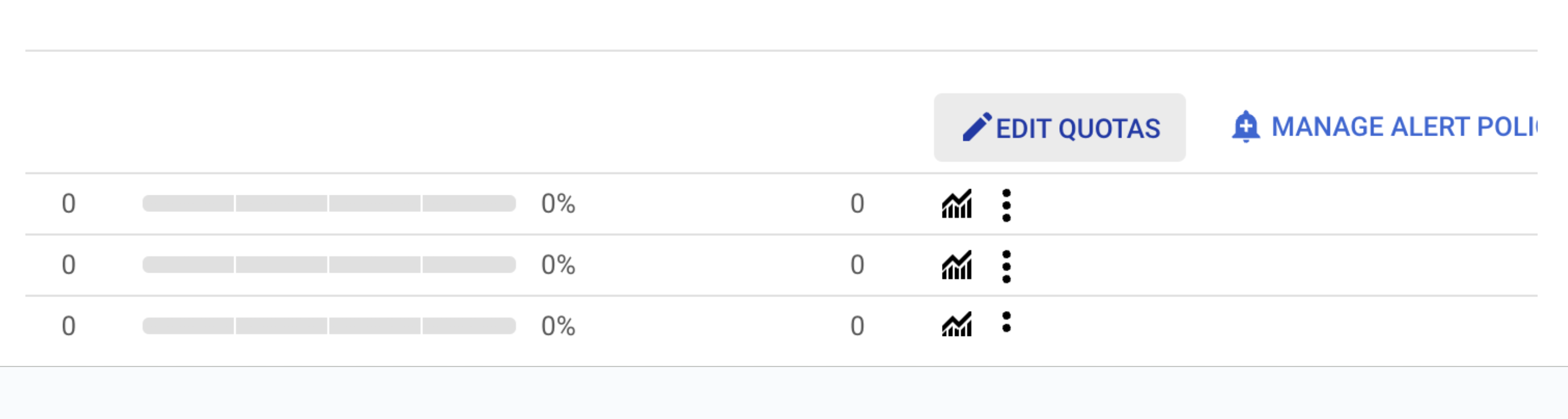

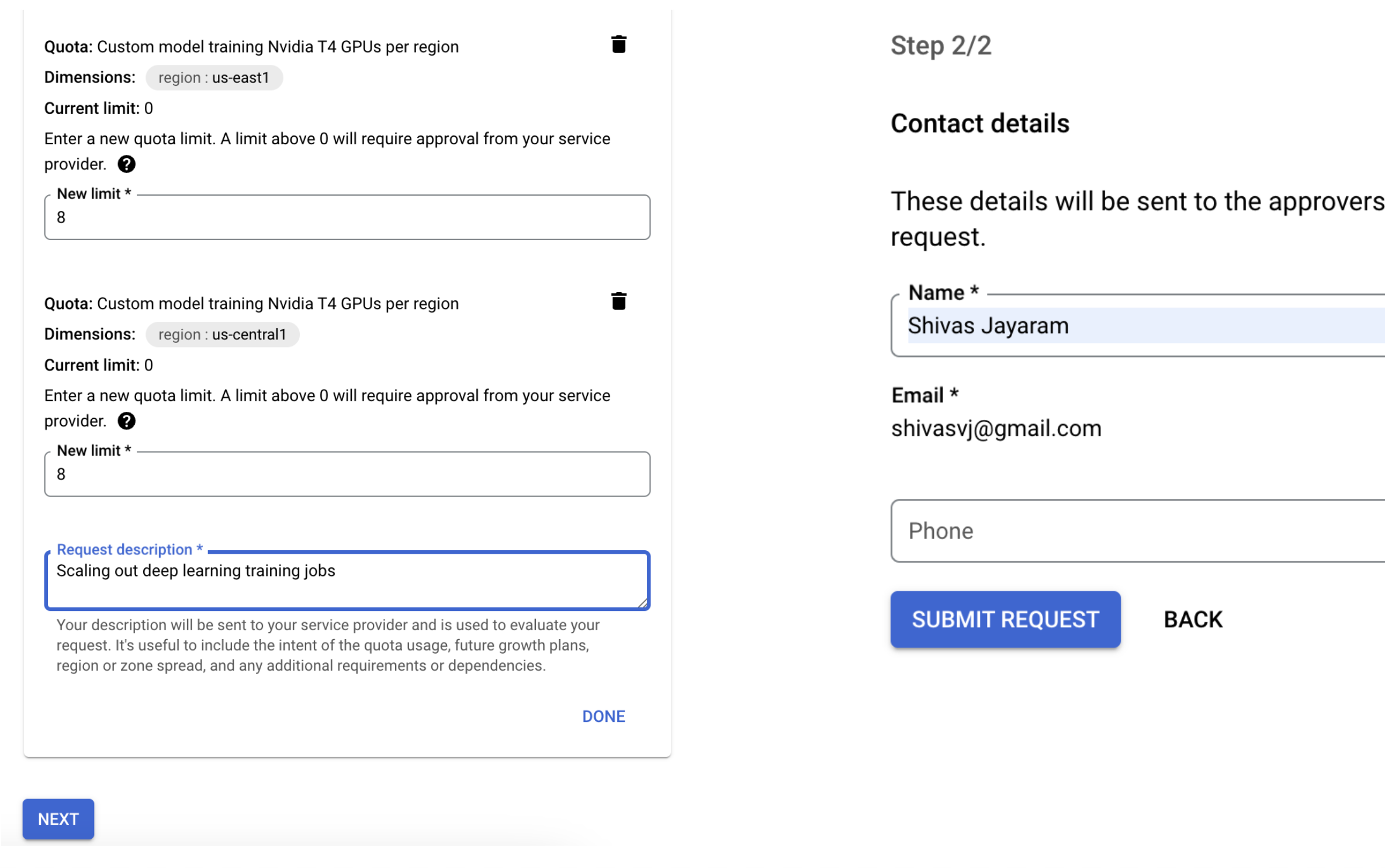

EDIT QUOTAS

- Put in a new limit and click

NEXT - Enter your Name and

SUBMIT REQUEST

- This processes usually takes a few hours to get approved

- Also based on how new your GCP account is, you may not be approved

The next step is to give our container access to Storage buckets and Vertex AI (AI Platform) in GCP.

It is important that no secret information ends up in Git, so we will manage these files outside of the Git folder. At the same level as the `model-training` folder, create a folder called `secrets`.

Your folder structure should look like this:

|-model-training

|-secrets

- Here are the steps to create a service account:

- To set up a service account, go to the GCP Console and search for "Service accounts" in the top search box, or go to "IAM & Admin" > "Service accounts" from the top-left menu. Create a new service account called "model-trainer". For "Service account permissions" select "Storage Admin", "AI Platform Admin", and "Vertex AI Administrator".

- This will create a service account

- In the "Actions" column on the right, click the vertical ... and select "Manage keys". A prompt to create a private key for "model-trainer" will appear; select "JSON" and click create. This will download a private key JSON file to your computer. Copy this JSON file into the `secrets` folder and rename it to `model-trainer.json`.
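Before mounting the `secrets` folder into the container, it can help to confirm that the downloaded key looks like a service account key. The following is a minimal sketch, not part of the repository: the function name `check_service_account_key` is hypothetical, and the list of required fields is an assumption based on the usual layout of a JSON key file.

```python
import json
from pathlib import Path

# Fields assumed to be present in a service account key downloaded as JSON.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_service_account_key(path):
    """Return a list of problems found in the key file (empty if none)."""
    key_path = Path(path)
    if not key_path.is_file():
        return [f"{key_path} does not exist"]
    try:
        data = json.loads(key_path.read_text())
    except json.JSONDecodeError as exc:
        return [f"{key_path} is not valid JSON: {exc}"]
    if not isinstance(data, dict):
        return [f"{key_path} does not contain a JSON object"]
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in data]
    if data.get("type") != "service_account":
        problems.append('"type" is not "service_account"')
    return problems
```

Run from inside the `model-training` folder, `check_service_account_key("../secrets/model-trainer.json")` should return an empty list if the key was copied and renamed as described.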

We need a bucket to store the packaged python files that we will use for training.

- Go to https://console.cloud.google.com/storage/browser

- Create a bucket `mushroom-app-trainer` [REPLACE WITH YOUR BUCKET NAME]

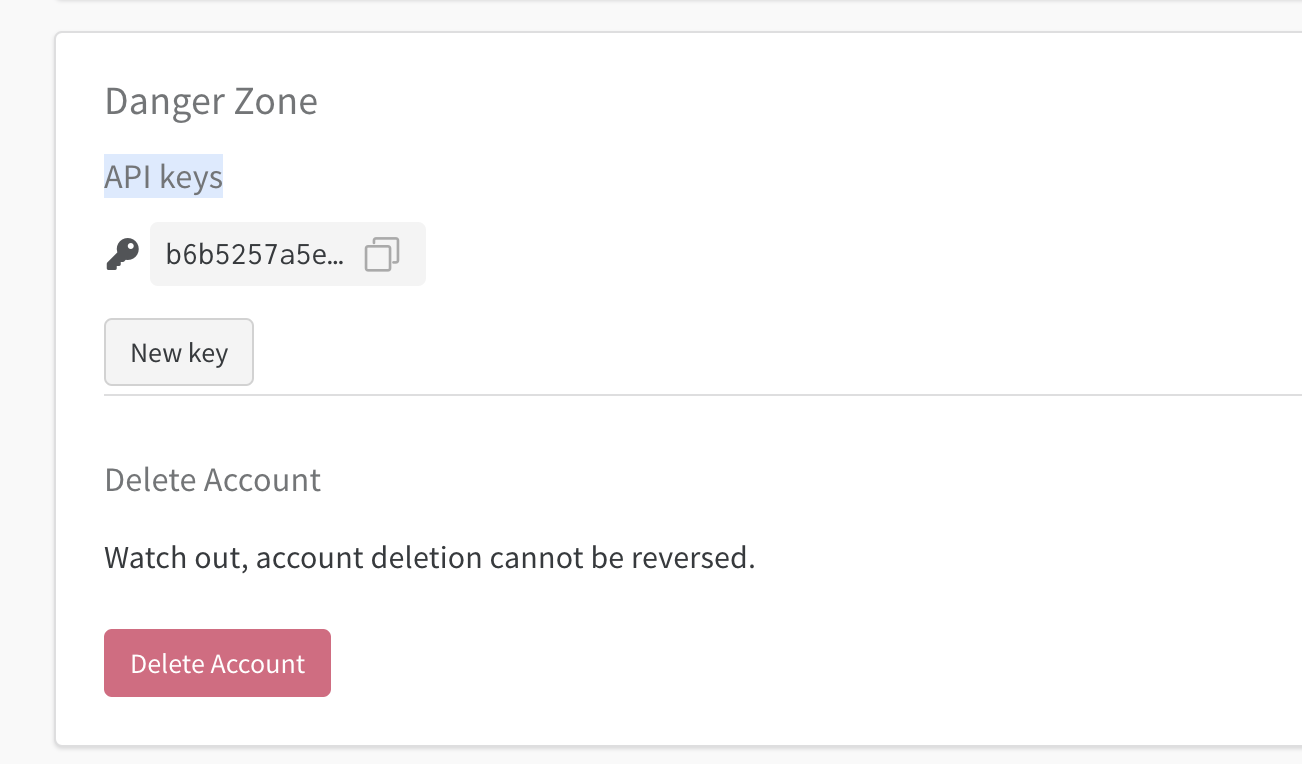
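Because bucket names are shared across all of Cloud Storage, you will need to pick your own name, and it has to follow the naming rules. The sketch below is a simplified check of those rules (3 to 63 characters; lowercase letters, digits, dashes, underscores and dots; starting and ending with a letter or digit) and builds the `gs://` URI used later in the tutorial. The function names are hypothetical and the check does not cover every rule.

```python
import re

# Simplified pattern: 3-63 characters, lowercase letters, digits, dashes,
# underscores and dots, starting and ending with a letter or digit.
_BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9._-]{1,61}[a-z0-9]")


def is_plausible_bucket_name(name):
    """Return True if `name` passes this simplified naming check."""
    if not _BUCKET_NAME.fullmatch(name):
        return False
    # Names may not start with "goog" or contain "google".
    return not name.startswith("goog") and "google" not in name


def bucket_uri(name):
    """Build the gs:// URI for a bucket name, rejecting implausible names."""
    if not is_plausible_bucket_name(name):
        raise ValueError(f"not a valid bucket name: {name!r}")
    return f"gs://{name}"
```

For example, `bucket_uri("mushroom-app-trainer")` gives the value used for `GCS_BUCKET_URI` in this tutorial.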

We want to track our model training runs using WandB. Get the API Key for WandB:

- Log in to WandB

- Go to User settings

- Scroll down to the `API keys` section

- Copy the key

- Set an environment variable using your terminal: `export WANDB_KEY=...`
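On the Python side, training code can read this variable and use it to authenticate. The sketch below is an illustration under assumptions, not the code in this repository: `get_wandb_key` and `login_to_wandb` are hypothetical helper names, and only `wandb.login(key=...)` is a call from the WandB library.

```python
import os


def get_wandb_key(env=None):
    """Return the WandB API key from WANDB_KEY, or raise a clear error."""
    env = os.environ if env is None else env
    key = env.get("WANDB_KEY", "").strip()
    if not key:
        raise RuntimeError("WANDB_KEY is not set; export it before starting the container")
    return key


def login_to_wandb():
    """Authenticate with WandB using the key from the environment."""
    # Imported here so get_wandb_key() can be used without wandb installed.
    import wandb

    wandb.login(key=get_wandb_key())
```

Failing early with a clear message is a design choice: a missing key otherwise tends to surface only after the job has already started.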

Based on your OS, run the startup script to make building & running the container easy

This is what your docker-shell file will look like:

    export IMAGE_NAME=model-training-cli
    export BASE_DIR=$(pwd)
    export SECRETS_DIR=$(pwd)/../secrets/
    # Replace with your bucket name
    export GCS_BUCKET_URI="gs://mushroom-app-trainer"
    # Replace with your project
    export GCP_PROJECT="mlproject01-207413"

    # Build the image based on the Dockerfile
    docker build -t $IMAGE_NAME -f Dockerfile .
    # M1/2 chip macs use this line
    #docker build -t $IMAGE_NAME --platform=linux/arm64/v8 -f Dockerfile .

    # Run Container (make sure WANDB_KEY is set in your environment)
    docker run --rm --name $IMAGE_NAME -ti \
    -v "$BASE_DIR":/app \
    -v "$SECRETS_DIR":/secrets \
    -e GOOGLE_APPLICATION_CREDENTIALS=/secrets/model-trainer.json \
    -e GCP_PROJECT=$GCP_PROJECT \
    -e GCS_BUCKET_URI=$GCS_BUCKET_URI \
    -e WANDB_KEY=$WANDB_KEY \
    $IMAGE_NAME

- Make sure you are inside the `model-training` folder and open a terminal at this location

- Run `sh docker-shell.sh`, or `docker-shell.bat` on Windows

- The `docker-shell` file assumes you have `WANDB_KEY` set as an environment variable, which is passed into the container
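Once the container is running, a quick way to confirm that the settings arrived is to check the variables passed with `-e` in the `docker-shell` script. This is a minimal sketch you could run inside the container; `check_container_env` is a hypothetical helper, not something shipped in the repository.

```python
import os

# Variables passed with -e in the docker-shell script.
EXPECTED_VARS = (
    "GOOGLE_APPLICATION_CREDENTIALS",
    "GCP_PROJECT",
    "GCS_BUCKET_URI",
    "WANDB_KEY",
)


def check_container_env(env=None):
    """Return a list of problems with the container's environment."""
    env = os.environ if env is None else env
    problems = [f"{name} is not set" for name in EXPECTED_VARS if not env.get(name)]
    bucket = env.get("GCS_BUCKET_URI", "")
    if bucket and not bucket.startswith("gs://"):
        problems.append("GCS_BUCKET_URI should start with gs://")
    key_file = env.get("GOOGLE_APPLICATION_CREDENTIALS", "")
    if key_file and not os.path.isfile(key_file):
        # Usually means the secrets folder was not mounted where expected.
        problems.append(f"key file not found: {key_file}")
    return problems
```

An empty list means all four variables are set and the key file is visible at the mounted path.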

- Open & Review `model-training` > `package` > `setup.py`

- All third-party libraries required for training are specified in `setup.py`

- Open & Review `model-training` > `package` > `trainer` > `task.py`

- All training code for the mushroom app models is in `task.py`

- This script will create a `trainer.tar.gz` file with all the training code bundled inside it

- Then this script will upload this packaged file to your GCS bucket and call it `mushroom-app-trainer.tar.gz`
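To make the two steps concrete, here is a rough Python sketch of bundling a folder into `trainer.tar.gz` and uploading it. It is an illustration only and not the repository's script: it uses the standard `tarfile` module rather than a proper source distribution build, the function names are hypothetical, and the upload assumes the `google-cloud-storage` package is installed, that credentials are available, and that the file goes to the root of the bucket.

```python
import tarfile
from pathlib import Path


def build_trainer_archive(package_dir, archive_path="trainer.tar.gz"):
    """Bundle the package folder into a gzip-compressed tar archive."""
    package_dir = Path(package_dir)
    with tarfile.open(archive_path, "w:gz") as archive:
        # Keep the folder name as the top-level entry inside the archive.
        archive.add(package_dir, arcname=package_dir.name)
    return Path(archive_path)


def bucket_name_from_uri(bucket_uri):
    """Turn 'gs://my-bucket/' into 'my-bucket'."""
    name = bucket_uri[len("gs://"):] if bucket_uri.startswith("gs://") else bucket_uri
    return name.rstrip("/")


def upload_archive(archive_path, bucket_uri, blob_name="mushroom-app-trainer.tar.gz"):
    """Upload the archive to the bucket and return its gs:// URI."""
    # Imported here so the packaging step works without the GCS client installed.
    from google.cloud import storage

    bucket_name = bucket_name_from_uri(bucket_uri)
    blob = storage.Client().bucket(bucket_name).blob(blob_name)
    blob.upload_from_filename(str(archive_path))
    return f"gs://{bucket_name}/{blob_name}"
```

Inside the container, `upload_archive(build_trainer_archive("package"), os.environ["GCS_BUCKET_URI"])` (after `import os`) would mirror the flow described above.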

- Open & Review `model-training` > `cli.sh`

- `cli.sh` is a script file to make calling `gcloud ai custom-jobs create` easier by maintaining all the parameters in the script

- Make any required changes to your `cli.sh` file:

  - Possible values for `ACCELERATOR_TYPE`:

    - NVIDIA_TESLA_T4

    - NVIDIA_TESLA_K80

    - NVIDIA_TESLA_P100

    - NVIDIA_TESLA_P4

    - NVIDIA_TESLA_A100

    - NVIDIA_TESLA_V100

  - Some possible values for `GCP_REGION`:

    - us-central1

    - us-east1

    - us-east4

    - us-south1

    - us-west1

    - ...

- Run `sh cli.sh`

- Edit your `cli.sh` to not pass the `accelerator-type` and `accelerator-count`

- Run `sh cli.sh`
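The GPU and CPU-only runs differ only in whether `accelerator-type` and `accelerator-count` appear in the worker pool spec. The sketch below builds the argument list for `gcloud ai custom-jobs create` in both forms. It is not the content of `cli.sh`: the function name, the default machine type `n1-standard-4`, the default module `trainer.task`, and the executor image placeholder are assumptions to be replaced with the values from your own script.

```python
import shlex


def custom_job_command(display_name, region, package_uri, executor_image_uri,
                       python_module="trainer.task", machine_type="n1-standard-4",
                       accelerator_type=None, accelerator_count=0):
    """Build the argument list for `gcloud ai custom-jobs create`."""
    spec = [
        f"machine-type={machine_type}",
        "replica-count=1",
        f"executor-image-uri={executor_image_uri}",
        f"python-module={python_module}",
    ]
    # Leave both accelerator keys out entirely for a CPU-only job.
    if accelerator_type and accelerator_count > 0:
        spec.append(f"accelerator-type={accelerator_type}")
        spec.append(f"accelerator-count={accelerator_count}")
    return [
        "gcloud", "ai", "custom-jobs", "create",
        f"--region={region}",
        f"--display-name={display_name}",
        f"--python-package-uris={package_uri}",
        f"--worker-pool-spec={','.join(spec)}",
    ]


if __name__ == "__main__":
    # EXECUTOR_IMAGE_URI is a placeholder, not a real image.
    gpu_job = custom_job_command(
        "mushroom-training", "us-central1",
        "gs://mushroom-app-trainer/mushroom-app-trainer.tar.gz",
        "EXECUTOR_IMAGE_URI",
        accelerator_type="NVIDIA_TESLA_T4", accelerator_count=1,
    )
    print(shlex.join(gpu_job))
```

Dropping the two accelerator arguments from the call produces the CPU-only variant of the same command.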

- Go to Vertex AI Custom Jobs

- You will see the newly created job ready to be provisioned to run.

- Go to WandB

- Select the project `mushroom-training-vertex-ai`

- You will see the training metrics tracked and automatically updated
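The metrics appear in WandB because the training code logs them to a run in that project. As a rough sketch of how this kind of tracking can be wired up (not the code in `task.py`): the helper names and the shape of `history` (metric name mapped to a list of per-epoch values) are assumptions, while `wandb.init`, `run.log`, and `run.finish` are calls from the WandB library.

```python
def log_training_history(run, history):
    """Log one row per epoch; `history` maps metric name to a list of values."""
    epochs = max((len(values) for values in history.values()), default=0)
    for epoch in range(epochs):
        row = {
            name: values[epoch]
            for name, values in history.items()
            if epoch < len(values)
        }
        row["epoch"] = epoch
        run.log(row)
    return epochs


def track_run(history, project="mushroom-training-vertex-ai"):
    """Create a WandB run in the given project and log the history to it."""
    # Imported here so log_training_history() can be used without wandb installed.
    import wandb

    run = wandb.init(project=project)
    try:
        log_training_history(run, history)
    finally:
        run.finish()
```

In practice many training loops log each epoch as it finishes rather than at the end, which is what makes the charts update while the job is still running.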

- Open & Review `model-training` > `cli-multi-gpu.sh`

- Open & Review `model-training` > `package` > `trainer` > `task_multi_gpu.py`

- `cli-multi-gpu.sh` is a script file to make calling `gcloud ai custom-jobs create` easier by maintaining all the parameters in the script

- Make any required changes to your `cli-multi-gpu.sh`

- Run `sh cli-multi-gpu.sh`