💥 GiT: the first successful LLM-like general vision model that unifies various vision tasks with only a vanilla ViT

This repo is the official implementation of the paper GiT: Towards Generalist Vision Transformer through Universal Language Interface, as well as its follow-ups. We have made every effort to keep the codebase clean, concise, easy to read, and state-of-the-art, with only minimal dependencies.

GiT: Towards Generalist Vision Transformer through Universal Language Interface

Haiyang Wang*, Hao Tang*, Li Jiang$^\dagger$, Shaoshuai Shi, Muhammad Ferjad Naeem, Hongsheng Li, Bernt Schiele, Liwei Wang$^\dagger$

- Primary contact: Haiyang Wang (wanghaiyang6@stu.pku.edu.cn), Hao Tang (tanghao@stu.pku.edu.cn)

- 💫 What we want to do

- 🤔 Introduction

- 👀 Todo

- 🚀 Main Results

- 🛠️ Quick Start

- 👍 Acknowledgments

- 📘 Citation

We aim to unify the model architecture of vision and language through a plain transformer, reducing human biases such as modality-specific encoders and task-specific heads. A key advancement in deep learning is the shift from hand-crafted to autonomously learned features, inspiring us to reduce human-designed aspects in architecture. Moreover, benefiting from the flexibility of plain transformers, our framework can extend to more modalities like point clouds and graphs.

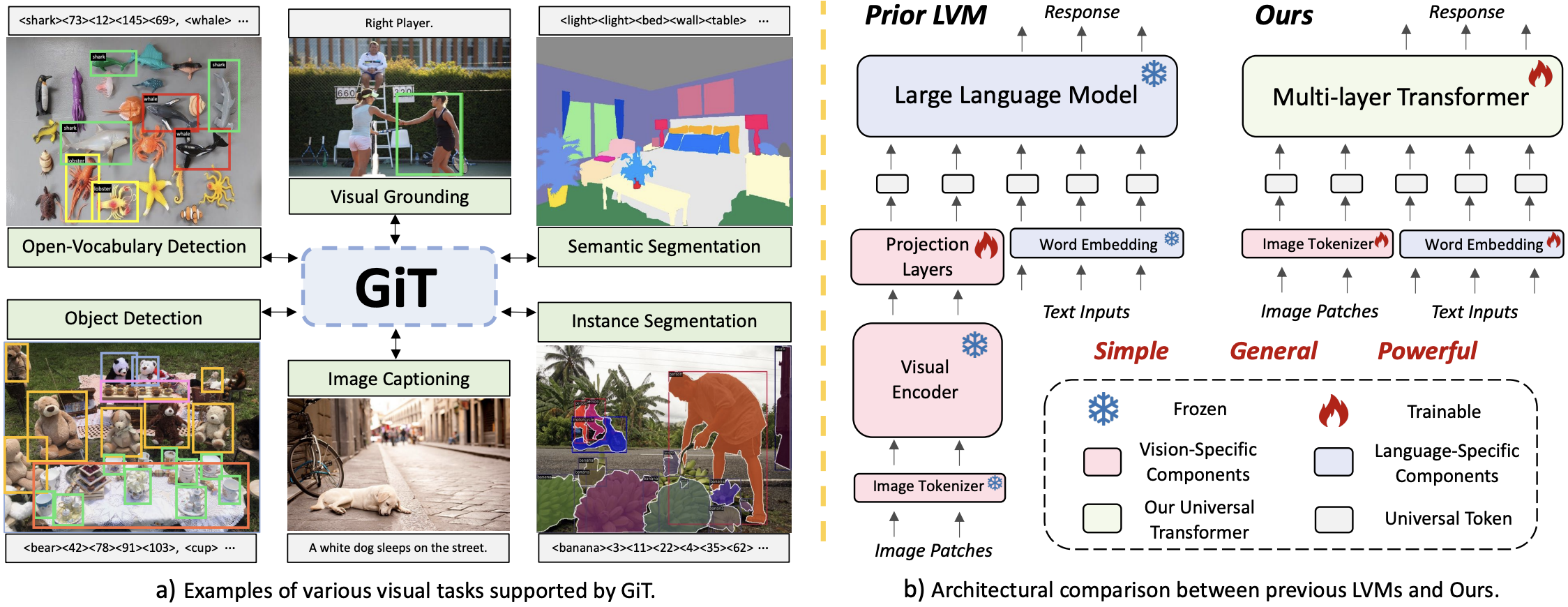

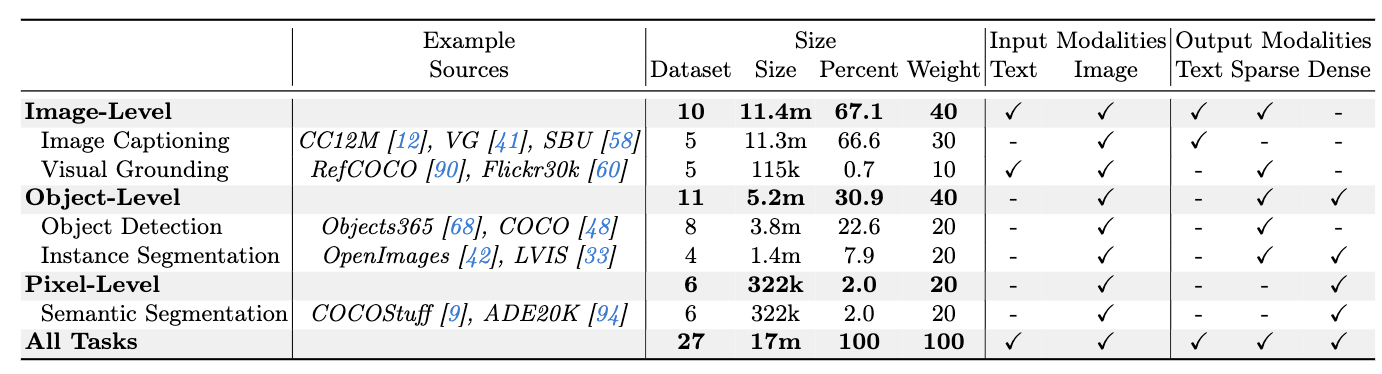

Building a universal computation model across all tasks stands as the cornerstone of artificial intelligence, reducing the need for task-specific designs. In this project, we introduce GiT (Generalist Vision Transformer). GiT has the following characteristics:

- 😮 Minimalist architecture design similar to LLM: GiT consists solely of a single transformer, without the inclusion of additional vision encoders and adapters.

- 🚀 Covering all types of visual understanding tasks: GiT addresses a spectrum of visual tasks, including object-level tasks (e.g., object detection), pixel-level tasks (e.g., semantic segmentation), and vision-language tasks (e.g., image captioning).

- 🤗 Achieving multi-task ability through a unified language interface: Similar to LLMs, GiT exhibits a task synergy effect in multi-task training. Tasks enhance one another, leading to significant improvements over isolated training.

- 🔥 Strong performance on zero-shot and few-shot benchmarks: GiT scales well with model size and data, generalizing across diverse scenarios after training on 27 datasets.

- [24-3-15] 🚀 Training and inference Code is released.

- [24-3-15] 👀 GiT is released on arXiv.

- Release the arXiv version.

- SOTA performance of generalist model on multi-tasking benchmark.

- SOTA performance of generalist model on zero- and few-shot benchmark.

- Clean up and release the inference code.

- Clean up and release the training code.

- Engineering Optimization (faster).

- Joint Training including Language (stronger).

- Code refactoring (the code is still a little messy, sorry for that).

| Model | Params | Metric | Performance | ckpt | log | config |
|---|---|---|---|---|---|---|
| GiT-B<sub>detection</sub> | 131M | mAP | 45.1 | ckpt | log | config |
| GiT-B<sub>insseg</sub> | 131M | mAP | 31.4 | ckpt | log | config |
| GiT-B<sub>semseg</sub> | 131M | mIoU | 47.7 | ckpt | log | config |
| GiT-B<sub>caption</sub> | 131M | BLEU-4 | 33.7 | ckpt | log | config |
| GiT-B<sub>grounding</sub> | 131M | Acc@0.5 | 83.3 | ckpt | log | config |

| Model | Params | Detection | Ins Seg | Sem Seg | Caption | Grounding | ckpt | log | config |
|---|---|---|---|---|---|---|---|---|---|
| GiT-B<sub>multi-task</sub> | 131M | 46.7 | 31.9 | 47.8 | 35.3 | 85.8 | ckpt | log | config |
| GiT-L<sub>multi-task</sub> | 387M | 51.3 | 35.1 | 50.6 | 35.7 | 88.4 | ckpt | log | config |
| GiT-H<sub>multi-task</sub> | 756M | 52.9 | 35.8 | 52.4 | 36.2 | 89.2 | ckpt | log | config |

| Model | Params | Detection | Ins Seg | Sem Seg | Caption | Grounding |
|---|---|---|---|---|---|---|
| GiT-B<sub>single-task</sub> | 131M | 45.1 | 31.4 | 47.7 | 33.7 | 83.3 |
| Improvement | | +1.6 | +0.5 | +0.1 | +1.6 | +2.5 |
| GiT-B<sub>multi-task</sub> | 131M | 46.7 | 31.9 | 47.8 | 35.3 | 85.8 |
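
The Improvement row is the per-task difference between the multi-task and single-task GiT-B scores. A minimal sketch that recomputes it from the numbers in the table above; the dictionary names are illustrative and not part of the codebase:

```python
# Scores copied from the two GiT-B rows of the table above.
single_task = {"Detection": 45.1, "Ins Seg": 31.4, "Sem Seg": 47.7,
               "Caption": 33.7, "Grounding": 83.3}
multi_task = {"Detection": 46.7, "Ins Seg": 31.9, "Sem Seg": 47.8,
              "Caption": 35.3, "Grounding": 85.8}

# Improvement = multi-task score minus single-task score, rounded to one
# decimal to hide floating-point noise.
improvement = {task: round(multi_task[task] - single_task[task], 1)
               for task in single_task}

for task, delta in improvement.items():
    print(f"{task}: +{delta}")
```

Every task improves under multi-task training, with the largest gain on grounding and the smallest on semantic segmentation.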

| Model | Params | Cityscapes (Det) | Cityscapes (Ins Seg) | Cityscapes (Sem Seg) | SUN RGB-D | nocaps | ckpt | log | config |
|---|---|---|---|---|---|---|---|---|---|
| GiT-B<sub>multi-task</sub> | 131M | 21.8 | 14.3 | 34.4 | 30.9 | 9.2 | ckpt | log | config |
| GiT-B<sub>universal</sub> | 131M | 29.1 | 17.9 | 56.2 | 37.5 | 10.6 | ckpt | log | config |
| GiT-L<sub>universal</sub> | 387M | 32.3 | 20.3 | 58.0 | 39.9 | 11.6 | ckpt | log | config |
| GiT-H<sub>universal</sub> | 756M | 34.1 | 18.7 | 61.8 | 42.5 | 12.6 | ckpt | log | config |

| Model | Params | DRIVE | LoveDA | Potsdam | WIDERFace | DeepFashion | config |
|---|---|---|---|---|---|---|---|
| GiT-B<sub>multi-task</sub> | 131M | 34.3 | 24.9 | 19.1 | 17.4 | 23.0 | config |
| GiT-B<sub>universal</sub> | 131M | 51.1 | 30.8 | 30.6 | 31.2 | 38.3 | config |
| GiT-L<sub>universal</sub> | 387M | 55.4 | 34.1 | 37.2 | 33.4 | 49.3 | config |
| GiT-H<sub>universal</sub> | 756M | 57.9 | 35.1 | 43.4 | 34.0 | 52.2 | config |

conda create -n GiT python=3.8

conda activate GiT

# We have only tested with 1.9.1; other versions may also work.

pip install torch==1.9.1+cu111 torchvision==0.10.1+cu111 torchaudio==0.9.1 -f https://download.pytorch.org/whl/torch_stable.html

pip install -U openmim

mim install "mmengine==0.8.3"

mim install "mmcv==2.0.1"

pip install "transformers==4.31.0"

git clone git@github.com:Haiyang-W/GiT.git

cd GiT

pip install -v -e .

pip install -r requirements/optional.txt

pip install -r requirements/runtime.txt

# if you face ChildFailedError, please update yapf

pip install yapf==0.40.1

- Please download pretrained text embedding from huggingface and organize the downloaded files as follows:

GiT

|──bert_embed.pt

|──bert_embed_large.pt

|──bert_embed_huge.pt

- (Optional) Install Java manually for image caption evaluation. Without Java, image captioning can still be trained normally, but caption evaluation will fail.

- (Optional) Install lvis api for LVIS dataset.

# current path is ./GiT

cd ..

pip install git+https://github.com/lvis-dataset/lvis-api.git
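
After installation, it can help to compare the installed package versions against the pins used in the commands above. The following is a minimal sketch, not part of the GiT codebase: the helper names `installed_version` and `find_mismatches` are hypothetical, and it assumes the version strings reported by the package metadata match the pins exactly (including the `+cu111` suffix). Since only PyTorch 1.9.1 has been tested, a mismatch is a hint rather than an error.

```python
from importlib import metadata

# Versions pinned by the installation commands above.
PINNED = {
    "torch": "1.9.1+cu111",
    "torchvision": "0.10.1+cu111",
    "mmengine": "0.8.3",
    "mmcv": "2.0.1",
    "transformers": "4.31.0",
}


def installed_version(package):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def find_mismatches(pinned, lookup=installed_version):
    """Map each package whose version differs from its pin to (expected, found)."""
    mismatches = {}
    for package, expected in pinned.items():
        found = lookup(package)
        if found != expected:
            mismatches[package] = (expected, found)
    return mismatches


if __name__ == "__main__":
    for package, (expected, found) in find_mismatches(PINNED).items():
        print(f"{package}: expected {expected}, found {found or 'not installed'}")
```

The `lookup` parameter exists only so the comparison can be exercised without the real packages installed.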

The multi-tasking benchmark contains COCO 2017 for object detection and instance segmentation, ADE20K for semantic segmentation, COCO Caption for image captioning, and the RefCOCO series for visual grounding.

GiT

|──data

| |──ade

| | |──ADEChallengeData2016

| | | |──annotations

| | | | |──training & validation

| | | |──images

| | | | |──training & validation

| | | |──objectInfo150.txt

| | | |──sceneCategories.txt

| |──coco

| | |──annotations

| | | |──*.json

| | |──train2017

| | | |──*.jpg

| | |──val2017

| | | |──*.jpg

| |──coco_2014

| | |──annotations

| | | |──*.json

| | | |──coco_karpathy_test.json

| | | |──coco_karpathy_train.json

| | | |──coco_karpathy_val_gt.json

| | | |──coco_karpathy_val.json

| | |──train2014

| | | |──*.jpg

| | |──val2014

| | | |──*.jpg

| | |──refcoco

| | | |──*.p
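
Before launching training, the layout above can be checked programmatically. This is a minimal sketch under stated assumptions: `missing_entries` is a hypothetical helper, not part of the repository; the relative paths are transcribed from the tree above (using the standard ADE20K folder name `annotations`); and wildcard entries such as `*.jpg` and `*.json` are checked only at the directory level.

```python
from pathlib import Path

# Paths relative to GiT/data, transcribed from the directory tree above.
EXPECTED = [
    "ade/ADEChallengeData2016/annotations/training",
    "ade/ADEChallengeData2016/annotations/validation",
    "ade/ADEChallengeData2016/images/training",
    "ade/ADEChallengeData2016/images/validation",
    "ade/ADEChallengeData2016/objectInfo150.txt",
    "ade/ADEChallengeData2016/sceneCategories.txt",
    "coco/annotations",
    "coco/train2017",
    "coco/val2017",
    "coco_2014/annotations/coco_karpathy_test.json",
    "coco_2014/annotations/coco_karpathy_train.json",
    "coco_2014/annotations/coco_karpathy_val_gt.json",
    "coco_2014/annotations/coco_karpathy_val.json",
    "coco_2014/train2014",
    "coco_2014/val2014",
    "coco_2014/refcoco",
]


def missing_entries(data_root, expected=EXPECTED):
    """Return the expected relative paths that do not exist under data_root."""
    root = Path(data_root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    # Assumes the script is run from the GiT repository root.
    for rel in missing_entries("data"):
        print(f"missing: data/{rel}")
```

An empty result only confirms that the folders and named files exist; it does not validate their contents.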

We use 27 datasets in universal training. For more details about dataset preparation, please refer to here.

🚨 We only list part of the commands (GiT-B) below. For more detailed commands, please refer to here.

Detection

bash tools/dist_train.sh configs/GiT/single_detection_base.py ${GPU_NUM} --work-dir ${work_dir}

GiT-B

bash tools/dist_train.sh configs/GiT/multi_fivetask_base.py ${GPU_NUM} --work-dir ${work_dir}

GiT-B

bash tools/dist_train.sh configs/GiT/universal_base.py ${GPU_NUM} --work-dir ${work_dir}

Detection

bash tools/dist_test.sh configs/GiT/single_detection_base.py ${ckpt_file} ${GPU_NUM} --work-dir ${work_dir}

GiT-B

bash tools/dist_test.sh configs/GiT/multi_fivetask_base.py ${ckpt_file} ${GPU_NUM} --work-dir ${work_dir}

Please download the universal pretrained weights from huggingface and organize the files as follows:

GiT

|──universal_base.pth

|──universal_large.pth

|──universal_huge.pth
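
A quick way to confirm that the downloaded files are in place is to look for them in the repository root. The sketch below is illustrative only: `missing_files` is a hypothetical helper, and pairing each text embedding with a universal checkpoint by model size is an assumption inferred from the file names in this README, not something the codebase is known to enforce.

```python
from pathlib import Path

# File names come from the two directory listings in this README.
# ASSUMPTION: embeddings and checkpoints pair up by model size as below.
FILES_BY_SIZE = {
    "base": ("bert_embed.pt", "universal_base.pth"),
    "large": ("bert_embed_large.pt", "universal_large.pth"),
    "huge": ("bert_embed_huge.pt", "universal_huge.pth"),
}


def missing_files(repo_root, size):
    """Return the files for the given model size that are absent from repo_root."""
    root = Path(repo_root)
    return [name for name in FILES_BY_SIZE[size] if not (root / name).is_file()]


if __name__ == "__main__":
    # Assumes the script is run from the GiT repository root.
    for size in FILES_BY_SIZE:
        absent = missing_files(".", size)
        print(f"{size}: {'ok' if not absent else 'missing ' + ', '.join(absent)}")
```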

Zero-shot

bash tools/dist_test.sh configs/GiT/zero-shot/zero_shot_cityscapes_det_base.py ${ckpt_file} ${GPU_NUM} --work-dir ${work_dir}

Few-shot

bash tools/dist_train.sh configs/GiT/few-shot/few_shot_drive_det_base.py ${GPU_NUM} --work-dir ${work_dir}

If you want to use GiT on your own dataset, please refer here for more details.

If your GPU memory is insufficient, you can reduce the resolution like here, where we lower the detection resolution to 672. It requires ~20 hours of training and reaches ~41.5 mAP.
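
The launch commands in this section follow two fixed argument orders: training takes a config, a GPU count, and a work directory, while testing additionally takes a checkpoint file right after the config. A minimal sketch of assembling them from Python, for example when scripting several runs; the helper names `train_command` and `eval_command` are hypothetical, as are the GPU count and work directory in the usage lines:

```python
import shlex


def train_command(config, gpu_num, work_dir):
    """Argument list for tools/dist_train.sh, in the order used in this README."""
    return ["bash", "tools/dist_train.sh", config, str(gpu_num),
            "--work-dir", work_dir]


def eval_command(config, ckpt_file, gpu_num, work_dir):
    """Argument list for tools/dist_test.sh; the checkpoint follows the config."""
    return ["bash", "tools/dist_test.sh", config, ckpt_file, str(gpu_num),
            "--work-dir", work_dir]


if __name__ == "__main__":
    # Placeholder values: 8 GPUs and work_dirs/det_base are illustrative only.
    cmd = train_command("configs/GiT/single_detection_base.py", 8, "work_dirs/det_base")
    print(shlex.join(cmd))
    # To launch for real, pass the list to subprocess.run(cmd, check=True)
    # from the GiT repository root.
```

Building the command as a list rather than a single string avoids quoting problems when paths contain spaces.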

- MMDetection: the codebase we built upon. Thanks for providing such a convenient framework.

- BLIP: we extract text embeddings from BLIP pretrained models and use the web captions filtered by BLIP. Thanks for their efforts in open-sourcing the models and cleaning the dataset.

Please consider citing our work as follows if it is helpful.

@article{wang2024git,

title={GiT: Towards Generalist Vision Transformer through Universal Language Interface},

author={Haiyang Wang and Hao Tang and Li Jiang and Shaoshuai Shi and Muhammad Ferjad Naeem and Hongsheng Li and Bernt Schiele and Liwei Wang},

journal={arXiv preprint arXiv:2403.09394},

year={2024}

}