Object detection is one of the most fundamental and challenging problems in computer vision. It deals with detecting instances of objects of a certain class in digital images and forms the basis of many other computer vision tasks, such as instance segmentation, image captioning, and object tracking.

Most early object detection algorithms were built on handcrafted features. Because effective image representations were lacking at the time, researchers had to design sophisticated feature representations and a variety of speed-up techniques to make the most of limited computing resources.

After 2010, object detection reached a plateau as the performance of handcrafted features saturated. Only small gains were obtained by building ensemble systems and minor variants of successful models.

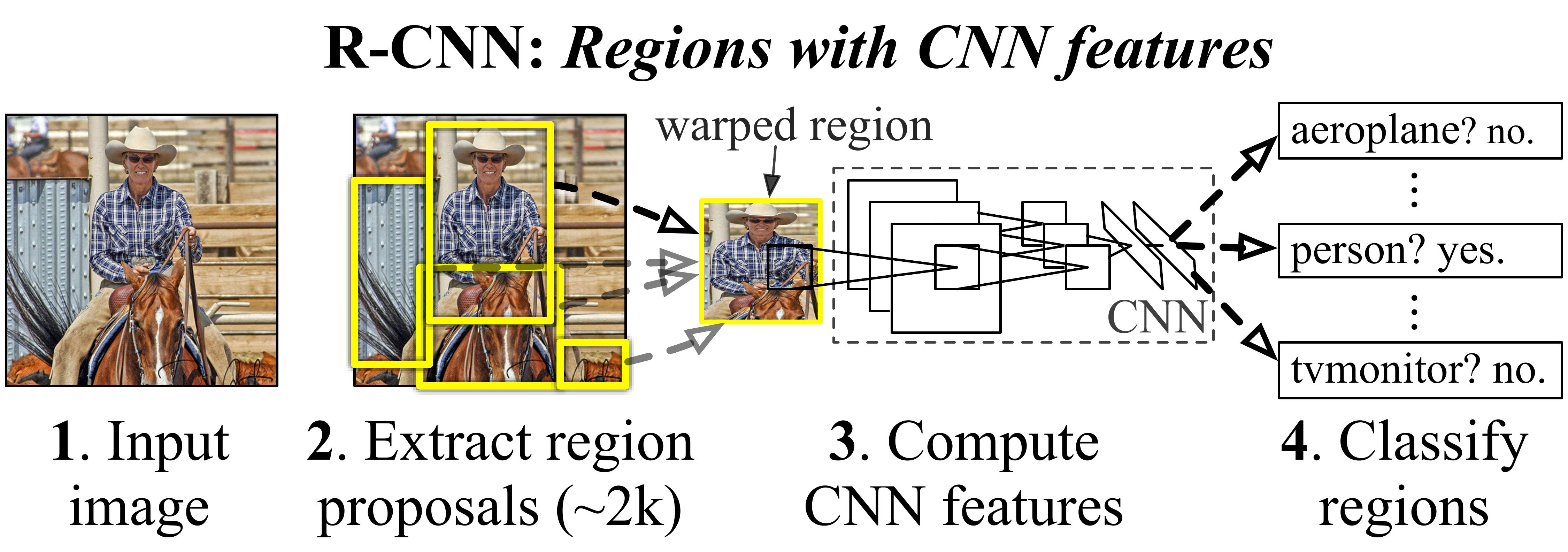

In 2012, the world saw the rebirth of convolutional neural networks. A deep convolutional network can learn robust, high-level feature representations of an image. In 2014, R. Girshick et al. proposed Regions with CNN features (R-CNN) for object detection, and since then object detection has evolved at an unprecedented speed.

Region-based ConvNet (R-CNN) is a natural combination of a heuristic region proposal method and a ConvNet feature extractor. Around 2000 bounding box proposals are generated from an image using selective search. These proposed regions are cropped and warped to a fixed size of 227 x 227 pixels. AlexNet then extracts a feature vector from each warped image, and an SVM is trained to classify the object in each warped image using its features.
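The crop-and-warp step can be sketched in NumPy as follows. This is a minimal illustration, not this repository's code: it assumes proposals arrive as integer `(x1, y1, x2, y2)` pixel boxes with exclusive right and bottom edges, uses nearest-neighbour resampling, and the helper name `warp_region` is hypothetical. The original R-CNN paper also pads each box with some surrounding context before warping, which this sketch omits.

```python
from typing import Tuple

import numpy as np

WARP_SIZE = 227  # fixed network input size used by R-CNN with AlexNet


def warp_region(image: np.ndarray, box: Tuple[int, int, int, int],
                size: int = WARP_SIZE) -> np.ndarray:
    """Crop box = (x1, y1, x2, y2) from an H x W x C image and warp it to size x size.

    Hypothetical helper: nearest-neighbour sampling, aspect ratio is not preserved.
    """
    x1, y1, x2, y2 = box
    crop = image[y1:y2, x1:x2]
    h, w = crop.shape[:2]
    if h == 0 or w == 0:
        raise ValueError(f"empty box: {box}")
    # For every output row/column, pick the corresponding source row/column.
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return crop[rows[:, None], cols[None, :]]


# Usage: warp each proposal of a dummy image into one fixed-size batch.
image = np.random.randint(0, 256, size=(480, 640, 3), dtype=np.uint8)
proposals = [(10, 20, 110, 70), (300, 100, 620, 460)]
batch = np.stack([warp_region(image, box) for box in proposals])
print(batch.shape)  # (2, 227, 227, 3)
```

Because the warp ignores aspect ratio, every proposal yields an input of the same shape, which is what lets a single network with a fixed input size process all of the roughly 2000 proposals.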

R-CNNs are composed of three main parts.

Selective search is performed on the input image to select multiple high-quality proposed regions. These proposed regions are generally selected at multiple scales and have different shapes and sizes. Each proposed region is labelled with a category and a ground-truth bounding box.
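One common way to do this labelling, used in the original R-CNN for fine-tuning, is to match each proposal to the ground-truth box it overlaps most, measured by intersection over union (IoU). The sketch below assumes `(x1, y1, x2, y2)` boxes, reserves class 0 for background, and uses a 0.5 IoU threshold; the helper names `iou` and `label_proposals` are hypothetical and not taken from this project.

```python
import numpy as np

# Hypothetical helpers; the 0.5 threshold and class 0 = background are assumptions.


def iou(box: np.ndarray, gt_boxes: np.ndarray) -> np.ndarray:
    """IoU between one (x1, y1, x2, y2) box and every row of gt_boxes."""
    ix1 = np.maximum(box[0], gt_boxes[:, 0])
    iy1 = np.maximum(box[1], gt_boxes[:, 1])
    ix2 = np.minimum(box[2], gt_boxes[:, 2])
    iy2 = np.minimum(box[3], gt_boxes[:, 3])
    inter = np.clip(ix2 - ix1, 0, None) * np.clip(iy2 - iy1, 0, None)
    box_area = (box[2] - box[0]) * (box[3] - box[1])
    gt_areas = (gt_boxes[:, 2] - gt_boxes[:, 0]) * (gt_boxes[:, 3] - gt_boxes[:, 1])
    return inter / (box_area + gt_areas - inter)


def label_proposals(proposals, gt_boxes, gt_classes, threshold=0.5):
    """Return (labels, matched): class per proposal and index of its ground-truth box.

    Proposals whose best IoU is below `threshold` get class 0 (background) and index -1.
    """
    labels, matched = [], []
    for box in proposals:
        overlaps = iou(box, gt_boxes)
        best = int(np.argmax(overlaps))
        if overlaps[best] >= threshold:
            labels.append(int(gt_classes[best]))
            matched.append(best)
        else:
            labels.append(0)
            matched.append(-1)
    return np.array(labels), np.array(matched)


# Usage with made-up boxes; classes 1 and 2 are arbitrary object categories.
gt_boxes = np.array([[50, 50, 150, 150], [200, 200, 300, 260]], dtype=float)
gt_classes = np.array([1, 2])
proposals = np.array([[60, 60, 150, 150], [0, 0, 40, 40], [210, 200, 300, 260]], dtype=float)
labels, matched = label_proposals(proposals, gt_boxes, gt_classes)
print(labels.tolist(), matched.tolist())  # [1, 0, 2] [0, -1, 1]
```

The matched index gives each positive proposal its ground-truth bounding box (`gt_boxes[matched[i]]`), so the category and the box label come from the same match.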

A pretrained CNN is chosen and truncated before its output layer. Each proposed region is transformed into the input dimensions required by the network, and a forward pass outputs the features extracted from that region.
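The idea of truncating a network before its output layer can be illustrated without a deep-learning framework. In the sketch below, a small fully connected network with random weights is a hypothetical stand-in for the pretrained CNN (R-CNN itself uses AlexNet's convolutional and fully connected layers); the toy input size of 32 x 32 x 3, the layer widths, and all function names are assumptions chosen to keep the example small.

```python
import numpy as np


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def make_toy_network(sizes, rng):
    """Random (weight, bias) pairs: a hypothetical stand-in for a pretrained network."""
    return [(rng.standard_normal((m, n)) * 0.01, np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def extract_features(network, regions: np.ndarray) -> np.ndarray:
    """Forward pass through every layer except the output layer.

    regions: (N, H, W, C) batch of warped proposals; returns (N, D) features.
    """
    x = regions.reshape(len(regions), -1).astype(np.float64)
    truncated = network[:-1]  # drop the classification (output) layer
    for w, b in truncated:
        x = relu(x @ w + b)
    return x


rng = np.random.default_rng(0)
# Toy sizes: 32x32x3 inputs, two hidden layers, a 1000-way output layer that is never used.
network = make_toy_network([32 * 32 * 3, 256, 128, 1000], rng)
regions = rng.random((5, 32, 32, 3))
features = extract_features(network, regions)
print(features.shape)  # (5, 128)
```

Dropping only the last layer removes the class-score output, so each region is represented by the activations of the penultimate layer, which are what the classifiers in the next step consume.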

The features and labelled category of each proposed region form one training example, and these examples are used to train multiple support vector machines for object classification. In our case, each support vector machine determines whether an example belongs to one particular category or not.
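The per-class SVMs can be sketched in NumPy as follows. This is a simplified stand-in rather than the original training procedure: each binary linear SVM is fit by plain full-batch sub-gradient descent on the regularised hinge loss instead of a dedicated solver, the hard negative mining used in the original R-CNN is omitted, class 0 is assumed to be background, and the hyperparameters `lam`, `lr` and `epochs` are illustrative values, not this project's settings.

```python
import numpy as np


def train_linear_svm(x, y, lam=1e-3, lr=0.1, epochs=500):
    """Binary linear SVM fit by full-batch sub-gradient descent (illustrative settings).

    Minimises lam / 2 * ||w||^2 + mean(max(0, 1 - y * (x @ w + b))), y in {-1, +1}.
    """
    w = np.zeros(x.shape[1])
    b = 0.0
    for _ in range(epochs):
        margins = y * (x @ w + b)
        active = margins < 1  # examples inside the margin or misclassified
        ya, xa = y[active], x[active]
        grad_w = lam * w - (ya[:, None] * xa).sum(axis=0) / len(x)
        grad_b = -ya.sum() / len(x)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def train_one_vs_rest(features, labels, num_classes):
    """One SVM per object class 1..num_classes; class 0 (background) is always negative."""
    return [train_linear_svm(features, np.where(labels == c, 1.0, -1.0))
            for c in range(1, num_classes + 1)]


def predict(svms, features):
    """Boolean matrix whose entry [i, k] says whether SVM k accepts example i."""
    scores = np.stack([features @ w + b for w, b in svms], axis=1)
    return scores > 0


# Toy 2-D "features": three examples each of class 1, class 2 and background.
features = np.array([[3, 0], [3.5, 0.5], [2.5, -0.5],
                     [0, 3], [0.5, 3.5], [-0.5, 2.5],
                     [-3, -3], [-3.5, -2.5], [-2.5, -3.5]])
labels = np.array([1, 1, 1, 2, 2, 2, 0, 0, 0])
svms = train_one_vs_rest(features, labels, num_classes=2)
print(predict(svms, features).astype(int))
```

Each SVM answers a yes/no question for its own category, matching the description above; on this separable toy data, each object example should be accepted only by the SVM of its own class, and background examples by none.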

```bash
git clone https://github.com/Computer-Vision-IIITH-2021/project-revision.git
conda create --name envname python=3.8
conda activate envname
```

Install all the dependencies in the virtual environment before running the program. We used Python 3.8 during development, so make sure you have the same version before running the code.

```bash
cd src
python3 test.py
```

Do note that the training process may take several hours. The team members used the Ada High Performance Cluster of IIIT Hyderabad for training the model.

- Dolton Fernandes
- George Tom
- Naren Akash R J

All the team members are undergraduate research students at the Center for Visual Information Technology, IIIT Hyderabad, India.

The software may be used only for personal, research, or non-commercial purposes. To cite the original paper:

```bibtex
@INPROCEEDINGS{7410526,
  author={Girshick, Ross},
  booktitle={2015 IEEE International Conference on Computer Vision (ICCV)},
  title={Fast R-CNN},
  year={2015},
  volume={},
  number={},
  pages={1440-1448},
  doi={10.1109/ICCV.2015.169}}
```