This repo contains code and data for our EMNLP 2023 paper about assessing topic model output with Large Language Models.

@inproceedings{stammbach-etal-2023-revisiting,
    title = "Revisiting Automated Topic Model Evaluation with Large Language Models",
    author = "Stammbach, Dominik and
      Zouhar, Vil{\'e}m and
      Hoyle, Alexander and
      Sachan, Mrinmaya and
      Ash, Elliott",
    booktitle = "Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing",
    month = dec,
    year = "2023",
    address = "Singapore",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2023.emnlp-main.581",
    doi = "10.18653/v1/2023.emnlp-main.581",
    pages = "9348--9357"
}

    pip install --upgrade openai pandas

Download the topic words and human annotations from the paper "Is Automated Topic Model Evaluation Broken?" from the authors' GitHub repository.

Following Hoyle et al. (2021), we randomly sample intruder words that are not among the top 50 topic words of each topic.
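As an illustration of the sampling constraint, here is a minimal sketch and not the repository's implementation. It assumes each topic is a ranked list of words and that intruders are drawn from a shared vocabulary; `sample_intruder`, `build_intruder_item`, and the `n_shown` parameter are hypothetical names introduced for this example.

```python
import random


def sample_intruder(topic_words, vocabulary, top_n=50, rng=None):
    """Pick one vocabulary word that is not among the topic's top_n words."""
    rng = rng or random.Random()
    excluded = set(topic_words[:top_n])
    candidates = [w for w in vocabulary if w not in excluded]
    if not candidates:
        raise ValueError("no candidate intruder words left")
    return rng.choice(candidates)


def build_intruder_item(topic_words, vocabulary, n_shown=5, rng=None):
    """Mix the first n_shown topic words with one intruder and shuffle them."""
    rng = rng or random.Random()
    intruder = sample_intruder(topic_words, vocabulary, rng=rng)
    shown = topic_words[:n_shown] + [intruder]
    rng.shuffle(shown)
    return {"words": shown, "intruder": intruder}
```

Passing a seeded `random.Random` makes the sampled dataset reproducible across runs.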

    python src-human-correlations/generate_intruder_words_dataset.py

We can then call an LLM to automatically annotate the intruder words for each topic.

    python src-human-correlations/chatGPT_evaluate_intruders.py --API_KEY a_valid_openAI_api_key

For the ratings task, simply call the script that rates topic word sets (there is no need to generate a dataset first).

    python src-human-correlations/chatGPT_evaluate_topic_ratings.py --API_KEY a_valid_openAI_api_key

(All output is saved to a JSON file, so if the OpenAI API breaks, the script can be restarted and will skip all datapoints that are already annotated.)
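The save-and-resume behaviour can be sketched as follows. This is an assumption-laden illustration, not the scripts' actual code: it assumes one JSON object per line in the output file and an `id` field on every datapoint, and `annotate_fn` is a hypothetical stand-in for the LLM call.

```python
import json
import os


def load_done_ids(path):
    """Return the ids of datapoints already present in the output file."""
    done = set()
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            for line in f:
                line = line.strip()
                if line:
                    done.add(json.loads(line)["id"])
    return done


def annotate_all(datapoints, annotate_fn, out_path):
    """Annotate datapoints, appending each result right away.

    If annotate_fn raises (for example on an API error), everything written
    so far stays on disk and a later call skips those datapoints.
    """
    done = load_done_ids(out_path)
    with open(out_path, "a", encoding="utf-8") as f:
        for dp in datapoints:
            if dp["id"] in done:
                continue  # already annotated in an earlier run
            result = annotate_fn(dp)  # hypothetical LLM call
            f.write(json.dumps({"id": dp["id"], "annotation": result}) + "\n")
            f.flush()
```

Writing and flushing after every datapoint means a crash costs at most the one request that was in flight.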

We evaluate using a bootstrap approach in which we sample human annotations and LLM annotations for each datapoint. We then average the sampled annotations and compute Spearman's rho for each bootstrapped sample. We report the mean Spearman's rho over all 1000 bootstrapped samples.
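The procedure can be sketched in plain Python as below. This is a sketch under stated assumptions, not the code in `human_correlations_bootstrap.py`: it assumes annotations are resampled with replacement within each datapoint, and the helper names (`ranks`, `spearman`, `bootstrap_spearman`) are introduced only for this example.

```python
import random
from statistics import mean


def ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman's rho, computed as the Pearson correlation of the ranks."""
    rx, ry = ranks(x), ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    if sx == 0 or sy == 0:
        return float("nan")  # undefined when one side is constant
    return cov / (sx * sy)


def bootstrap_spearman(human, llm, n_boot=1000, seed=0):
    """human, llm: one list of annotations per datapoint, in the same order."""
    rng = random.Random(seed)
    rhos = []
    for _ in range(n_boot):
        # Resample annotations per datapoint, then average them.
        h = [mean(rng.choices(a, k=len(a))) for a in human]
        m = [mean(rng.choices(a, k=len(a))) for a in llm]
        rhos.append(spearman(h, m))
    return mean(rhos)
```

Here `human[i]` and `llm[i]` hold all annotations for datapoint `i`, and the returned value is the mean rho over the `n_boot` resamples.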

    python src-human-correlations/human_correlations_bootstrap.py --filename coherence-outputs-section-2/ratings_outfile_with_dataset_description.jsonl --task ratings

Download the fitted topic models and metadata for the two datasets (bills and wikitext) here and unzip them.

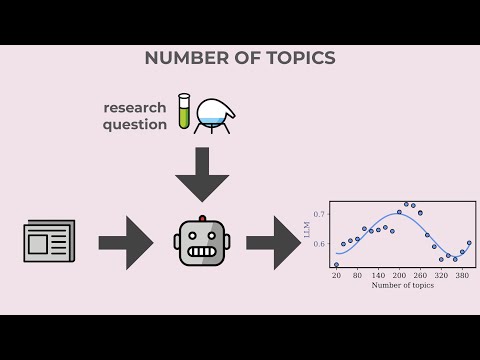

To run LLM ratings of topic word sets on a dataset (bills or wikitext) with broad or specific ground-truth example topics, simply run:

    python src-number-of-topics/chatGPT_ratings_assignment.py --API_KEY a_valid_openAI_api_key --dataset wikitext --label_categories broad

We also assign a document label to the top documents belonging to each topic, following Doogan and Buntine (2021). We then average purity per document collection; the number of topics with the highest average purity is the cluster count this procedure prefers.
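The selection rule can be illustrated with a small sketch. It assumes purity is the share of a topic's top documents that carry the topic's most frequent assigned label, which is a common definition but is not spelled out here; `topic_purity` and `preferred_number_of_topics` are hypothetical names.

```python
from collections import Counter
from statistics import mean


def topic_purity(labels):
    """Share of a topic's top documents that carry its most frequent label."""
    most_common_count = Counter(labels).most_common(1)[0][1]
    return most_common_count / len(labels)


def preferred_number_of_topics(models):
    """models maps a number of topics to the label lists of its topics.

    Returns the number of topics with the highest average purity,
    together with the average purity of every model.
    """
    avg_purity = {
        k: mean(topic_purity(labels) for labels in topics)
        for k, topics in models.items()
    }
    best_k = max(avg_purity, key=avg_purity.get)
    return best_k, avg_purity
```

Each inner list holds the labels the LLM assigned to one topic's top documents, so a model whose topics are dominated by a single label scores higher.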

To run LLM label assignments on a dataset (bills or wikitext) with broad or specific ground-truth example topics, simply run:

    python src-number-of-topics/chatGPT_document_label_assignment.py --API_KEY a_valid_openAI_api_key --dataset wikitext --label_categories broad

    python src-number-of-topics/LLM_scores_and_ARI.py --label_categories broad --method label_assignment --dataset bills --filename number-of-topics-section-4/document_label_assignment_wikitext_broad.jsonl

Please contact Dominik Stammbach regarding any questions.