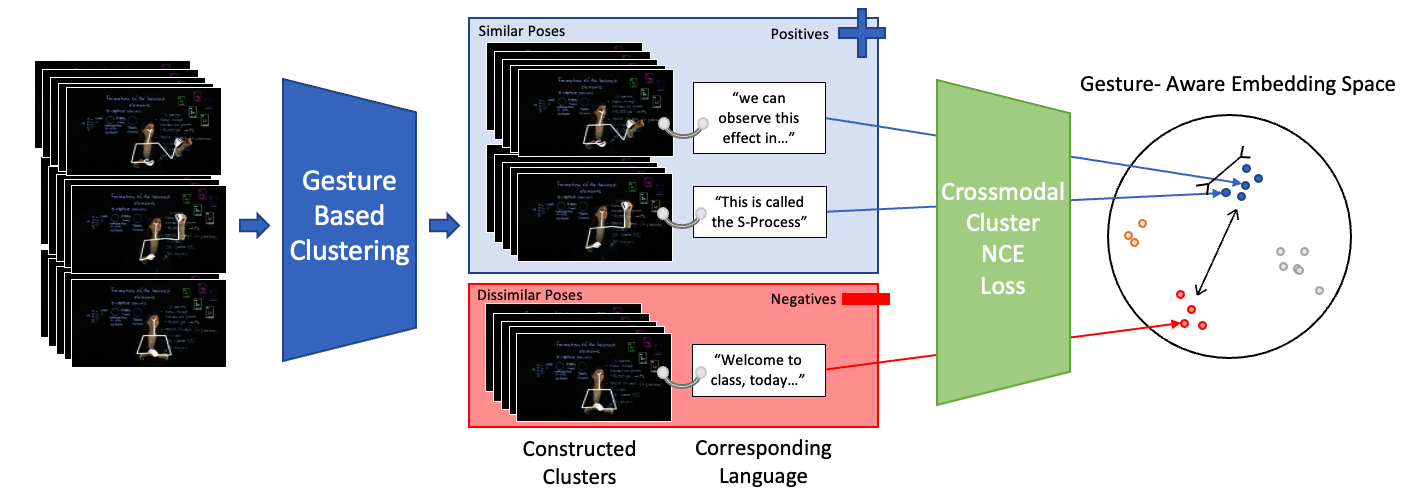

This is the official repository for the paper "Crossmodal clustered contrastive learning: Grounding of spoken language to gesture".

Dong Won Lee, Chaitanya Ahuja, Louis-Philippe Morency - GENEA @ ICMI 2021

Links: Paper, Presentation Video, Dataset Website [1]

This repo has information on the training code and pre-trained models.

For the dataset, we refer you to:

- Dataset Website for downloading the dataset

- Dataset Repo for scripts to download the audio files and other dataloader arguments.

For the purposes of this repository, we assume that the dataset is downloaded to ../data/

This repo is divided into the following sections:

Clone only the master branch,

    git clone https://github.com/dondongwon/CC_NCE_GENEA.git

- pycasper

      mkdir ../pycasper
      git clone https://github.com/chahuja/pycasper ../pycasper
      ln -s ../pycasper/pycasper .

- Create an anaconda or a virtual environment and activate it

      pip install -r requirements.txt

To train a model from scratch, run the following script after changing directory to src,

    python train.py \
    -path2data ../data \ ## path to data files
    -seed 11212 \ ## manual seed
    -cpk 1GlobalNoDTW \ ## checkpoint name which is a part of experiment file PREFIX
    -exp 1 \ ## creates a unique experiment number
    -speaker '["lec_cosmic"]' \ ## Speaker
    -save_dir save/aisle \ ## save directory
    -num_cluster 8 \ ## number of clusters in the Conditional Mix-GAN
    -model JointLateClusterSoftContrastiveGlobalDTW_G \ ## Name of the model
    -fs_new '15' \ ## frame rate of each modality
    -modalities '["pose/normalize", "audio/log_mel_400", "text/tokens"]' \ ## all modalities as a list. output modality first, then input modalities
    -gan 1 \ ## Flag to train with a discriminator on the output
    -loss L1Loss \ ## Choice of loss function. Any loss function torch.nn.* will work here
    -window_hop 5 \ ## Hop size of the window for the dataloader
    -render 0 \ ## flag to render. Default 0
    -batch_size 32 \ ## batch size
    -num_epochs 20 \ ## total number of epochs
    -stop_thresh 3 \ ## number of consecutive validation loss increases before stopping
    -overfit 0 \ ## flag to overfit (for debugging)
    -early_stopping 1 \ ## flag to perform early stopping
    -dev_key dev_spatialNorm \ ## metric used to choose the best model
    -feats '["pose", "velocity", "speed"]' \ ## Features used to make the clusters
    -note 1GlobalNoDTW \ ## Notes about the model
    -dg_iter_ratio 1 \ ## Discriminator Generator Iteration Ratio
    -repeat_text 0 \ ## tokens are not repeated to match the audio frame rate
    -num_iters 100 \ ## breaks the loop after this number of iterations
    -min_epochs 10 \ ## early stopping can occur only after this many epochs
    -optim AdamW \ ## AdamW optimizer
    -lr 0.0001 \ ## Learning Rate
    -DTW 0 ## Use dynamic time warping to construct clusters in the output space of poses
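The command above is annotated with inline `##` comments, so it may not paste cleanly into a shell. As a minimal sketch, the same flags can be kept in a Python dict and turned into an argument list. The names `TRAIN_ARGS` and `build_command` are hypothetical helpers introduced here for illustration and are not part of this repository; the flag names and values are copied from the command above, and list-valued flags are assumed to be passed as JSON strings, matching the `'["..."]'` form shown there.

```python
import json
import shlex

# Flag values copied from the annotated training command.
# TRAIN_ARGS is an illustrative name, not something the repo defines.
TRAIN_ARGS = {
    "path2data": "../data",
    "seed": 11212,
    "cpk": "1GlobalNoDTW",
    "exp": 1,
    "speaker": ["lec_cosmic"],
    "save_dir": "save/aisle",
    "num_cluster": 8,
    "model": "JointLateClusterSoftContrastiveGlobalDTW_G",
    "fs_new": "15",
    "modalities": ["pose/normalize", "audio/log_mel_400", "text/tokens"],
    "gan": 1,
    "loss": "L1Loss",
    "window_hop": 5,
    "render": 0,
    "batch_size": 32,
    "num_epochs": 20,
    "stop_thresh": 3,
    "overfit": 0,
    "early_stopping": 1,
    "dev_key": "dev_spatialNorm",
    "feats": ["pose", "velocity", "speed"],
    "note": "1GlobalNoDTW",
    "dg_iter_ratio": 1,
    "repeat_text": 0,
    "num_iters": 100,
    "min_epochs": 10,
    "optim": "AdamW",
    "lr": "0.0001",
    "DTW": 0,
}


def build_command(script, args):
    """Turn a dict of flag values into an argv list for `python <script>`."""
    argv = ["python", script]
    for flag, value in args.items():
        # Lists are serialized as JSON strings (assumption: this matches the
        # quoted '["..."]' arguments used in the README command).
        text = json.dumps(value) if isinstance(value, list) else str(value)
        argv += [f"-{flag}", text]
    return argv


if __name__ == "__main__":
    # Print a shell-quoted, comment-free command line (shlex.join needs Python 3.8+).
    print(shlex.join(build_command("train.py", TRAIN_ARGS)))
```

Run from the `src` directory, the printed line can be pasted into a shell, or the list returned by `build_command` can be passed to `subprocess.run(..., check=True)` directly.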

Example scripts for training models in the paper can be found as follows,

    python sample.py \
    -load <path2weights> \ ## path to PREFIX_weights.p file
    -path2data ../data ## path to data

    python render.py \
    -render 20 \ ## number of intervals to render
    -load <path2weights> \ ## path to PREFIX_weights.p file
    -render_text 1 \ ## if 1, render text on the video as well.
    -path2data ../data ## path to data

[1] - Ahuja, Chaitanya, et al. "Style Transfer for Co-Speech Gesture Animation: A Multi-Speaker Conditional Mixture Approach." ECCV 2020.

[2] - Kucherenko, Taras, et al. "Gesticulator: A framework for semantically-aware speech-driven gesture generation." ICMI 2020.

[3] - Ginosar, Shiry, et al. "Learning individual styles of conversational gesture." CVPR 2019.

If you enjoyed this work, I would recommend the following projects which study different axes of nonverbal grounding,