Code for the paper "PortraitNet: Real-time portrait segmentation network for mobile device" (CAD&Graphics 2019).

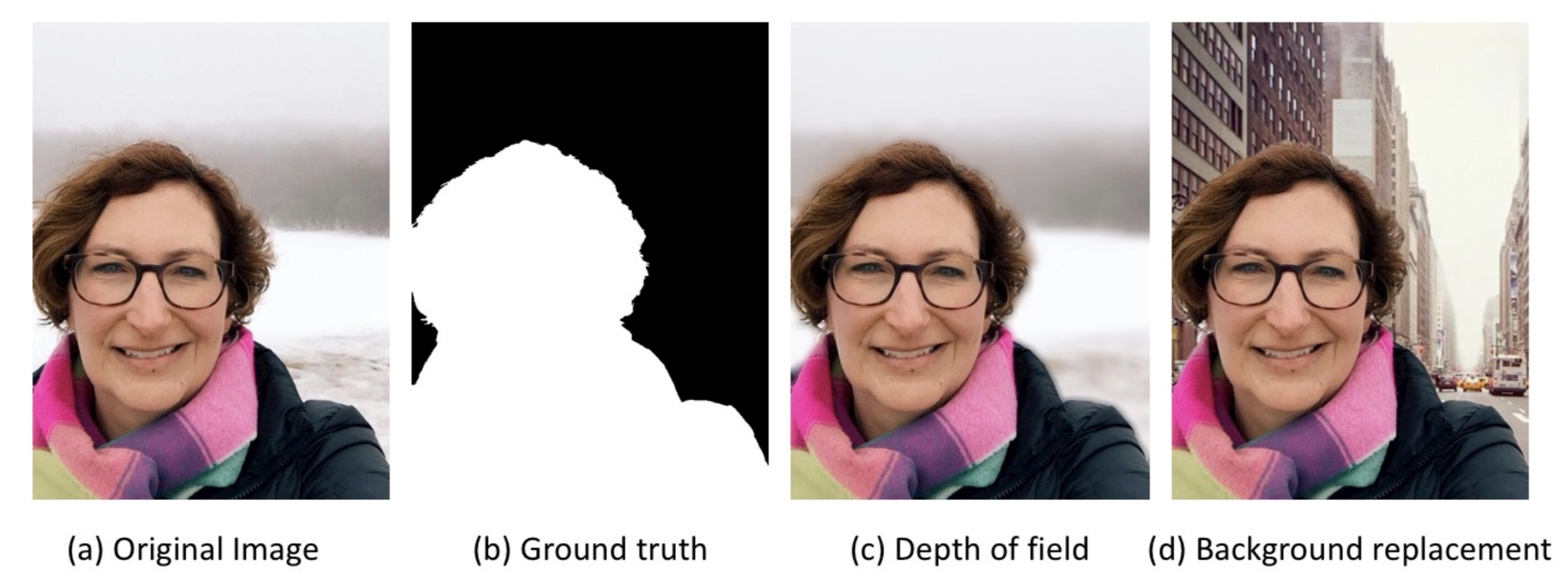

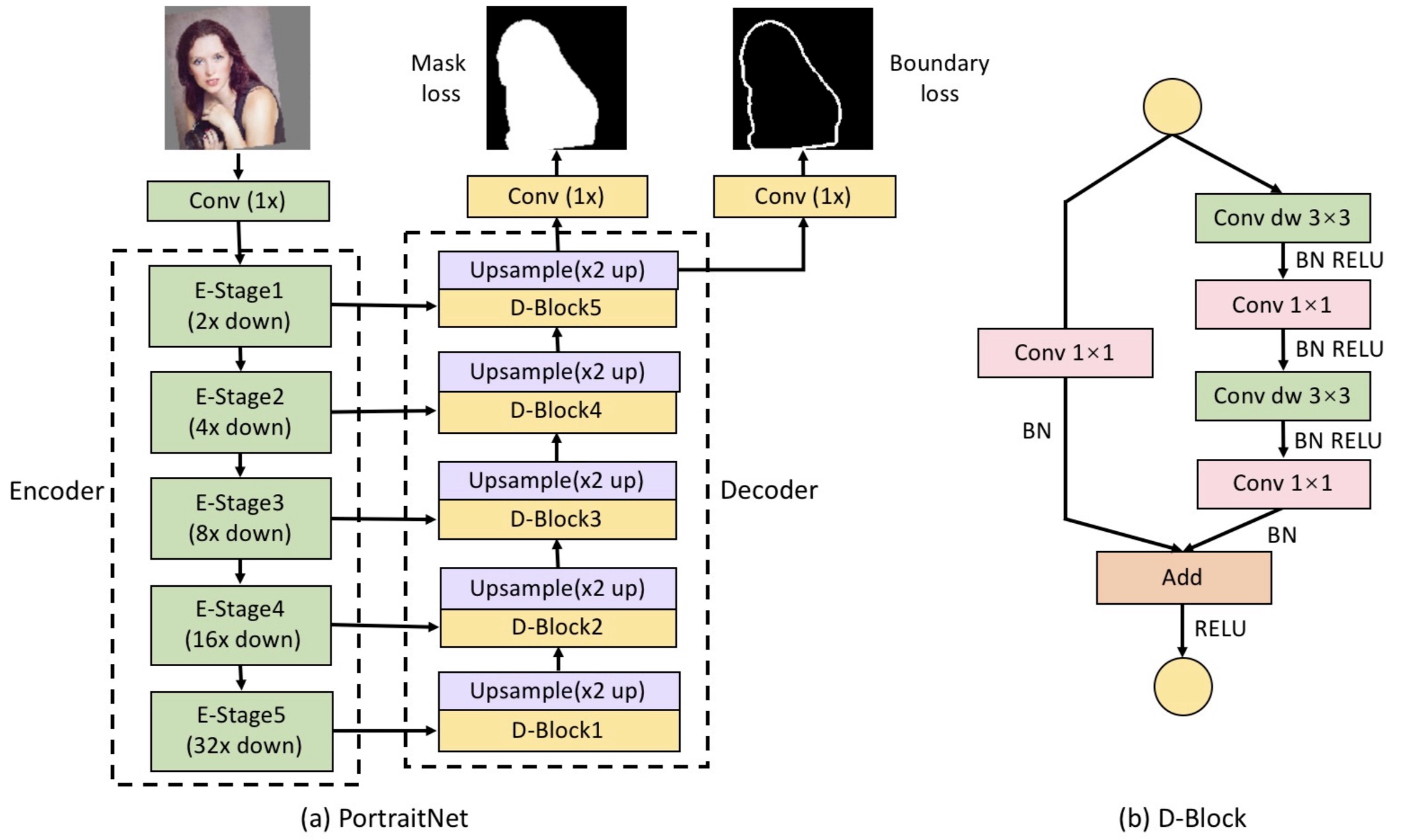

We propose a real-time portrait segmentation model, called PortraitNet, that runs effectively and efficiently on mobile devices. PortraitNet is based on a lightweight U-shaped architecture and is trained with two auxiliary losses. These losses are used only during training, so they add no cost to portrait inference at test time.

- Python 2.7

- PyTorch 0.3.0.post4

- Jupyter Notebook

- pip install easydict matplotlib tqdm opencv-python scipy pyyaml numpy
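As a quick sanity check of the environment, the sketch below tries to import each package from the list above and reports the ones that are missing. It is not part of the repository: the helper name `find_missing` and the `PIP_TO_MODULE` table are hypothetical, introduced here for illustration. The only non-obvious mappings are that opencv-python is imported as `cv2`, pyyaml as `yaml`, and PyTorch as `torch`.

```python
import importlib

# Hypothetical helper table (not from the repository): pip package name
# mapped to the module name it is imported under.
PIP_TO_MODULE = {
    "easydict": "easydict",
    "matplotlib": "matplotlib",
    "tqdm": "tqdm",
    "opencv-python": "cv2",
    "scipy": "scipy",
    "pyyaml": "yaml",
    "numpy": "numpy",
    "PyTorch": "torch",
}


def find_missing(pip_to_module):
    """Return the sorted pip names whose module cannot be imported."""
    missing = []
    for pip_name in sorted(pip_to_module):
        try:
            importlib.import_module(pip_to_module[pip_name])
        except ImportError:
            missing.append(pip_name)
    return missing
```

Calling `find_missing(PIP_TO_MODULE)` returns an empty list when everything is installed. It does not check versions, so the Python 2.7 and PyTorch 0.3.0.post4 requirements still need to be verified separately.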

- EG1800: Since several image URL links are invalid in the original EG1800 dataset, we finally use 1447 images for training and 289 images for validation.

- Supervise-Portrait: a portrait segmentation dataset collected from the public human segmentation dataset Supervise.ly using the same data process as EG1800.

- Download the datasets (EG1800 or Supervise-Portrait). If you want to train on your own dataset, you need to modify data/datasets.py and data/datasets_portraitseg.py.

- Prepare training/testing files, like data/select_data/eg1800_train.txt and data/select_data/eg1800_test.txt.

- Select and modify the parameters in the config folder.

- Start training on a single GPU:

cd myTrain

python2.7 train.py
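The file-preparation step above can be sketched as follows for a custom dataset. This is a minimal sketch under stated assumptions, not the authors' script: it assumes each list file holds one image file name per line, which may differ from what data/datasets.py actually expects, so check the provided data/select_data/eg1800_train.txt before relying on it. The function name `write_split_files` and its parameters are hypothetical.

```python
import random


def write_split_files(image_names, train_path, test_path, num_test, seed=0):
    """Shuffle image names and write train/test list files.

    ASSUMPTION: one image file name per line. The real format used by
    the repository's list files may differ.
    """
    names = sorted(image_names)
    if not 0 <= num_test <= len(names):
        raise ValueError("num_test must be between 0 and len(image_names)")
    # A fixed seed keeps the split reproducible across runs.
    random.Random(seed).shuffle(names)
    test_names = names[:num_test]
    train_names = names[num_test:]
    for path, subset in ((train_path, train_names), (test_path, test_names)):
        with open(path, "w") as handle:
            for name in subset:
                handle.write(name + "\n")
    return train_names, test_names
```

For example, calling it with a list of image names, two output paths of your choice, and `num_test=289` on 1736 images would reproduce the size of the EG1800 split described above (1447 training, 289 validation), though not necessarily the same images.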

In the myTest folder:

- Use EvalModel.ipynb to test on the testing datasets.

- Use VideoTest.ipynb to test on a single image or video.

Use TensorBoard to visualize the training process:

cd path_to_save_model

tensorboard --logdir='./log'

From Dropbox:

- mobilenetv2_eg1800_with_two_auxiliary_losses (Training on EG1800 with two auxiliary losses)

- mobilenetv2_supervise_portrait_with_two_auxiliary_losses (Training on Supervise-Portrait with two auxiliary losses)

- mobilenetv2_total_with_prior_channel (Training on Human with prior channel)

From Baidu Cloud:

- mobilenetv2_eg1800_with_two_auxiliary_losses (Training on EG1800 with two auxiliary losses)

- mobilenetv2_supervise_portrait_with_two_auxiliary_losses (Training on Supervise-Portrait with two auxiliary losses)

- mobilenetv2_total_with_prior_channel (Training on Human with prior channel)