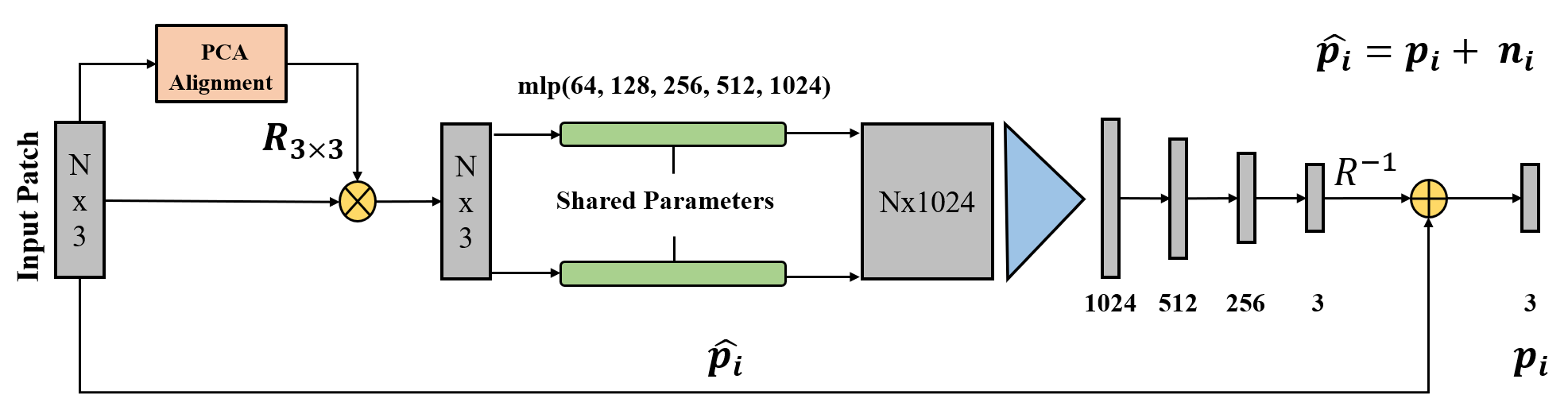

This is our implementation of Pointfilter, a network that automatically and robustly filters point clouds by removing noise and preserving their sharp features.

The pipeline is built on a patch-based version of PointNet. Instead of using an STN for alignment, we align each input patch by rotating the principal axes of its PCA onto the Cartesian axes.
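The PCA alignment described above can be sketched in a few lines of NumPy. This is an illustrative sketch, not the code from this repository: the function name `pca_align`, the descending ordering of the axes, and the sign convention are assumptions made for the example.

```python
import numpy as np


def pca_align(patch):
    """Rotate an (N, 3) patch so its principal axes lie along x, y, z.

    Hypothetical helper for illustration; the repository may order or
    orient the axes differently.
    """
    # Center the patch so the PCA describes its shape, not its position.
    centered = patch - patch.mean(axis=0)
    # 3x3 covariance matrix of the centered points.
    cov = centered.T @ centered / len(centered)
    # eigh returns eigenvalues in ascending order, eigenvectors as columns.
    _, eigvecs = np.linalg.eigh(cov)
    # Reorder so the axis with the largest variance comes first.
    axes = eigvecs[:, ::-1].copy()
    # Flip one axis if needed so the result is a rotation, not a reflection.
    if np.linalg.det(axes) < 0:
        axes[:, -1] *= -1
    # Express every point in the principal-axis basis.
    return centered @ axes, axes
```

After alignment, the covariance of the returned points is diagonal, so patches cut from differently oriented surfaces reach the network in a comparable pose without a learned transform.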

- Python 3.6

- PyTorch 1.5.0

- Windows 10 and VS 2017 (VS 2017 is used for compiling the chamfer operators)

- CUDA and CuDNN (CUDA 10.1 & CuDNN 7.5)

- TensorboardX 2.0 (only needed for logging training info)

You can download the training datasets used in this work from the following link, or prepare your own datasets and change the corresponding code in Pointfilter_DataLoader.py. Create a folder named Dataset and unzip the files into it. In the datasets, the input and ground-truth point clouds are stored in separate files with the '.npy' extension. For each clean point cloud name.npy with normals name_normal.npy, there are 5 corresponding noisy models named name_0.0025.npy, name_0.005.npy, name_0.01.npy, name_0.015.npy, and name_0.025.npy.
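As a quick illustration of the naming convention above, the following sketch builds the expected file paths for one shape. The helper `dataset_files`, the shape name `bunny`, and the assumption that all files sit directly inside the Dataset folder are hypothetical and not taken from Pointfilter_DataLoader.py.

```python
import os

# Noise-level suffixes, kept as strings so they match the file names exactly.
NOISE_LEVELS = ["0.0025", "0.005", "0.01", "0.015", "0.025"]


def dataset_files(name, root="./Dataset"):
    """Return the paths expected for one shape (hypothetical helper)."""
    return {
        "clean": os.path.join(root, name + ".npy"),
        "normals": os.path.join(root, name + "_normal.npy"),
        "noisy": [
            os.path.join(root, "{}_{}.npy".format(name, level))
            for level in NOISE_LEVELS
        ],
    }


if __name__ == "__main__":
    # "bunny" is a made-up shape name used only for this example.
    for path in dataset_files("bunny")["noisy"]:
        print(path, "found" if os.path.exists(path) else "missing")
```

Running this for each shape is a simple way to confirm that a self-prepared dataset follows the same layout before training; each existing file can then be read with `numpy.load`.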

Install required python packages:

pip install numpy

pip install scipy

pip install plyfile

pip install scikit-learn

pip install tensorboardX (only for training stage)

pip install torch==1.5.0+cu101 torchvision==0.6.0+cu101 -f https://download.pytorch.org/whl/torch_stable.html

Clone this repository:

git clone https://github.com/dongbo-BUAA-VR/Pointfilter.git

cd Pointfilter

Compile Chamfer Operators (only for evaluation):

cd ./Pointfilter/Customer_Module/chamfer_distance

python setup.py install

Use the script train.py to train a model on our dataset (the re-trained model will be saved at ./Summary/Train):

cd Pointfilter

python train.py

Test with the pre-trained model:

cd Pointfilter

python test.py --eval_dir ./Summary/pre_train_model

Test with the re-trained model:

cd Pointfilter

python test.py --eval_dir ./Summary/Train

If you use our work, please cite our paper:

@article{zhang2020pointfilter,

title={Pointfilter: Point cloud filtering via encoder-decoder modeling},

author={Zhang, Dongbo and Lu, Xuequan and Qin, Hong and He, Ying},

journal={IEEE Transactions on Visualization and Computer Graphics},

year={2020}

}

This code largely benefits from the following repositories: