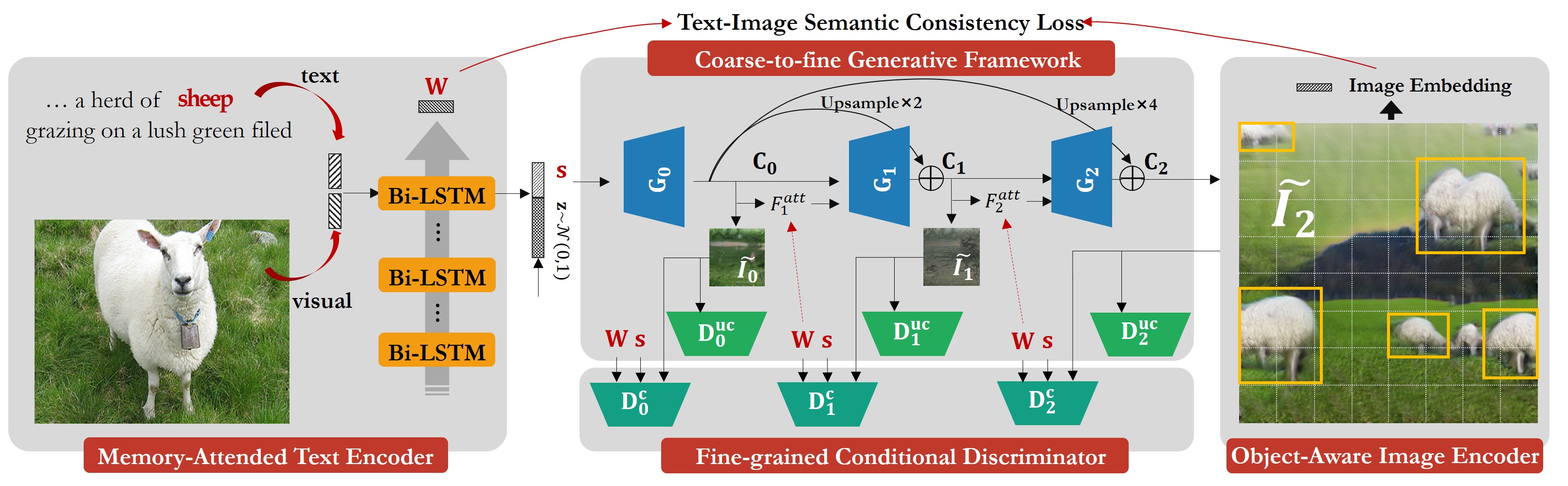

PyTorch implementation for reproducing the CPGAN results in the paper "CPGAN: Full-Spectrum Content-Parsing Generative Adversarial Networks for Text-to-Image Synthesis" by Jiadong Liang, Wenjie Pei, and Feng Lu.

- Create anaconda virtual environment

  `conda create -n CPGAN python=2.7`

  `conda activate CPGAN`

- Install PyTorch and dependencies from http://pytorch.org

  `conda install pytorch=1.0.1 torchvision==0.2.1 cudatoolkit=10.0.130`

- PIP Install

  `pip install python-dateutil easydict pandas torchfile nltk scikit-image h5py pyyaml`

- Clone this repo:

  `https://github.com/dongdongdong666/CPGAN.git`

- Download `train2014-text.zip` from here and unzip it to `data/coco/text/`.

- Unzip `val2014-text.zip` in `data/coco/text/`.

- Download memory features for each word from here and put the features in `memory/`.

- Download Inception Encoder, Generator, Text Encoder and put these models in `models/`.
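
Before running the scripts, it can help to confirm that the downloads landed where the steps above expect them. The following is a minimal sketch and not part of the repository: `find_missing_dirs` and `EXPECTED_DIRS` are hypothetical names, and the check assumes only the three directories named above, not any particular file names inside them.

```python
import os

# Directories named in the steps above, relative to the repository root.
EXPECTED_DIRS = ["data/coco/text", "memory", "models"]


def find_missing_dirs(repo_root, expected=EXPECTED_DIRS):
    """Return the expected directories that are missing or empty under repo_root."""
    missing = []
    for rel in expected:
        path = os.path.join(repo_root, *rel.split("/"))
        # A directory counts as missing if it does not exist or holds no entries.
        if not os.path.isdir(path) or not os.listdir(path):
            missing.append(rel)
    return missing
```

Calling `find_missing_dirs(".")` from the repository root returns an empty list once all three directories are populated.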

Sampling

- Set `B_VALIDATION:False` in "/code/cfg/eval_coco.yml".

- Run `python eval.py --cfg cfg/eval_coco.yml --gpu 0` to generate examples from captions listed in "data/coco/example_captions.txt". Results are saved to "outputs/Inference_Images/example_captions".
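
Since `eval.py` is driven by the `B_VALIDATION` flag in the config file, switching the flag can be scripted instead of edited by hand. This is a minimal sketch under stated assumptions: `set_b_validation` is a hypothetical helper, and it assumes the flag sits on its own line as `B_VALIDATION: True` or `B_VALIDATION: False` (with or without a space after the colon). It edits the text directly rather than parsing the YAML, so the rest of the file is left untouched.

```python
import re

# Matches the flag at the start of a line; group 1 keeps the original key and spacing.
_FLAG_RE = re.compile(r"^([ \t]*B_VALIDATION[ \t]*:[ \t]*)(True|False)\b", re.MULTILINE)


def set_b_validation(config_text, enabled):
    """Return config_text with the B_VALIDATION value set to enabled."""
    new_text, count = _FLAG_RE.subn(
        lambda m: m.group(1) + ("True" if enabled else "False"), config_text
    )
    if count != 1:
        raise ValueError("expected exactly one B_VALIDATION line, found %d" % count)
    return new_text
```

A caller would read `cfg/eval_coco.yml` into a string, pass it through `set_b_validation(text, False)` for sampling, and write the result back before running `eval.py`.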

Validation

- Set `B_VALIDATION:True` in "/code/cfg/eval_coco.yml".

- Run `python eval.py --cfg cfg/eval_coco.yml --gpu 0` to generate examples for all captions in the validation dataset. Results are saved to "outputs/Inference_Images/single".

- Compute the inception score for the model trained on COCO.

  `python caculate_IS.py --dir ../outputs/Inference_Images/single/`

- Compute R precision for the model trained on COCO.

  `python caculate_R_precison.py --cfg cfg/coco.yml --gpu 0 --valid_dir ../outputs/Inference_Images/single/`

TBA
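
For reference, the inception score is conventionally defined as exp(E_x[KL(p(y|x) || p(y))]), where p(y|x) is the class distribution an Inception classifier assigns to a generated image and p(y) is the average of those distributions. It is usually reported as mean ± standard deviation over several splits of the images. The sketch below illustrates that definition only. It does not reproduce `caculate_IS.py`, it assumes the class probabilities have already been computed, and `inception_score` and `num_splits` are illustrative names.

```python
import math


def inception_score(probs, num_splits=1):
    """Return (mean, std) of the inception score over num_splits chunks.

    probs is a list of per-image class-probability rows, each summing to 1.
    """
    n = len(probs)
    if n < num_splits:
        raise ValueError("need at least one image per split")
    scores = []
    for k in range(num_splits):
        part = probs[k * n // num_splits:(k + 1) * n // num_splits]
        num_classes = len(part[0])
        # Marginal class distribution p(y) over this chunk.
        marginal = [sum(row[c] for row in part) / len(part) for c in range(num_classes)]
        # Sum of KL(p(y|x) || p(y)); zero-probability terms contribute nothing.
        kl_sum = 0.0
        for row in part:
            kl_sum += sum(p * math.log(p / q) for p, q in zip(row, marginal) if p > 0)
        scores.append(math.exp(kl_sum / len(part)))
    mean = sum(scores) / len(scores)
    std = math.sqrt(sum((s - mean) ** 2 for s in scores) / len(scores))
    return mean, std
```

Confident and diverse predictions push the score up, while identical predictions for every image give the minimum score of 1.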

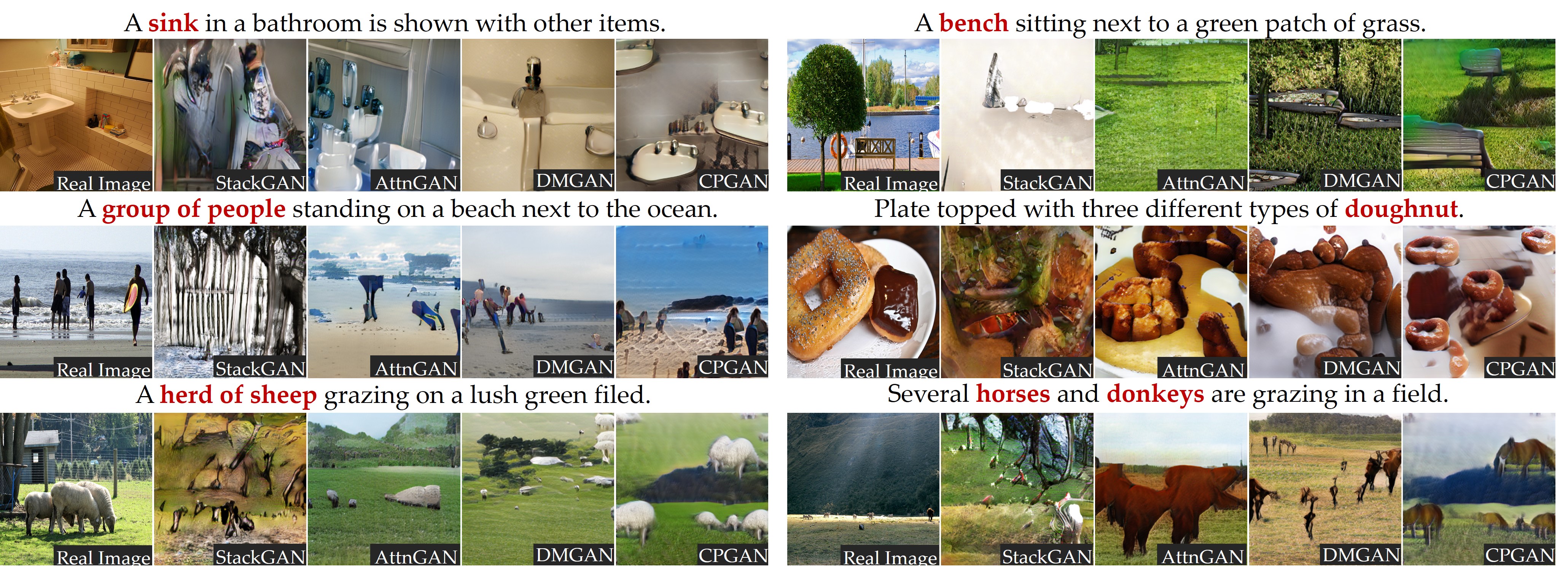

- Qualitative comparison between different modules of our model for the ablation study. The results of AttnGAN are also provided for reference.

- Qualitative comparison between our CPGAN and other classical models for text-to-image synthesis.

Note that after cleaning and refactoring the code of the paper, the results are slightly different. We use the PyTorch implementation to measure inception score and R precision.

| Model | R-precision↑ | IS↑ |
|---|---|---|
| Reed | (-) | 7.88 ± 0.07 |
| StackGAN | (-) | 8.45 ± 0.03 |
| Infer | (-) | 11.46 ± 0.09 |
| SD-GAN | (-) | 35.69 ± 0.50 |
| MirrorGAN | (-) | 26.47 ± 0.41 |
| SEGAN | (-) | 27.86 ± 0.31 |
| DMGAN | 88.56% | 30.49 ± 0.57 |
| AttnGAN | 82.98% | 25.89 ± 0.47 |
| objGAN | 91.05% | 30.29 ± 0.33 |
| CPGAN | 93.59% | 52.73 ± 0.61 |
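
As background for the R-precision column: in the protocol introduced with AttnGAN, each generated image is compared by cosine similarity against the embedding of its own caption and the embeddings of a set of randomly chosen mismatched captions, and the image counts as a hit when its own caption ranks first. The sketch below illustrates that top-1 ranking on precomputed feature vectors. Whether `caculate_R_precison.py` follows exactly this protocol (for example, how many mismatched captions it draws) is an assumption, and `cosine` and `r_precision_top1` are hypothetical names.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def r_precision_top1(image_feats, true_text_feats, distractor_text_feats):
    """Fraction of images whose own caption outranks every mismatched caption.

    image_feats[i] and true_text_feats[i] form a matched pair;
    distractor_text_feats[i] is a list of mismatched caption embeddings.
    """
    hits = 0
    for img, true_txt, distractors in zip(image_feats, true_text_feats, distractor_text_feats):
        true_score = cosine(img, true_txt)
        # Hit only if the matching caption is strictly the most similar one.
        if all(true_score > cosine(img, d) for d in distractors):
            hits += 1
    return hits / float(len(image_feats))
```

Multiplying the returned fraction by 100 gives a percentage in the same form as the table entries.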

If you find CPGAN useful in your research, please consider citing:

    @misc{liang2019cpgan,
      title={CPGAN: Full-Spectrum Content-Parsing Generative Adversarial Networks for Text-to-Image Synthesis},
      author={Jiadong Liang and Wenjie Pei and Feng Lu},
      year={2019},
      eprint={1912.08562},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
    }