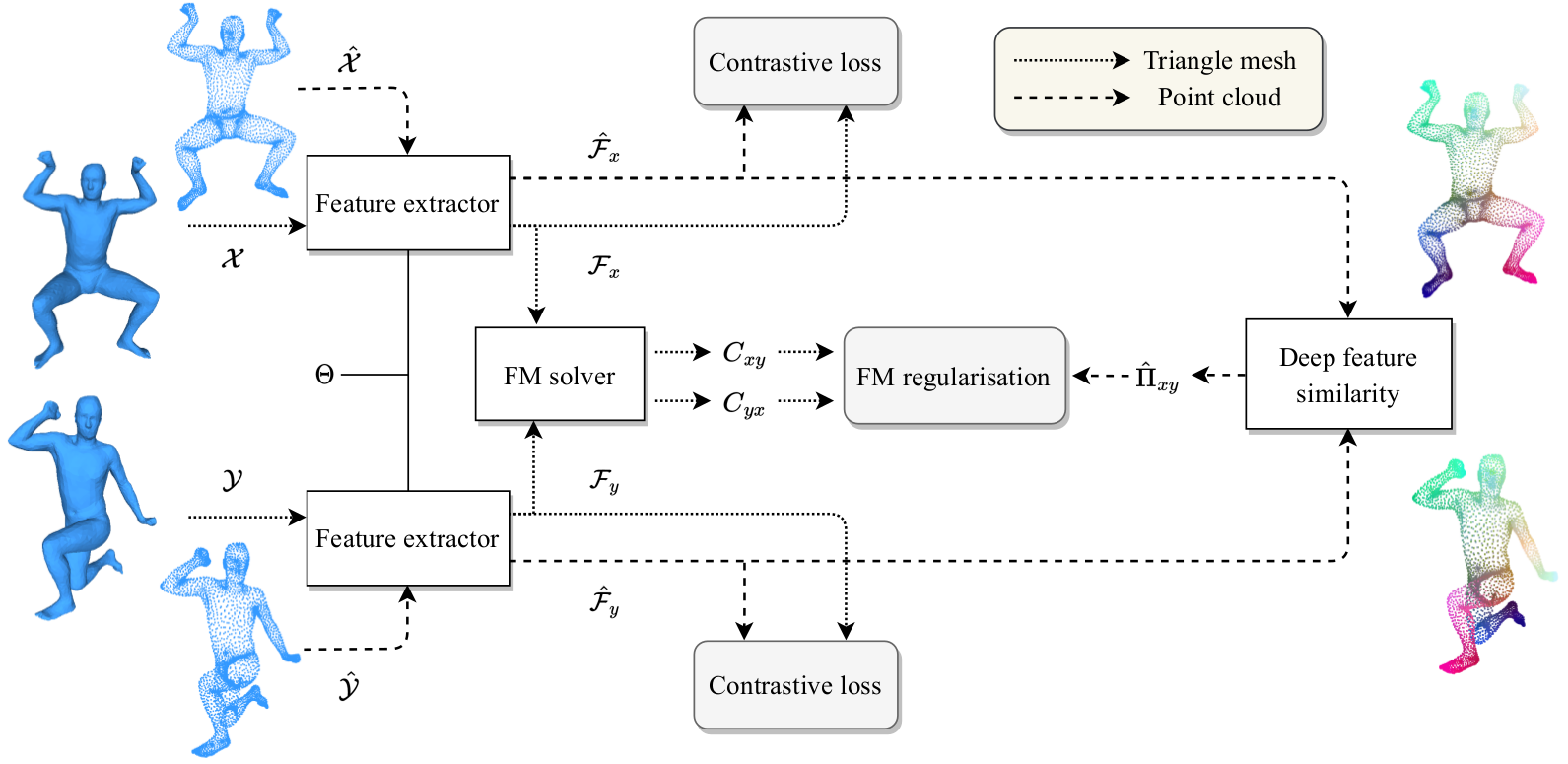

Self-Supervised Learning for Multimodal Non-Rigid 3D Shape Matching (CVPR 2023, selected as highlight)

conda create -n fmnet python=3.8 # create a new virtual environment

conda activate fmnet

conda install pytorch cudatoolkit -c pytorch # install pytorch

pip install -r requirements.txt # install other necessary libraries via pip

To train and test on the datasets used in this paper, please download them from the following links:

We thank the original dataset providers for their contributions to the shape analysis community; all credit should go to the original authors.

For data preprocessing, we provide preprocess.py, which computes everything the method needs (the Laplace-Beltrami operator (LBO) eigenfunctions and the geodesic distance matrix). Here is an example for the FAUST remesh data. The data root should contain a subfolder named `off` holding all triangle mesh files.
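preprocess.py itself is not reproduced here. As a rough, hypothetical illustration of one of its outputs, the sketch below reads an ASCII OFF mesh (the format expected in the `off` subfolder) and builds an all-pairs distance matrix by running Dijkstra's algorithm on the mesh edge graph. This is a stand-in under stated assumptions: shortest paths along edges only approximate true surface geodesics (they are never shorter), and the script may well use a different solver. The names `read_off`, `edge_graph`, `geodesic_matrix` and `SQUARE_OFF` are made up for this example.

```python
import heapq
import math
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]

# Toy mesh (hypothetical): unit square split into two triangles along edge 0-2.
SQUARE_OFF = """OFF
4 2 0
0 0 0
1 0 0
1 1 0
0 1 0
3 0 1 2
3 0 2 3
"""


def read_off(text: str) -> Tuple[List[Vertex], List[List[int]]]:
    """Parse a minimal ASCII OFF file: header, counts, vertices, then faces."""
    # Strip '#' comments and split everything into whitespace-separated tokens.
    tokens = " ".join(line.split("#", 1)[0] for line in text.splitlines()).split()
    if tokens[0] != "OFF":
        raise ValueError("expected an OFF header")
    n_verts, n_faces = int(tokens[1]), int(tokens[2])  # tokens[3] = edge count, unused
    pos = 4
    verts: List[Vertex] = []
    for _ in range(n_verts):
        x, y, z = (float(t) for t in tokens[pos:pos + 3])
        verts.append((x, y, z))
        pos += 3
    faces: List[List[int]] = []
    for _ in range(n_faces):
        k = int(tokens[pos])  # number of vertices in this face
        faces.append([int(t) for t in tokens[pos + 1:pos + 1 + k]])
        pos += 1 + k
    return verts, faces


def edge_graph(verts: List[Vertex], faces: List[List[int]]) -> List[Dict[int, float]]:
    """Undirected adjacency lists weighted by Euclidean edge length."""
    adj: List[Dict[int, float]] = [{} for _ in verts]
    for face in faces:
        # Walk the face boundary: (v0, v1), (v1, v2), ..., (vk, v0).
        for a, b in zip(face, face[1:] + face[:1]):
            w = math.dist(verts[a], verts[b])
            adj[a][b] = w
            adj[b][a] = w
    return adj


def geodesic_matrix(verts: List[Vertex], faces: List[List[int]]) -> List[List[float]]:
    """Approximate geodesics: Dijkstra from every vertex on the edge graph."""
    adj = edge_graph(verts, faces)
    n = len(verts)
    dist: List[List[float]] = []
    for src in range(n):
        d = [math.inf] * n
        d[src] = 0.0
        heap = [(0.0, src)]
        while heap:
            du, u = heapq.heappop(heap)
            if du > d[u]:
                continue  # stale queue entry
            for v, w in adj[u].items():
                if du + w < d[v]:
                    d[v] = du + w
                    heapq.heappush(heap, (d[v], v))
        dist.append(d)
    return dist


if __name__ == "__main__":
    verts, faces = read_off(SQUARE_OFF)
    D = geodesic_matrix(verts, faces)
    print(D[0][2], D[1][3])  # diagonal edge vs. two-edge path
```

On the two-triangle square, vertices 1 and 3 end up 2.0 apart even though the flat surface distance between them is about 1.414, which shows the overestimate of edge-graph paths. One pure-Python Dijkstra per vertex is also far too slow for full-resolution meshes; the sketch only conveys the idea. The FAUST remesh preprocessing itself is run with: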

python preprocess.py --data_root ../data/FAUST_r/ --no_normalize --n_eig 128

To train a model for 3D shape matching, you only need to write (or reuse) a YAML config file, in which you can specify all training settings. Here is an example:

python train.py --opt options/train.yaml

You can visualize the training process via TensorBoard:

tensorboard --logdir experiments/

After training, you can evaluate the model with a YAML config file similar to the one used for training. Here is an example:

python test.py --opt options/test.yaml

For reproducibility, pre-trained models on the SURREAL-5k dataset are provided in `checkpoints`.
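The README does not say which metrics test.py reports. A common way to score a point-to-point map in non-rigid shape matching is the geodesic error: for each source vertex, the geodesic distance on the target shape between the predicted and the ground-truth match, usually divided by a shape-size factor such as the square root of the surface area. The sketch below computes per-vertex errors, their mean, and the fraction under a threshold; the function names, the `normalizer` argument and the toy distance matrix are assumptions for illustration only.

```python
from typing import List, Sequence


def geodesic_errors(
    dist: Sequence[Sequence[float]],
    pred: Sequence[int],
    gt: Sequence[int],
    normalizer: float = 1.0,
) -> List[float]:
    """Per-vertex geodesic error of a point-to-point map.

    dist[i][j]: geodesic distance between vertices i and j on the target shape.
    pred[k] / gt[k]: predicted / ground-truth target vertex for source vertex k.
    normalizer: shape-size factor, e.g. sqrt(target surface area) (assumed).
    """
    if len(pred) != len(gt):
        raise ValueError("pred and gt must have the same length")
    return [dist[p][g] / normalizer for p, g in zip(pred, gt)]


def mean_error(errors: Sequence[float]) -> float:
    """Average error over all source vertices."""
    return sum(errors) / len(errors)


def fraction_below(errors: Sequence[float], threshold: float) -> float:
    """Fraction of correspondences whose error is at most `threshold`."""
    return sum(1 for e in errors if e <= threshold) / len(errors)


if __name__ == "__main__":
    # Toy target: three vertices on a line at positions 0, 1 and 3 (hypothetical).
    dist = [[0.0, 1.0, 3.0], [1.0, 0.0, 2.0], [3.0, 2.0, 0.0]]
    errs = geodesic_errors(dist, pred=[0, 2, 2], gt=[0, 1, 2])
    print(errs, mean_error(errs), fraction_below(errs, 1.0))
```

Evaluating `fraction_below` over a range of thresholds gives the cumulative correspondence curve that is often plotted alongside the mean error.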

The implementation of DiffusionNet is modified from the official implementation; the computation of the functional map (FM) unsupervised loss and the FM solvers are modified from SURFMNet-pytorch and DPFM; and the Sinkhorn normalization is adapted from a TensorFlow implementation.

We thank the authors for making their code publicly available.
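Only the origin of the Sinkhorn normalization is stated above. For readers unfamiliar with it, here is a generic, hypothetical sketch of the technique: starting from exp(S / tau) for a square score matrix S, rows and columns are normalized in turn, which drives the matrix toward a doubly stochastic one (a soft permutation). It works in the log domain for numerical stability. `tau` and `n_iters` are illustrative parameters, not this repository's settings, and the code is plain Python rather than the adapted TensorFlow version.

```python
import math
from typing import List

Matrix = List[List[float]]


def logsumexp(values: List[float]) -> float:
    """Numerically stable log(sum(exp(v))) for finite values."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def sinkhorn(scores: Matrix, tau: float = 0.1, n_iters: int = 50) -> Matrix:
    """Turn a square score matrix into an approximately doubly stochastic one.

    Computes exp(scores / tau) and alternately normalizes rows and columns,
    all in log space to avoid overflow for small tau.
    """
    n = len(scores)
    if any(len(row) != n for row in scores):
        raise ValueError("this sketch expects a square score matrix")
    log_p = [[s / tau for s in row] for row in scores]
    for _ in range(n_iters):
        # Row step: make every row sum to 1.
        for i in range(n):
            lse = logsumexp(log_p[i])
            log_p[i] = [v - lse for v in log_p[i]]
        # Column step: make every column sum to 1.
        for j in range(n):
            lse = logsumexp([log_p[i][j] for i in range(n)])
            for i in range(n):
                log_p[i][j] -= lse
    return [[math.exp(v) for v in row] for row in log_p]


if __name__ == "__main__":
    P = sinkhorn([[1.0, 0.0], [0.0, 1.0]], tau=0.1)
    print([round(v, 4) for v in P[0]])
```

A smaller `tau` typically pushes the result closer to a hard permutation, at the cost of needing more iterations to converge.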

If you find the code useful, please cite the following paper:

@inproceedings{cao2023,
  title = {Self-Supervised Learning for Multimodal Non-Rigid 3D Shape Matching},
  author = {D. Cao and F. Bernard},
  year = {2023},
  booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)}
}