🚧 Warning: This project is still under development; code and features may be modified or deprecated.

- Support for open-domain APIs

- Train more LoRA adapters for more tasks, and add them to the model's selection list

- Make the model automatically select which LoRA adapter is needed to complete the user's task

- Support for multi-round chat

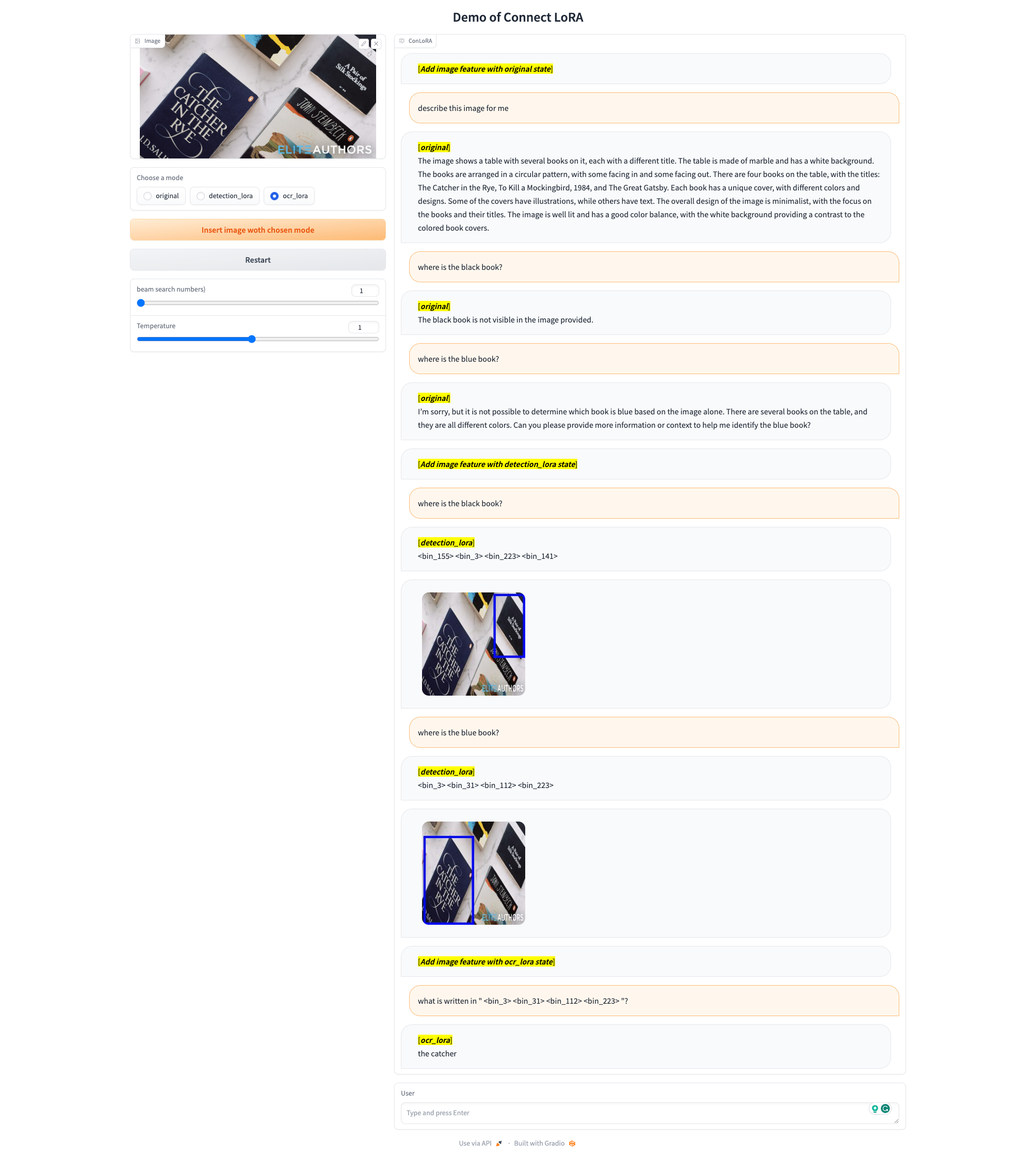

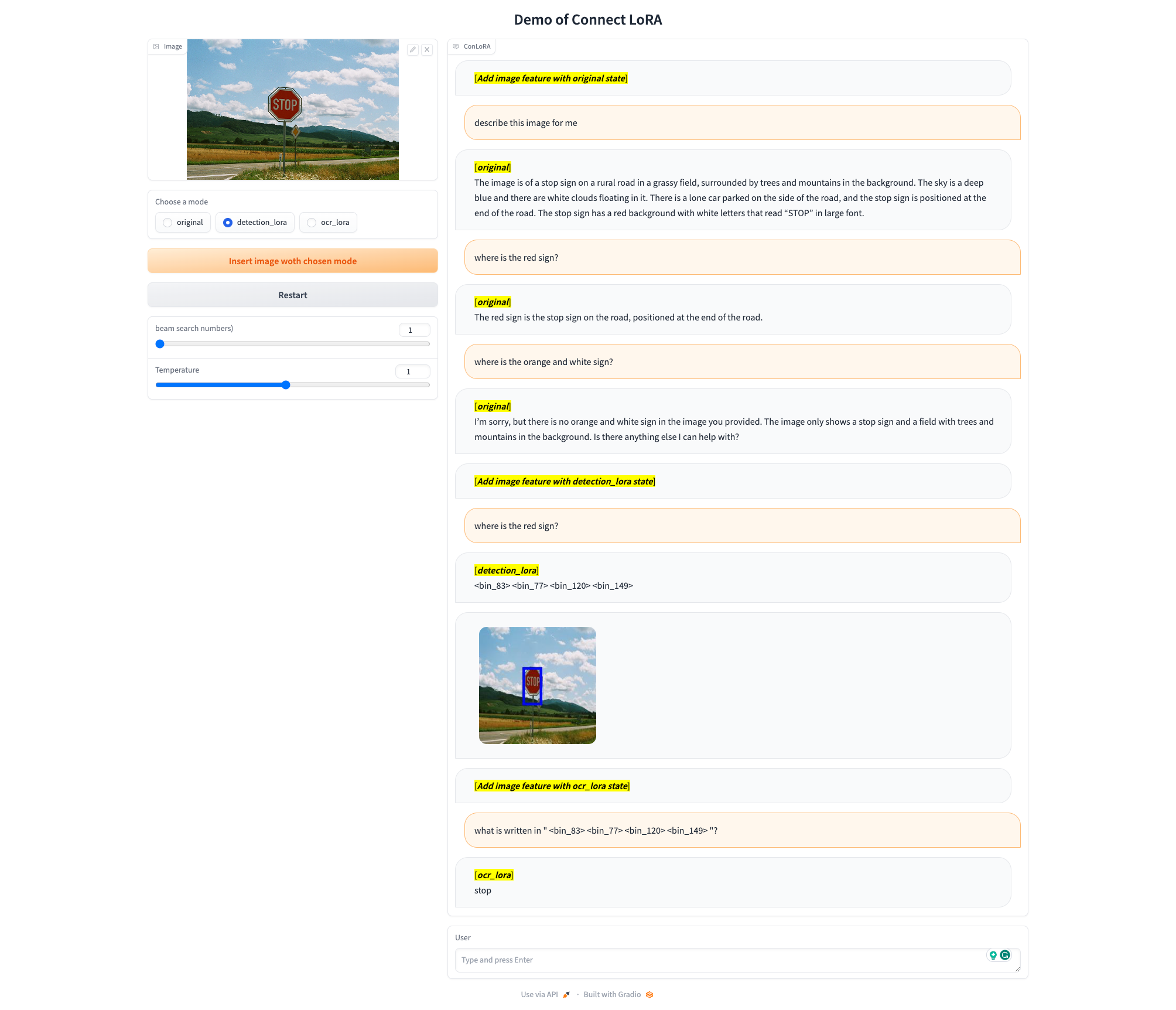

- Let the user choose the LoRA type in the demo interface

- LoRA for the OCR task

- LoRA for the visual grounding task

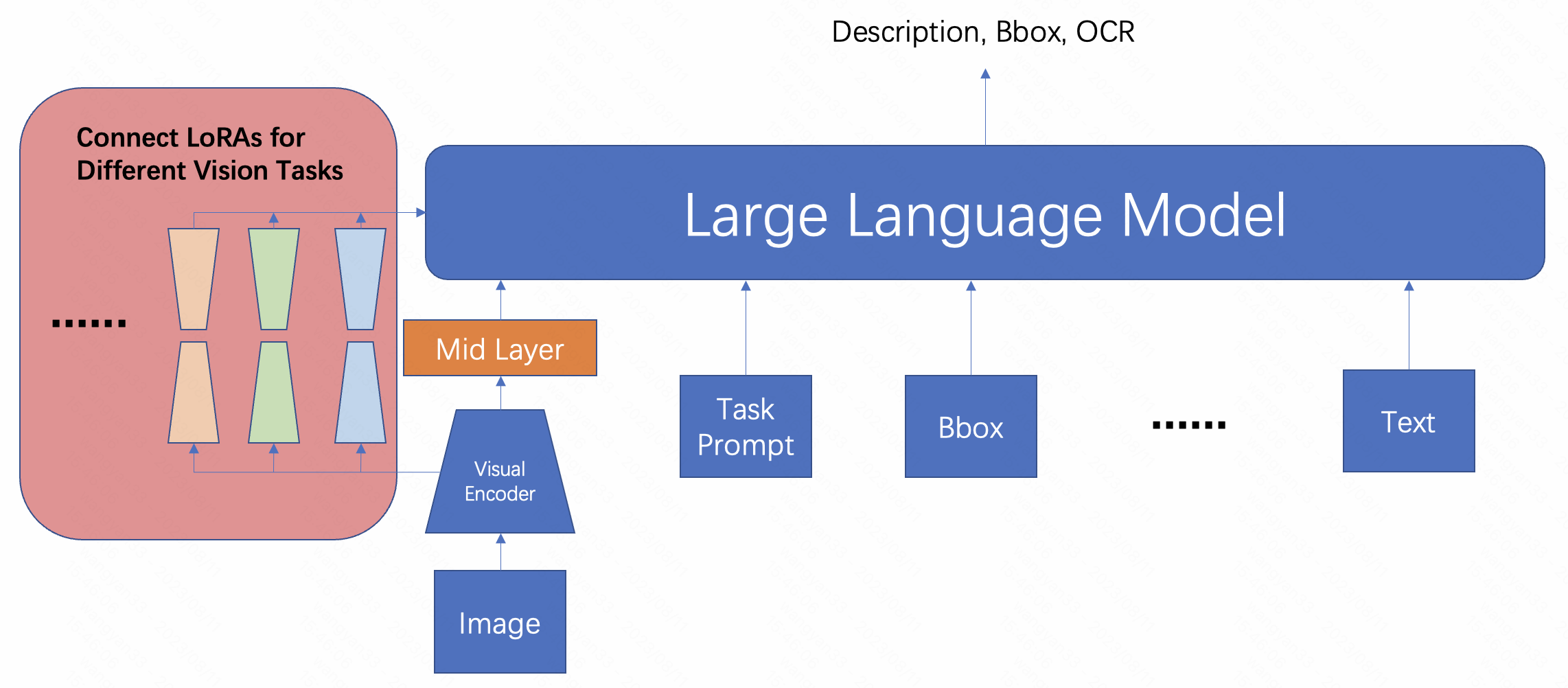

- This project started from Mini-GPT4, an image-text QA project.

- We apply LoRA to the ViT, the mid-layer (Q-Former), and the LLM to align visual and language features and to adapt the model to different tasks.

- We add LoRA only to the upper layers of the ViT, which allows a single vision model to be adapted to different vision tasks.

- Mini-GPT4: This repository is currently built upon Mini-GPT4; thanks to its authors for their code and pretrained weights.
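For readers unfamiliar with LoRA, the sketch below shows the standard low-rank update applied to a single frozen linear layer: the output is `W x + (alpha / r) * B (A x)`, where only `A` and `B` would be trained. It is a minimal, framework-free illustration and not this repository's implementation. The class name `LoRALinear`, the Gaussian initialization of `A`, the zero initialization of `B`, and the `alpha / rank` scaling are assumptions chosen to match the common formulation.

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    # Multiply a (rows x cols) matrix by a vector of length cols.
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALinear:
    """Hypothetical sketch: frozen weight W plus a low-rank update (alpha / rank) * B @ A."""

    def __init__(self, weight: Matrix, rank: int, alpha: float, seed: int = 0):
        self.weight = weight  # frozen pretrained weight, shape (out, in)
        out_dim, in_dim = len(weight), len(weight[0])
        rng = random.Random(seed)
        # A: (rank, in) with small random values; B: (out, rank) all zeros,
        # so the adapter adds nothing until B is trained.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(in_dim)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(out_dim)]
        self.scale = alpha / rank

    def forward(self, x: Vector) -> Vector:
        base = matvec(self.weight, x)                 # frozen path
        low_rank = matvec(self.B, matvec(self.A, x))  # trainable low-rank path
        return [b + self.scale * d for b, d in zip(base, low_rank)]


if __name__ == "__main__":
    layer = LoRALinear([[1.0, 0.0], [0.0, 2.0]], rank=1, alpha=2.0)
    print(layer.forward([3.0, 4.0]))  # [3.0, 8.0]: B starts at zero
```

Because `B` starts at zero, the adapted layer initially reproduces the pretrained layer exactly, and training only the small `A` and `B` matrices is what makes it cheap to keep one adapter per task.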
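The idea of adding LoRA only to the upper ViT layers, so that one vision backbone serves several tasks, can be sketched as follows. This is a hypothetical, heavily simplified model: each "block" is a plain linear map standing in for a transformer block, and the names `MultiTaskEncoder`, `add_adapter`, and the task keys `"ocr"` and `"grounding"` are illustrative assumptions, not this repository's API. The lower blocks are shared and frozen for every task; only the top `num_adapted` blocks accept per-task low-rank updates, which are selected by the `task` argument (similar in spirit to choosing a LoRA type in the demo).

```python
from typing import Dict, List, Optional, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    # Multiply a (rows x cols) matrix by a vector of length cols.
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class MultiTaskEncoder:
    """Hypothetical sketch: frozen block stack; only the top blocks get per-task LoRA."""

    def __init__(self, blocks: List[Matrix], num_adapted: int):
        self.blocks = blocks  # frozen weights, one matrix per block
        self.first_adapted = len(blocks) - num_adapted
        # adapters[task][block_index] = (A, B, scale)
        self.adapters: Dict[str, Dict[int, Tuple[Matrix, Matrix, float]]] = {}

    def add_adapter(self, task: str, layer: int, A: Matrix, B: Matrix, scale: float = 1.0) -> None:
        # Refuse adapters on lower blocks so they stay shared across all tasks.
        if layer < self.first_adapted:
            raise ValueError("LoRA is only attached to the upper layers")
        self.adapters.setdefault(task, {})[layer] = (A, B, scale)

    def forward(self, x: Vector, task: Optional[str] = None) -> Vector:
        # An unknown or missing task falls back to the plain frozen model.
        task_adapters = self.adapters.get(task, {}) if task is not None else {}
        h = x
        for i, w in enumerate(self.blocks):
            out = matvec(w, h)
            if i in task_adapters:
                A, B, scale = task_adapters[i]
                delta = matvec(B, matvec(A, h))
                out = [o + scale * d for o, d in zip(out, delta)]
            h = out
        return h


if __name__ == "__main__":
    eye = [[1.0, 0.0], [0.0, 1.0]]
    enc = MultiTaskEncoder([eye, eye, eye], num_adapted=1)
    enc.add_adapter("ocr", 2, A=[[1.0, 0.0]], B=[[0.0], [1.0]])
    print(enc.forward([1.0, 2.0], task="ocr"))  # [1.0, 3.0]
    print(enc.forward([1.0, 2.0]))              # [1.0, 2.0]
```

A side effect of this design is that the outputs of the shared lower blocks are identical for every task, so in a real system they could in principle be computed once per image and reused when switching adapters.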

This repository is released under the BSD 3-Clause License. Much of the code is based on Lavis, which is also released under the BSD 3-Clause License.