This is the codebase for the GAPSLAM paper. This work aims to infer, in real time, the marginal posteriors of the robot poses and landmark locations encountered in SLAM. The paper has been accepted at IROS 2023, and an arXiv version is also available.

@inproceedings{huang23gapslam,

title={{GAPSLAM}: Blending {G}aussian Approximation and Particle Filters for Real-Time Non-{G}aussian {SLAM}},

author={Qiangqiang Huang and John J. Leonard},

booktitle={Proc. IEEE/RSJ Int. Conf. Intell. Robots Syst.},

year={2023}

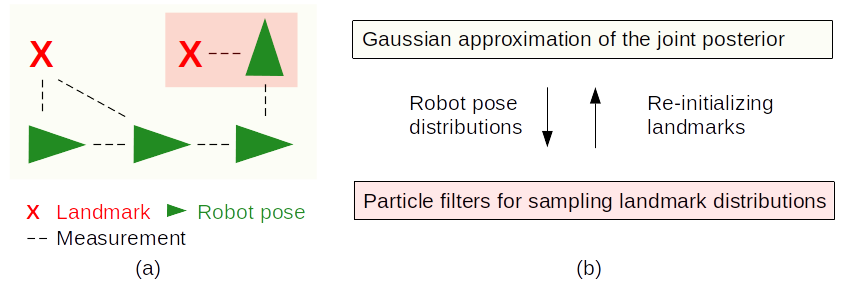

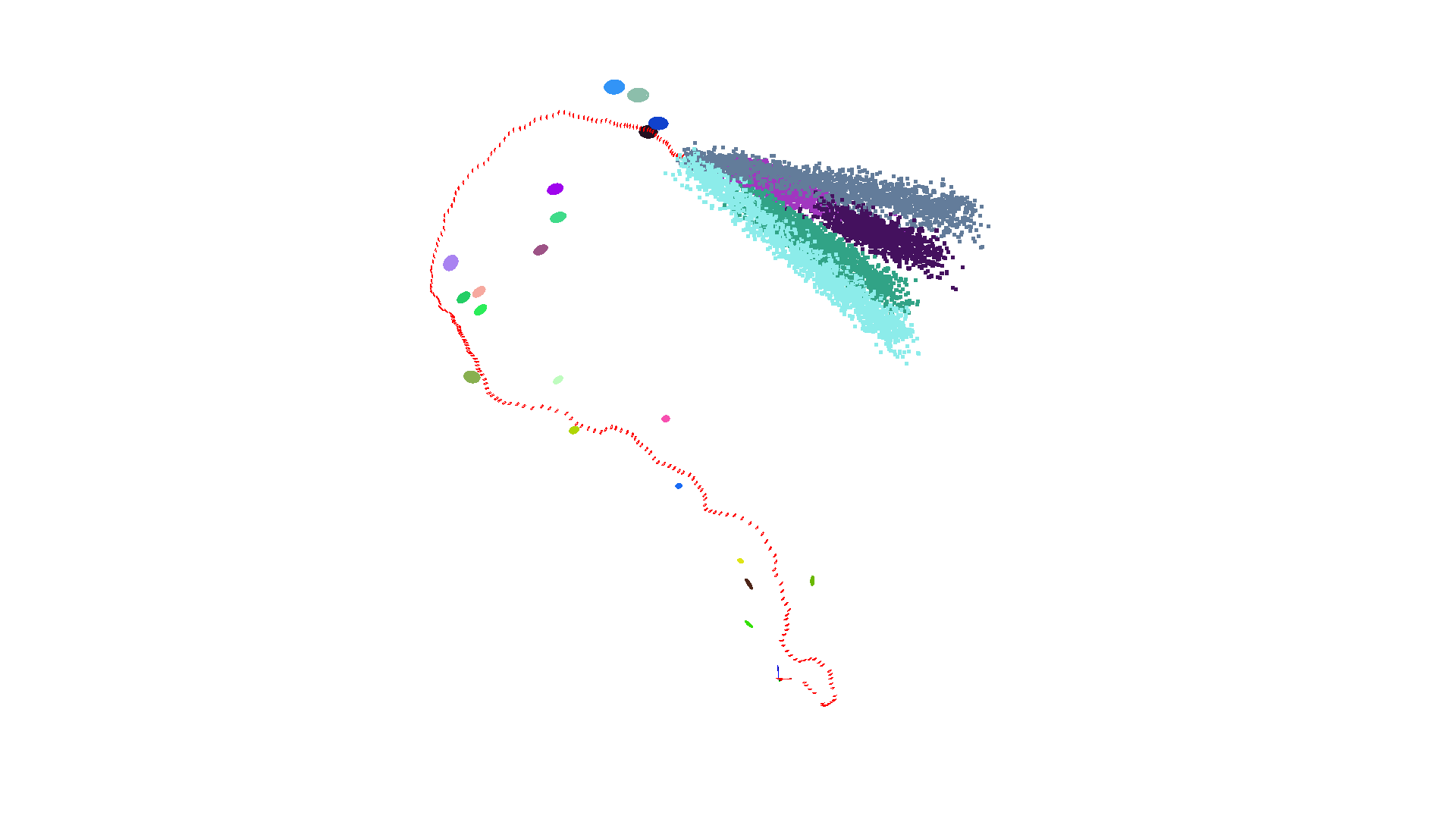

}Illustration of our method: (a) a SLAM example, where the robot moves along poses in green and makes measurements to landmarks in red, and (b) our method, which blends Gaussian approximation in yellow and particle filters in pink. The Gaussian approximation, centered on the maximum a posteriori (MAP) estimate, provides robot pose distributions on which the particle filters are conditioned to draw samples that represent landmark distributions. If a sample attains a higher probability than the MAP estimate when evaluating the posterior, landmarks in the Gaussian solver will be re-initialized by that sample.
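To make the caption's "sample, then compare against the MAP" idea concrete, here is a minimal 2D toy sketch. It is not the repository's implementation, and every function name in it is hypothetical. It assumes position-only robot poses, Gaussian range noise, a flat landmark prior, landmark samples proposed from a single range measurement, and a posterior scored with the robot poses held at their MAP values. It also omits the importance weighting and resampling that a full particle filter would perform.

```python
import numpy as np


def range_log_lik(pose, landmarks, z, sigma):
    """Gaussian range log-likelihood, up to an additive constant.

    `landmarks` may be a single point (2,) or a batch (N, 2).
    """
    predicted = np.linalg.norm(landmarks - pose, axis=-1)
    return -0.5 * ((z - predicted) / sigma) ** 2


def landmark_log_posterior(landmarks, pose_map, ranges, sigma):
    """Unnormalized landmark log-posterior with robot poses fixed at MAP.

    Assumes a flat landmark prior, so only the range factors contribute.
    """
    return sum(range_log_lik(p, landmarks, z, sigma)
               for p, z in zip(pose_map, ranges))


def sample_and_compare(pose_map, pose_cov, ranges, sigma, landmark_map,
                       num_samples=500, seed=0):
    """Hypothetical sketch of the Gaussian/sample blending step.

    Returns (landmark estimate, landmark samples, reinitialized flag).
    """
    rng = np.random.default_rng(seed)
    # (1) Sample the first robot pose from its Gaussian marginal
    #     (mean = MAP estimate, covariance from the Gaussian solver).
    pose_samples = rng.multivariate_normal(pose_map[0], pose_cov,
                                           size=num_samples)
    # (2) Conditioned on each pose sample, draw a landmark sample on a noisy
    #     ring whose radius is that pose's range measurement.
    radii = ranges[0] + sigma * rng.standard_normal(num_samples)
    angles = rng.uniform(-np.pi, np.pi, size=num_samples)
    offsets = np.stack([radii * np.cos(angles), radii * np.sin(angles)], axis=1)
    landmark_samples = pose_samples + offsets
    # (3) Evaluate the posterior at every sample and at the current MAP.
    sample_scores = landmark_log_posterior(landmark_samples, pose_map,
                                           ranges, sigma)
    map_score = landmark_log_posterior(landmark_map, pose_map, ranges, sigma)
    best = int(np.argmax(sample_scores))
    # (4) Re-initialize the Gaussian solver's landmark if a sample beats the MAP.
    if sample_scores[best] > map_score:
        return landmark_samples[best], landmark_samples, True
    return landmark_map, landmark_samples, False


# Usage: three poses whose ranges are consistent with a landmark at (2, 1.5),
# and a poorly placed landmark estimate at (2, -1.5) in the Gaussian solver.
pose_map = np.array([[0.0, 0.0], [4.0, 0.0], [2.0, 4.0]])
ranges = np.array([2.5, 2.5, 2.5])
estimate, samples, reinit = sample_and_compare(
    pose_map, 0.01 * np.eye(2), ranges, sigma=0.1,
    landmark_map=np.array([2.0, -1.5]))
print(reinit, estimate)
```

When the MAP estimate already sits at the best mode, no sample can beat it and the Gaussian estimate is kept unchanged.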

We provide the code and examples of the algorithms described in the paper. A teaser video about the work:

gapslam_video_h264.mp4

The following instructions were tested on Ubuntu 20.04.

```bash
sudo apt-get install gcc libc6-dev
sudo apt-get install gfortran libgfortran3
sudo apt-get install libsuitesparse-dev
```

```bash
git clone git@github.com:doublestrong/GAPSLAM.git
cd GAPSLAM
conda env create -f env.yaml
conda activate gapslam
pip install -r requirements.txt
python setup.py install
```

We provide examples for range-only SLAM and object-based bearing-only SLAM. In the range-only SLAM example, we compare GAPSLAM with other methods that also aim at full posterior inference. In the object-based SLAM example, we demonstrate that GAPSLAM can be scaled to 3D SLAM and generalized to other types of measurements (e.g., bearing-only).
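The two examples differ mainly in their measurement models. As a rough illustration, and assuming Gaussian noise models that may not match the ones used in this repository, the likelihoods of a 2D range measurement and a 3D bearing measurement could be written as follows (both function names are hypothetical):

```python
import numpy as np


def range_log_lik(robot_xy, landmark_xy, z, sigma):
    """Range-only factor (2D): Gaussian noise on the Euclidean distance.

    Returns the log-likelihood up to an additive constant.
    """
    diff = np.asarray(landmark_xy, float) - np.asarray(robot_xy, float)
    predicted = np.linalg.norm(diff)
    return -0.5 * ((z - predicted) / sigma) ** 2


def bearing_log_lik(cam_pos, cam_rot, obj_pos, z_dir, sigma):
    """Bearing-only factor (3D): Gaussian noise on the angle between the
    predicted and measured unit viewing directions in the camera frame.

    `cam_rot` is the 3x3 rotation from camera to world coordinates.
    """
    # Express the object position in the camera frame and normalize it.
    d = cam_rot.T @ (np.asarray(obj_pos, float) - np.asarray(cam_pos, float))
    d = d / np.linalg.norm(d)
    z = np.asarray(z_dir, float)
    z = z / np.linalg.norm(z)
    # Angular error between predicted and measured directions.
    angle = np.arccos(np.clip(d @ z, -1.0, 1.0))
    return -0.5 * (angle / sigma) ** 2
```

Note that `bearing_log_lik` returns the same value when the object slides along the viewing ray (e.g., from 5 m to 50 m in front of the camera), so a single bearing leaves depth unconstrained. This is one reason the resulting posteriors can be strongly non-Gaussian.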

We provide several scripts in the following directory for running the range-only SLAM experiment. All results are saved in the folder RangeOnlyDataset.

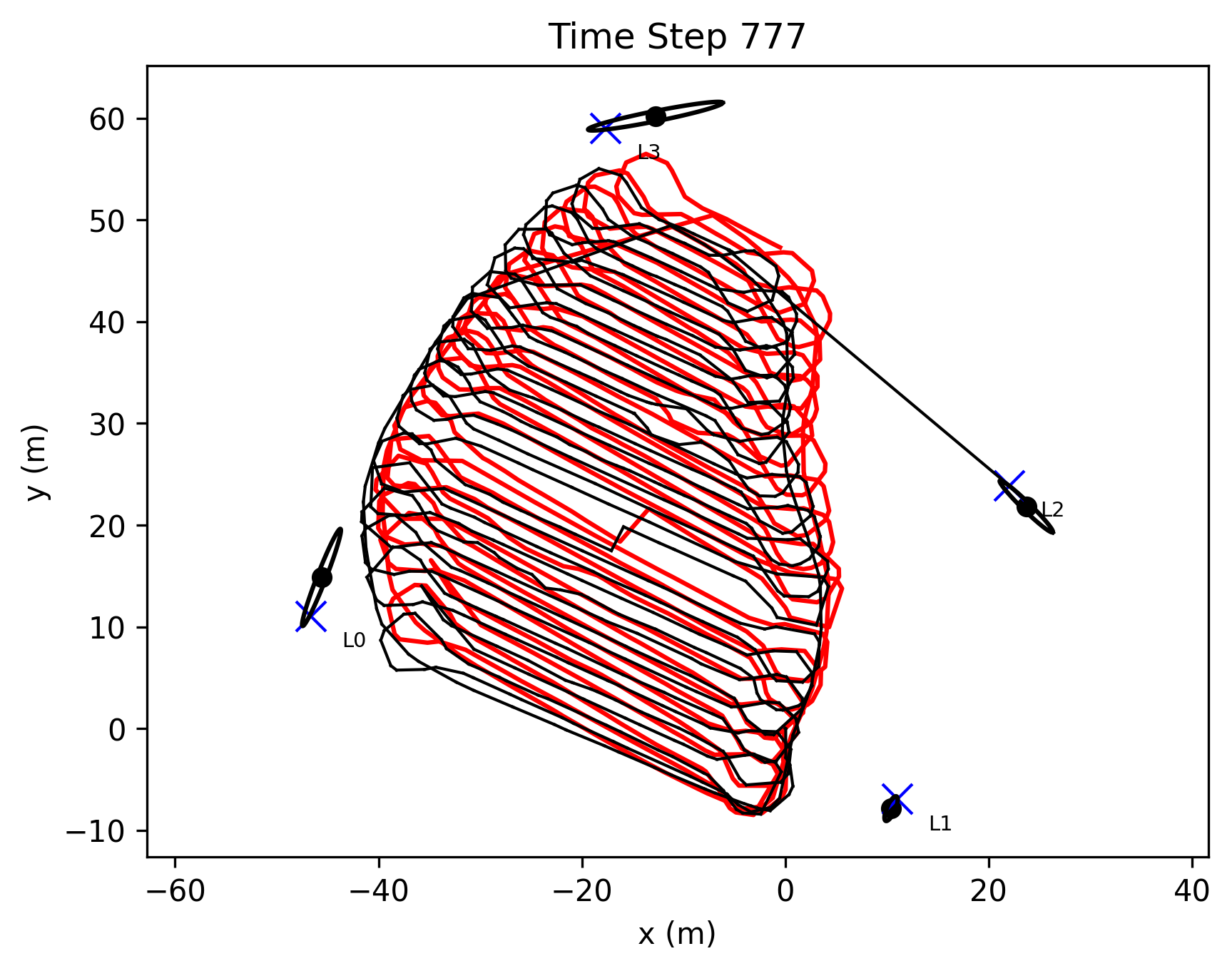

```bash
cd example/slam/rangeOnlySLAM
```

If you just want the final estimates of the robot path and landmark positions, run

```bash
python run_gapslam.py
```

and then, in RangeOnlyDataset/Plaza1EFG/batch_gap1, you can find:

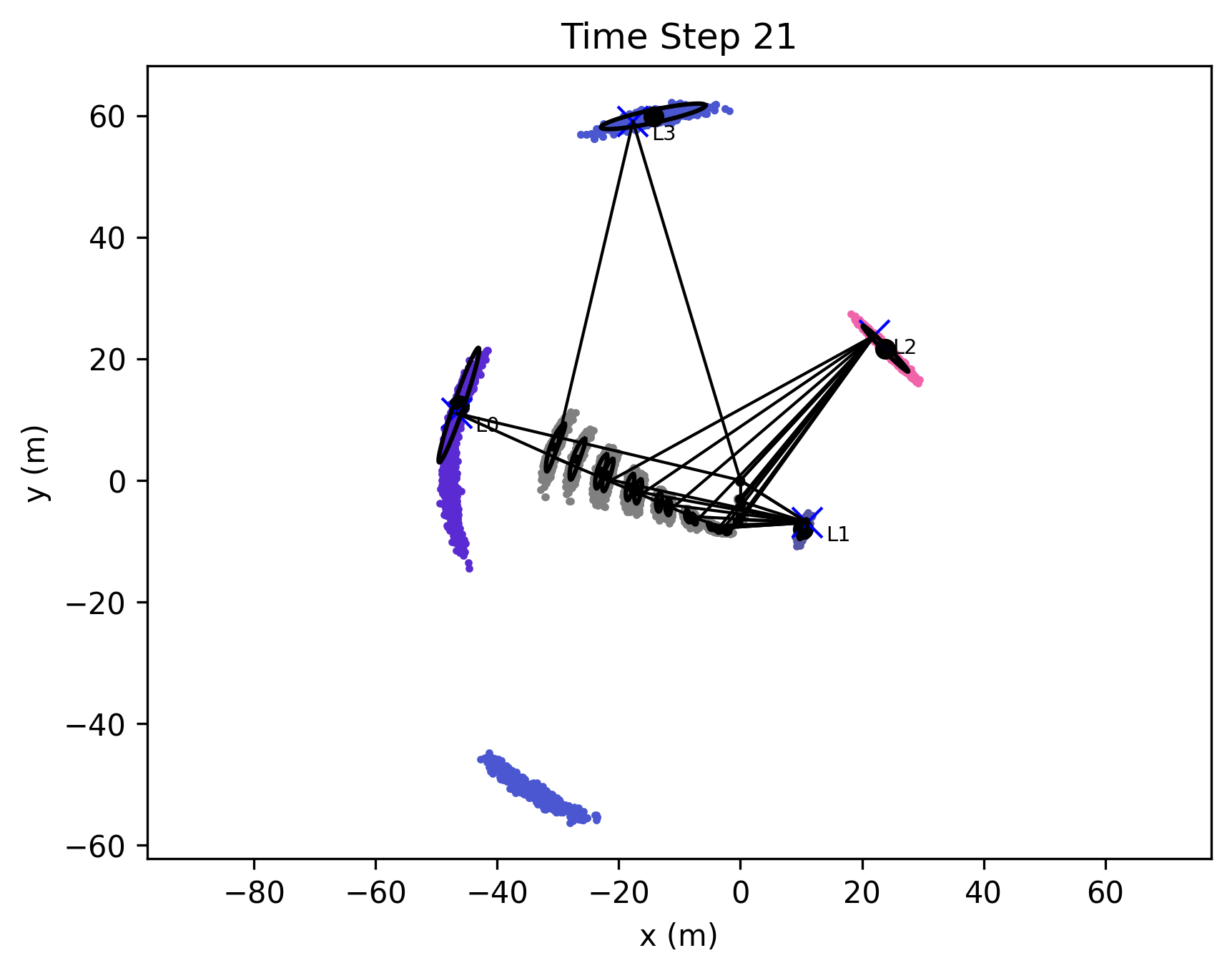

If you want to see how the posteriors of robot poses and landmark positions evolve, run

```bash
python run_gapslam_visual.py
```

and then, in RangeOnlyDataset/Plaza1EFG/gap1, you can find the resulting visualizations.

Methods for comparison:

- RBPF-SOG, which is our implementation of the algorithms in this paper:

  ```bash
  python run_sog_rbpf.py
  python run_sog_rbpf_visual.py
  ```

- NSFG, which is our implementation of the algorithms in this paper:

  ```bash
  python run_nsfg.py
  ```
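To run all of the range-only scripts listed above in one go, a small driver such as the following could be used. It is a hypothetical helper, not part of the repository; it assumes the `gapslam` conda environment is active and that the scripts need no command-line arguments.

```python
import subprocess
import sys
from pathlib import Path

# Script names taken from this README; they live in example/slam/rangeOnlySLAM.
RANGE_ONLY_SCRIPTS = [
    "run_gapslam.py",
    "run_gapslam_visual.py",
    "run_sog_rbpf.py",
    "run_sog_rbpf_visual.py",
    "run_nsfg.py",
]


def build_commands(scripts, python=sys.executable):
    """Return one [interpreter, script] command per script."""
    return [[python, script] for script in scripts]


def run_all(workdir, scripts=RANGE_ONLY_SCRIPTS, dry_run=False):
    """Run each script sequentially inside `workdir` (hypothetical helper).

    With dry_run=True, only print the commands instead of executing them.
    Raises CalledProcessError at the first script that exits non-zero.
    """
    workdir = Path(workdir)
    commands = build_commands(scripts)
    for cmd in commands:
        if dry_run:
            print(f"[dry run] {' '.join(cmd)}  (cwd={workdir})")
        else:
            subprocess.run(cmd, cwd=workdir, check=True)
    return commands
```

For example, from the repository root, `run_all("example/slam/rangeOnlySLAM", dry_run=True)` lists the commands; dropping `dry_run` executes them. Because of `check=True`, the driver stops at the first script that fails.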

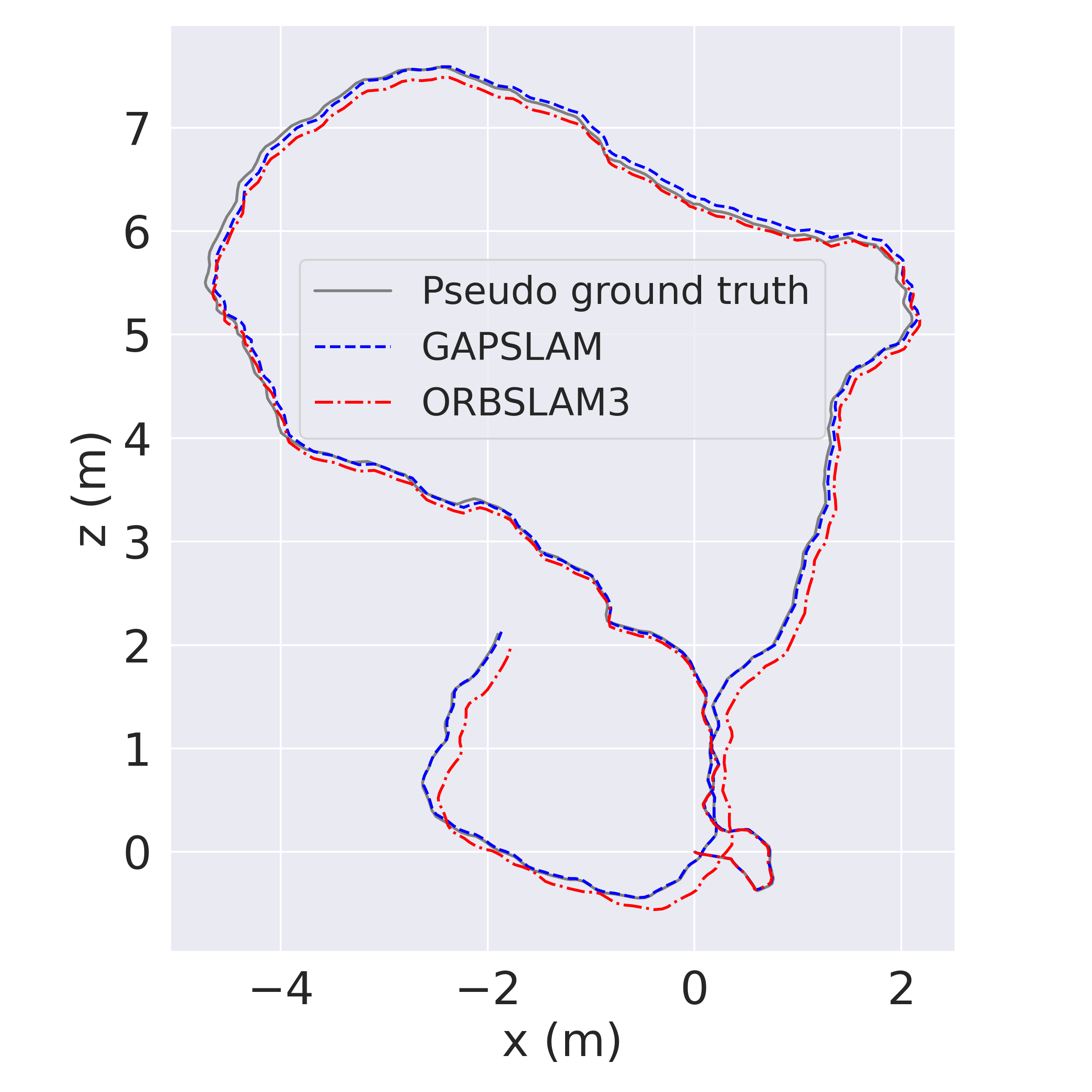

We provide some visual odometry and object detection data for testing our code. Unzip the data into example/slam/objectBasedSLAM/realworld_objslam_data. If you just want the final estimates of the robot path and object locations, run

```bash
cd example/slam/objectBasedSLAM
python realworld_office_gapslam.py
```

and then, in realworld_objslam_data/results/run0, you can find the results.

If you want to visualize the posteriors of object locations, run

```bash
python realworld_office_gapslam_visual.py
```

and then, in realworld_objslam_data/results/run1, you can find the visualizations.