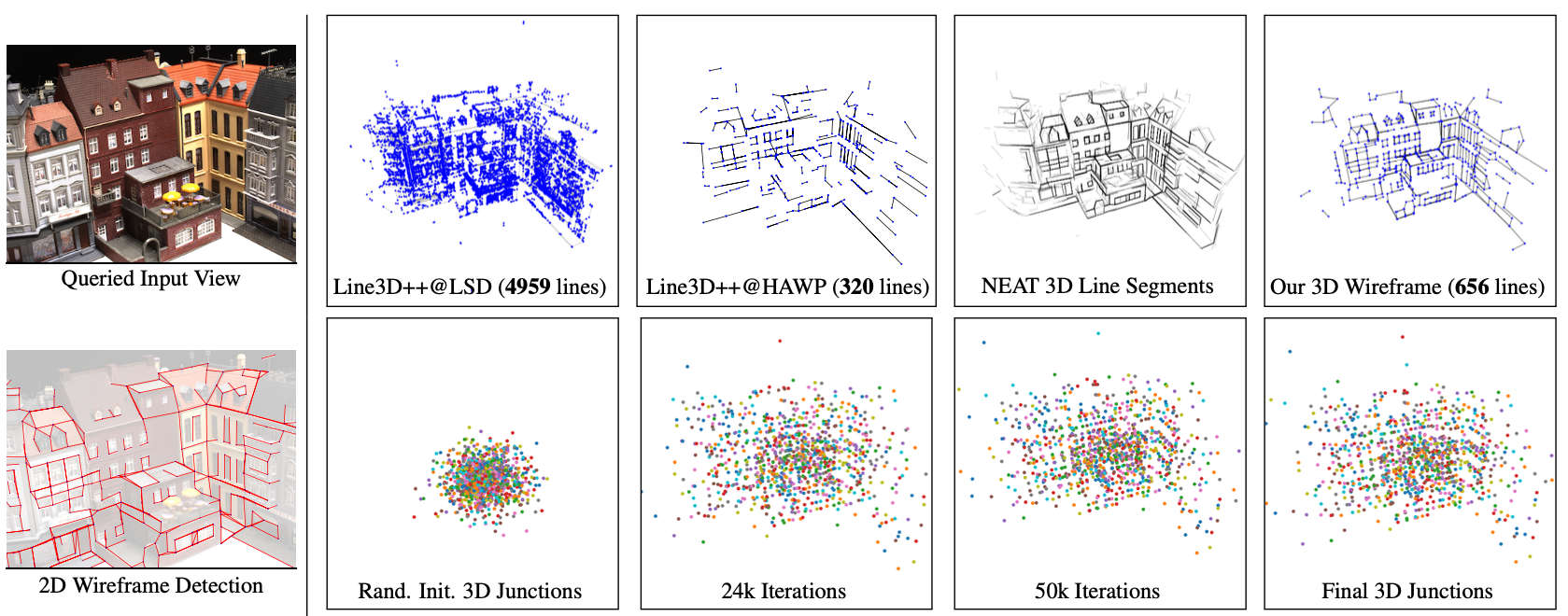

Volumetric Wireframe Parsing from Neural Attraction Fields

Nan Xue, Bin Tan, Yuxi Xiao, Liang Dong, Gui-Song Xia, Tianfu Wu

2023

This project is built on volsdf. We also thank the four anonymous reviewers for their feedback on the paper writing, listed as follows (copied from the CMT system):

- "The proposed method is novel and very interesting and the authors' rebuttal address some of my concerns. However, as pointed out by other reviewers, many parts need to be revised to clarify the proposed method, and another careful review is necessary after the revisions. Therefore, my final rating is Borderline Reject." by Reviewer 2

- "After reviewing the opinions of other reviewers and reading the rebuttal, I have observed common issues in terms of writing and explanation. Despite my experience of over ten years in the field of 3D vision, I found it challenging to follow the submission, which is its main weakness. However, during the rebuttal, the authors made efforts to address most of the concerns raised, including the completion metric. They compared their approaches with more recent research works and demonstrated superior quantitative performance compared to the comparison methods. Moreover, I find the idea of reconstructing a wireframe using implicit fields to be a novel and intriguing research direction. Considering this work as a pioneering effort, I am inclined to have a positive stance on it." by Reviewer 3

- "After reading the other reviews and the authors' responses, I still lean on rejection. As pointed out by all the reviewers, the paper needs significant rework on writing. Even after reading the authors' feedback, some key details are still lacking or confusing. I do not think the paper in its current form is ready for publication, even adding the explanation provided in the authors' response. Quality-wise, although some quantitative improvement is achieved, the visual quality does not improve much. In addition, there exist many spurious strokes in almost every result, making it difficult to use in real applications -- additional errors could be introduced due to the spurious artifacts." by Reviewer 4

- "After reading rebuttals and other reviews, I think this paper is not ready to be published as its writing need significant revisions. The overall idea nad method is novel and interesting, while the current draft has too many unclear points and I sincerely believe this paper could be hugely improved by revision." by Reviewer 5

git clone https://github.com/cherubicXN/neat.git --recursive

1. Create a conda environment

conda create -n neat python=3.10

conda activate neat

2. Install PyTorch 1.13.1 with CUDA 11.7

pip install torch==1.13.1+cu117 torchvision==0.14.1+cu117 torchaudio==0.13.1 --extra-index-url https://download.pytorch.org/whl/cu117

3. Install hawp from third-party/hawp

cd third-party/hawp

pip install -e .

cd ../..
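Before continuing, it may be worth confirming that the interpreter sees the pinned PyTorch build. The following is a minimal sketch rather than part of the repository: the helper name `describe_environment` and the fields it reports are hypothetical, and it only relies on standard PyTorch attributes (`torch.__version__`, `torch.version.cuda`, `torch.cuda.is_available()`).

```python
import sys


def describe_environment(expected_torch="1.13.1"):
    """Report the Python/PyTorch setup without failing when torch is absent."""
    info = {
        "python": "{}.{}".format(*sys.version_info[:2]),
        "torch_installed": False,
        "torch_version": None,
        "torch_matches_pin": False,
        "cuda_build": None,
        "cuda_available": False,
    }
    try:
        import torch
    except ImportError:
        return info

    info["torch_installed"] = True
    info["torch_version"] = torch.__version__
    # Wheels from the cu117 index report versions such as "1.13.1+cu117".
    info["torch_matches_pin"] = torch.__version__.split("+")[0] == expected_torch
    info["cuda_build"] = torch.version.cuda  # None for CPU-only builds
    info["cuda_available"] = torch.cuda.is_available()
    return info


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```

If `torch_matches_pin` is false or `cuda_build` is not `11.7`, the environment differs from the one pinned above, which does not necessarily mean the code will fail.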

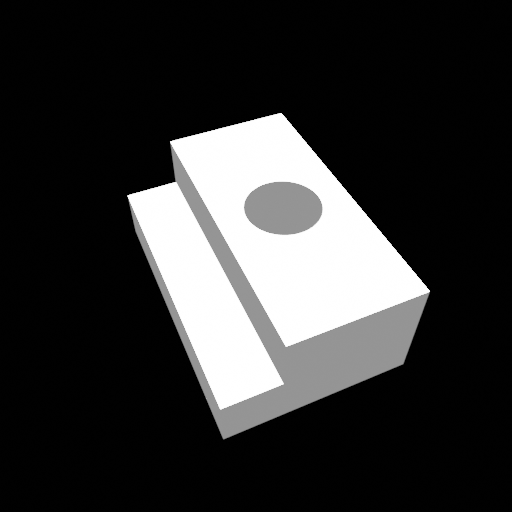

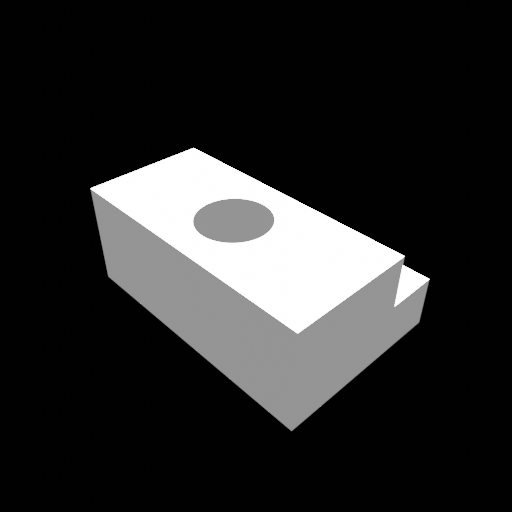

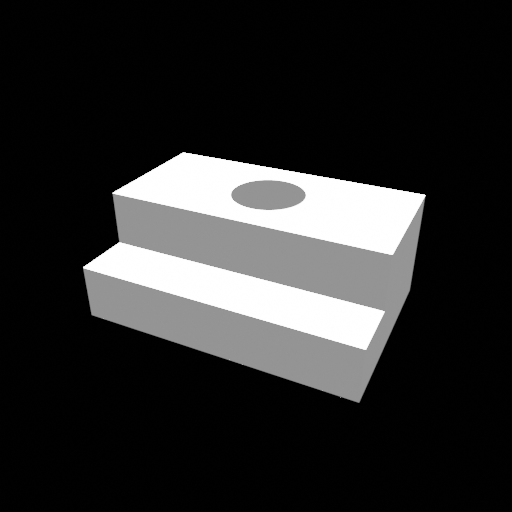

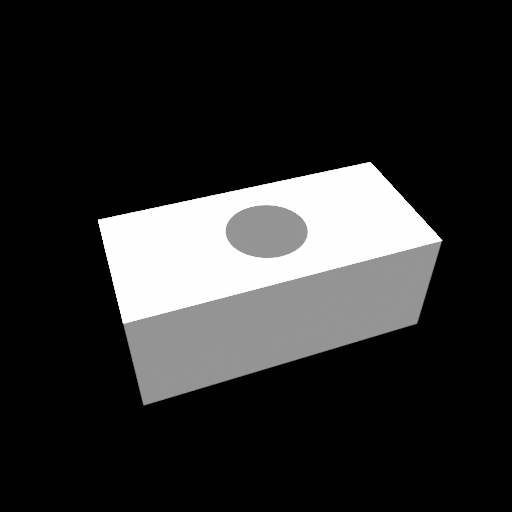

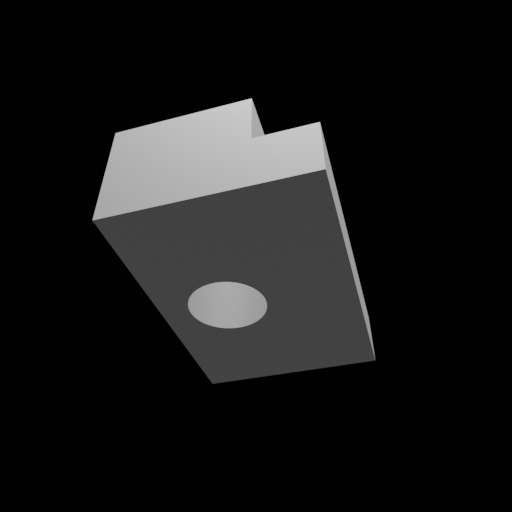

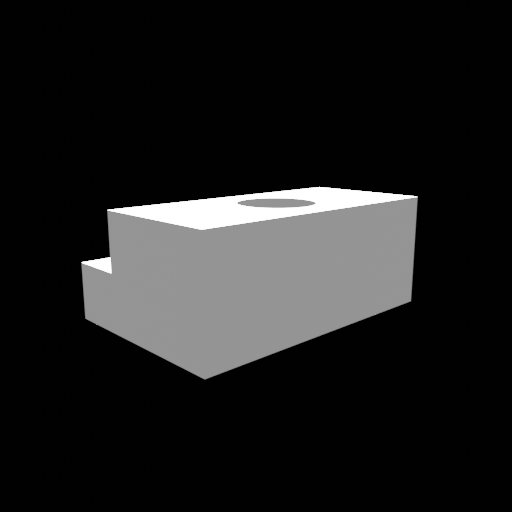

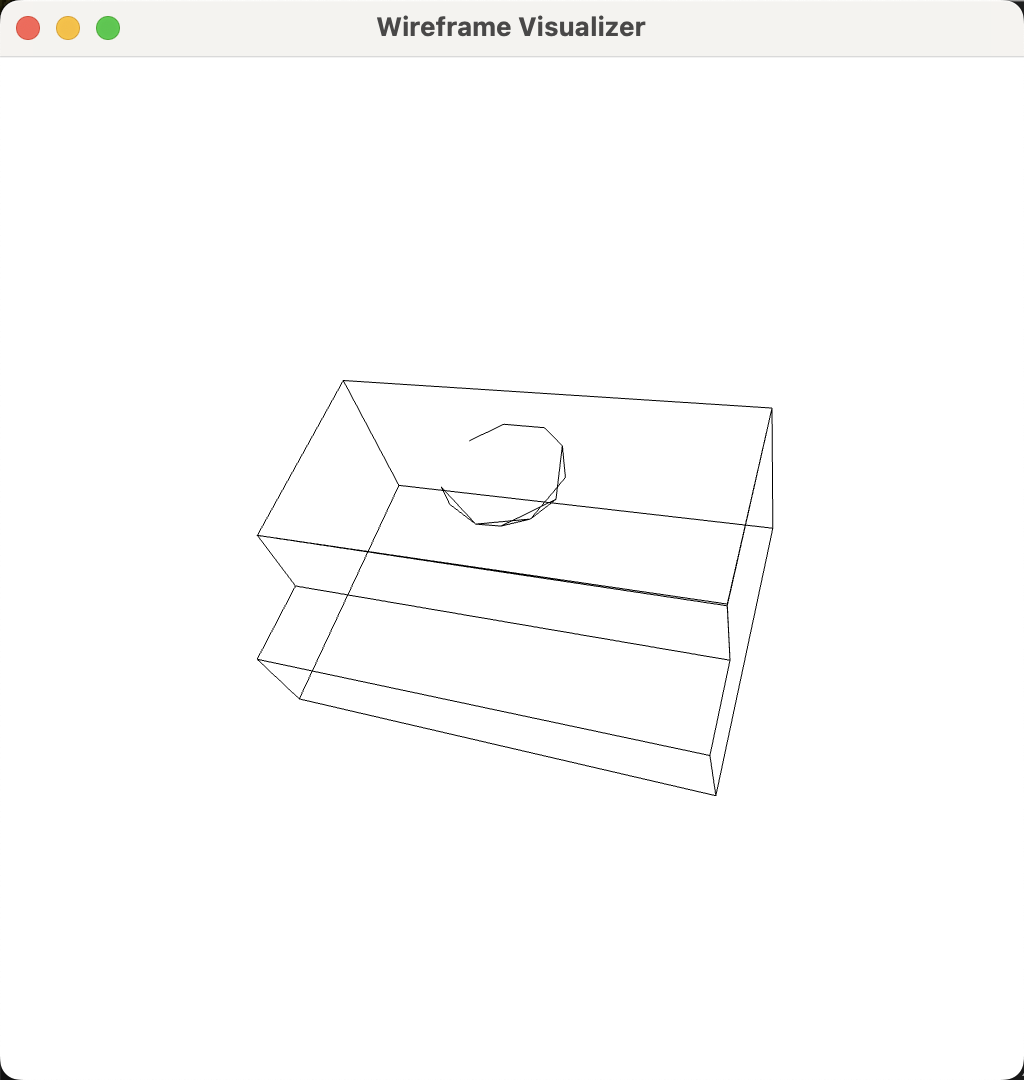

4. Install the remaining dependencies

pip install -r requirements.txt

A toy example on a simple object from the ABC dataset

- Step 1: Training or Optimization

python training/exp_runner.py --conf confs/abc-debug/abc-neat-a.conf --nepoch 2000 --tbvis

Here, --nepoch sets the number of epochs for training/optimization, and --tbvis enables tensorboard visualization of the 3D junctions.

- Step 2: Finalize the NEAT wireframe model

python neat-final-parsing.py --conf ../exps/abc-neat-a/{timestamp}/runconf.conf --checkpoint 1000

After running the above command, you will get four files in ../exps/abc-neat-a/{timestamp}/wireframes with the prefix {epoch}-{hash}*, where {epoch} is the checkpoint you evaluated and {hash} is a hash of the finalization hyperparameters. The four files differ in their suffixes:

- {epoch}-{hash}-all.npz stores all the line segments from the NEAT field,
- {epoch}-{hash}-wfi.npz stores the initial wireframe model without visibility checking, which contains some artifacts among the wireframe edges,
- {epoch}-{hash}-wfi_checked.npz stores the wireframe model after visibility checking, which reduces the edge artifacts,
- {epoch}-{hash}-neat.pth stores the above three files and some other information in the pth format.
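The naming scheme above can be used to collect the outputs of a run programmatically. The sketch below is illustrative only: `latest_run_dir` and `find_outputs` are hypothetical helpers, not functions from the repository. It assumes that each run lives in its own {timestamp} sub-directory, that the most recently modified one is the run of interest, and that {epoch} appears in the file name exactly as passed to `--checkpoint`.

```python
from pathlib import Path

# Suffixes taken from the list of output files described above.
OUTPUT_SUFFIXES = ("all.npz", "wfi.npz", "wfi_checked.npz", "neat.pth")


def latest_run_dir(exp_root):
    """Return the most recently modified sub-directory of exp_root.

    Assumption: every run is stored in its own {timestamp} directory.
    """
    runs = [p for p in Path(exp_root).iterdir() if p.is_dir()]
    if not runs:
        raise FileNotFoundError(f"no run directories under {exp_root}")
    return max(runs, key=lambda p: p.stat().st_mtime)


def find_outputs(wireframe_dir, epoch):
    """Map each suffix to the sorted {epoch}-{hash}-<suffix> files found.

    A list is returned per suffix because several {hash} values may coexist
    when finalization is run with different hyperparameters.
    """
    wireframe_dir = Path(wireframe_dir)
    return {
        suffix: sorted(wireframe_dir.glob(f"{epoch}-*-{suffix}"))
        for suffix in OUTPUT_SUFFIXES
    }
```

For the toy example, one would call `latest_run_dir("../exps/abc-neat-a")` and then `find_outputs(...)` on the output directory that the run actually produced, with `epoch=1000`.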

- Step 3: Visualize the 3D wireframe model

python visualization/show.py --data ../exps/abc-neat-a/{timestamp}/wireframe/{filename}.npz

- Currently, the visualization script only supports local runs.

- The open3d (v0.17) plugin for tensorboard is slow.

- Precomputed results

- Data preparation

- Evaluation code
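Because the visualization script in Step 3 only runs locally, it can help to inspect the .npz files from Step 2 on a remote machine before copying them over. The array names stored in those files are not documented in this README, so the sketch below does not assume any. It simply lists whatever arrays a file contains, using NumPy's `np.load`. The helper name `summarize_npz` is hypothetical.

```python
import numpy as np


def summarize_npz(path):
    """Return {array_name: (shape, dtype)} for every array in an .npz file."""
    summary = {}
    # allow_pickle keeps its default (False), so files that contain pickled
    # object arrays raise a ValueError instead of being unpickled silently.
    with np.load(path) as data:
        for name in data.files:
            array = data[name]
            summary[name] = (array.shape, str(array.dtype))
    return summary
```

Calling `summarize_npz` on one of the `{epoch}-{hash}-*.npz` files prints nothing by itself. The returned dictionary can be printed or compared across the `wfi` and `wfi_checked` variants to see how visibility checking changes the array sizes.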

If you find our work useful in your research, please consider citing:

@article{NEAT-arxiv,
  author = {Nan Xue and
            Bin Tan and
            Yuxi Xiao and
            Liang Dong and
            Gui{-}Song Xia and
            Tianfu Wu},
  title = {Volumetric Wireframe Parsing from Neural Attraction Fields},
  journal = {CoRR},
  volume = {abs/2307.10206},
  year = {2023},
  url = {https://doi.org/10.48550/arXiv.2307.10206},
  doi = {10.48550/arXiv.2307.10206},
  eprinttype = {arXiv},
  eprint = {2307.10206}
}