______ ______ ______ ______ ______ ______ /\ __ \/\ ___\/\ __ \/\ __ \ /\ __ \/\ ___\ \ \ __/\ \ \___\ \ __ \ \ __/ \ \ __ \ \ _\_ \ \_\ \ \_____\ \_\ \_\ \_\ \ \_\ \_\ \_____\ \/_/ \/_____/\/_/\/_/\/_/ \/_/\/_/\/_____/

Representation Learning for Content-Sensitive Anomaly Detection in Industrial Networks

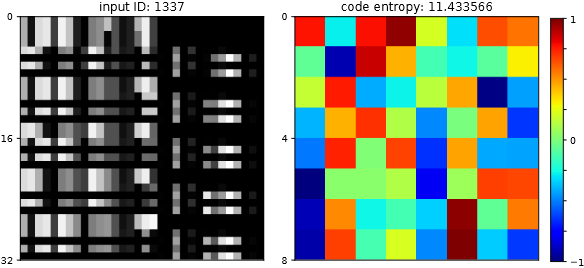

Using a convGRU-based autoencoder, this thesis proposes a framework that learns spatial-temporal aspects of raw network traffic in an unsupervised and protocol-agnostic manner. The learned representations are passed to a subsequent anomaly detection, and the results are compared to the same detection applied without the extracted features. The evaluation showed that, in the context of network intrusion detection, anomaly detection could not be effectively enhanced by applying it to compressed traffic fragments. Yet the trained autoencoder successfully generates a compressed representation (code) of the network traffic that holds spatial and temporal information. Based on the model's residual loss, the autoencoder is also capable of detecting anomalies by itself. Lastly, an approach to model interpretability, layer-wise relevance propagation (LRP), was investigated to identify relevant areas within the raw input data, which are used to enrich the alerts generated by an anomaly detection method.
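The idea of detecting anomalies from the residual loss alone can be illustrated with a minimal sketch. It is not taken from the thesis code: the function names, the quantile-based threshold, and the example values are assumptions chosen for illustration, and the reconstructions would in practice come from the trained autoencoder.

```python
def mse(original, reconstruction):
    """Mean squared error between two equally long sequences of floats."""
    if len(original) != len(reconstruction):
        raise ValueError("length mismatch")
    return sum((a - b) ** 2 for a, b in zip(original, reconstruction)) / len(original)


def fit_threshold(train_errors, quantile=0.99):
    """Return the error value at the given quantile of benign training errors.

    Assumption: the threshold is derived from benign data only; the thesis may
    use a different rule.
    """
    ordered = sorted(train_errors)
    index = min(len(ordered) - 1, int(quantile * len(ordered)))
    return ordered[index]


def is_anomalous(error, threshold):
    """Flag a fragment whose reconstruction error exceeds the threshold."""
    return error > threshold


# Hypothetical residuals observed on benign training fragments.
train_errors = [0.010, 0.012, 0.009, 0.011, 0.013, 0.010, 0.008, 0.012]
threshold = fit_threshold(train_errors)

# Hypothetical fragment with a close and a poor reconstruction.
fragment = [0.0, 0.5, 1.0, 0.5]
good_reconstruction = [0.0, 0.5, 0.9, 0.5]
bad_reconstruction = [0.9, 0.1, 0.2, 0.9]

print(is_anomalous(mse(fragment, good_reconstruction), threshold))
print(is_anomalous(mse(fragment, bad_reconstruction), threshold))
```

A fragment the model reconstructs well stays below the threshold, while a poorly reconstructed one is flagged; the choice of quantile trades false alarms against missed detections.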

Master thesis submitted on 13.11.2021

- tex
  - finished paper
  - talk
    - initial presentation
    - TUC presentation
    - thesis defense
- src
  - pcap -> dataset
  - dataloader
  - pytorch convGRU AE
  - anomaly detection
  - evaluation
  - visualization
  - demo notebooks
  - experiment helper script
  - LRP
- experiments
  - baseline
  - SWAT
  - VOERDE

    thesis
    ├── src
    │   ├── lib                          pcapAE Framework
    │   │   ├── CLI.py...................handle argument passing
    │   │   ├── H5Dataset.py.............data loading
    │   │   ├── ConvRNN.py...............convolutional recurrent cell implementation
    │   │   ├── decoder.py...............decoder logic
    │   │   ├── encoder.py...............encoder logic
    │   │   ├── model.py.................network orchestration
    │   │   ├── pcapAE.py................framework interface
    │   │   ├── earlystopping.py.........training heuristic
    │   │   └── utils.py.................helper functions
    │   └── requirements.txt
    │   │
    │   ├── ad                           scikit-learn AD Framework
    │   │   ├── AD model blueprints
    │   │   ├── AD.py....................AD wrappers
    │   │   └── utils.py.................helper functions
    │   │
    │   ├── main.py......................framework interaction
    │   ├── pcap2ds.py...................convert packet captures to datasets
    │   └── test_install.py..............rudimentary installation test
    │
    ├── exp                              Experiments
    │   ├── data.ods.....................results
    │   ├── dim_redu.py..................experiment script
    │   └── exp_wrapper.sh...............execute experiments
    │
    ├── test                             Jupyter Notebooks
    │   ├── demo.ipynb...................Jupyter Notebook demo
    │   ├── vius_tool.ipynb..............PCAP analysis
    │   └── LRP.ipynb....................LRP Heatmap generation
    │
    ├── tex                              Writing
    │   ├── slides
    │   │   ├── init.pdf..................init presentation
    │   │   ├── mid.pdf...................mid presentation
    │   │   └── end.pdf...................defense presentation
    │   ├── main.pdf......................thesis paper
    │   └── papers.bib....................sources
    │
    ├── LICENSE
    └── README.md

    apt-get install tshark capinfos
    apt-get install python3 pip3

    pip install -r requirements.txt

GPU (CUDA >= 10.2)

    pip install torch torchvision

CPU only

    pip install torch==1.7.1+cpu torchvision==0.8.2+cpu torchaudio==0.7.2 -f https://download.pytorch.org/whl/torch_stable.html

Test the installation:

    cd src/test/
    ./test_install.sh
    ./test_pcapAE.sh [--CUDA]

[+] generate h5 data set from a set of PCAPs

| short | long | default | help |
|---|---|---|---|
| -h | --help | | show this help message and exit |
| -p | --pcap | None | path to pcap or list of pcap paths to process |
| -o | --out | None | path to output dir |
| -m | --modus | None | preprocessing mode (byte, packet, or flow) |
| -g | --ground | None | path to optional packet-level ground truth .csv for evaluation |
| -n | --name | None | optional data set name |
| -t | --threads | 1 | number of threads |
| | --chunk | 1024 | fragment size (must be a square number) |
| | --oneD | | process fragments in one dimension |
| | --force | | force deletion of the output dir path |
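The `--chunk` option expects a square number because each fragment is treated as a two-dimensional block. The following sketch shows one way a raw byte stream could be cut into square fragments. It is an illustration only and not the logic of `pcap2ds.py`: the function name, the zero padding of the last fragment, and the row-major layout are assumptions.

```python
import math


def to_square_fragments(payload: bytes, chunk: int = 1024):
    """Split a byte string into side x side fragments, with side = sqrt(chunk).

    Assumption: an incomplete last fragment is padded with zero bytes.
    """
    side = math.isqrt(chunk)
    if side * side != chunk:
        raise ValueError("chunk must be a square number")

    fragments = []
    for start in range(0, len(payload), chunk):
        # Take up to `chunk` bytes and pad the tail with zeros if needed.
        block = payload[start:start + chunk].ljust(chunk, b"\x00")
        # Row-major reshape into a list of `side` rows with `side` byte values each.
        rows = [list(block[r * side:(r + 1) * side]) for r in range(side)]
        fragments.append(rows)
    return fragments


# 20 hypothetical payload bytes cut into 4x4 fragments (chunk = 16).
frags = to_square_fragments(bytes(range(20)), chunk=16)
print(len(frags), frags[1][0], frags[1][1])
```

With `chunk=1024`, the same function yields 32x32 fragments, which matches the `32**2` fragment size listed in the experiment parameters.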

    python3 pcap2ds.py -p <some.pcap> -o <out_dir> --chunk 1024 --modus byte [-g <ground_truth.csv>]

[+] pcapAE API wrapper

train an autoencoder with a given h5 data set

| short | long | default | help | options |
|---|---|---|---|---|
| -h | --help | | show this help message and exit | |
| -t | --train | | path to dataset to learn | |
| -v | --vali | | path to dataset to validate | |
| -f | --fit | | path to data set to fit AD | |
| -p | --predict | | path to data to run prediction on | |
| -m | --model | | path to model to retrain or evaluate | |
| -b | --batch_size | 128 | number of samples per pass | [2,32,512,1024] |
| -lr | --learn_rate | 0.001 | starting learning rate | (0, 1] |
| -fi | --finput | 1 | number of input frames | [1,3,5] |
| -o | --optim | adamW | gradient descent strategy | [adamW, adam, sgd] |
| -c | --clipping | 10.0 | gradient clip value | [0,10] |
| | --fraction | 1 | fraction of data to process | (0, 1] |
| -w | --workers | 0 | number of data loader worker threads | [0, 8] |
| | --loss | MSE | loss criterion | [MSE] |
| | --scheduler | cycle | learn rate scheduler | [step, cycle, plateau] |
| | --cell | GRU | network cell type | [GRU, LSTM] |
| | --epochs | 144 | number of epochs | |
| | --seed | 1994 | seed to fix randomness | |
| | --noTensorboard | | do not start tensorboard | |
| | --cuda | | enable GPU support | |
| | --verbose | | verbose output | |
| | --cache | | cache dataset to GPU | |
| | --retrain | | retrain given model | |
| | --name | | experiment name prefix | |
| | --AD | | use AD framework | |
| | --grid_search | | use AD gridsearch | |
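For readers who want to script against these options, the sketch below declares a small subset of them with `argparse`, including a range check for values documented as lying in (0, 1]. It is a hypothetical reconstruction and not the content of `CLI.py`; the helper `unit_interval` and the file names `train.h5` and `vali.h5` are assumptions.

```python
import argparse


def unit_interval(text):
    """Parse a float and require it to lie in the half-open interval (0, 1]."""
    value = float(text)
    if not 0.0 < value <= 1.0:
        raise argparse.ArgumentTypeError(f"{text} is not in (0, 1]")
    return value


def build_parser():
    """Declare an illustrative subset of the options listed in the table."""
    parser = argparse.ArgumentParser(description="illustrative subset of the pcapAE options")
    parser.add_argument("-t", "--train", help="path to dataset to learn")
    parser.add_argument("-v", "--vali", help="path to dataset to validate")
    parser.add_argument("-b", "--batch_size", type=int, default=128,
                        help="number of samples per pass")
    parser.add_argument("-lr", "--learn_rate", type=unit_interval, default=0.001,
                        help="starting learning rate")
    parser.add_argument("-o", "--optim", default="adamW",
                        choices=["adamW", "adam", "sgd"],
                        help="gradient descent strategy")
    parser.add_argument("--cell", default="GRU", choices=["GRU", "LSTM"],
                        help="network cell type")
    parser.add_argument("--fraction", type=unit_interval, default=1.0,
                        help="fraction of data to process")
    parser.add_argument("--cuda", action="store_true", help="enable GPU support")
    return parser


# Hypothetical invocation; the file names are placeholders.
args = build_parser().parse_args(["--train", "train.h5", "--vali", "vali.h5", "--cuda"])
print(args.train, args.batch_size, args.learn_rate, args.optim, args.cuda)
```

Options that are not passed fall back to the defaults from the table, so the call above trains with a batch size of 128 and the adamW optimizer.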

    # pcapAE training
    python3 main.py --train <TRAIN_SET_PATH> --vali <VALI_SET_PATH> [--cuda]

    # pcapAE data compression (pcap -> _codes_)
    python3 main.py --model <PCAPAE_MODEL> --fit <FIT_SET_PATH> --predict <PREDICT_SET_PATH> [--cuda]

    # shallow ML anomaly detection training
    python3 main.py --AD --model *.yaml --fit <REDU_FIT_SET_PATH> [--predict <REDU_PREDICT_SET_PATH>] [--grid_search]

    # test trained AD on new data
    python3 main.py --model <AD_MODEL_PATH> --predict <REDU_SET_PATH>

    # naive baseline
    python3 main.py --baseline pcapAE --model <PCAPAE_MODEL> --predict <PREDICT_SET_PATH>

    # raw baseline
    python3 main.py --baseline noDL --AD --model ../test/blueprints/base_if.yaml --fit <FIT_SET_PATH> --vali <VALI_SET_PATH> --predict <PREDICT_SET_PATH>

- Data
  - dataset = [VOERDE, SWaT]
  - preprocessing = [byte, packet, flow]
  - fragment size = [16**2, 32**2]
  - sequence length = [1, 3, 5]
- Representation Learning
  - optimizer = [adamW, adam, SGD]
  - scheduler = [step, cycle, plateau]
  - learn rate = [.1, .001]
  - cell = [GRU, LSTM]
  - loss function = [MSE, BCE]
  - batch size = [2, 512, 1024]
  - max epochs = [144]
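The parameter lists above span a sizeable search space. As a rough illustration, the sketch below enumerates every combination with `itertools.product`. Whether all combinations were actually run is not stated here, and the dictionary keys and the `expand` helper are names chosen for this example, not identifiers from `exp_wrapper.sh` or `dim_redu.py`.

```python
from itertools import product

# Values copied from the parameter lists; key names are illustrative.
DATA_GRID = {
    "dataset": ["VOERDE", "SWaT"],
    "preprocessing": ["byte", "packet", "flow"],
    "fragment_size": [16 ** 2, 32 ** 2],
    "sequence_length": [1, 3, 5],
}

MODEL_GRID = {
    "optimizer": ["adamW", "adam", "SGD"],
    "scheduler": ["step", "cycle", "plateau"],
    "learn_rate": [0.1, 0.001],
    "cell": ["GRU", "LSTM"],
    "loss": ["MSE", "BCE"],
    "batch_size": [2, 512, 1024],
    "max_epochs": [144],
}


def expand(grid):
    """Return one dict per combination of the grid's values."""
    keys = list(grid)
    return [dict(zip(keys, values)) for values in product(*grid.values())]


data_configs = expand(DATA_GRID)
model_configs = expand(MODEL_GRID)

print(len(data_configs), len(model_configs), len(data_configs) * len(model_configs))
print(data_configs[0])
```

The full cross product is large, which is one reason to narrow the grid or sample from it before launching experiments.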

- Debian 10 | Intel i5-6200U ~200 CUDA Cores

- Ubuntu 18.04 | AMD EPYC 7552 ~1500 CUDA Cores

- CentOS 7.9 | GTX 1080 2560 CUDA Cores

- Ubuntu 20.04 | RTX 3090 10496 CUDA Cores