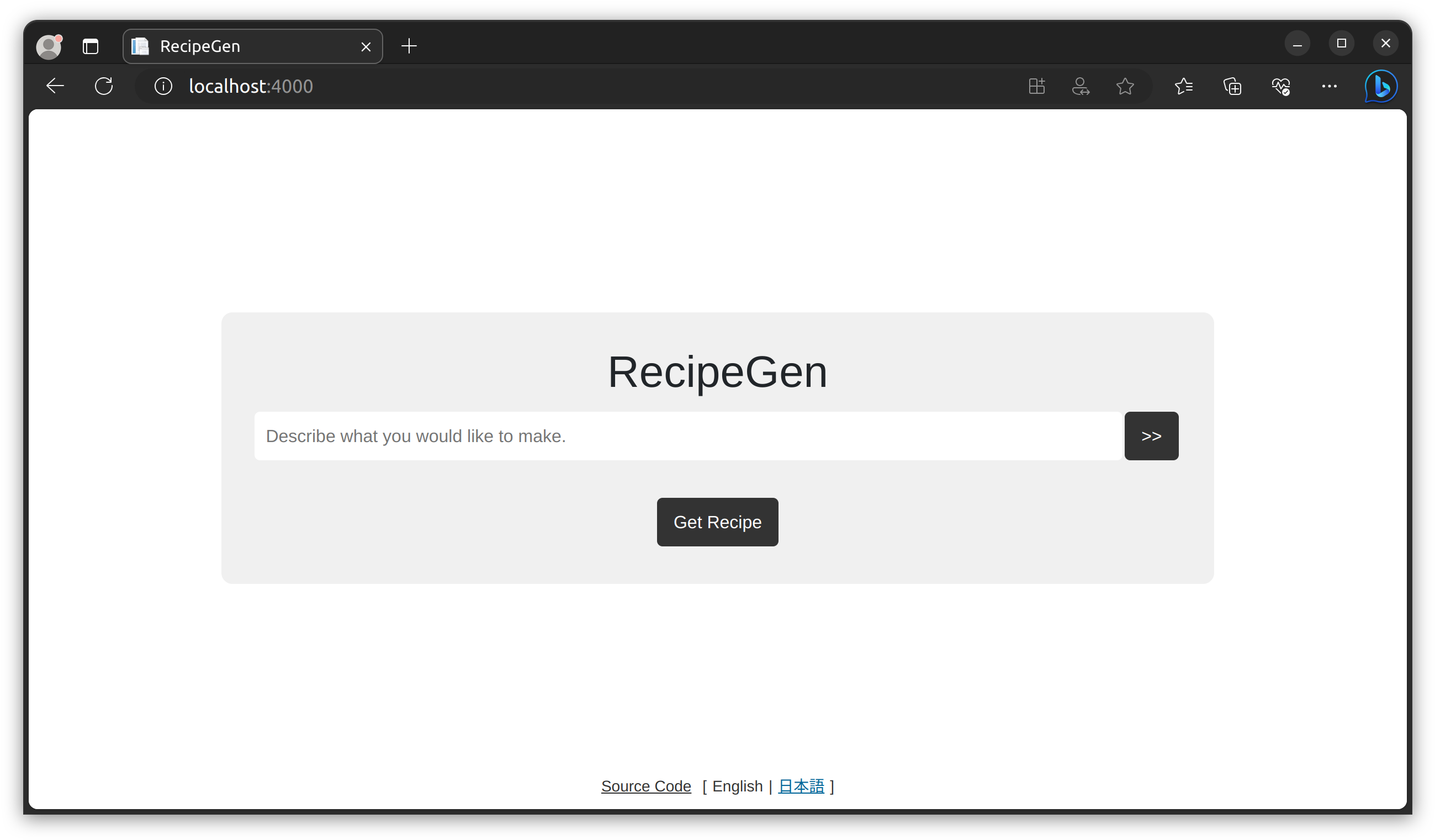

Welcome to RecipeGen, an open-source application powered by Generative AI that generates innovative and unique recipes based on your descriptions. Whether you're craving a "delicious crispy salad", need an "appetizer for a pirate-themed party", or wish to blend two different cuisines, RecipeGen is here to assist.

There is a working version of RecipeGen with a few extra features running at YumPop.ai.

This project is implemented using an ASP.NET Core 7 Web API and a React client. It can use either the OpenAI or the Azure OpenAI service.

Should you encounter any issues getting started, please create an issue.

Before you begin, ensure you have met the following requirements:

- You have obtained a key from either OpenAI or Azure OpenAI services.

- You have installed the latest version of Docker and Visual Studio Code (if not using Codespaces).

- You have a basic understanding of ASP.NET Core, React, and Generative AI.

The most straightforward way to run the application is with Docker:

OpenAI

```bash
docker run -e OpenAiKey=<your OpenAI key> -p 8080:8080 ghcr.io/drewby/recipegen:main
```

Azure OpenAI

```bash
docker run -e AzureOpenAIUri=<your Azure OpenAI Uri> -e AzureOpenAiKey=<your Azure OpenAI key> -e OpenAIModelName=<your model deployment name> -p 8080:8080 ghcr.io/drewby/recipegen:main
```

After launching, open your browser and navigate to http://localhost:8080 to start creating recipes.

You can also run the application in the provided devcontainer using a tool like Visual Studio Code, or in GitHub Codespaces. This will allow you to modify parts of the application and experiment.

If you create a GitHub Codespace, you can skip the first two steps below.

1. Fork and clone the repository:

   ```bash
   git clone https://github.com/drewby/RecipeGen.git
   ```

2. Open the project in Visual Studio Code: Upon opening, you will be prompted to "Reopen in Container". This will initiate the build of the Docker container.

3. Set Environment Variables: Populate either the `OpenAIKey` value or the `AzureOpenAIUri` and `AzureOpenAIKey` values in a new `.env` file in the root of the project. For Azure OpenAI, also define `OpenAIModelName`, which must match the name you gave your model deployment. See `.env.sample` for reference.

4. Start the Web API: Press `F5` (start with debugging) or `Ctrl-F5` (run without debugging).

5. Start the React client: Open a terminal with `` Ctrl-Shift-` ``. Change directories to `src/app`, run `npm install`, and then run `npm start`.

Interacting with RecipeGen is simple and intuitive.

- Get Started: Click `Get Recipe` to let RecipeGen generate a random recipe for you.

- Customization: You can customize the recipe by typing any description in the input box. This could be a specific dish you have in mind or a general idea of what you want to eat.

- Advanced Options: For more specific requirements, click the `>> options` button. Here, you can:

  - Add Ingredients: Specify the ingredients you want in your recipe.

  - Exclude Ingredients: List any ingredients you want to avoid.

  - Specify Allergies: Indicate any food allergies.

  - Diet Restrictions: Indicate any dietary restrictions, such as vegetarian, vegan, or gluten-free.

  - World Cuisines: Select specific cuisines, such as Italian, Mexican, or Chinese.

  - Meal Types: Specify the type of meal, such as breakfast, lunch, dinner, or snack.

  - Dish Types: Choose the type of dish you want to cook, such as dessert, main course, or appetizer.
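To make the advanced options concrete, here is a minimal TypeScript sketch of how a client might model them and fold them into a single text description for the model. The `RecipeOptions` interface, its field names, and the `describeOptions` helper are hypothetical illustrations, not RecipeGen's actual client types or prompt format.

```typescript
// Hypothetical shape for the advanced options; RecipeGen's real client types may differ.
interface RecipeOptions {
  description?: string;
  addIngredients?: string[];
  excludeIngredients?: string[];
  allergies?: string[];
  dietRestrictions?: string[];
  cuisines?: string[];
  mealTypes?: string[];
  dishTypes?: string[];
}

// Builds one labeled line per non-empty option, skipping blank entries.
function describeOptions(options: RecipeOptions): string {
  const lines: string[] = [];
  const add = (label: string, values?: string[]): void => {
    const cleaned = (values || []).map((v) => v.trim()).filter((v) => v.length > 0);
    if (cleaned.length > 0) {
      lines.push(`${label}: ${cleaned.join(", ")}`);
    }
  };
  if (options.description && options.description.trim().length > 0) {
    lines.push(`Description: ${options.description.trim()}`);
  }
  add("Include ingredients", options.addIngredients);
  add("Exclude ingredients", options.excludeIngredients);
  add("Allergies", options.allergies);
  add("Diet restrictions", options.dietRestrictions);
  add("Cuisines", options.cuisines);
  add("Meal types", options.mealTypes);
  add("Dish types", options.dishTypes);
  return lines.join("\n");
}

const example = describeOptions({
  description: "delicious crispy salad",
  excludeIngredients: ["olives"],
  dietRestrictions: ["vegetarian", " "],
  cuisines: ["Mexican"],
});
console.log(example);
// Description: delicious crispy salad
// Exclude ingredients: olives
// Diet restrictions: vegetarian
// Cuisines: Mexican
```

Dropping blank entries keeps stray empty inputs from adding noise to the prompt.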

Below are the configuration options for RecipeGen. These can be set either in the `appsettings.json` file or as environment variables in the `.env` file.

- `Logging.LogLevel.Default`: Controls the default log level for the application (as an environment variable, ASP.NET Core expects `Logging__LogLevel__Default`).
- `AllowedHosts`: Defines the hosts allowed to access the application. Setting this to `"*"` permits all hosts.
- `OpenAIKey`: Your OpenAI key, if you're using the OpenAI service directly.
- `AzureOpenAIUri`: Your Azure OpenAI URI, if you're using the Azure OpenAI service.
- `AzureOpenAIKey`: Your Azure OpenAI key, if you're using the Azure OpenAI service.
- `PromptFile`: The path and filename of the prompt template.
- `OpenAIModelName`: The model to use. For Azure OpenAI users, this is the name given to your model deployment. For OpenAI service users, this is the actual model name (e.g., `gpt-3.5-turbo`).
- `OpenAIMaxTokens`: The maximum number of tokens the model will generate for each completion.
- `OpenAIFrequencyPenalty`: Positive values penalize tokens in proportion to how often they have already appeared in the output, reducing verbatim repetition (range: -2.0 to 2.0).
- `OpenAITemperature`: Controls the randomness of the model's output. Higher values generate more random output (range: 0 to 2).
- `OpenAIPresencePenalty`: Positive values penalize any token that has already appeared in the output, encouraging the model to introduce new topics (range: -2.0 to 2.0).
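The documented ranges can be checked before a request is sent. This TypeScript sketch validates a hypothetical `ModelSettings` object against the ranges listed above (temperature 0 to 2, both penalties -2.0 to 2.0) and additionally assumes max tokens must be a positive integer; the type and function names are illustrative and not part of RecipeGen.

```typescript
// Hypothetical settings object mirroring the OpenAI* configuration keys.
interface ModelSettings {
  maxTokens: number;
  temperature: number;
  frequencyPenalty: number;
  presencePenalty: number;
}

// Returns human-readable problems; an empty array means the settings look valid.
function validateSettings(s: ModelSettings): string[] {
  const errors: string[] = [];
  const inRange = (name: string, value: number, min: number, max: number): void => {
    if (isNaN(value) || value < min || value > max) {
      errors.push(`${name} must be between ${min} and ${max} (got ${value})`);
    }
  };
  // Assumption: max tokens must be a finite positive integer.
  if (!isFinite(s.maxTokens) || s.maxTokens <= 0 || Math.floor(s.maxTokens) !== s.maxTokens) {
    errors.push(`OpenAIMaxTokens must be a positive integer (got ${s.maxTokens})`);
  }
  inRange("OpenAITemperature", s.temperature, 0, 2);
  inRange("OpenAIFrequencyPenalty", s.frequencyPenalty, -2, 2);
  inRange("OpenAIPresencePenalty", s.presencePenalty, -2, 2);
  return errors;
}

console.log(validateSettings({ maxTokens: 1000, temperature: 0.7, frequencyPenalty: 0, presencePenalty: 0 }));
// []
console.log(validateSettings({ maxTokens: 1000, temperature: 2.5, frequencyPenalty: 0, presencePenalty: -3 }));
```

Collecting every problem instead of stopping at the first one makes a misconfigured `.env` quicker to fix.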

Note: If both OpenAIKey and AzureOpenAIKey are provided, the application will default to using Azure.
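The precedence rule in the note can be expressed as a small resolution function. This TypeScript sketch assumes configuration arrives as a flat key/value record, as it would from environment variables. Because ASP.NET Core configuration keys are case-insensitive, the lookup ignores case too (so `OpenAiKey` from the Docker example still matches). The `resolveProvider` and `getSetting` functions and the `ProviderConfig` type are hypothetical, and requiring `AzureOpenAIUri` and `OpenAIModelName` for Azure is an assumption based on the setup steps.

```typescript
type RawConfig = { [key: string]: string | undefined };

type ProviderConfig =
  | { provider: "azure"; uri: string; key: string; model: string }
  | { provider: "openai"; key: string; model?: string };

// Case-insensitive lookup; empty or whitespace-only values count as unset.
function getSetting(config: RawConfig, name: string): string | undefined {
  const wanted = name.toLowerCase();
  const keys = Object.keys(config);
  for (let i = 0; i < keys.length; i++) {
    if (keys[i].toLowerCase() === wanted) {
      const value = config[keys[i]];
      if (value !== undefined && value.trim().length > 0) {
        return value.trim();
      }
    }
  }
  return undefined;
}

function resolveProvider(config: RawConfig): ProviderConfig {
  const azureKey = getSetting(config, "AzureOpenAIKey");
  if (azureKey) {
    // Azure takes precedence when its key is present, even if OpenAIKey is also set.
    const uri = getSetting(config, "AzureOpenAIUri");
    const model = getSetting(config, "OpenAIModelName");
    if (!uri || !model) {
      throw new Error("Azure OpenAI needs AzureOpenAIUri and OpenAIModelName (the deployment name)");
    }
    return { provider: "azure", uri: uri, key: azureKey, model: model };
  }
  const openAiKey = getSetting(config, "OpenAIKey");
  if (openAiKey) {
    return { provider: "openai", key: openAiKey, model: getSetting(config, "OpenAIModelName") };
  }
  throw new Error("Set either OpenAIKey or AzureOpenAIKey");
}

const chosen = resolveProvider({
  OpenAiKey: "sk-example",
  AzureOpenAIKey: "azure-example",
  AzureOpenAIUri: "https://example.openai.azure.com/",
  OpenAIModelName: "my-deployment",
});
console.log(chosen.provider); // azure
```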

Refer to the .env.sample in the root of the project for a reference configuration.

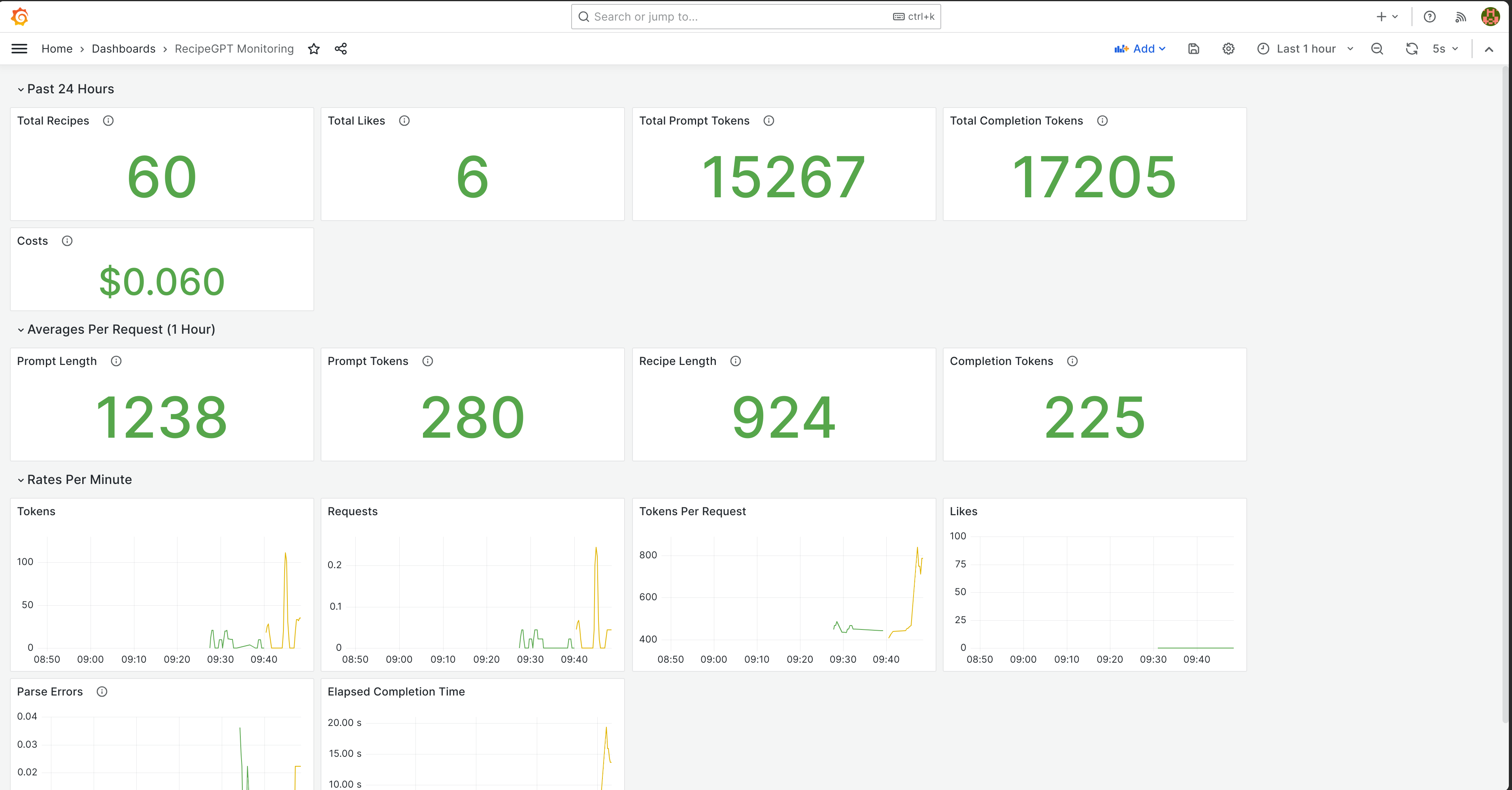

Observability is a crucial attribute of RecipeGen, allowing us to monitor and understand the application's behavior, user interactions, and the performance of the underlying Generative AI model. It offers real-time insights into the system, supporting data-driven decisions for continuous improvement.

The dev container includes an instance of Prometheus at http://localhost:9090, which gathers metrics from the running application. There is also an instance of Grafana at http://localhost:3000 with a built-in dashboard for visualizing the metrics. The Grafana username and password are both `admin`.

Here's an overview of the key metrics set up for RecipeGen, their unit of measurement, and the insights they provide:

- `recipegen_recipecount` (count): Counts the total number of recipes generated, indicating the system's overall usage.

- `recipegen_promptlength` (bytes): Tracks the length of the prompt. It's useful for studying the complexity of requests and understanding user interactions. The prompt length is affected by the prompt template as well as the number of inputs from the user.

- `recipegen_recipelength` (bytes): Measures the length of the generated recipe. This metric can provide insight into the complexity of the generated recipes and how changes to the prompt affect that complexity.

- `recipegen_recipetime` (ms): Measures the time taken to generate a recipe. It's an essential metric for performance analysis and optimization.

- `recipegen_completiontokens` (count): Counts the number of tokens generated as part of the chat completion.

- `recipegen_prompttokens` (count): Counts the number of tokens in the prompt sent to the model, providing a measure of the complexity of the input.

- `recipegen_likedrecipes` (count): Counts the number of recipes that users liked, offering a useful proxy for user satisfaction and the quality of the model's outputs for a given prompt template.

- `recipegen_dislikedrecipes` (count): Counts the number of recipes that users disliked.

- `recipegen_parseerror` (count): Counts the number of errors that occur when parsing the response from the OpenAI model.

Along with the above, we include several tags/attributes with each metric.

- `maxtokens`
- `frequencypenalty`
- `presencepenalty`
- `temperature`
- `model`
- `prompttemplate`
- `finishreason`
- `language`
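To illustrate how the metrics and tags fit together, here is a TypeScript sketch of the measurements one recipe request might produce. RecipeGen records its metrics in its own code; the `RecipeTags` and `Measurement` types, the `buildMeasurements` helper, and all tag values below are hypothetical. The sketch also shows why byte lengths differ from character counts, assuming the text is measured as UTF-8: accented characters take two bytes each.

```typescript
// Tag names as listed above; the values used below are made up for illustration.
interface RecipeTags {
  maxtokens: number;
  frequencypenalty: number;
  presencepenalty: number;
  temperature: number;
  model: string;
  prompttemplate: string;
  finishreason: string;
  language: string;
}

interface Measurement {
  name: string;
  value: number;
  tags: RecipeTags;
}

// UTF-8 byte length computed by hand (assumption: UTF-8 is the encoding being measured).
function utf8ByteLength(text: string): number {
  let bytes = 0;
  for (let i = 0; i < text.length; i++) {
    const code = text.charCodeAt(i);
    if (code < 0x80) {
      bytes += 1;
    } else if (code < 0x800) {
      bytes += 2;
    } else if (code >= 0xd800 && code <= 0xdbff && i + 1 < text.length) {
      const next = text.charCodeAt(i + 1);
      if (next >= 0xdc00 && next <= 0xdfff) {
        bytes += 4; // surrogate pair: one code point outside the BMP
        i++;
      } else {
        bytes += 3;
      }
    } else {
      bytes += 3;
    }
  }
  return bytes;
}

// Hypothetical helper: turns one completed request into per-request measurements.
function buildMeasurements(prompt: string, recipe: string, elapsedMs: number,
  promptTokens: number, completionTokens: number, tags: RecipeTags): Measurement[] {
  return [
    { name: "recipegen_recipecount", value: 1, tags },
    { name: "recipegen_promptlength", value: utf8ByteLength(prompt), tags },
    { name: "recipegen_recipelength", value: utf8ByteLength(recipe), tags },
    { name: "recipegen_recipetime", value: elapsedMs, tags },
    { name: "recipegen_prompttokens", value: promptTokens, tags },
    { name: "recipegen_completiontokens", value: completionTokens, tags },
  ];
}

const tags: RecipeTags = {
  maxtokens: 1000, frequencypenalty: 0, presencepenalty: 0, temperature: 0.7,
  model: "gpt-3.5-turbo", prompttemplate: "v2", finishreason: "stop", language: "en",
};
// "Crème brûlée": 12 characters, 15 UTF-8 bytes.
const points = buildMeasurements("Cr\u00e8me br\u00fbl\u00e9e", "Whisk, bake, and caramelize.", 2350, 120, 640, tags);
console.log(points[1].name, points[1].value); // recipegen_promptlength 15
```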

With these metrics, we can build various visualizations and monitoring dashboards. An example is below. Note that the visualizations showing both green and yellow reflect a change in prompt template.

Of course, these visualizations will become more interesting with more usage of the service.

Based on these metrics, we can make data-driven decisions to optimize how we use the Large Language Model. For instance, we can adjust parameters such as temperature, frequency penalty, or presence penalty and observe whether they affect the complexity and quality of the generated recipes. If the `finishreason` tag frequently shows `length`, indicating that the model often hits its token limit before finishing, we may consider increasing the `maxtokens` limit (the `OpenAIMaxTokens` setting).
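As a rough illustration of the `finishreason` check, the TypeScript sketch below takes completion counts grouped by `finishreason` (for example, `recipegen_recipecount` split by that tag) and suggests a larger token limit when the share of `length` completions crosses a threshold. The function names, the 5% threshold, and the 25% increase are arbitrary placeholders, not recommendations from the project.

```typescript
// Completion counts keyed by finishreason tag value, e.g. { stop: 950, length: 50 }.
type FinishReasonCounts = { [reason: string]: number };

// Fraction of completions that stopped because they hit the token limit.
function truncationRate(counts: FinishReasonCounts): number {
  let total = 0;
  const reasons = Object.keys(counts);
  for (let i = 0; i < reasons.length; i++) {
    total += counts[reasons[i]];
  }
  return total === 0 ? 0 : (counts["length"] || 0) / total;
}

// Hypothetical policy: grow maxtokens by `growth` when truncation exceeds `threshold`.
function suggestMaxTokens(current: number, counts: FinishReasonCounts, threshold = 0.05, growth = 1.25): number {
  return truncationRate(counts) > threshold ? Math.ceil(current * growth) : current;
}

const observed: FinishReasonCounts = { stop: 900, length: 100 };
console.log(truncationRate(observed)); // 0.1
console.log(suggestMaxTokens(1000, observed)); // 1250
```

A larger limit also raises the worst-case completion token count, so it is worth watching `recipegen_completiontokens` after such a change.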

Furthermore, monitoring the rate of recipe generation (recipegen_recipecount) and the rate of token usage (recipegen_prompttokens + recipegen_completiontokens) is important due to the rate limits imposed by the OpenAI service on the number of Requests Per Minute (RPM) and Tokens Per Minute (TPM). If we approach these limits, we may need to adjust our service usage or consider scaling up.
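Prometheus counters only increase (apart from resets when the process restarts), so a per-minute rate comes from the difference between two samples. This TypeScript sketch derives RPM from `recipegen_recipecount` and TPM from the summed token counters, then compares them to quota limits. The `CounterSample` shape, the sample values, and the limits are hypothetical placeholders; real limits depend on your model and subscription. Inside Prometheus you would normally use `rate()` or `increase()` for the same calculation.

```typescript
// Cumulative counter values captured at one point in time (hypothetical shape).
interface CounterSample {
  timestampMs: number;
  recipeCount: number;      // recipegen_recipecount
  promptTokens: number;     // recipegen_prompttokens
  completionTokens: number; // recipegen_completiontokens
}

interface UsageRates {
  rpm: number;
  tpm: number;
}

// Per-minute rates between two samples; assumes no counter reset happened in between.
function perMinuteRates(earlier: CounterSample, later: CounterSample): UsageRates {
  const minutes = (later.timestampMs - earlier.timestampMs) / 60000;
  if (minutes <= 0) {
    throw new Error("the later sample must come after the earlier sample");
  }
  const requests = later.recipeCount - earlier.recipeCount;
  const tokens = later.promptTokens + later.completionTokens
    - (earlier.promptTokens + earlier.completionTokens);
  return { rpm: requests / minutes, tpm: tokens / minutes };
}

// Warns when usage exceeds `headroom` (default 80%) of a limit.
function checkLimits(rates: UsageRates, rpmLimit: number, tpmLimit: number, headroom = 0.8): string[] {
  const pct = Math.round(headroom * 100);
  const warnings: string[] = [];
  if (rates.rpm > rpmLimit * headroom) warnings.push(`RPM ${rates.rpm} is above ${pct}% of ${rpmLimit}`);
  if (rates.tpm > tpmLimit * headroom) warnings.push(`TPM ${rates.tpm} is above ${pct}% of ${tpmLimit}`);
  return warnings;
}

const t0: CounterSample = { timestampMs: 0, recipeCount: 100, promptTokens: 20000, completionTokens: 60000 };
const t1: CounterSample = { timestampMs: 120000, recipeCount: 160, promptTokens: 27000, completionTokens: 81000 };
const rates = perMinuteRates(t0, t1);
console.log(rates); // { rpm: 30, tpm: 14000 }
console.log(checkLimits(rates, 60, 15000));
```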

We can also estimate our real-time costs by multiplying token usage by the per-token pricing published for the OpenAI or Azure OpenAI service.
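The estimate is a weighted sum: prompt tokens times the input price plus completion tokens times the output price, with prices typically quoted per 1,000 (or per 1,000,000) tokens. The TypeScript sketch below uses made-up prices purely to show the arithmetic; `TokenPricing` and `estimateCost` are hypothetical names, and current rates for your model should come from the OpenAI or Azure OpenAI pricing pages.

```typescript
// Prices in US dollars per 1,000 tokens. These values are placeholders, not real rates.
interface TokenPricing {
  promptPer1K: number;
  completionPer1K: number;
}

// cost = promptTokens / 1000 * promptPrice + completionTokens / 1000 * completionPrice
function estimateCost(promptTokens: number, completionTokens: number, pricing: TokenPricing): number {
  return (promptTokens / 1000) * pricing.promptPer1K + (completionTokens / 1000) * pricing.completionPer1K;
}

const placeholderPricing: TokenPricing = { promptPer1K: 0.001, completionPer1K: 0.002 };
// Token totals over some window, e.g. the increase in recipegen_prompttokens and recipegen_completiontokens.
const cost = estimateCost(27000, 81000, placeholderPricing);
console.log(cost.toFixed(4)); // 0.1890
// Dividing by the recipes generated in the same window (60 here) gives an approximate cost per recipe.
const perRecipe = cost / 60;
console.log(perRecipe);
```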

The observability features in RecipeGen facilitate a continuous feedback loop that can help us improve system performance and the user experience.

Interested in contributing to RecipeGen? Follow the guidelines provided in the CONTRIBUTING.md file.

This project uses the MIT license.