FlashOcc on UniOcc and RenderOcc

FlashOcc on UniOcc

| Config | Train Times | mIoU | FPS (Hz) | FLOPs (G) | Params (M) | Model | Log |
|---|---|---|---|---|---|---|---|
| UniOcc-R50-256x704 | - | - | - | - | - | - | - |
| M4:FO(UniOcc)-R50-256x704 | - | - | - | - | - | - | - |
| UniOcc-R50-4D-Stereo-256x704 | - | 38.46 | - | - | - | baidu | baidu |
| M5:FO(UniOcc)-R50-4D-Stereo-256x704 | - | 38.76 | - | - | - | baidu | baidu |
| Additional:FO(UniOcc)-R50-4D-Stereo-256x704(wo-nerfhead) | - | 38.44 | - | - | - | baidu | baidu |
| UniOcc-STBase-4D-Stereo-512x1408 | - | - | - | - | - | - | - |
| M6:FO(UniOcc)-STBase-4D-Stereo-512x1408 | - | - | - | - | - | - | - |

FPS is measured with TensorRT on an RTX 3090 at FP16 precision. Please refer to Tab. 2 in the paper for the detailed model settings of each M-number.
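The mIoU column averages the per-class intersection-over-union between predicted and ground-truth occupancy labels. Below is a minimal NumPy sketch computed from a confusion matrix. The function name `mean_iou`, the `ignore_index` convention, and skipping classes absent from both prediction and ground truth are assumptions for illustration; the benchmark's exact evaluation protocol (class list, visibility masking) is not described here.

```python
import numpy as np


def mean_iou(pred, gt, num_classes, ignore_index=255):
    """Hypothetical helper: mIoU from integer label arrays of any shape.

    Labels are assumed to lie in [0, num_classes); voxels whose ground truth
    equals ignore_index (an assumed convention) are excluded.
    """
    pred = np.asarray(pred).ravel()
    gt = np.asarray(gt).ravel()
    valid = gt != ignore_index
    pred, gt = pred[valid], gt[valid]
    # Confusion matrix: rows = ground-truth class, columns = predicted class
    cm = np.bincount(num_classes * gt + pred, minlength=num_classes ** 2)
    cm = cm.reshape(num_classes, num_classes)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # predicted as class c but labeled otherwise
    fn = cm.sum(axis=1) - tp   # labeled class c but predicted otherwise
    denom = tp + fp + fn
    # Classes that never occur in either array get NaN and are skipped (assumption)
    iou = np.where(denom > 0, tp / np.maximum(denom, 1), np.nan)
    return float(np.nanmean(iou)), iou
```

Building the confusion matrix once with `np.bincount` lets all per-class IoUs be read off in a single pass, which scales to the millions of voxels in a 3D occupancy grid.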

Many thanks to these excellent open source projects:

Related Projects:

FlashOcc on RenderOcc

README from the original RenderOcc

(Visualization of RenderOcc's prediction, which is supervised only with 2D labels.)

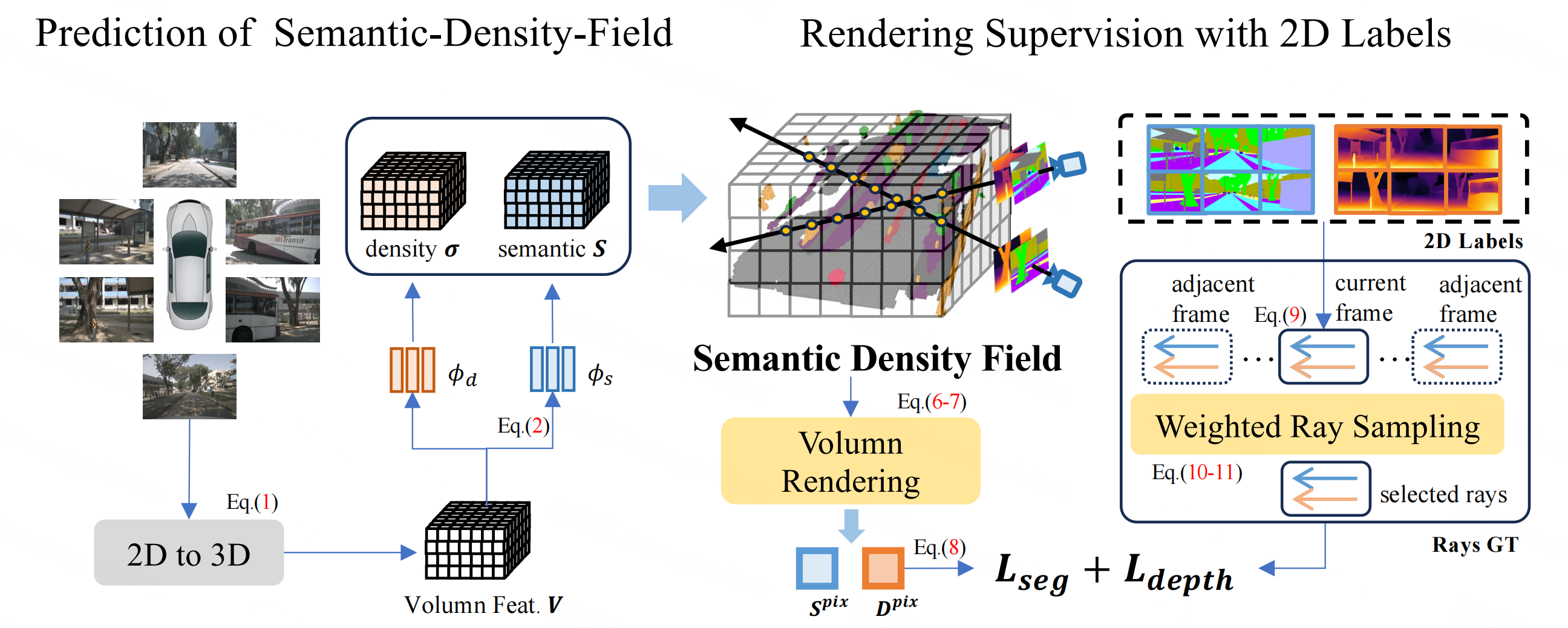

RenderOcc is a novel paradigm for training vision-centric 3D occupancy models using only 2D labels. Specifically, we extract a NeRF-style 3D volume representation from multi-view images and employ volume rendering techniques to produce 2D renderings, which enables 3D supervision directly from 2D semantic and depth labels.
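The volume-rendering step described above can be sketched for a single camera ray. This is a minimal NumPy illustration of standard NeRF-style compositing, with weights w_i = T_i(1 - exp(-sigma_i * delta_i)), and not RenderOcc's actual implementation. The helper names `render_ray` and `rendering_losses`, the handling of the last sample interval, and the choice of negative log-likelihood and L1 losses are assumptions made for illustration.

```python
import numpy as np


def render_ray(sigma, t, sem_probs):
    """Hypothetical helper: NeRF-style compositing of N samples on one ray.

    sigma:     (N,) non-negative densities at the samples
    t:         (N,) increasing sample distances from the camera, N >= 2
    sem_probs: (N, C) per-sample semantic class probabilities
    """
    sigma = np.asarray(sigma, dtype=float)
    t = np.asarray(t, dtype=float)
    sem_probs = np.asarray(sem_probs, dtype=float)
    # Interval lengths between samples; the last interval repeats the previous
    # one (an assumption; implementations differ here).
    delta = np.diff(t)
    delta = np.append(delta, delta[-1])
    # Opacity of each interval: alpha_i = 1 - exp(-sigma_i * delta_i)
    alpha = 1.0 - np.exp(-sigma * delta)
    # Transmittance T_i = prod_{j<i} (1 - alpha_j): probability the ray reaches sample i
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alpha[:-1]]))
    weights = trans * alpha              # w_i = T_i * alpha_i, sums to at most 1
    depth = np.sum(weights * t)          # expected ray-termination distance
    semantics = weights @ sem_probs      # (C,) rendered class scores for the pixel
    return weights, depth, semantics


def rendering_losses(sigma, t, sem_probs, sem_label, depth_label, eps=1e-8):
    """Hypothetical 2D supervision for one pixel (assumed NLL + L1 losses)."""
    _, depth, semantics = render_ray(sigma, t, sem_probs)
    sem_loss = -np.log(semantics[sem_label] + eps)  # against the pixel's 2D semantic label
    depth_loss = abs(depth - depth_label)           # against the pixel's 2D depth label
    return sem_loss, depth_loss
```

For a ray whose first samples are empty and whose third sample is dense, nearly all the weight lands on that third sample, so the rendered depth is close to its distance and the rendered class matches its semantics. In an autodiff framework such as PyTorch, gradients of these 2D losses would flow back to the per-sample densities and semantics, which is how 2D labels can supervise a 3D volume.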

Train

```shell
# Train RenderOcc with 8 GPUs
./tools/dist_train.sh ./configs/renderocc/renderocc-7frame.py 8
```

Evaluation

```shell
# Eval RenderOcc with 8 GPUs
./tools/dist_test.sh ./configs/renderocc/renderocc-7frame.py ./path/to/ckpts.pth 8
```

Visualization

```shell
# TODO
```

| Method | Backbone | 2D-to-3D | Lr Schd | GT | mIoU | Config | Log | Download |
|---|---|---|---|---|---|---|---|---|
| RenderOcc | Swin-Base | BEVStereo | 12ep | 2D | 24.46 | config | log | model |

- More model weights will be released later.

Many thanks to these excellent open source projects:

Related Projects:

If this work is helpful for your research, please consider citing:

```bibtex
@article{pan2023renderocc,
  title={RenderOcc: Vision-Centric 3D Occupancy Prediction with 2D Rendering Supervision},
  author={Pan, Mingjie and Liu, Jiaming and Zhang, Renrui and Huang, Peixiang and Li, Xiaoqi and Liu, Li and Zhang, Shanghang},
  journal={arXiv preprint arXiv:2309.09502},
  year={2023}
}
```