This repo includes a collection of models (transformers, attention models, GRUs) and mainly focuses on recent progress in time series forecasting with deep learning. It was originally collected for financial market forecasting and has since been organized into a unified framework for easier use.

For beginners, we recommend reading this paper or the brief introduction we provide to learn about time series forecasting, and then reading the paper for a more comprehensive understanding.

With limited time and energy, we have only tested the repo on a specific dataset. If you run into problems, please open an issue. The repo will be kept updated.

See the dev branch for recent updates, or if the master branch does not work.

- Python 3.7

- matplotlib == 3.1.1

- numpy == 1.21.5

- pandas == 0.25.1

- scikit_learn == 0.21.3

- torch == 1.7.1

Dependencies can be installed using the following command:

`pip install -r requirements.txt`

- Download the data provided by the repo. You can obtain all six benchmarks from Tsinghua Cloud or Google Drive. All the datasets are well pre-processed and ready to use.

- Train the model and predict.

  - Step 1. Set `model`, e.g. "autoformer".

  - Step 2. Set `dataset`, i.e. assign a `Dataset` class to feed data into the model chosen in Step 1.

  - Step 3. Set the essential params, including `data_path`, `file_name`, `seq_len`, `label_len`, `pred_len`, `features`, `T`, `M`, `S`, `MS`. If `data` is provided, the default params we have provided can be loaded from `data_parser`.

  - Other. Sometimes it is necessary to revise `_process_one_batch` and `_get_data` in `exp_main`.
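Step 3 says the defaults can be filled in from `data_parser` when `data` is given. The sketch below assumes a layout like the one in Informer2020 (one of the repos referenced at the end of this README): each dataset name maps to its CSV file, its target column `T`, and `[enc_in, dec_in, c_out]` sizes for the `M`, `S` and `MS` feature modes. The actual keys in this repo may differ; `apply_defaults` is a hypothetical helper, and only `--model`, `--dataset` and `--data` come from the command shown in this README.

```python
import argparse

# Hypothetical defaults table, modeled on Informer2020's data_parser.
# "T" is the target column; M/S/MS give [enc_in, dec_in, c_out] for
# M: multivariate -> multivariate, S: univariate -> univariate,
# MS: multivariate -> univariate.
data_parser = {
    "ETTh1": {"data": "ETTh1.csv", "T": "OT",
              "M": [7, 7, 7], "S": [1, 1, 1], "MS": [7, 7, 1]},
}


def apply_defaults(args: argparse.Namespace) -> argparse.Namespace:
    """Fill data-related args from data_parser when --data is a known key."""
    info = data_parser.get(args.data)
    if info is not None:
        args.data_path = info["data"]
        args.target = info["T"]
        args.enc_in, args.dec_in, args.c_out = info[args.features]
    return args


parser = argparse.ArgumentParser()
parser.add_argument("--model", default="autoformer")
parser.add_argument("--dataset", default="ETTh1")
parser.add_argument("--data", default="ETTh1")
# Assumed flags and defaults, not taken from this repo's main.py:
parser.add_argument("--features", default="M", choices=["M", "S", "MS"])
parser.add_argument("--seq_len", type=int, default=96)
parser.add_argument("--label_len", type=int, default=48)
parser.add_argument("--pred_len", type=int, default=24)

args = apply_defaults(parser.parse_args(["--data", "ETTh1", "--features", "MS"]))
print(args.data_path, args.target, args.enc_in, args.dec_in, args.c_out)
# ETTh1.csv OT 7 7 1
```

Keeping the per-dataset sizes in one table means switching `--features` only changes which triple is read, instead of requiring the user to pass `enc_in`, `dec_in` and `c_out` by hand.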


A simple command with three parameters corresponding to the three steps above:

`python -u main.py --model 'autoformer' --dataset 'ETTh1' --data "ETTh1"`

To run on your own data, provide a `DataSet` class in `data_loader.py` and then add that dataset to `Exp_Basic.py`. Note that the elements returned by the `DataSet` class must conform to the model's input requirements.
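What a `DataSet` must return depends on the model. As a hedged sketch, assuming the Informer/Autoformer-style convention (an encoder window of `seq_len` steps, a decoder window that overlaps its last `label_len` steps and extends `pred_len` steps ahead, plus time-feature marks for both), a custom dataset could look like this. `WindowDataset` and `make_decoder_input` are hypothetical names, not classes from `data_loader.py`; the zero-padded decoder input mirrors what an Informer-style `_process_one_batch` typically builds.

```python
import numpy as np


class WindowDataset:
    """Hypothetical map-style dataset returning Informer-style windows.

    It only needs __len__ and __getitem__, so it can be handed to
    torch.utils.data.DataLoader (or made a torch.utils.data.Dataset subclass).
    """

    def __init__(self, data, marks, seq_len, label_len, pred_len):
        # data: (T, N) series values; marks: (T, F) time features (hour, weekday, ...).
        if len(data) != len(marks):
            raise ValueError("data and marks must have the same length")
        self.data, self.marks = data, marks
        self.seq_len, self.label_len, self.pred_len = seq_len, label_len, pred_len

    def __len__(self):
        # Number of start positions whose encoder + forecast window fits.
        return max(0, len(self.data) - self.seq_len - self.pred_len + 1)

    def __getitem__(self, index):
        s_begin = index
        s_end = s_begin + self.seq_len                    # encoder window
        r_begin = s_end - self.label_len                  # decoder starts label_len steps back
        r_end = r_begin + self.label_len + self.pred_len  # and runs pred_len steps ahead
        return (self.data[s_begin:s_end], self.data[r_begin:r_end],
                self.marks[s_begin:s_end], self.marks[r_begin:r_end])


def make_decoder_input(seq_y, label_len, pred_len):
    """Known label_len steps of seq_y followed by pred_len zero placeholders."""
    zeros = np.zeros((pred_len, seq_y.shape[-1]), dtype=seq_y.dtype)
    return np.concatenate([seq_y[:label_len], zeros], axis=0)


# Usage on a toy series: 10 time steps, 2 variables.
series = np.arange(20, dtype=np.float32).reshape(10, 2)
marks = np.zeros((10, 1), dtype=np.float32)
ds = WindowDataset(series, marks, seq_len=4, label_len=2, pred_len=3)
x, y, x_mark, y_mark = ds[0]
dec_inp = make_decoder_input(y, label_len=2, pred_len=3)
print(len(ds), x.shape, y.shape, dec_inp.shape)  # 4 (4, 2) (5, 2) (5, 2)
```

In Informer-style training loops, the loss is then typically computed only against the last `pred_len` rows of `y`, since the first `label_len` rows are already known to the decoder.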

See Colab Examples for details: we provide Google Colab notebooks to help reproduce and customize our repo, covering experiments (train and test), forecasting, visualization, and custom data.

See the repo for more details on the ETT dataset.

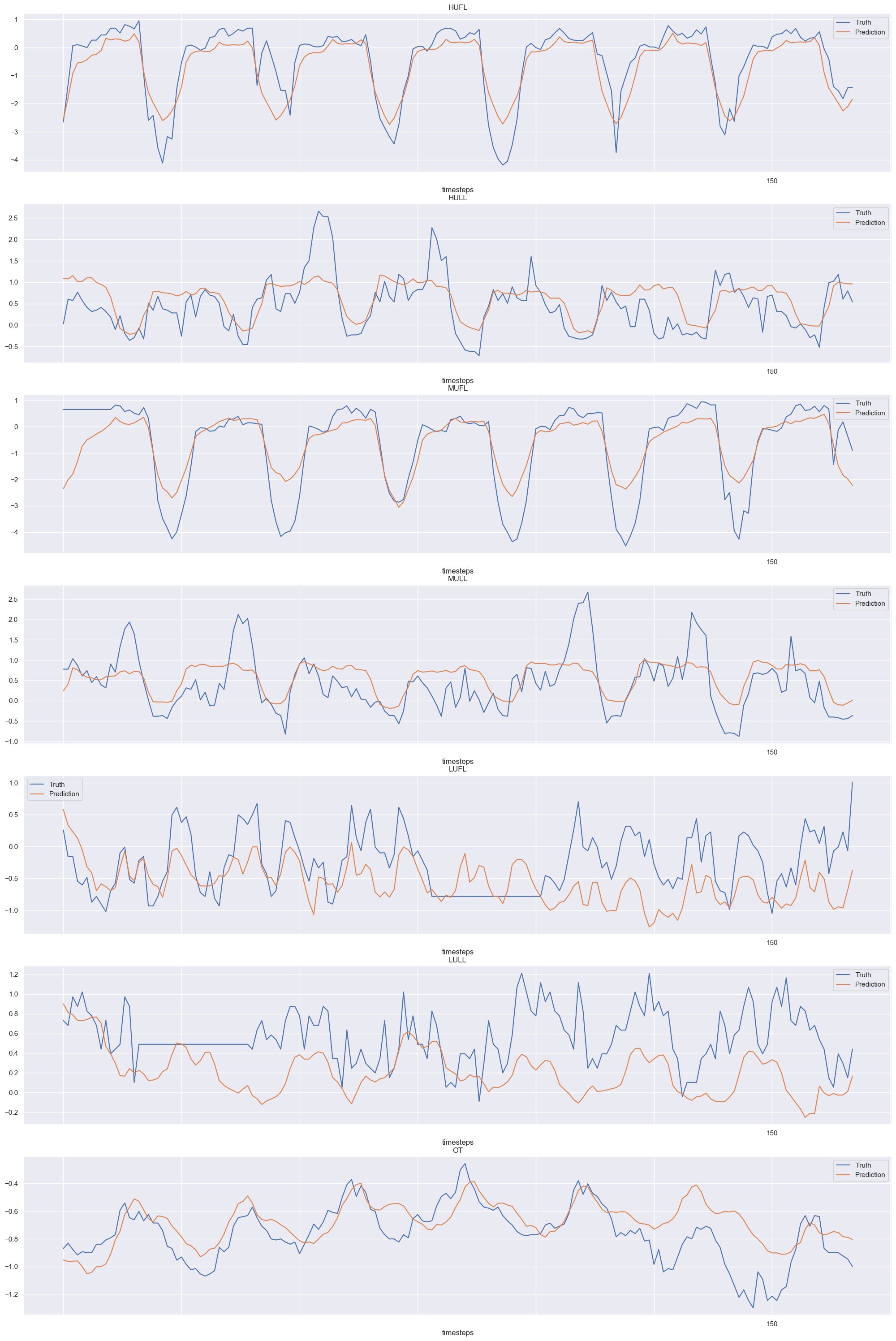

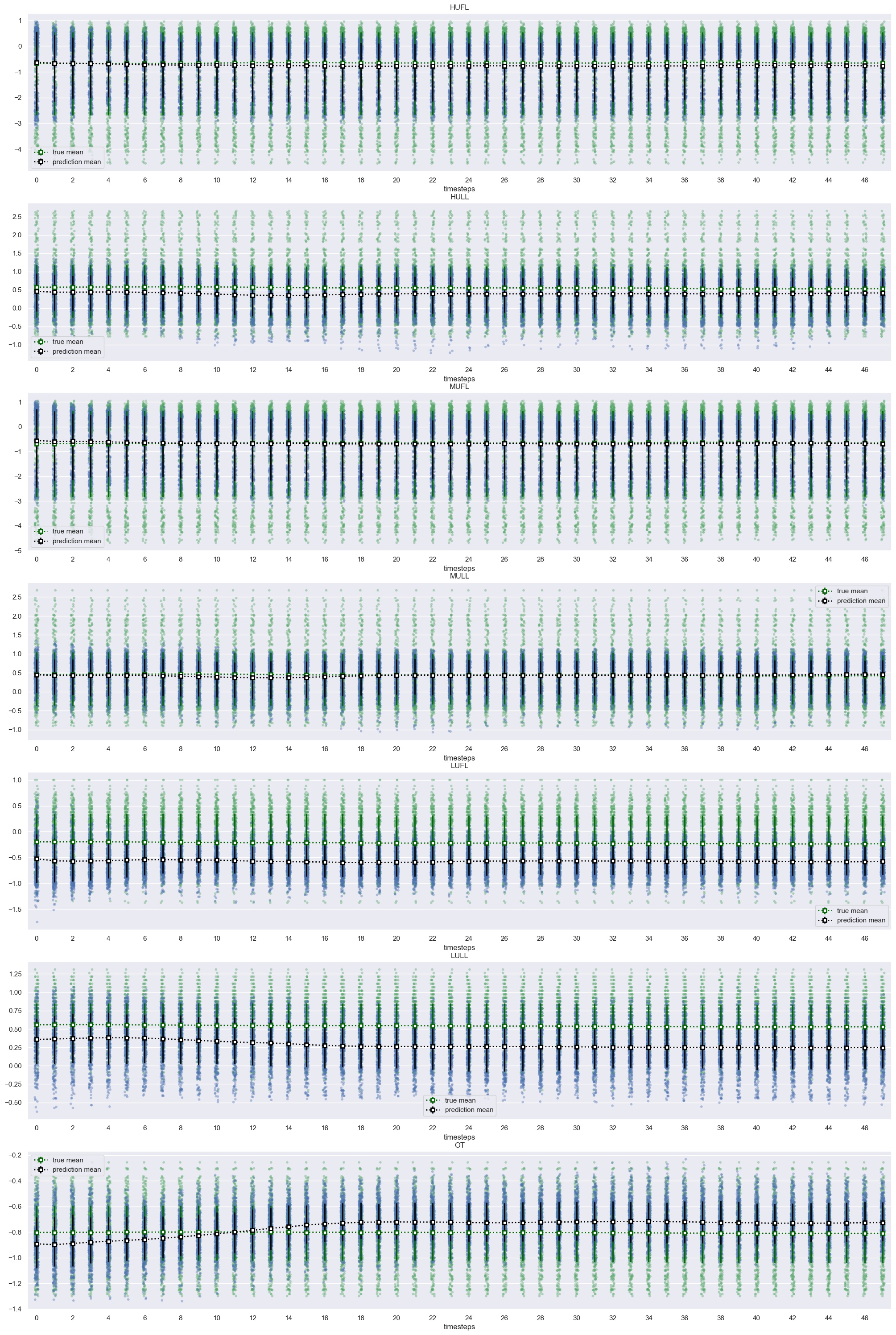

Autoformer result for the task (only 2 epoches for train, test 300+ samples):

Figure 1. Autoformer results (randomly choose a sample).

Figure 2. Autoformer results (values distribution).

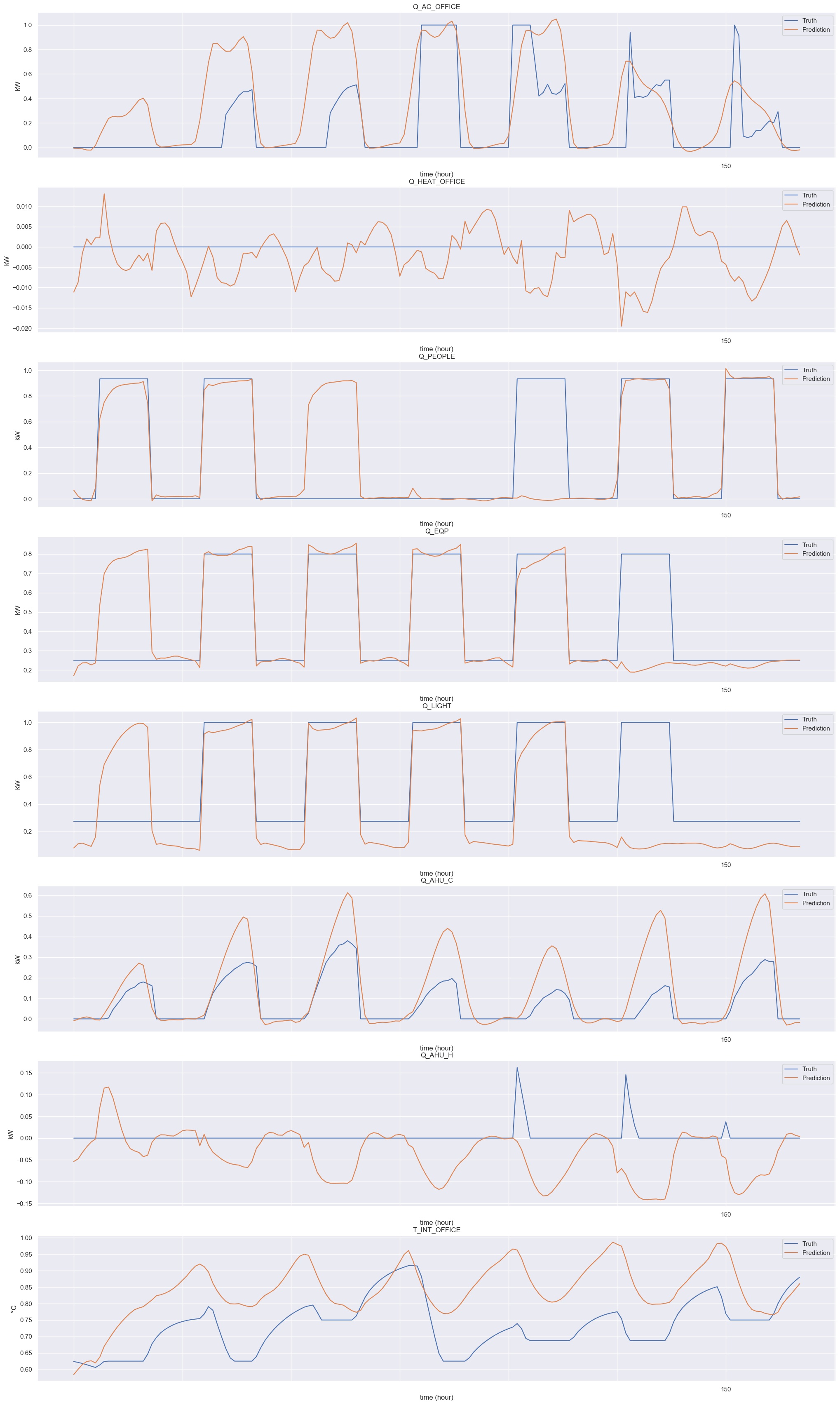

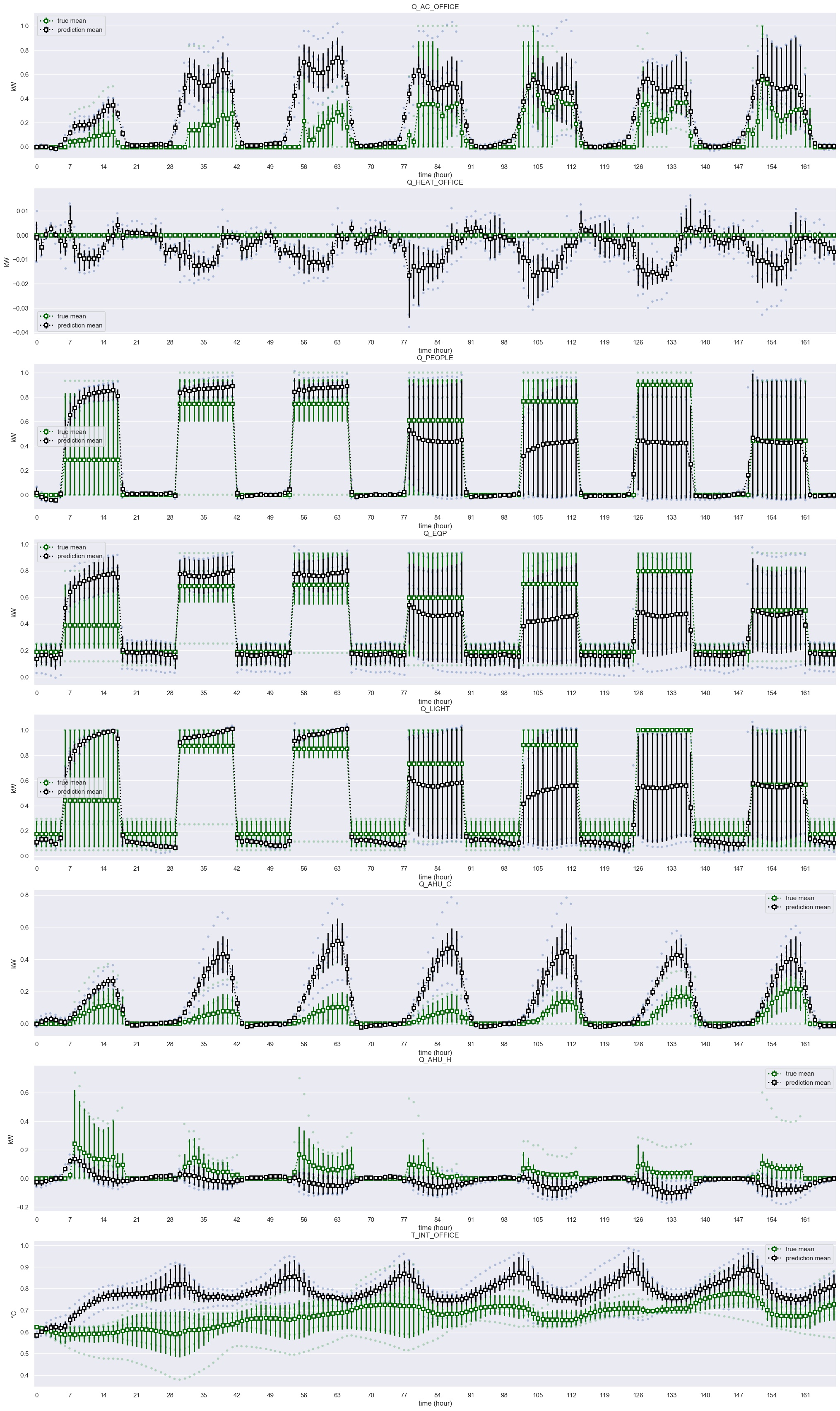

This challenge aims at introducing a new statistical model to predict and analyze energy consumptions and temperatures in a big building using observations stored in the Oze-Energies database. More details can be seen from the repo. A simple model(Seq2Seq) result for the task (only 2 epoches for train, test 2 samples):

Figure 3. GRU results (randomly choose a sample).

Figure 4. GRU results (values distribution).

We will keep adding time series forecasting models to expand this repo.

- Add a probability estimation function.

- Improve the network structure (especially the attention network) for our data scenario.

- Add TensorBoard to record experiments.

We usually encounter three forms of data:

1. Multiple files (usually arising from multiple individuals), which will cause OOM if all of them are loaded at once. Each file contains its own train, val, and test data.

2. A single file containing train, val, and test data.

3. Multiple separate files (usually three), i.e. train, val, and test.

For form 1, we load the files (each with its own train, val, and test sets) one at a time within an epoch until all files have been loaded and fed to the model (see `exp_multi.py`). For forms 2 and 3, we load the train, val, and test sets once before training starts (see `exp_single.py`).
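The point of the form-1 strategy is that the data of only one file needs to be in memory at a time. A minimal sketch of that idea, assuming a generator-based loop; `iterate_files`, `run_epoch`, `load_file`, and `train_step` are hypothetical names, not functions from `exp_multi.py`, and plain lists stand in for real batches.

```python
from typing import Callable, Iterable, Iterator, List, Tuple

# One file's (train, val, test) splits; lists stand in for real arrays/batches.
Split = Tuple[list, list, list]


def iterate_files(paths: Iterable[str],
                  load_file: Callable[[str], Split]) -> Iterator[Tuple[str, Split]]:
    """Lazily load files one at a time instead of loading all of them up front."""
    for path in paths:
        yield path, load_file(path)


def run_epoch(paths: List[str],
              load_file: Callable[[str], Split],
              train_step: Callable[[object], None]) -> int:
    """Feed every file's train split to the model in turn; return the step count."""
    steps = 0
    for _path, (train, _val, _test) in iterate_files(paths, load_file):
        for batch in train:
            train_step(batch)
            steps += 1
        # Drop references so this file's data can be freed before the next load.
        del train, _val, _test
    return steps


# Usage with an in-memory stand-in for the files on disk.
fake_store = {"a.csv": ([1, 2], [3], [4]), "b.csv": ([5, 6, 7], [8], [9])}
seen = []
n = run_epoch(["a.csv", "b.csv"], fake_store.__getitem__, seen.append)
print(n, seen)  # 5 [1, 2, 5, 6, 7]
```

For forms 2 and 3 the loop collapses to a single "file", which is why loading all three splits once before training is enough there.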

If you have any questions, feel free to contact hyliu by email (hyliu_sh@outlook.com) or via GitHub issues.

To complete the project, we referenced the following repos: Informer2020, AdjustAutocorrelation, flow-forecast, pytorch-seq2seq, and Autoformer.