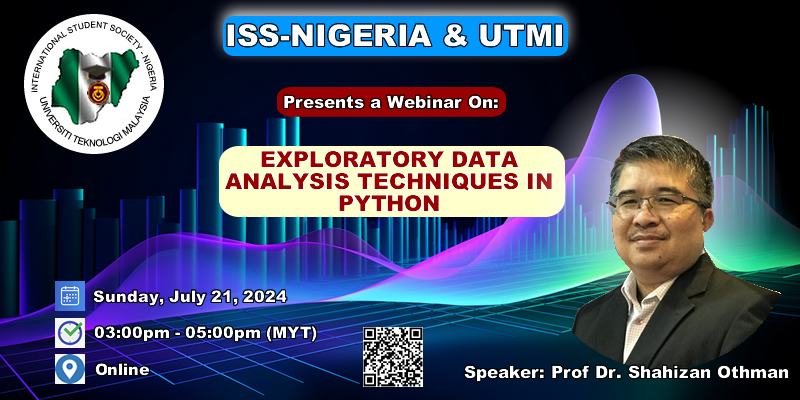

The webinar titled "Exploratory Data Analysis Techniques in Python" is designed to provide participants with a comprehensive understanding of how to effectively analyze data using Python. Scheduled for Sunday, July 21, 2024, from 03:00 pm to 05:00 pm (MYT), this online event is organized by ISS-Nigeria and UTMI. The session will be led by Assoc Prof Dr. Shahizan Othman, a renowned expert in data analysis, who will guide attendees through various techniques and methodologies essential for exploratory data analysis.

Participants will learn practical applications and best practices for using Python tools such as pandas and Jupyter Notebooks to manipulate, clean, and visualize data. This webinar is ideal for both beginners and experienced professionals looking to enhance their data analysis skills. With a focus on hands-on learning, attendees will gain valuable insights that can be applied to real-world data science projects.

Exploratory Data Analysis (EDA) is a crucial step in the data analysis process that involves examining and summarizing a dataset to understand its characteristics, identify patterns, and gain insights into the data. EDA is typically performed before more advanced statistical and machine learning techniques are applied and helps in forming hypotheses, selecting appropriate modeling approaches, and ensuring data quality. Here are some key components and techniques used in EDA:

- Data Summary: Begin by understanding the basic information about the dataset, such as the number of rows and columns, data types, missing values, and summary statistics (mean, median, standard deviation, etc.).

- Data Visualization: Visualizing data through plots and charts can provide a clearer understanding of its distribution and patterns. Common types of visualizations include histograms, box plots, scatter plots, and bar charts.

- Data Distribution: Analyze the distribution of variables to determine whether they follow normal, uniform, or other types of distributions. This can impact the choice of statistical tests and modeling techniques.

- Correlation Analysis: Explore the relationships between variables using correlation matrices, scatter plots, and other correlation measures. This helps identify potential dependencies and multicollinearity.

- Outlier Detection: Identify and handle outliers in the data. Outliers can significantly affect statistical measures and model performance.

- Categorical Variables: Examine the distribution of categorical variables through frequency tables, bar plots, and pie charts. This helps understand the composition of categorical data.

- Data Transformation: Apply transformations (e.g., log transformation, standardization) to make the data more suitable for analysis, especially if it doesn't meet the assumptions of statistical methods.

- Feature Engineering: Create new variables or features that might be more informative or relevant for the analysis. This could involve aggregating, combining, or extracting information from existing variables.

- Missing Data Handling: Deal with missing data, either by imputing missing values or excluding incomplete records. The choice of method depends on the nature of the data and the problem at hand.

- Hypothesis Testing: If relevant, perform hypothesis tests to determine whether observed differences or relationships in the data are statistically significant.

- Encoding and Scaling: Scale numerical variables or encode categorical variables for modeling. Encoding options include one-hot encoding, label encoding, and other techniques.

- Dimensionality Reduction: Use techniques like Principal Component Analysis (PCA) or t-Distributed Stochastic Neighbor Embedding (t-SNE) to reduce the dimensionality of the data while preserving important information.

- Time Series Analysis: For time series data, analyze trends, seasonality, and autocorrelation patterns. Techniques like autocorrelation plots and decomposition can be helpful.

- Geospatial Analysis: When dealing with geographic data, use maps, geospatial plots, and spatial statistics to understand spatial patterns and relationships.

- Text Analysis: If the dataset contains text data, perform text mining and sentiment analysis to extract insights from the textual content.

EDA is an iterative process, and the specific techniques and tools used can vary depending on the nature of the data and the objectives of the analysis. It plays a crucial role in gaining an initial understanding of the data, guiding subsequent analysis, and making informed decisions about the next steps in a data science or analytical project.
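
A few of the components listed above (data summary, data distribution, categorical variables, and correlation analysis) can be sketched with pandas as follows. This is only a minimal illustration: the DataFrame and its column names (`age`, `fare`, `embarked`) are hypothetical values invented for the example, not taken from any real dataset.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data, invented for illustration only.
df = pd.DataFrame({
    "age": [22, 38, 26, 35, np.nan, 54, 2, 27],
    "fare": [7.25, 71.28, 7.93, 53.10, 8.46, 51.86, 21.08, 11.13],
    "embarked": ["S", "C", "S", "S", "Q", "S", "S", "C"],
})

# Data summary: shape, data types, and missing values per column.
n_rows, n_cols = df.shape
dtypes = df.dtypes
missing = df.isna().sum()

# Summary statistics (count, mean, std, quartiles) for numerical columns.
stats = df.describe()

# Data distribution: skewness far from 0 suggests an asymmetric distribution.
skewness = df[["age", "fare"]].skew()

# Categorical variables: frequency table of each category.
embarked_counts = df["embarked"].value_counts()

# Correlation analysis: Pearson correlation between numerical columns
# (rows with a missing value are skipped pairwise).
corr = df[["age", "fare"]].corr()

print(f"{n_rows} rows x {n_cols} columns")
print(missing)
print(stats)
print(skewness)
print(embarked_counts)
print(corr)
```

On a real dataset, the same calls are usually the first cells of a notebook, and their output guides which of the other techniques are worth applying.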

✅️ The main purpose of EDA is to help you look at the data before making any assumptions. In addition to better understanding the patterns in the data or detecting unusual events, it also helps you find interesting relationships between variables.

✅️ Data scientists can use exploratory analysis to ensure that the results they produce are valid and relevant to desired business outcomes and goals.

✅️ EDA also helps stakeholders by verifying that they are asking the right questions.

✅️ EDA can help to answer questions about standard deviations, categorical variables, and confidence intervals.

✅️ Once the exploratory analysis is complete and insights have been drawn, its findings can be used for more complex data analysis or modeling, including machine learning.

👉 Python is a popular programming language for data science and has several libraries and tools that are commonly used for EDA, such as:

- Pandas: a library for data manipulation and analysis.

- NumPy: a library for numerical computing in Python.

- Scikit-learn: a machine learning library that also includes tools for data preprocessing, feature selection, and dimensionality reduction, which are useful for EDA.

- Matplotlib: a plotting library for creating visualizations.

- Seaborn: a library based on matplotlib for creating visualizations with a higher-level interface.

- Plotly: an interactive data visualization library.

In EDA, you might perform tasks such as cleaning the data, handling missing values, transforming variables, generating summary statistics, creating visualizations (e.g. histograms, scatter plots, box plots), and identifying outliers. All of these tasks can be done using the above libraries in Python.
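
As a rough sketch of three of these tasks (handling missing values, identifying outliers, and transforming variables), the example below uses pandas and NumPy. The `fares` values are hypothetical, and the choices of median imputation and the 1.5 × IQR rule are assumptions made for illustration; other strategies may suit a given dataset better.

```python
import numpy as np
import pandas as pd

# Hypothetical values; 250.0 is a deliberately extreme point.
fares = pd.Series(
    [7.25, 8.05, 7.90, 13.00, 26.55, 10.50, 250.0, np.nan], name="fare"
)

# Handling missing values: impute with the median, which is robust to outliers.
filled = fares.fillna(fares.median())

# Identifying outliers: flag points beyond 1.5 * IQR from the quartiles.
q1 = filled.quantile(0.25)
q3 = filled.quantile(0.75)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = filled[(filled < lower) | (filled > upper)]

# Transforming variables: log1p compresses the long right tail.
log_fares = np.log1p(filled)

# Standardization: rescale to zero mean and unit standard deviation.
z_scores = (filled - filled.mean()) / filled.std()

print("Outliers:", outliers.tolist())
print(log_fares.round(2).tolist())
print(z_scores.round(2).tolist())
```

Whether a flagged point should be removed, capped, or kept depends on whether it is a data error or a genuine observation, so the flag is a prompt for inspection rather than a decision.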

- developers.google: Good Data Analysis

- Datascience using Python: Exploratory_Data_Analysis

- Towardsdatascience: What is Exploratory Data Analysis?

- Wikipedia: Exploratory data analysis

- r4ds: Exploratory Data Analysis

- careerfoundry: What Is Exploratory Data Analysis?

- How To Conduct Exploratory Data Analysis in 6 Steps

- A Five-Step Guide for Conducting Exploratory Data Analysis

- I asked ChatGPT to do Exploratory Data Analysis with Visualizations

| Exercise | Objective | Description |
|---|---|---|
| 1. Introduction to Google Colab | Familiarize yourself with Google Colab | Create a new notebook, write a simple Python script to print "Hello, World!", and explore basic features like adding text cells, running code cells, and saving your notebook. |
| 2. Loading Data with Pandas | Learn how to load datasets into pandas DataFrames | Download a sample dataset (e.g., Titanic dataset from Kaggle), upload it to Google Colab, and load it into a pandas DataFrame. Display the first few rows using the head() method. |
| 3. Data Cleaning and Preprocessing | Understand how to clean and preprocess data | Identify and handle missing values in the dataset. Use methods like dropna() to remove missing values or fillna() to fill them with appropriate values. Convert data types if necessary. |
| 4. EDA - Descriptive Statistics | Perform basic descriptive statistics to understand the dataset | Use pandas methods like describe(), mean(), median(), and std() to calculate summary statistics for numerical columns. Create frequency tables for categorical columns. |
| 5. Data Visualization with Matplotlib and Seaborn | Visualize data to uncover patterns and insights | Create various plots such as histograms, box plots, and scatter plots using Matplotlib and Seaborn. For example, visualize the distribution of ages in the Titanic dataset and explore relationships between different features. |
| 6. Correlation Analysis | Analyze correlations between different features | Calculate the correlation matrix using the corr() method in pandas. Visualize the correlation matrix using a heatmap in Seaborn to identify strongly correlated features. |
| 7. Identifying Outliers | Detect and handle outliers in the dataset | Use statistical methods and visualizations like box plots to identify outliers in numerical data. Explore techniques to handle outliers, such as removing them or transforming the data. |
| 8. Exercise: Marketing Dataset | Complete all steps with the Marketing dataset. | |
| 9. Exercise: Titanic Dataset | Complete all steps with the Titanic dataset. | |
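
Exercises 2, 3, 4, and 6 might look roughly like the sketch below. To keep it self-contained, the CSV is an inline string with invented rows and Titanic-style column names; these are not actual Titanic records. In Colab you would instead pass the path of your uploaded file to `pd.read_csv`.

```python
import io

import pandas as pd

# Hypothetical stand-in for an uploaded CSV file (rows invented for illustration).
csv_text = """survived,pclass,sex,age,fare
0,3,male,22,7.25
1,1,female,38,71.28
1,3,female,,7.93
1,1,female,35,53.10
0,3,male,35,8.05
0,1,male,54,
"""

# Exercise 2: load the data and look at the first few rows.
df = pd.read_csv(io.StringIO(csv_text))
print(df.head())

# Exercise 3: fill missing ages with the median, convert a data type,
# and drop rows that still lack a fare.
df["age"] = df["age"].fillna(df["age"].median())
df["pclass"] = df["pclass"].astype("category")
df = df.dropna(subset=["fare"])

# Exercise 4: summary statistics and a frequency table.
print(df.describe())
sex_counts = df["sex"].value_counts()
print(sex_counts)

# Exercise 6: correlation matrix of the numerical columns.
corr = df[["survived", "age", "fare"]].corr()
print(corr.round(2))
```

For the visual part of Exercise 6, the resulting `corr` DataFrame can be passed to Seaborn's `heatmap` function, for example `sns.heatmap(corr, annot=True)`.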

EDA is a vital but time-consuming task in a data project. Here are 10 open-source tools that generate an EDA report in seconds.

| Library | Description | Web | Github |
|---|---|---|---|
| SweetViz | In-depth EDA report in two lines of code. Covers information about missing values, data statistics, etc. Creates a variety of data visualizations. Integrates with Jupyter Notebook. | 🌐 | |
| Pandas-Profiling (now ydata-profiling) | Generates a high-level EDA report of your data in no time. Covers info about missing values, data statistics, correlation, etc. Produces data alerts. Plots data feature interactions. | 🌐 | |
| DataPrep | Supports Pandas and Dask DataFrames. Interactive visualizations. 10x faster than Pandas-based tools. Covers info about missing values, data statistics, correlation, etc. Plots data feature interactions. | 🌐 | |
| AutoViz | Supports CSV, TXT, and JSON. Interactive Bokeh charts. Covers info about missing values, data statistics, correlation, etc. Presents data cleaning suggestions. | 🌐 | |
| D-Tale | Runs common Pandas operations with no code. Exports code of analysis. Covers info about missing values, data statistics, correlation, etc. Highlights duplicates, outliers, etc. Integrates with Jupyter Notebook. | 🌐 | |
| dabl | Primarily provides visualizations. Covers a wide range of plots: scatter pair plots, histograms, and target distribution. | 🌐 | |
| QuickDA | Gets an overview report of the dataset. Covers info about missing values, data statistics, correlation, etc. Produces data alerts. Plots data feature interactions. | 🌐 | |
| Datatile | Extends Pandas describe(). Provides column stats: column type count, missing, column datatype. Mostly statistical information. | 🌐 | |
| Lux | Provides visualization recommendations. Supports EDA on a subset of columns. Integrates with Jupyter Notebook. Exports code of analysis. | 🌐 | |
| ExploriPy | Performs statistical testing. Column type-wise distribution: continuous, categorical. Covers info about missing values, data statistics, correlation, etc. | 🌐 | |
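
To give a feel for the kind of per-column overview these tools automate, here is a minimal sketch in plain pandas. It does not use or reproduce the API of any library in the table; `column_overview` is a hypothetical helper written for this example, and the data is invented.

```python
import numpy as np
import pandas as pd


def column_overview(df: pd.DataFrame) -> pd.DataFrame:
    """Hypothetical helper: one row of basic information per column."""
    return pd.DataFrame({
        "dtype": df.dtypes.astype(str),
        "missing": df.isna().sum(),
        "missing_pct": (df.isna().mean() * 100).round(1),
        "unique": df.nunique(),  # distinct non-missing values
    })


# Invented toy data for illustration.
df = pd.DataFrame({
    "age": [22, 38, np.nan, 35],
    "sex": ["male", "female", "female", None],
})

overview = column_overview(df)
n_duplicates = int(df.duplicated().sum())  # fully duplicated rows

print(overview)
print("Duplicate rows:", n_duplicates)
```

The dedicated libraries go well beyond this, adding visualizations, alerts, and interactive reports, so a helper like this is mainly useful for understanding what such reports contain.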

Please create an Issue for any improvements, suggestions or errors in the content.

You can also contact me on LinkedIn for any other queries or feedback.