NOW PART OF rsparse

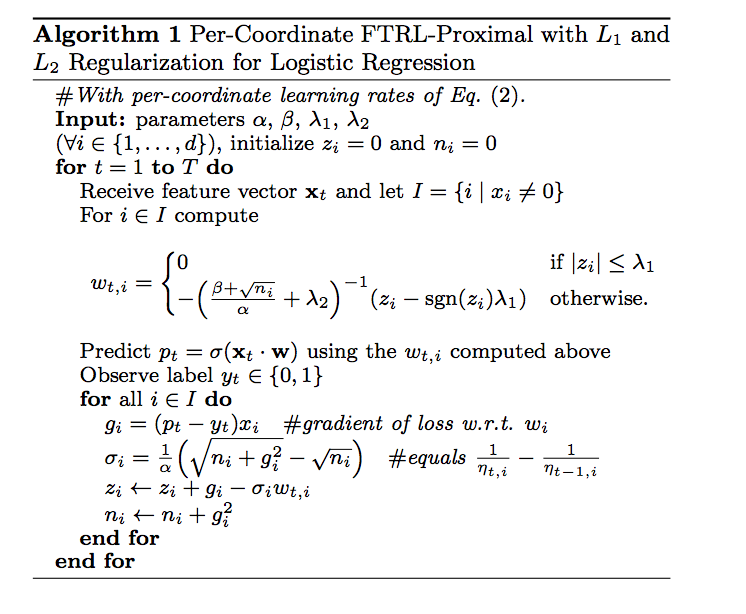

R package that implements the Follow the Proximally Regularized Leader (FTRL-Proximal) algorithm. It can solve very large problems with online learning based on stochastic gradient descent. See "Ad Click Prediction: a View from the Trenches" for an example application.
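The per-coordinate update behind FTRL-Proximal can be sketched in a few lines of base R. This is a minimal illustration of Algorithm 1 from "Ad Click Prediction: a View from the Trenches" (logistic loss, L1 and L2 penalties `lambda1` and `lambda2`), not the package's Rcpp implementation. The function names are hypothetical, and no mapping between `lambda1`/`lambda2` and the package's `lambda`/`l1_ratio` arguments is assumed.

```r
# Minimal per-coordinate FTRL-Proximal for logistic regression (base R only).
# Dense rows are used for readability, but only the non-zero features of each
# row are read or updated, which is what keeps the method cheap on sparse data.

sigmoid <- function(t) 1 / (1 + exp(-t))

# Weights are computed lazily from the accumulated state (z, n);
# coordinates with |z| <= lambda1 are exactly zero because of the L1 term.
ftrl_weights <- function(z, n, alpha, beta, lambda1, lambda2) {
  w <- -(z - sign(z) * lambda1) / ((beta + sqrt(n)) / alpha + lambda2)
  w[abs(z) <= lambda1] <- 0
  w
}

# One online step on a single example x (numeric vector) with label y in {0, 1}.
ftrl_step <- function(state, x, y, alpha, beta, lambda1, lambda2) {
  nz <- which(x != 0)
  w <- ftrl_weights(state$z[nz], state$n[nz], alpha, beta, lambda1, lambda2)
  p <- sigmoid(sum(x[nz] * w))
  g <- (p - y) * x[nz]                      # gradient of the log loss
  sigma <- (sqrt(state$n[nz] + g^2) - sqrt(state$n[nz])) / alpha
  state$z[nz] <- state$z[nz] + g - sigma * w
  state$n[nz] <- state$n[nz] + g^2          # running sum of squared gradients
  state
}

# Stream over the rows of X, optionally for several passes (epochs).
fit_ftrl <- function(X, y, alpha = 0.1, beta = 1, lambda1 = 0, lambda2 = 0,
                     epochs = 1) {
  state <- list(z = numeric(ncol(X)), n = numeric(ncol(X)))
  for (e in seq_len(epochs)) {
    for (r in seq_len(nrow(X))) {
      state <- ftrl_step(state, X[r, ], y[r], alpha, beta, lambda1, lambda2)
    }
  }
  ftrl_weights(state$z, state$n, alpha, beta, lambda1, lambda2)
}

# Usage on toy data where the label depends only on the first two features.
set.seed(42)
X <- matrix(rnorm(2000), ncol = 4)
y <- as.numeric(X[, 1] - X[, 2] > 0)
w <- fit_ftrl(X, y, alpha = 0.5, lambda1 = 0.1, epochs = 2)
accuracy <- mean((sigmoid(X %*% w) >= 0.5) == y)
```

The state per feature is just two numbers (`z` and `n`), and each step touches only the features present in the current row. This is why the method scales to very wide sparse inputs.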

- Online learning: the model can easily be updated incrementally as new data arrives

- Fast (I would say very fast): written in Rcpp

- Parallel, asynchronous: benefits from multicore systems (if your compiler supports OpenMP), with Hogwild!-style updates under the hood

- Only logistic regression is implemented at the moment

- The core input matrix format is CSR, `Matrix::RsparseMatrix`. However, the common R `Matrix::CsparseMatrix` (aka `dgCMatrix`) is converted automatically

TODO:

- gaussian and poisson families

- vignette

- improve test coverage (although the package has been battle-tested in the Kaggle Outbrain competition, where it contributed to our 13th place)
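To illustrate the CSR input format mentioned in the feature list, the snippet below builds a small `dgCMatrix`, converts it explicitly with `as(m, "RsparseMatrix")`, and reads one row directly from the CSR slots. It only uses the Matrix package; `csr_row()` is a hypothetical helper added for illustration and is not part of FTRL.

```r
library(Matrix)

# A 3 x 4 matrix created in the default compressed-column form (dgCMatrix)
m <- sparseMatrix(i = c(1, 1, 2, 3), j = c(1, 3, 2, 4),
                  x = c(10, 20, 30, 40), dims = c(3, 4))

# Explicit conversion to compressed-row (CSR) form, class dgRMatrix
X <- as(m, "RsparseMatrix")

# CSR slots: p = row pointers, j = 0-based column indices, x = values.
# Row r occupies positions p[r] + 1, ..., p[r + 1] of j and x.
X@p  # 0 2 3 4
X@j  # 0 2 1 3
X@x  # 10 20 30 40

# Hypothetical helper: read one row straight from the CSR slots
# (returns 1-based column indices and the matching values).
csr_row <- function(X, r) {
  idx <- seq.int(X@p[r] + 1L, length.out = X@p[r + 1L] - X@p[r])
  list(cols = X@j[idx] + 1L, vals = X@x[idx])
}

csr_row(X, 1)  # cols 1 3, vals 10 20
```

Each row's non-zeros are stored contiguously, so reading a row costs time proportional to its number of non-zeros. That is the access pattern a row-by-row online learner needs.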

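```r
library(Matrix)
library(FTRL)

# Simulated data: N_SMPL rows, N_FEAT features, on average 30 entries per row
N_SMPL = 5e3
N_FEAT = 1e3
NNZ = N_SMPL * 30

set.seed(1)
i = sample(N_SMPL, NNZ, TRUE)
j = sample(N_FEAT, NNZ, TRUE)
y = sample(c(0, 1), N_SMPL, TRUE)
x = sample(c(-1, 1), NNZ, TRUE)

# Plant a signal: entries of positive rows in odd columns 1, 3, ..., 99 are set to +1
odd = seq(1, 99, 2)
x[i %in% which(y == 1) & j %in% odd] = 1

# Build the matrix in triplet form, then convert it to CSR (RsparseMatrix).
# Older Matrix versions used giveCsparse = FALSE instead of repr = "T".
m = sparseMatrix(i = i, j = j, x = x, dims = c(N_SMPL, N_FEAT), repr = "T")
X = as(m, "RsparseMatrix")

ftrl = FTRL$new(alpha = 0.01, beta = 0.1, lambda = 20, l1_ratio = 1, dropout = 0)

# First pass over the data; accuracy = share of correctly classified rows
ftrl$partial_fit(X, y, nthread = 1)
accuracy_1 = mean((ftrl$predict(X, nthread = 1) >= 0.5) == y)
w = ftrl$coef()

# A second partial_fit() call continues training from the current state
ftrl$partial_fit(X, y, nthread = 1)
accuracy_2 = mean((ftrl$predict(X, nthread = 1) >= 0.5) == y)

accuracy_2 > accuracy_1
```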
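Because repeated `partial_fit()` calls keep updating the same model, as the example above relies on, data can also be fed in chunks as it arrives. The sketch below assumes the FTRL package is installed and uses only the calls shown in the example (`FTRL$new`, `partial_fit`, `predict`). The toy data, the chunk size of 100, and the variable names are hypothetical choices for illustration.

```r
library(Matrix)
library(FTRL)

# Hypothetical toy data: 1000 rows, 100 sparse features,
# with the label driven by the first 10 columns.
set.seed(1)
n_rows <- 1000
X <- as(rsparsematrix(n_rows, 100, density = 0.05), "RsparseMatrix")
y <- as.numeric(rowSums(X[, 1:10]) > 0)

ftrl <- FTRL$new(alpha = 0.01, beta = 0.1, lambda = 20, l1_ratio = 1, dropout = 0)

# Feed the rows in chunks of 100, as if they arrived from a stream;
# each chunk is converted to CSR before being passed to partial_fit().
chunks <- split(seq_len(n_rows), ceiling(seq_len(n_rows) / 100))
for (idx in chunks) {
  X_chunk <- as(X[idx, , drop = FALSE], "RsparseMatrix")
  ftrl$partial_fit(X_chunk, y[idx], nthread = 1)
}

# Predicted probabilities for all rows after the streamed updates
p_hat <- ftrl$predict(X, nthread = 1)
```

Only the current chunk needs to be in memory during each update, which is the practical benefit of online learning on data that does not fit in RAM.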